Introduction

The rapid evolution of artificial intelligence is reshaping the landscape of data management, making AI data observability more critical than ever. Organizations are increasingly recognizing the need for robust strategies that enhance visibility and ensure data integrity, ultimately driving operational efficiency. However, as data volumes surge and complexities multiply, how can businesses effectively navigate the challenges of maintaining reliable data observability?

This article delves into ten key insights that illuminate the path toward enhanced efficiency through strategic data observability practices. By understanding these insights, organizations can optimize their data management processes and achieve greater operational success. Are you ready to explore how these practices can transform your approach to data management?

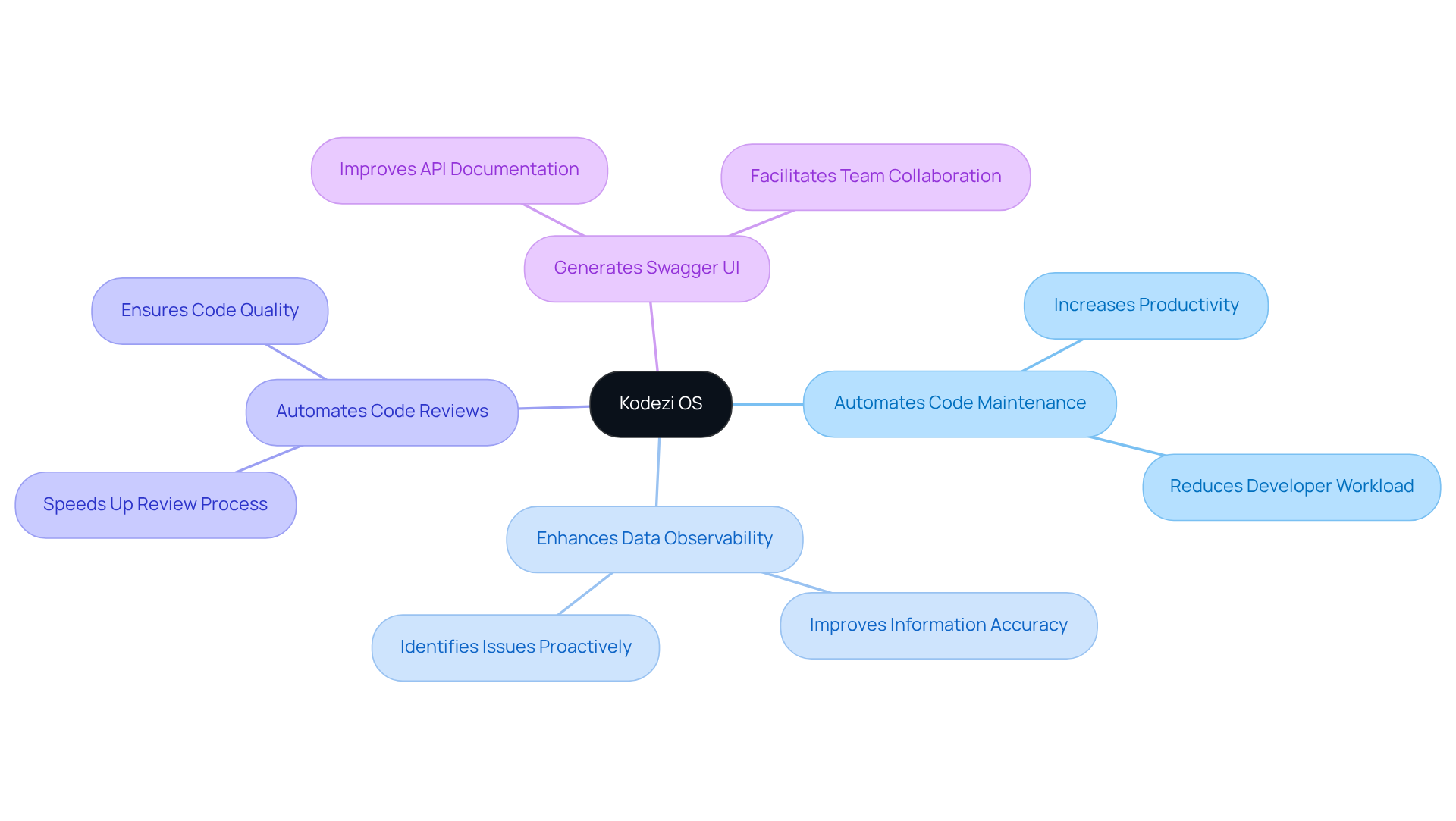

Kodezi | Professional OpenAPI Specification Generator - AI Dev-Tool: Automate Code Maintenance for Enhanced Data Observability

Coding challenges can be daunting for developers, often leading to inefficiencies and frustration. Kodezi OS is a professional OpenAPI specification generator that automates code upkeep, which in turn improves data visibility. By continuously monitoring codebases, it independently identifies and addresses problems, helping keep data accurate and reliable.

This level of automation alleviates the workload on engineering teams, letting them prioritize innovation over routine maintenance tasks. Kodezi OS incorporates AI data observability into the development lifecycle, encouraging a proactive stance on maintaining integrity and improving overall software quality.

Furthermore, Kodezi automates code reviews and synchronizes API documentation. It even generates Swagger UI sites, empowering teams to focus on what truly matters: innovation. With Kodezi, you can enhance productivity and elevate code quality, making your development process smoother and more efficient.

Are you ready to transform your coding practices? Explore the tools available on the Kodezi platform and see how they can revolutionize your workflow.

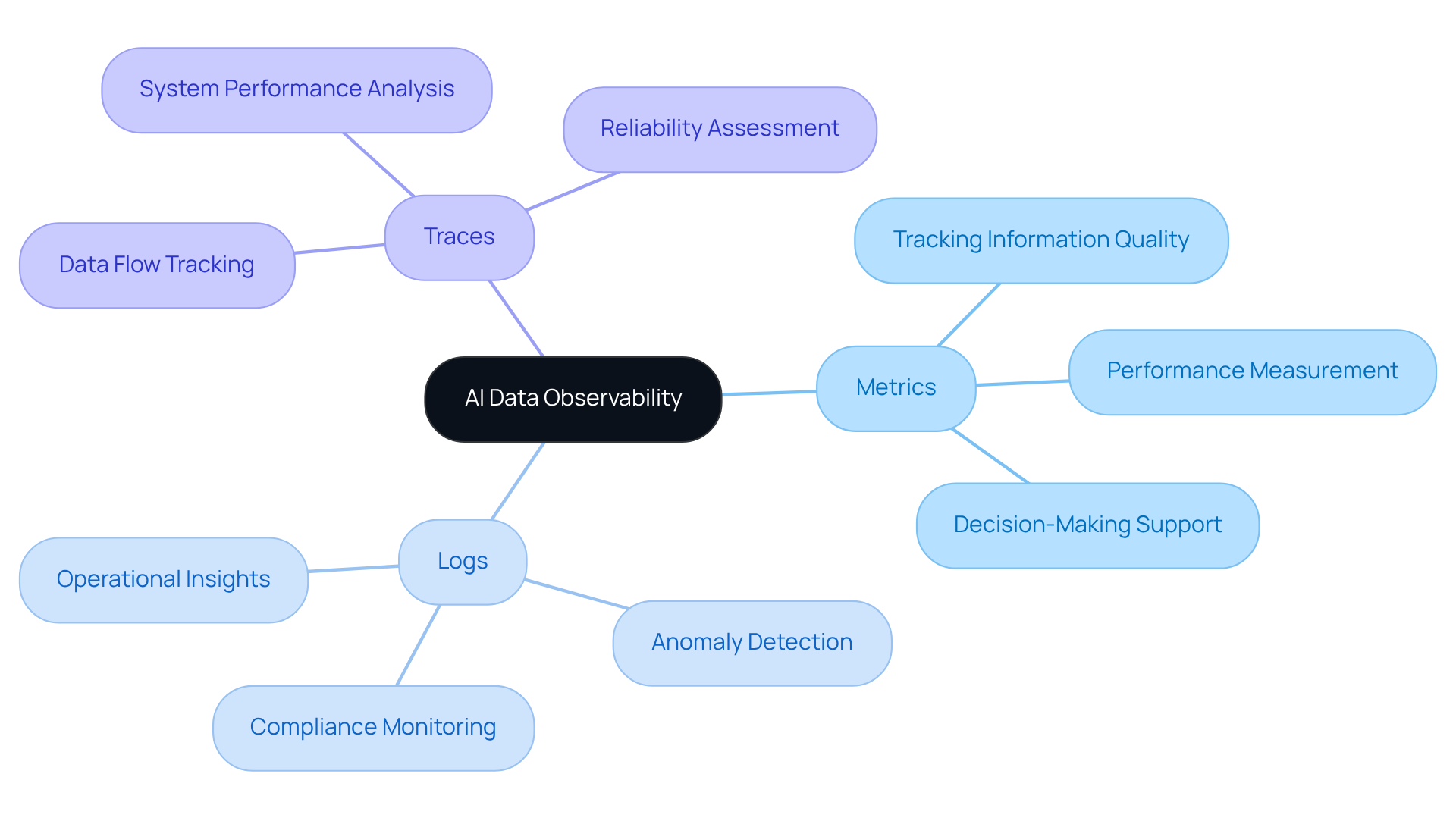

Understanding Data Observability: Key Components and Definitions

AI data observability is the practice of tracking and understanding the state of data as it flows through systems. It relies on metrics, logs, and traces, which together provide insight into data quality, performance, and reliability.

Furthermore, by implementing robust methods of AI data observability, organizations can effectively identify anomalies and ensure compliance. This not only upholds high standards of information integrity but also leads to improved decision-making and operational efficiency.

Have you considered how these practices could enhance your organization’s performance? By prioritizing information visibility, you can transform your data management approach and drive better outcomes.

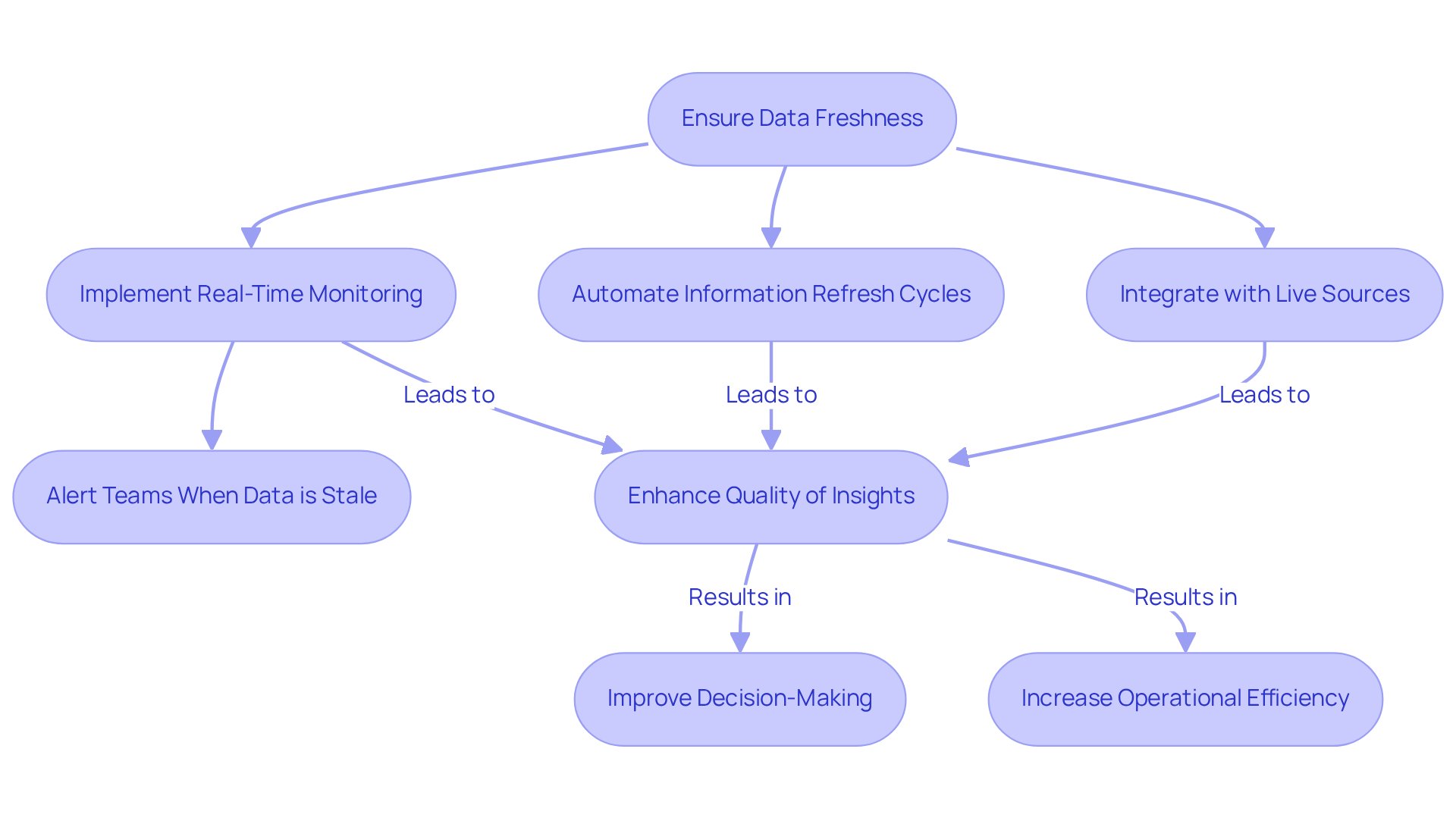

Data Freshness: Ensuring Timely and Relevant Information

Data freshness is vital for ensuring that the information used in analytics and decision-making stays current and relevant. How can organizations achieve this? By implementing real-time information monitoring systems that continuously track content age and alert teams when details become stale. Techniques like automated information refresh cycles and integration with live sources are essential for maintaining freshness, significantly enhancing the quality of insights derived from the information.
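As a rough illustration of tracking content age, the sketch below flags tables whose last update is older than an allowed threshold. The table names, timestamps, and the 24-hour threshold are hypothetical; a real monitor would read last-update times from the warehouse or pipeline metadata.

```python
from datetime import datetime, timedelta, timezone


def find_stale_tables(last_updated, max_age, now=None):
    """Return {table: age} for every table whose age exceeds max_age."""
    now = now or datetime.now(timezone.utc)
    return {
        name: now - ts
        for name, ts in last_updated.items()
        if now - ts > max_age
    }


# Hypothetical tables and timestamps, fixed so the example is reproducible.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
last_updated = {
    "orders": now - timedelta(minutes=5),
    "customers": now - timedelta(hours=30),
}

stale = find_stale_tables(last_updated, max_age=timedelta(hours=24), now=now)
for table, age in stale.items():
    print(f"ALERT: {table} is stale (last updated {age} ago)")
```

In practice the threshold would likely differ per table, since a streaming feed and a monthly reference table go stale on very different timescales.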

For instance, companies utilizing real-time analytics can anticipate a 23% rise in profitability, emphasizing the concrete advantages of prompt information (McKinsey). Furthermore, by 2025, almost 95% of enterprises are expected to invest in real-time information analytics (Gartner), highlighting the increasing acknowledgment of its significance. A major airline, for example, decreased delays by 25% through real-time information analysis, showcasing the practical benefits of ensuring information freshness.

By embracing these practices, companies can guarantee that their information remains a valuable resource. This not only promotes informed decision-making but also enhances operational efficiency. Are you ready to ensure your data is always fresh and relevant?

Data Lineage: Tracking the Journey of Your Data

Data lineage is the systematic tracking of data from its origin to its final destination, including every transformation, aggregation, and use across systems. By establishing clear lineage, organizations can adhere to governance policies, simplify audits, and swiftly identify the root causes of quality issues. Automated lineage tracking tools enhance AI data observability by providing visual representations of data flows and transformations, which makes it easier for teams to manage their data effectively.
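One simple way to picture lineage is as a directed graph in which each edge points from a source dataset to the dataset derived from it. The sketch below is a minimal, hypothetical model (the `LineageGraph` class and the dataset names are illustrative, not the API of any particular lineage tool) showing how the upstream sources of a report could be traced for root-cause analysis.

```python
from collections import defaultdict


class LineageGraph:
    """Minimal lineage model: an edge means 'target is derived from source'."""

    def __init__(self):
        self._parents = defaultdict(set)

    def add_edge(self, source, target):
        self._parents[target].add(source)

    def upstream(self, dataset):
        """Return every dataset that the given dataset depends on, directly or not."""
        seen = set()
        stack = [dataset]
        while stack:
            node = stack.pop()
            for parent in self._parents.get(node, ()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen


# Hypothetical pipeline: two raw tables are cleaned, then joined into a report.
graph = LineageGraph()
graph.add_edge("raw_orders", "clean_orders")
graph.add_edge("raw_customers", "clean_customers")
graph.add_edge("clean_orders", "revenue_report")
graph.add_edge("clean_customers", "revenue_report")

print(sorted(graph.upstream("revenue_report")))
# ['clean_customers', 'clean_orders', 'raw_customers', 'raw_orders']
```

If a quality issue appears in `revenue_report`, the upstream set is the list of candidates to inspect.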

Why is lineage tracking so vital for compliance and audits? Organizations that implement robust lineage practices can clearly demonstrate how they handle data, and they often see improved compliance results. For instance, companies that have adopted comprehensive lineage solutions report a notable decrease in audit inconsistencies, because these tools offer detailed insight into transformations and usage. A notable example is a financial organization that used lineage tools to enhance its audit processes, achieving a 30% reduction in compliance-related issues over a year.

As industry specialists point out, effective monitoring of information flow is essential for maintaining integrity and compliance. Hilary Mason, a leading data scientist, emphasizes that "the core advantage of information is that it reveals something about the world that you were previously unaware of." This perspective highlights the critical role that information traceability plays in fostering a culture of responsibility and transparency within organizations.

Furthermore, with the staggering statistic that 5 exabytes of information are created every two days, the need for strong information tracking practices becomes even more evident. Organizations must invest in tools and practices that enhance information tracking to effectively manage this vast amount of data.

In summary, the impact of information flow on compliance and audits is profound. By investing in tools and practices that improve lineage tracking, organizations can achieve AI data observability while meeting regulatory requirements and enhancing their overall governance framework. This leads to better decision-making and operational efficiency.

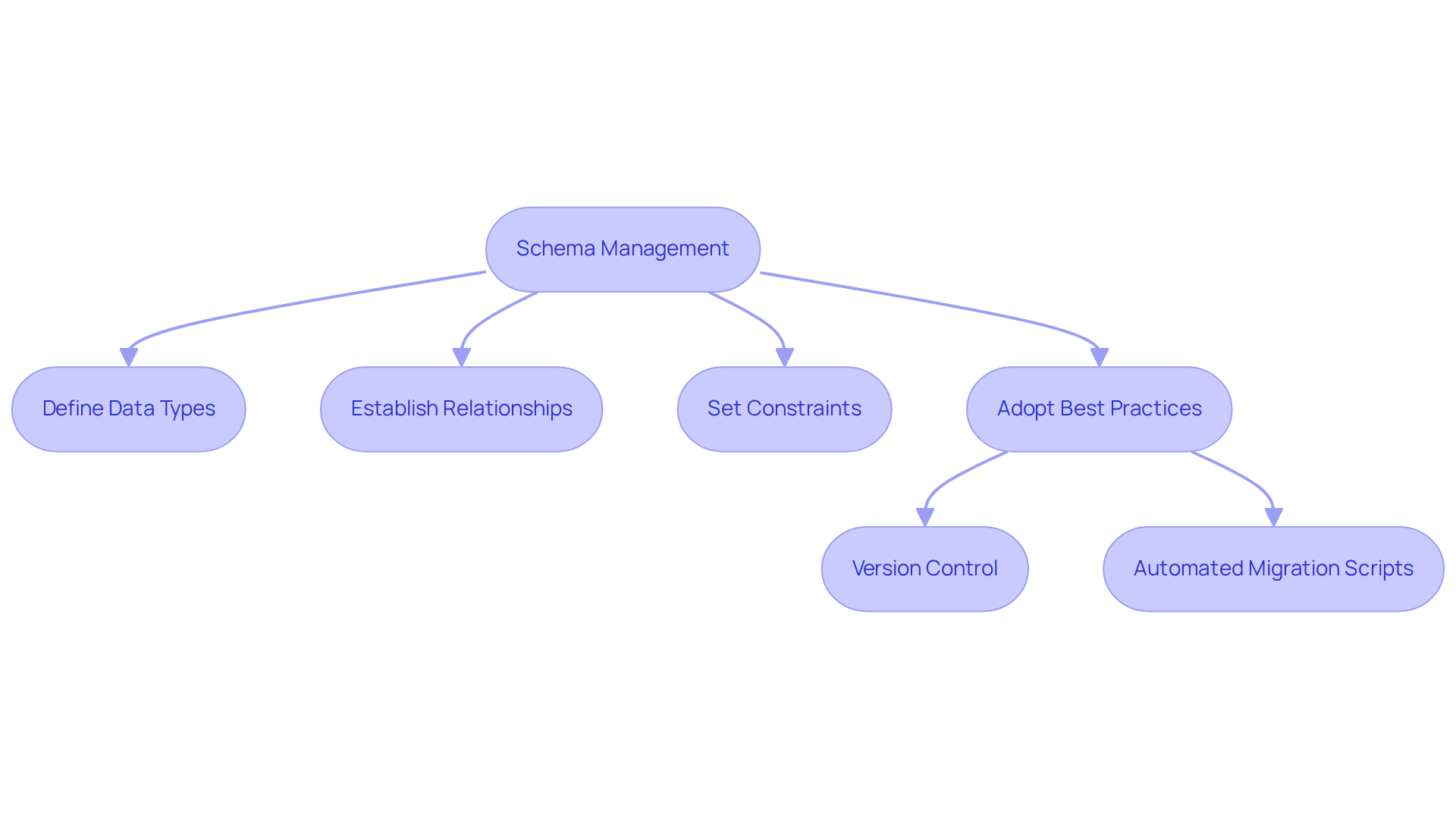

Schema Management: Maintaining Data Integrity and Consistency

Schema management plays a vital role in overseeing the organization of data within databases, ensuring both consistency and integrity. Have you ever considered how crucial it is to define data types, establish relationships, and set constraints that dictate how information is stored and accessed? As Veda Bawo, director of information governance, points out, "You can have all of the fancy tools, but if [your] information quality is not good, you're nowhere."

By adopting best practices for schema evolution, such as version control and automated migration scripts, organizations can adapt to changing data requirements while safeguarding data quality. For instance, tools such as dbForge Edge use automated migration scripts to streamline schema updates. This approach minimizes the risk of errors and enhances operational efficiency.
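To make the idea of versioned, automated migrations concrete, here is a minimal sketch using Python's built-in `sqlite3` module. It stores the schema version in SQLite's `user_version` pragma and applies only the migrations that have not yet run. The `customers` table and the migration list are hypothetical, and this is not how dbForge Edge or any specific tool works internally.

```python
import sqlite3

# Ordered, append-only list of migrations; position + 1 is the schema version.
MIGRATIONS = [
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)",
    "ALTER TABLE customers ADD COLUMN email TEXT",
]


def migrate(conn):
    """Apply any migrations newer than the stored version; return the new version."""
    current = conn.execute("PRAGMA user_version").fetchone()[0]
    for version, statement in enumerate(MIGRATIONS, start=1):
        if version > current:
            conn.execute(statement)
            # PRAGMA values cannot be bound as parameters; version is an int we control.
            conn.execute(f"PRAGMA user_version = {version}")
    conn.commit()
    return conn.execute("PRAGMA user_version").fetchone()[0]


conn = sqlite3.connect(":memory:")
print(migrate(conn))  # 2
print(migrate(conn))  # 2 (nothing left to apply on the second run)

columns = [row[1] for row in conn.execute("PRAGMA table_info(customers)")]
print(columns)  # ['id', 'name', 'email']
```

Keeping the migration list in version control gives every schema change a reviewable history, and running `migrate` repeatedly is safe because already-applied steps are skipped.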

Furthermore, efficient schema management not only boosts data visibility but also empowers teams to uphold high standards of integrity. This ultimately fosters improved decision-making and operational success. Are you ready to elevate your data management practices?

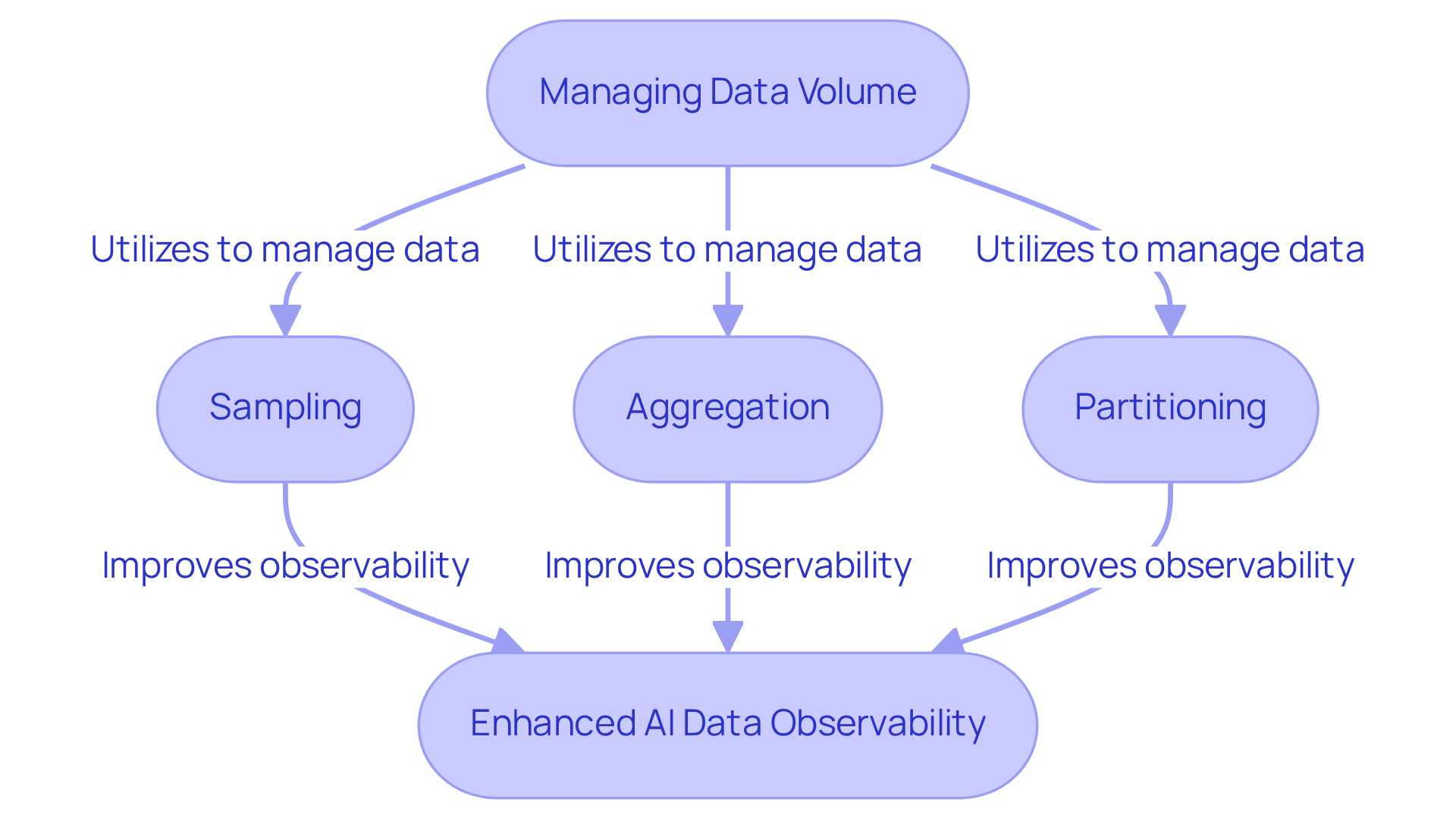

Data Volume: Managing Scale for Effective Observability

Managing information volume is a significant challenge for organizations today. As the amount of data being processed, stored, and analyzed continues to grow, it’s essential to implement scalable monitoring solutions to ensure AI data observability. These solutions can handle large datasets without sacrificing performance.

How can organizations effectively manage this increasing volume? Methods like sampling, aggregation, and partitioning play a crucial role. By utilizing these techniques, monitoring tools can remain responsive and provide timely insights, ensuring that data management is efficient and effective.
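The sketch below illustrates two of these techniques under simple assumptions: reservoir sampling (Algorithm R) keeps a fixed-size uniform sample from a stream of unknown length, and a per-partition aggregation summarizes rows by a partition key instead of retaining them all. The row layout (a `day` key and a numeric `value` field) is hypothetical.

```python
import random
from collections import defaultdict


def reservoir_sample(stream, k, seed=None):
    """Keep a uniform random sample of at most k items from a stream (Algorithm R)."""
    rng = random.Random(seed)
    sample = []
    for i, item in enumerate(stream):
        if i < k:
            sample.append(item)
        else:
            j = rng.randint(0, i)  # inclusive on both ends
            if j < k:
                sample[j] = item
    return sample


def aggregate_by_partition(rows, key):
    """Summarize rows per partition key; assumes each row has a numeric 'value'."""
    stats = defaultdict(lambda: {"count": 0, "total": 0.0})
    for row in rows:
        bucket = stats[row[key]]
        bucket["count"] += 1
        bucket["total"] += row["value"]
    return dict(stats)


# Hypothetical rows spread over three daily partitions.
rows = [{"day": f"2025-01-0{1 + i % 3}", "value": float(i)} for i in range(9)]

sample = reservoir_sample(rows, k=3, seed=42)
summary = aggregate_by_partition(rows, key="day")
print(len(sample))            # 3
print(summary["2025-01-01"])  # {'count': 3, 'total': 9.0}
```

Both functions make a single pass and use memory proportional to the sample size or the number of partitions, not the number of rows, which is what keeps monitoring responsive as volume grows.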

Furthermore, proactively addressing volume challenges enhances overall AI data observability capabilities. This means organizations can not only keep up with data demands but also improve their decision-making processes.

Are you ready to elevate your data management strategies? Explore the tools available that can help you tackle these challenges head-on.

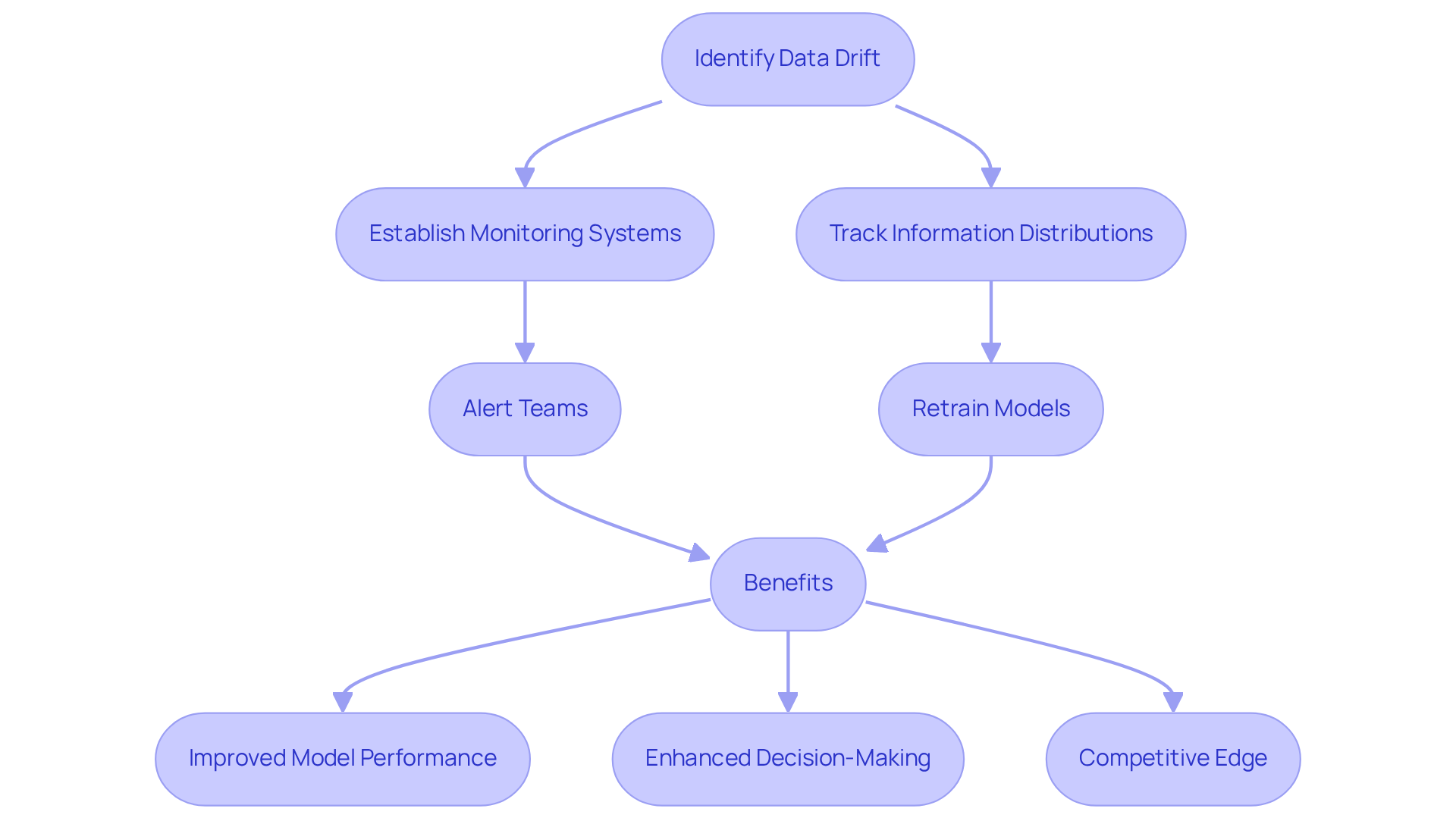

Data Drift: Identifying and Mitigating Risks in AI Models

Data drift, a change in the statistical properties of data over time, can significantly impact the performance of AI models. Have you ever wondered how changes in data over time affect your systems? To tackle this challenge, companies need ongoing monitoring, as part of AI data observability, that tracks data distributions and alerts teams to substantial changes.

Furthermore, techniques like retraining models on updated data and employing adaptive algorithms can help maintain model accuracy in the face of drift. By proactively managing data drift, organizations can strengthen their AI data observability and keep their AI systems reliable and effective.
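One common way to quantify a change in a feature's distribution is the Population Stability Index (PSI), which compares binned proportions between a baseline sample and a recent one. The sketch below is a minimal stdlib-only version; the bin count, the small floor used to avoid taking the logarithm of zero, and the 0.25 alert threshold (a widely used rule of thumb, not a standard) are all assumptions.

```python
import math


def psi(expected, actual, bins=10):
    """Population Stability Index between a baseline and a recent sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical feature values: the recent sample is shifted upward by 0.5.
baseline = [i / 100 for i in range(100)]
shifted = [0.5 + i / 100 for i in range(100)]

print(psi(baseline, baseline))  # 0.0
if psi(baseline, shifted) > 0.25:  # rule-of-thumb threshold, an assumption
    print("ALERT: significant drift detected")
```

A monitor could run this per feature on a schedule and trigger retraining or review when the score stays above the chosen threshold.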

In addition, consider the benefits of staying ahead of these changes:

- Improved model performance

- Enhanced decision-making

- Ultimately, a competitive edge in the market

Don't let statistical drift catch you off guard - implement these strategies to safeguard your AI investments.

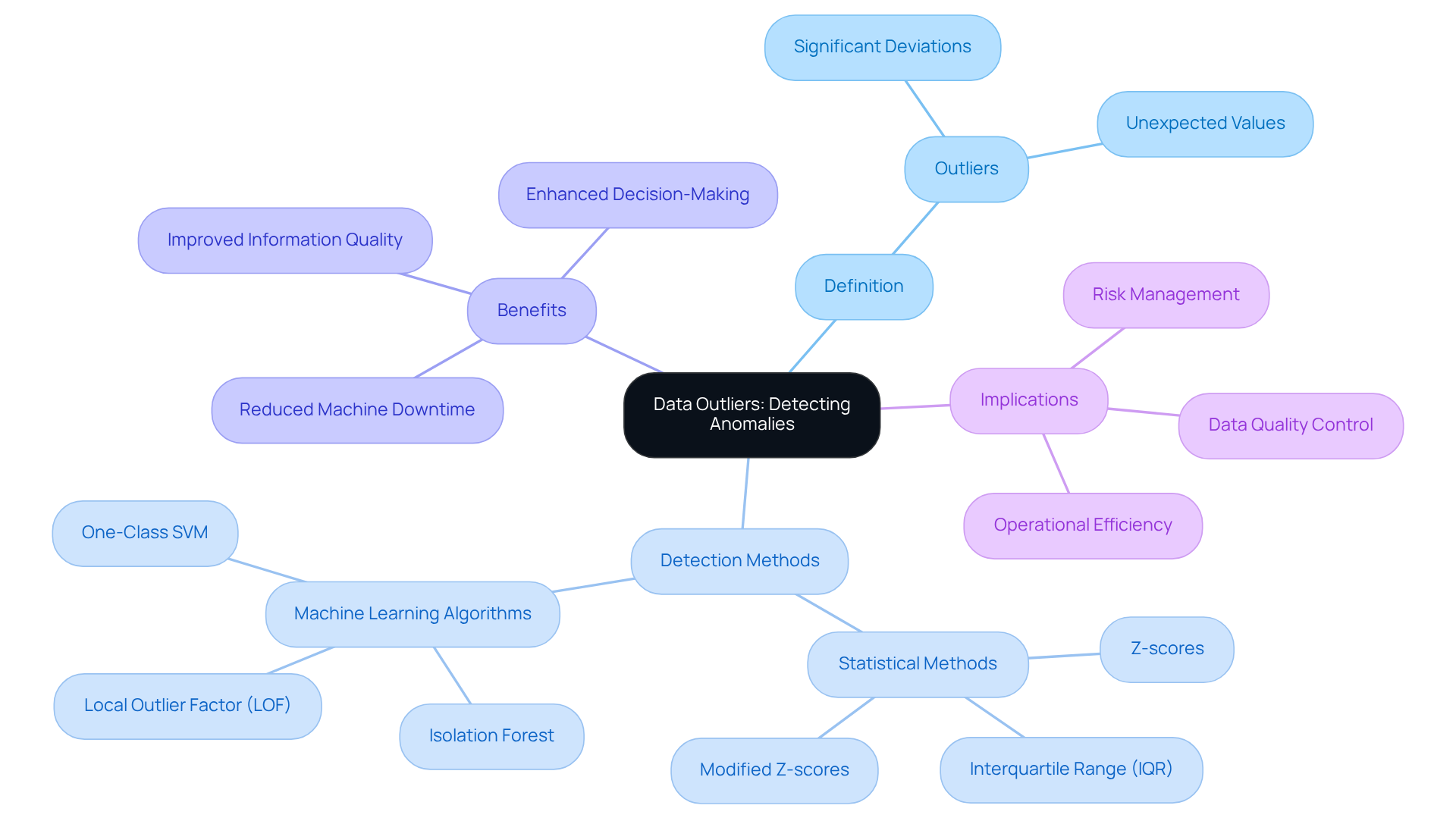

Data Outliers: Detecting Anomalies for Better Insights

Outliers are observations that deviate significantly from expected patterns within a dataset, and detecting them is crucial for maintaining quality and ensuring accurate insights. As FirstEigen states, "An anomaly is a point that does not conform to most of the information." Anomaly detection plays an essential role across various sectors, helping organizations identify points that may indicate inaccuracies or mistakes.

For instance, statistical methods like Z-scores and the Interquartile Range (IQR) are commonly used to pinpoint outliers. In addition, machine learning algorithms, such as Isolation Forest and Local Outlier Factor (LOF), automate the identification process, enhancing efficiency and precision. Organizations that build these techniques into their AI data observability practice can achieve substantial improvements in data quality.
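The two statistical methods can be sketched with Python's `statistics` module alone. The thresholds used here (a Z-score above 3 and the 1.5 × IQR fences) are common conventions rather than fixed rules, and the sample values are made up for illustration.

```python
import statistics


def zscore_outliers(values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the mean."""
    mean = statistics.fmean(values)
    stdev = statistics.pstdev(values)
    if stdev == 0:
        return []
    return [v for v in values if abs(v - mean) / stdev > threshold]


def iqr_outliers(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lo or v > hi]


# Hypothetical readings with one obviously anomalous value.
values = [10, 12, 11, 13, 12, 11, 10, 12, 11, 13, 12, 11, 10, 12, 95]

print(zscore_outliers(values))  # [95]
print(iqr_outliers(values))     # [95]
```

One design note: an extreme value inflates the mean and standard deviation it is measured against, so in small samples the Z-score method can miss outliers that the IQR method catches. Running both is a cheap cross-check.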

Consider this: McKinsey & Company reports that using anomaly detection techniques can reduce machine downtime by up to 50% and increase machine life by 40%. Furthermore, automated systems can monitor far more metrics than manual review allows, making them well suited to large data environments. This scalability is essential as data volumes continue to increase.

Data analysts emphasize the importance of addressing outliers promptly to prevent skewed analyses. Investigating anomalous entries, and removing those that prove to be errors, improves the overall reliability of datasets and lets organizations focus on more consistent and trustworthy data. By combining statistical techniques and machine learning, companies can proactively manage quality with AI data observability, resulting in enhanced decision-making and improved operational efficiency.

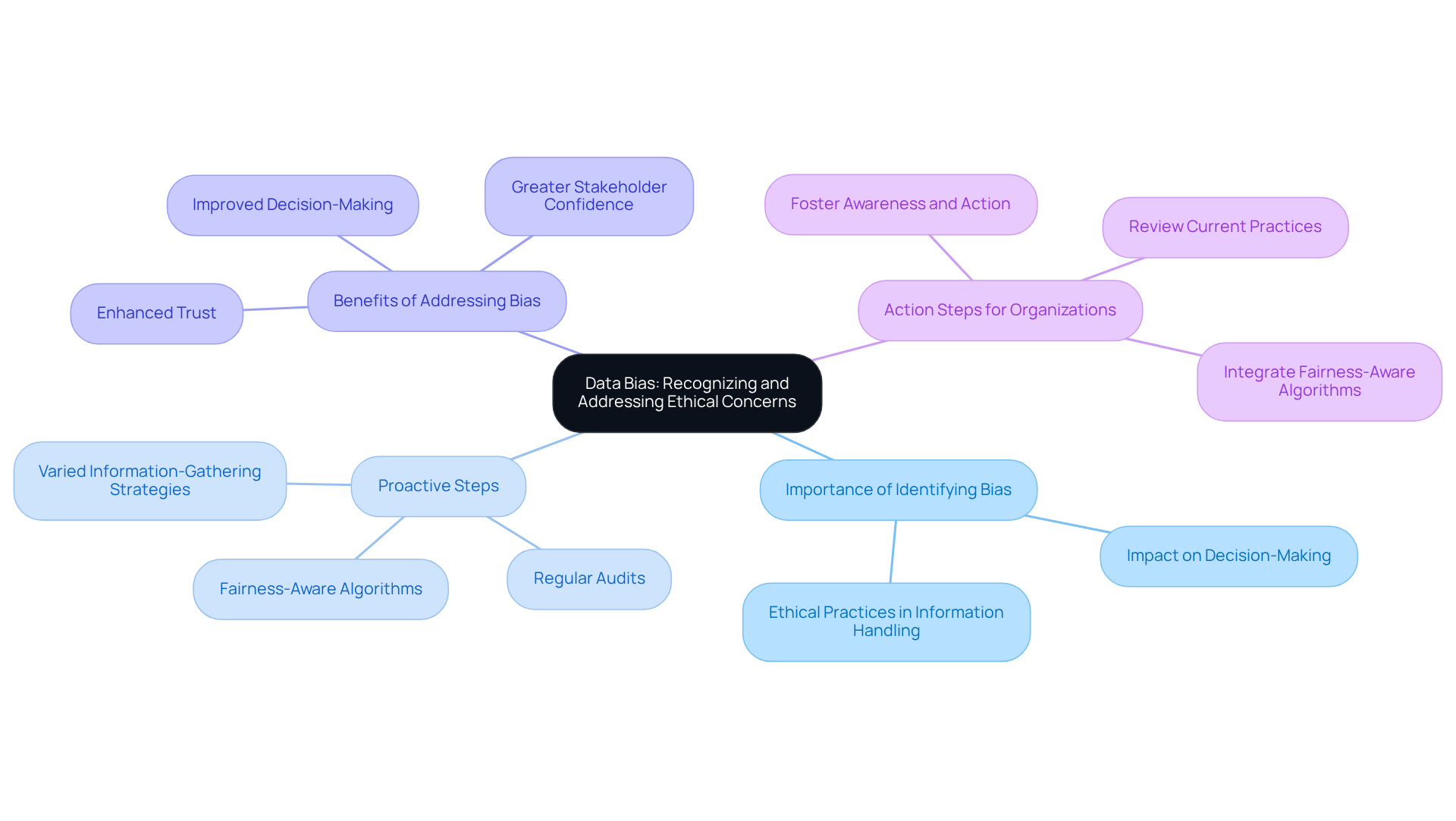

Data Bias: Recognizing and Addressing Ethical Concerns

Data bias refers to systematic errors that can lead to flawed outcomes in analysis and AI models. Why is identifying and tackling bias so crucial? Because it underpins ethical practices in data handling. Organizations can take proactive steps by diversifying how they gather data and conducting regular audits for bias. Furthermore, employing fairness-aware algorithms can significantly reduce bias in their datasets.
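A basic bias audit can start by comparing outcome rates across groups. The sketch below computes per-group selection rates and their ratio, often called the disparate impact ratio. The field names and records are hypothetical, and the 0.8 cutoff follows the informal "four-fifths" rule of thumb; it is only one of several fairness measures, and which one fits depends on the use case.

```python
from collections import defaultdict


def selection_rates(records, group_key, outcome_key):
    """Share of positive outcomes per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for record in records:
        totals[record[group_key]] += 1
        positives[record[group_key]] += 1 if record[outcome_key] else 0
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Hypothetical audit data: 4 of 5 approvals in group A, 2 of 5 in group B.
records = (
    [{"group": "A", "approved": a} for a in (1, 1, 1, 1, 0)]
    + [{"group": "B", "approved": a} for a in (1, 1, 0, 0, 0)]
)

rates = selection_rates(records, "group", "approved")
ratio = disparate_impact(rates)
print(rates)  # {'A': 0.8, 'B': 0.4}
print(ratio)  # 0.5
if ratio < 0.8:  # four-fifths rule of thumb, an assumption
    print("Potential bias: review data collection and model behavior")
```

A low ratio does not prove unfair treatment on its own, but it marks where a closer audit should begin.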

By emphasizing ethical factors in data visibility, organizations not only enhance trust but also foster responsibility in their analytics-driven efforts. Imagine the impact of a more equitable approach to data: improved decision-making and greater stakeholder confidence. In addition, addressing bias can lead to more accurate insights, ultimately benefiting the organization as a whole.

So, what steps can your organization take today to mitigate information bias? Start by reviewing your current practices and consider integrating fairness-aware algorithms into your systems. The journey towards ethical information practices begins with awareness and action.

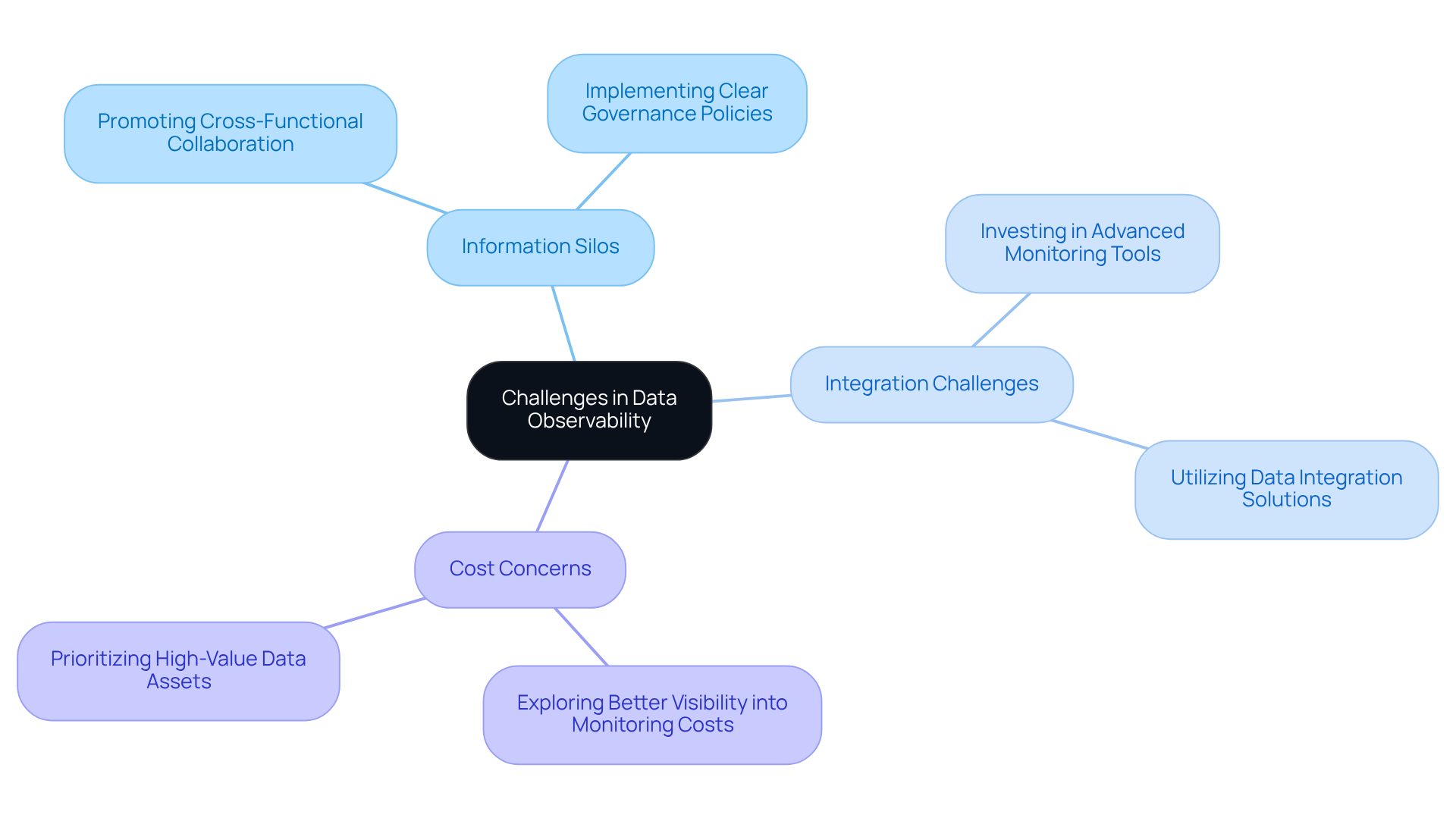

Challenges in Data Observability: Navigating the Complex Landscape

Organizations encounter significant hurdles in establishing effective information visibility practices, primarily due to persistent information silos and integration challenges. Did you know that a staggering 91% of organizations express concerns about costs while striving for complete visibility? Many struggle to manage the increasing volume of data generated from diverse sources.

To tackle this complex landscape, teams need to adopt a comprehensive strategy. This includes:

- Investing in advanced monitoring tools

- Promoting cross-functional collaboration

For instance, PhonePe, a financial technology firm, successfully reduced information silos and improved data quality by leveraging a monitoring platform, leading to enhanced operational performance.

Furthermore, implementing clear governance policies is crucial. Currently, 28% of organizations are embracing a collective model for monitoring and security, reflecting a growing recognition of the need for integrated strategies. By proactively addressing these challenges, organizations can significantly boost their data observability capabilities. This, in turn, drives better business outcomes. Are you ready to enhance your organization's information visibility?

Conclusion

AI data observability stands as a cornerstone for organizations striving to boost efficiency and uphold data integrity. By emphasizing visibility into data flows and quality, companies can proactively pinpoint issues, streamline processes, and ultimately enhance decision-making. Advanced tools like Kodezi OS not only automate code maintenance but also cultivate a culture of continuous improvement within development teams.

Key insights throughout the article shed light on various facets of AI data observability, such as:

- The importance of data freshness

- Lineage tracking

- Schema management

- Anomaly detection

Each of these components is vital in ensuring that data remains accurate, relevant, and compliant with governance standards. By implementing best practices in these areas, organizations can effectively mitigate risks linked to data drift and bias, leading to heightened operational efficiency and trustworthiness of insights derived from their data.

In conclusion, the path to effective data observability transcends merely adopting new technologies; it necessitates a comprehensive strategy that includes monitoring, governance, and cross-functional collaboration. As organizations grapple with increasing data complexities, investing in robust observability practices becomes essential for navigating challenges and unlocking the full potential of their data. Embracing these insights today can pave the way for a more efficient and informed future, ultimately enhancing competitive advantage in an ever-evolving landscape.

Frequently Asked Questions

What is Kodezi and how does it assist developers?

Kodezi is a professional OpenAPI specification generator that automates code maintenance, enhancing data observability. It helps developers by overseeing codebases, identifying and addressing problems, which ensures information accuracy and reliability.

How does Kodezi improve productivity for engineering teams?

Kodezi alleviates the workload on engineering teams by automating routine maintenance tasks, allowing them to prioritize innovation. It integrates AI data observability into the development lifecycle, improving overall software quality and efficiency.

What features does Kodezi offer for code management?

Kodezi automates code reviews, synchronizes API documentation, and generates Swagger UI sites, enabling teams to focus on innovation and enhancing productivity and code quality.

What is AI data observability and why is it important?

AI data observability involves tracking and understanding information as it flows through systems, using metrics, logs, and traces. It is crucial for identifying anomalies, ensuring compliance, and improving decision-making and operational efficiency.

How can organizations ensure data freshness?

Organizations can ensure data freshness by implementing real-time information monitoring systems that track content age and alert teams when details become stale. Techniques like automated information refresh cycles and integration with live sources are essential.

What are the benefits of maintaining data freshness?

Maintaining data freshness enhances the quality of insights derived from information, promotes informed decision-making, and improves operational efficiency. For example, real-time analytics can lead to a 23% rise in profitability.

What is the expected trend regarding real-time information analytics by 2025?

By 2025, almost 95% of enterprises are expected to invest in real-time information analytics, indicating a growing recognition of its significance in enhancing organizational performance.