Overview

Developers face significant challenges in vulnerability detection. This article highlights four strategies for integrating AI into these processes: high-quality data, continuous learning, human oversight, and collaboration. Applied together, they help developers overcome common obstacles such as false positives and the complexities of integrating with legacy systems, improving both the efficiency and the accuracy of threat identification.

Furthermore, the emphasis on collaboration and human oversight ensures that the integration of AI is not just about technology, but also about the people who use it. This approach fosters a more comprehensive understanding of security threats, leading to better outcomes in coding practices. As developers explore these strategies, they will find that the combination of advanced AI and human insight can significantly enhance their security measures.

In addition, adopting these strategies can improve both productivity and code quality. By addressing these pain points, developers can create a more secure coding environment that meets current demands and anticipates future vulnerabilities.

Ultimately, the integration of AI into vulnerability detection processes presents a compelling opportunity for developers. By embracing these strategies, they can enhance their security measures and ensure a more efficient and accurate approach to threat identification in their coding practices.

Introduction

The landscape of cybersecurity is rapidly evolving, presenting significant challenges for organizations as traditional vulnerability detection methods struggle to keep pace with increasingly sophisticated threats. Are manual processes, such as code reviews and static analysis, leaving your organization exposed to potential breaches? As the urgency for more effective solutions grows, artificial intelligence emerges as a transformative force in vulnerability detection.

By automating the analysis of vast datasets and learning from historical patterns, AI enhances the accuracy of threat identification and streamlines response times. This article delves into the limitations of conventional methods, the benefits of integrating AI, best practices for implementation, and the challenges organizations face in this critical area of cybersecurity.

Explore how embracing AI can significantly improve your cybersecurity posture.

Understand Traditional Vulnerability Detection Methods

Identifying weaknesses in code can be a significant challenge for developers. Conventional methods often rely on manual code reviews and static analysis tools, which can be time-consuming and inefficient. Techniques like fuzz testing, symbolic execution, and formal verification are also commonly employed, yet they may not keep pace with the rapid evolution of threats. For instance, manual code reviews can overlook subtle weaknesses, while static analysis tools may produce false alarms that waste resources. Understanding these limitations is essential to appreciating how vulnerability detection AI can automate and enhance these processes.
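To make one of the traditional techniques named above concrete, here is a minimal fuzz-testing sketch. The parser `parse_length_prefixed` is a hypothetical toy target with a deliberate bug, and the `fuzz` helper is an illustrative assumption rather than any particular tool's API.

```python
import random


def parse_length_prefixed(data: bytes) -> bytes:
    """Toy parser (hypothetical): the first byte gives the payload length."""
    length = data[0]  # deliberate bug: raises IndexError on empty input
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated payload")  # documented, expected error
    return payload


def fuzz(target, runs: int = 1000, max_len: int = 8, seed: int = 0):
    """Feed random byte strings to `target` and collect unexpected crashes."""
    rng = random.Random(seed)  # fixed seed keeps the run reproducible
    crashes = []
    for _ in range(runs):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            continue  # expected failure mode, not a finding
        except Exception as exc:
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_length_prefixed)
print(f"{len(crashes)} unexpected crashes found")
```

Random inputs surface the empty-input crash quickly, but a fuzzer like this only finds what its inputs happen to reach, which is one reason such techniques can lag behind new classes of threats.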

Studies indicate that manual code reviews may identify as few as 30% of certain types of flaws, and that vulnerability detection AI can outperform them. This figure underscores the risk of relying on human review alone. Md Nizam Uddin has likewise emphasized the need to bridge academic research and practical implementation, highlighting the importance of identifying current gaps in the research. Breaches caused by overlooked weaknesses in code demonstrate the urgent need for more effective identification techniques, which AI can help provide.

Moreover, prioritizing risk mitigation by severity is crucial, because it ensures that the most critical vulnerabilities are addressed first. Integrating vulnerability detection AI tools into this process not only addresses the challenges above but also results in more secure codebases. By exploring the tools available on platforms like Kodezi, developers can improve their productivity and code quality, making a compelling case for the adoption of AI in coding practices.
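As a rough illustration of severity-based prioritization, the sketch below orders findings so the most severe come first. The `Finding` fields, the 0 to 10 score scale (in the style of CVSS), and the `exposed` tie-breaker are assumptions made for this example, not part of any specific tool.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    identifier: str
    severity: float  # assumed CVSS-style score from 0.0 to 10.0
    exposed: bool    # hypothetical flag: is the component internet-facing?


def prioritize(findings):
    """Sort by severity, highest first; exposure breaks ties."""
    return sorted(findings, key=lambda f: (f.severity, f.exposed), reverse=True)


findings = [
    Finding("Verbose error page", 3.1, True),
    Finding("SQL injection in /login", 9.8, True),
    Finding("Outdated TLS on internal tool", 7.4, False),
]
queue = prioritize(findings)
for item in queue:
    print(f"{item.severity:>4}  {item.identifier}")
```

In practice the sort key would likely include more context, such as asset value or exploit availability, but the principle of working the queue from the top stays the same.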

Leverage AI for Enhanced Vulnerability Detection

Developers often face significant challenges in identifying and resolving vulnerabilities within their code. Vulnerability detection AI improves threat detection by automating the analysis of extensive datasets and identifying patterns indicative of security risks. Through machine learning algorithms, these systems learn from historical data and refine their accuracy over time. Kodezi addresses these challenges by continuously examining codebases, detecting and resolving possible weaknesses in real time, while also tackling performance issues, incorporating exception handling, and improving code formatting.
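The idea of learning from historical data can be sketched with a very small token-based scorer in the style of naive Bayes. This is an illustrative toy under stated assumptions, not how Kodezi or any production system works; the labeled snippets in `history` are invented for the example.

```python
import math
import re
from collections import Counter

TOKEN = re.compile(r"[A-Za-z_]+")


def train(samples):
    """samples: iterable of (code_snippet, is_vulnerable) pairs."""
    counts = {True: Counter(), False: Counter()}
    for code, label in samples:
        counts[label].update(TOKEN.findall(code))
    return counts


def score(counts, code):
    """Sum smoothed log-odds over known tokens; positive means 'looks risky'."""
    vocab = set(counts[True]) | set(counts[False])
    total_bad = sum(counts[True].values()) + len(vocab)
    total_ok = sum(counts[False].values()) + len(vocab)
    result = 0.0
    for tok in TOKEN.findall(code):
        if tok not in vocab:
            continue  # tokens never seen in training carry no evidence
        p_bad = (counts[True][tok] + 1) / total_bad   # add-one smoothing
        p_ok = (counts[False][tok] + 1) / total_ok
        result += math.log(p_bad / p_ok)
    return result


# Hypothetical labeled history: True marks a snippet judged vulnerable.
history = [
    ("strcpy(buf, user_input)", True),
    ("gets(line)", True),
    ("strncpy(buf, user_input, sizeof(buf) - 1)", False),
    ("fgets(line, sizeof(line), stdin)", False),
]
model = train(history)
print(score(model, "strcpy(dest, src)") > 0)
print(score(model, "fgets(buf, sizeof(buf), stdin)") < 0)
```

Adding more labeled examples to `history` shifts the token weights, which is the sense in which such a model refines its accuracy over time. Real systems use far richer features than raw tokens.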

The benefits of using Kodezi are substantial. Rapid issue resolution not only optimizes performance but also supports compliance with the latest security best practices and coding standards. Organizations that have embraced vulnerability detection AI, such as Kodezi's automated code debugging, report significant decreases in analysis time and higher rates of threat identification. For instance, a financial organization that incorporated AI into its risk management procedures achieved a 50% reduction in response time to threats. This illustrates the tangible benefits of AI integration for strengthening security protocols and maintaining robust defenses against emerging vulnerabilities.

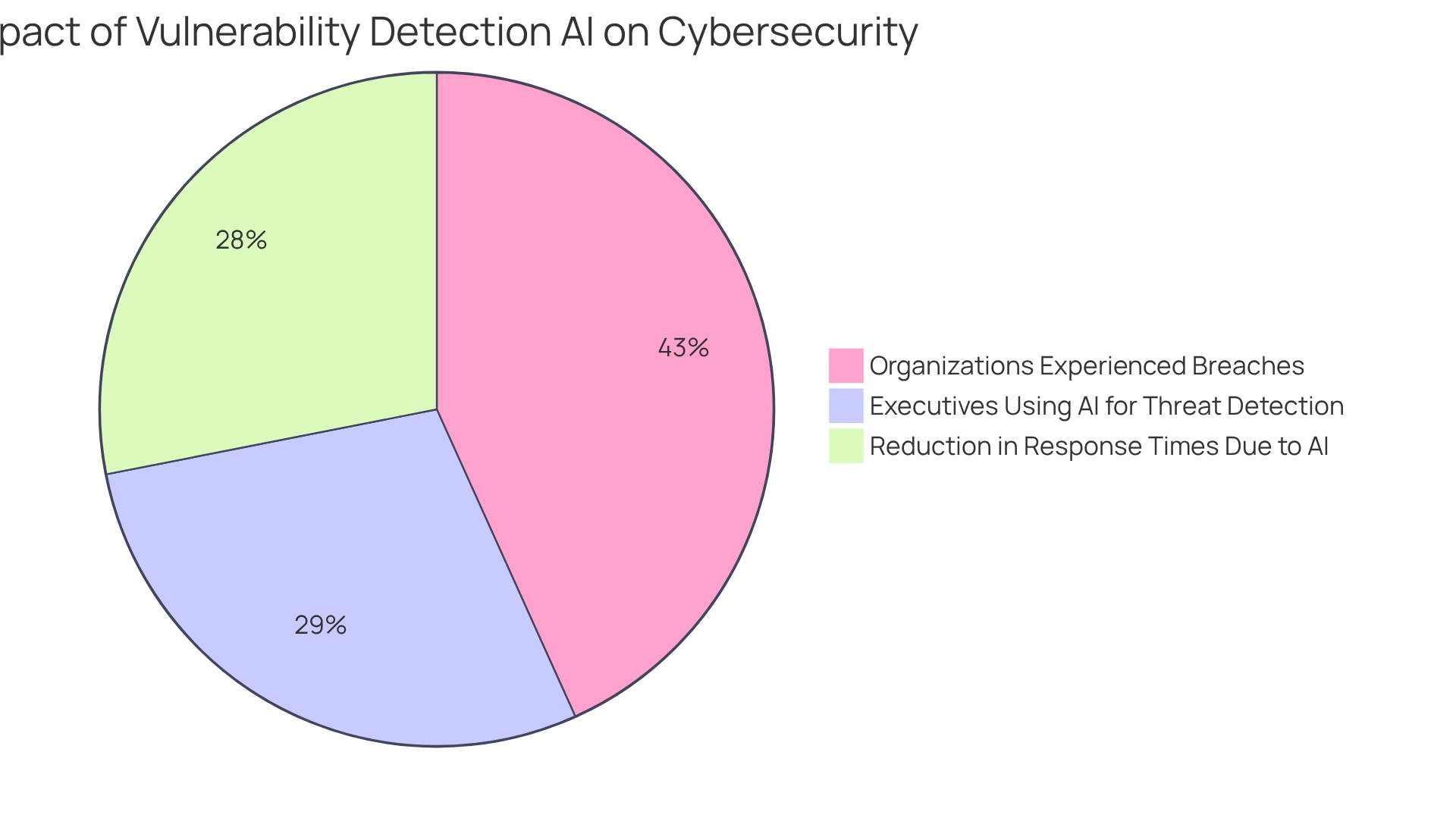

Furthermore, statistics reveal that 77% of organizations have experienced breaches, underscoring the urgent need for robust safeguards. As Anna Ribeiro, Industrial Cyber News Editor, remarks, 'Organizations are increasingly adopting vulnerability detection AI for threat identification, network monitoring, and predictive analytics, highlighting optimism about its security advantages.' In addition, 51% of executives report making extensive use of vulnerability detection AI to identify cyber threats, indicating strong industry support for advanced technologies. Together, these figures reinforce the importance of AI in contemporary risk management.

Are you ready to explore the tools available on the Kodezi platform? By leveraging AI-driven capabilities, you can enhance your coding efficiency and bolster your security posture.

Implement Best Practices for AI Integration in Vulnerability Detection

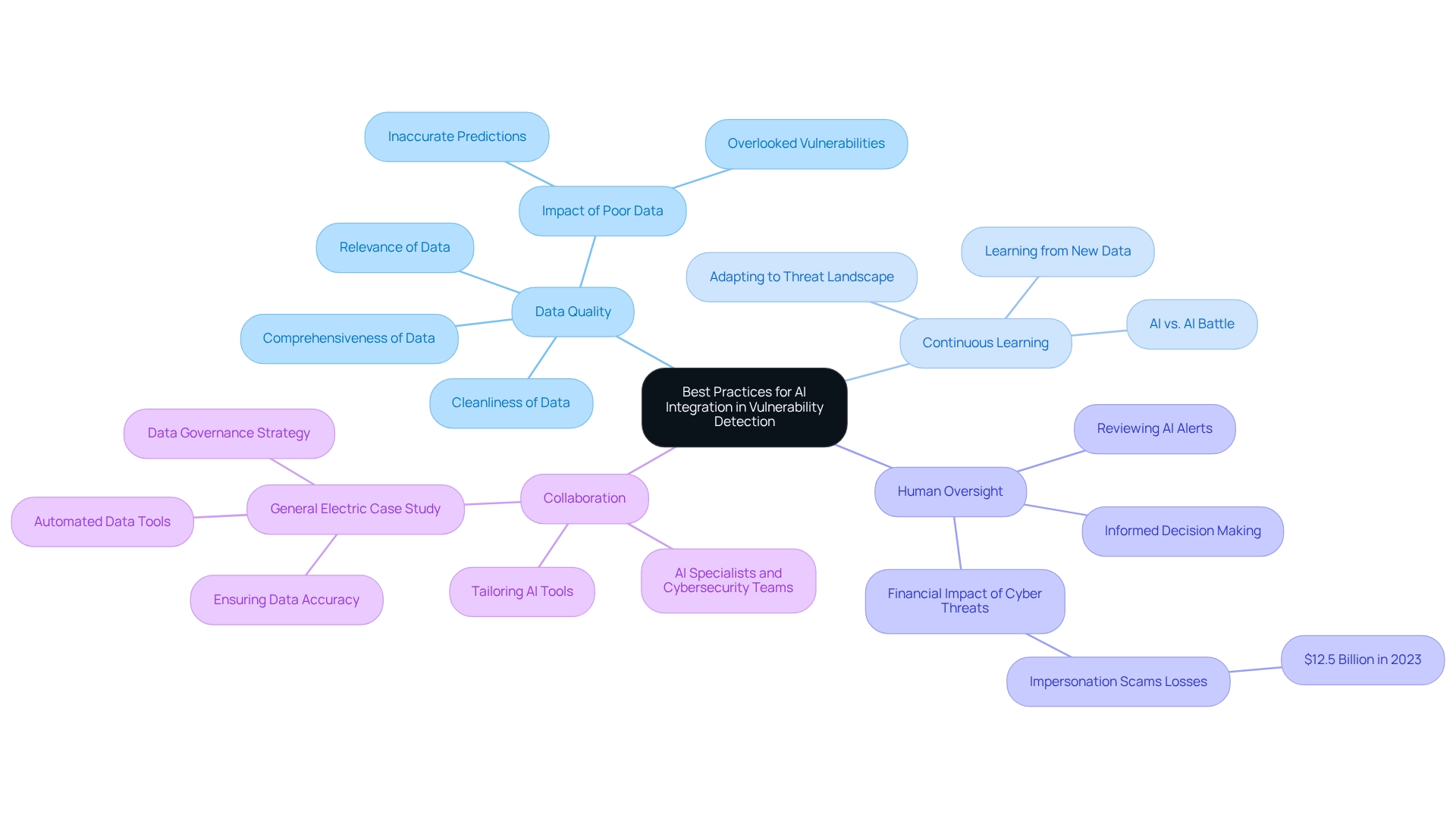

To integrate vulnerability detection AI effectively, organizations must prioritize best practices that enhance their security posture.

Data Quality: The foundation of effective AI implementation lies in the quality of the data. Organizations should prioritize the cleanliness, relevance, and comprehensiveness of the data used to train AI models, because poor data can lead to inaccurate predictions and overlooked vulnerabilities. The stakes are underscored by the statistic that breaches compromised over 353 million victims in 2023, highlighting the urgent need for robust information governance. Furthermore, the global AI cybersecurity market was valued at $22.4 billion in 2023 and is projected to grow to $60.6 billion by 2028, indicating substantial investment in AI for cybersecurity.
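The data quality point above can be illustrated with a small cleaning pass over training records. The record schema (`code` and `label` keys) and the two label values are assumptions made for this sketch.

```python
def clean_training_data(records):
    """Drop incomplete, duplicate, and contradictory records (assumed schema)."""
    seen = {}
    conflicts = set()
    for rec in records:
        code = " ".join((rec.get("code") or "").split())  # normalize whitespace
        label = rec.get("label")
        if not code or label not in ("vulnerable", "safe"):
            continue  # incomplete record: missing code or unusable label
        if code in seen and seen[code] != label:
            conflicts.add(code)  # same code labeled both ways is untrustworthy
        seen.setdefault(code, label)
    return [
        {"code": code, "label": label}
        for code, label in seen.items()
        if code not in conflicts
    ]


raw = [
    {"code": "eval(user_input)", "label": "vulnerable"},
    {"code": "eval(user_input)  ", "label": "vulnerable"},  # duplicate
    {"code": "print('hi')", "label": None},                 # missing label
    {"code": "os.system(cmd)", "label": "vulnerable"},
    {"code": "os.system(cmd)", "label": "safe"},            # contradiction
    {"code": "int(value)", "label": "safe"},
]
cleaned = clean_training_data(raw)
print(len(raw), "->", len(cleaned), "records")
```

Contradictory records are dropped here rather than resolved automatically; in a real pipeline they would more likely be sent back for relabeling.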

Continuous Learning: Establishing systems that enable AI models to learn from new data continuously is crucial. This flexibility ensures alignment with the rapidly changing threat landscape, allowing AI tools to remain effective against emerging risks. Cybersecurity experts predict a long-term battle of AI versus AI in combating cyber threats, making continuous learning not just beneficial but essential.
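One hedged way to operationalize continuous learning is to watch how recent alerts hold up and flag the model for retraining when quality slips. The `RetrainMonitor` class, its window size, and its precision floor are hypothetical choices for illustration.

```python
from collections import deque


class RetrainMonitor:
    """Track verdicts on recent alerts and flag when precision degrades."""

    def __init__(self, window: int = 100, min_precision: float = 0.8):
        # True = alert confirmed as real, False = false positive
        self.verdicts = deque(maxlen=window)
        self.min_precision = min_precision

    def record(self, confirmed: bool) -> None:
        self.verdicts.append(confirmed)  # oldest verdict drops out when full

    def precision(self) -> float:
        if not self.verdicts:
            return 1.0  # no evidence yet, so nothing to complain about
        return sum(self.verdicts) / len(self.verdicts)

    def needs_retraining(self) -> bool:
        window_full = len(self.verdicts) == self.verdicts.maxlen
        return window_full and self.precision() < self.min_precision


monitor = RetrainMonitor(window=5, min_precision=0.8)
for verdict in [True, True, False, True, False]:
    monitor.record(verdict)
print(monitor.precision(), monitor.needs_retraining())
```

Waiting for a full window before signaling avoids retraining on a handful of early verdicts. The retraining step itself is out of scope for this sketch.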

Human Oversight: While AI can automate numerous processes, human expertise remains indispensable. Security teams should actively review AI-generated alerts to validate findings and make informed decisions, which improves the overall effectiveness of the vulnerability detection process. The financial impact of cybersecurity threats is significant: the Federal Bureau of Investigation's Internet Crime Complaint Center reported more than $12.5 billion in losses from internet crime nationally in 2023.
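A minimal sketch of keeping a human in the loop might look like the following. The `Alert` fields, status names, and `review_floor` value are assumptions for illustration; the key design choice is that only a human verdict can confirm or dismiss an alert.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    finding: str
    confidence: float  # model's confidence in [0, 1]
    status: str = "pending_review"
    notes: list = field(default_factory=list)


def route(alert: Alert, review_floor: float = 0.3) -> Alert:
    """The model only sets review priority; it never closes an alert itself."""
    if alert.confidence >= review_floor:
        alert.status = "pending_review"
    else:
        alert.status = "low_priority"
    return alert


def review(alert: Alert, analyst: str, is_real: bool, note: str = "") -> Alert:
    """Record the human verdict so it can be audited later."""
    alert.status = "confirmed" if is_real else "dismissed"
    alert.notes.append(f"{analyst}: {note}" if note else analyst)
    return alert


alert = route(Alert("Possible path traversal in upload handler", 0.82))
alert = review(alert, analyst="sec-team", is_real=True, note="reproduced")
print(alert.status, alert.notes)
```

Recorded verdicts also double as labeled feedback, which ties human oversight back to the quality of future training data.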

Collaboration: Encouraging collaboration between AI specialists and cybersecurity teams is vital for tailoring AI tools to specific security needs. This partnership can lead to more efficient implementations and a deeper understanding of how AI can best support an organization's cybersecurity goals. For instance, General Electric successfully implemented a data governance and quality management strategy within its Predix platform for industrial data analytics, ensuring the data feeding its AI models was both accurate and reliable. By adhering to these best practices, companies can significantly enhance their vulnerability detection capabilities, leveraging AI while mitigating the associated risks.

Address Challenges in AI Vulnerability Detection Implementation

Integrating vulnerability detection AI presents several substantial challenges that organizations must address.

- False Positives are a significant concern. AI systems frequently generate false alerts, leading to alert fatigue among security teams. To combat this, fine-tuning algorithms and implementing strategies to minimize false alerts are essential. Studies indicate that up to 70% of alerts can be false positives in some systems. Effective cybersecurity tools should therefore prioritize actionable alerts, which improves response times and resource allocation.

- Integration with Legacy Systems poses another challenge. Many organizations still rely on outdated systems that may not connect seamlessly with modern AI tools. Research shows that organizations depending on legacy systems took an average of 322 days to complete comparable security operations. A phased approach to integration can help mitigate compatibility issues, facilitating a smoother transition and enhanced functionality.

- Privacy Concerns are critical as well. Utilizing sensitive information for training AI models raises significant privacy issues. Organizations must prioritize compliance with data protection regulations to ensure their AI implementations do not compromise user privacy or violate legal standards.

- Skill Gaps within teams can hinder effective implementation. A lack of expertise in AI and cybersecurity can be addressed by investing in training initiatives and hiring experts, enabling teams to use vulnerability detection AI tools efficiently.

One practical approach is a hybrid solution for vulnerability management that combines automated scanning with expert validation to improve alert accuracy. This enables organizations to act confidently on legitimate alerts and streamline their vulnerability management processes. By proactively tackling the challenges above, organizations can establish a robust and secure AI-based threat detection framework, improve their overall security posture, and reduce the risk of costly breaches.
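For the false positives item in the list above, one simple tuning strategy is to choose an alert threshold from past, expert-validated alerts. The sketch below assumes each historical alert is a `(score, is_real)` pair; the data and the target precision are invented for illustration.

```python
def precision_at(threshold, scored_alerts):
    """Share of alerts at or above `threshold` that experts confirmed."""
    flagged = [is_real for score, is_real in scored_alerts if score >= threshold]
    return sum(flagged) / len(flagged) if flagged else None


def pick_threshold(scored_alerts, target_precision=0.8):
    """Return the lowest threshold whose precision meets the target, or None."""
    for threshold in sorted({score for score, _ in scored_alerts}):
        precision = precision_at(threshold, scored_alerts)
        if precision is not None and precision >= target_precision:
            return threshold
    return None


# Hypothetical history: (model score, confirmed by an expert?)
history = [
    (0.95, True), (0.90, True), (0.80, False), (0.70, True),
    (0.60, False), (0.40, False), (0.30, False),
]
threshold = pick_threshold(history, target_precision=0.75)
print("alert threshold:", threshold)
```

Taking the lowest qualifying threshold keeps as many real findings as possible while holding false alerts to the chosen level. The trade-off is that anything scoring below the threshold goes unflagged, so the target should be revisited as validated history accumulates.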

Conclusion

The integration of artificial intelligence into vulnerability detection represents a crucial advancement in combating cyber threats. Traditional methods, often dependent on manual processes and outdated tools, have proven insufficient in addressing the complexities of modern vulnerabilities. By acknowledging the limitations of these conventional approaches, organizations can better understand the transformative potential of AI, which automates analysis and improves accuracy, ultimately leading to more effective security measures.

AI's capacity to analyze extensive datasets and learn from historical patterns enables organizations to identify and remediate vulnerabilities faster and more precisely than manual review alone. Implementing best practices, such as ensuring data quality, fostering continuous learning, and maintaining human oversight, further enhances the effectiveness of AI-driven solutions. Collaboration between AI specialists and cybersecurity teams is essential for customizing these tools to meet specific organizational needs, thereby ensuring a more robust defense against emerging threats.

Nevertheless, the path to effective AI integration is fraught with challenges. Organizations must contend with issues like false positives, integration with legacy systems, data privacy concerns, and skill gaps. By proactively addressing these challenges, companies can establish a resilient AI-driven vulnerability detection framework that not only bolsters their security posture but also equips them to respond to the evolving landscape of cyber threats.

Embracing AI is no longer optional; it is essential for organizations seeking to protect their assets and maintain compliance in a progressively complex digital environment. The future of cybersecurity hinges on the intelligent integration of technology and human expertise, paving the way for a more secure and resilient approach to vulnerability detection.

Frequently Asked Questions

What are traditional methods for vulnerability detection in code?

Traditional methods include manual code reviews and static analysis tools, which can be time-consuming and inefficient. Other techniques like fuzz testing, symbolic execution, and formal verification are also commonly used.

What are the limitations of conventional vulnerability detection methods?

Conventional methods can overlook minor weaknesses, produce false alarms, and may not keep pace with rapidly evolving threats. For instance, manual code reviews can yield low identification rates, sometimes as low as 30% for specific flaws.

How does vulnerability detection AI improve upon traditional methods?

Vulnerability detection AI can automate and enhance the identification processes, leading to higher identification rates and more secure codebases. It addresses the challenges faced by conventional methods, making it a more effective solution.

Why is it important to prioritize risk mitigation efforts based on severity information?

Prioritizing risk mitigation based on severity can significantly influence the effectiveness of identification strategies, ensuring that the most critical vulnerabilities are addressed first.

What role does practical implementation play in vulnerability detection?

Bridging academic research with practical implementation is essential for improving vulnerability detection methods. Identifying current research deficiencies can lead to more effective techniques and tools.

How can developers improve their productivity and code quality using AI?

By integrating vulnerability detection AI tools into their coding practices, such as those available on platforms like Kodezi, developers can enhance their productivity and improve the overall quality of their code.