Overview

This article explains why optimizing and testing Kubernetes performance matters and addresses common challenges developers face in container orchestration environments. It highlights essential tools such as:

- Kube-burner

- ClusterLoader2

- Prometheus

It details how each contributes to performance evaluation. Kube-burner and ClusterLoader2 generate load by creating and managing cluster resources at scale. Prometheus collects and monitors the performance metrics used to judge the results. Together, they help improve overall system reliability during Kubernetes performance tests, and understanding how they work can significantly improve a developer's experience with Kubernetes.

Introduction

In the rapidly evolving landscape of cloud-native applications, developers often encounter significant challenges in optimizing Kubernetes performance for efficiency and reliability. How can they navigate these complexities? This is where Kodezi comes into play. With its automated OpenAPI specification generation, Kodezi streamlines the development process, allowing developers to focus on what truly matters: building high-quality applications.

Beyond Kodezi, a range of tools supports performance testing in Kubernetes environments. From Kube-burner's load-testing capabilities to monitoring systems like Prometheus, each tool offers distinct advantages that can improve application performance. As developers work to optimize their Kubernetes clusters, scalable frameworks such as KubeEdge and automation tools like Skaffold become invaluable resources.

Understanding these tools is essential for developers who aim to stay ahead in a competitive tech landscape. With demand for high-performance applications on the rise, combining Kodezi with these tools can improve productivity and code quality. Are you ready to explore how these solutions can transform your development practices?

Kodezi: Generate OpenAPI Specifications to Streamline Kubernetes Performance Testing

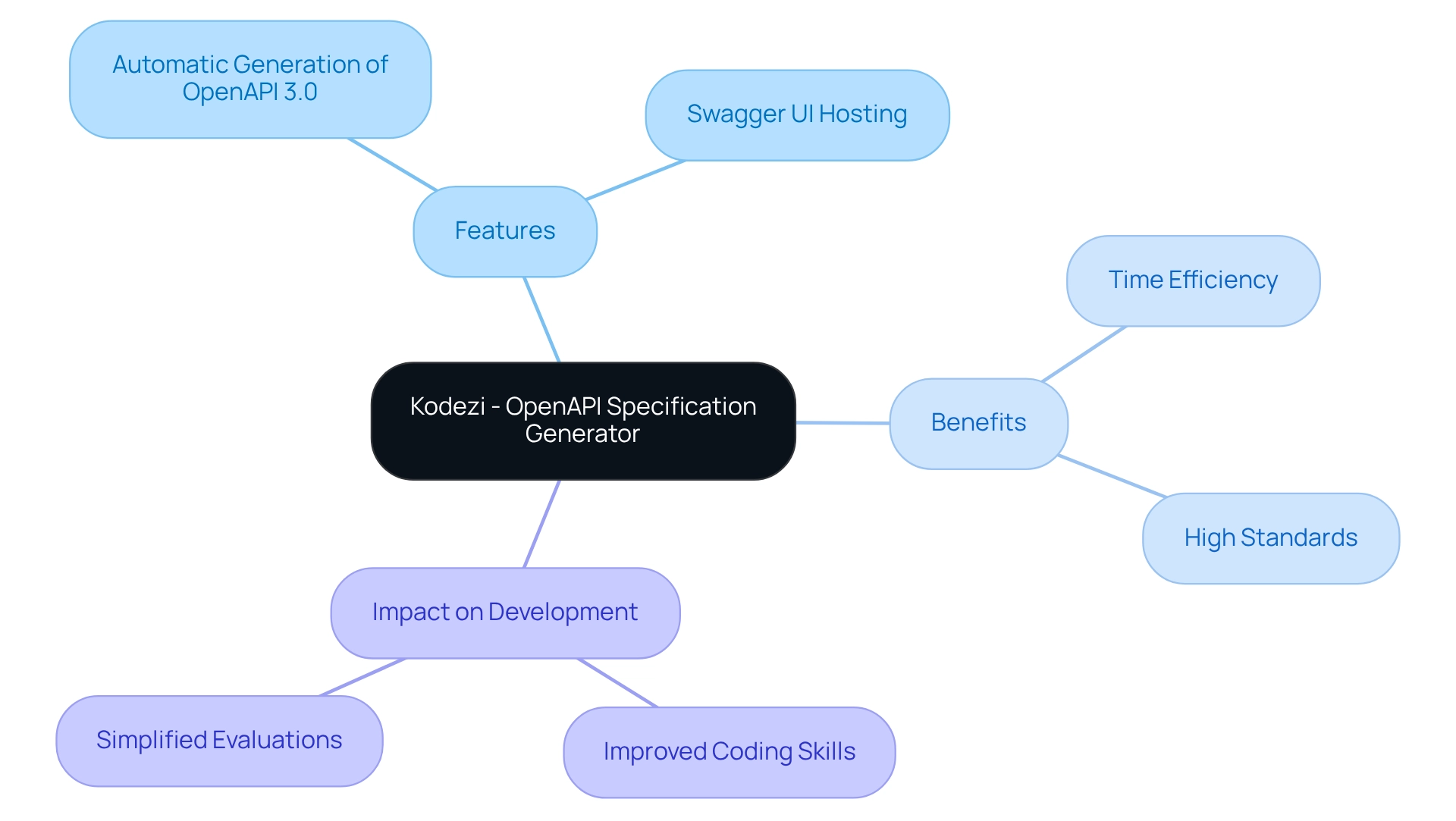

In the ever-evolving landscape of software development, developers often face significant challenges in managing API documentation. Kodezi is an OpenAPI Specification generator that automates this process, which makes evaluating services deployed on Kubernetes more efficient.

How does Kodezi tackle these challenges? With its automatic generation of OpenAPI 3.0 specs, you can quickly create specifications from your codebase, ensuring that evaluations accurately reflect actual API behavior. Furthermore, Kodezi allows you to generate and host a Swagger UI site, facilitating seamless validation of API endpoints and fostering collaboration with team members.
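To make the idea concrete, here is a minimal sketch of what a generated OpenAPI 3.0 document contains. It is built in plain Python from a hypothetical list of routes. It is not Kodezi's implementation; the route tuples, the `build_openapi_spec` helper, and the example endpoints are assumptions for illustration. The required top-level fields (`openapi`, `info` with `title` and `version`, and `paths`) follow the OpenAPI 3.0 specification.

```python
import json

# Hypothetical route metadata, as a generator might extract it from a codebase:
# (HTTP method, path template, short summary)
ROUTES = [
    ("get", "/healthz", "Liveness check"),
    ("post", "/orders", "Create an order"),
    ("get", "/orders/{id}", "Fetch one order"),
]


def build_openapi_spec(title, version, routes):
    """Build a minimal OpenAPI 3.0 document as a plain dict."""
    paths = {}
    for method, path, summary in routes:
        operation = {
            "summary": summary,
            # Every operation must declare at least one response with a description.
            "responses": {"200": {"description": "OK"}},
        }
        # Path templates such as {id} need a matching, required path parameter.
        params = [
            seg[1:-1]
            for seg in path.split("/")
            if seg.startswith("{") and seg.endswith("}")
        ]
        if params:
            operation["parameters"] = [
                {"name": p, "in": "path", "required": True, "schema": {"type": "string"}}
                for p in params
            ]
        paths.setdefault(path, {})[method] = operation
    return {
        "openapi": "3.0.3",
        "info": {"title": title, "version": version},
        "paths": paths,
    }


if __name__ == "__main__":
    spec = build_openapi_spec("Orders API", "1.0.0", ROUTES)
    print(json.dumps(spec, indent=2))
```

A document like this is what Swagger UI renders, which is why keeping it generated from code helps tests reflect the API's actual behavior.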

The benefits of Kodezi extend beyond documentation. By saving time and upholding high standards in API development, Kodezi supports effective evaluations in container orchestration environments, especially when performance-testing services on Kubernetes. Imagine improving your coding abilities while simplifying your evaluation efforts: this is what Kodezi offers.

With the API evaluation market increasingly driven by the need for reliable digital interactions, Kodezi's capabilities are essential for developers aiming to optimize their assessment processes. Why wait? Begin at no cost or request a demonstration today to see how Kodezi can transform your approach to coding.

Kube-burner: Test Kubernetes Performance and Scale Effectively

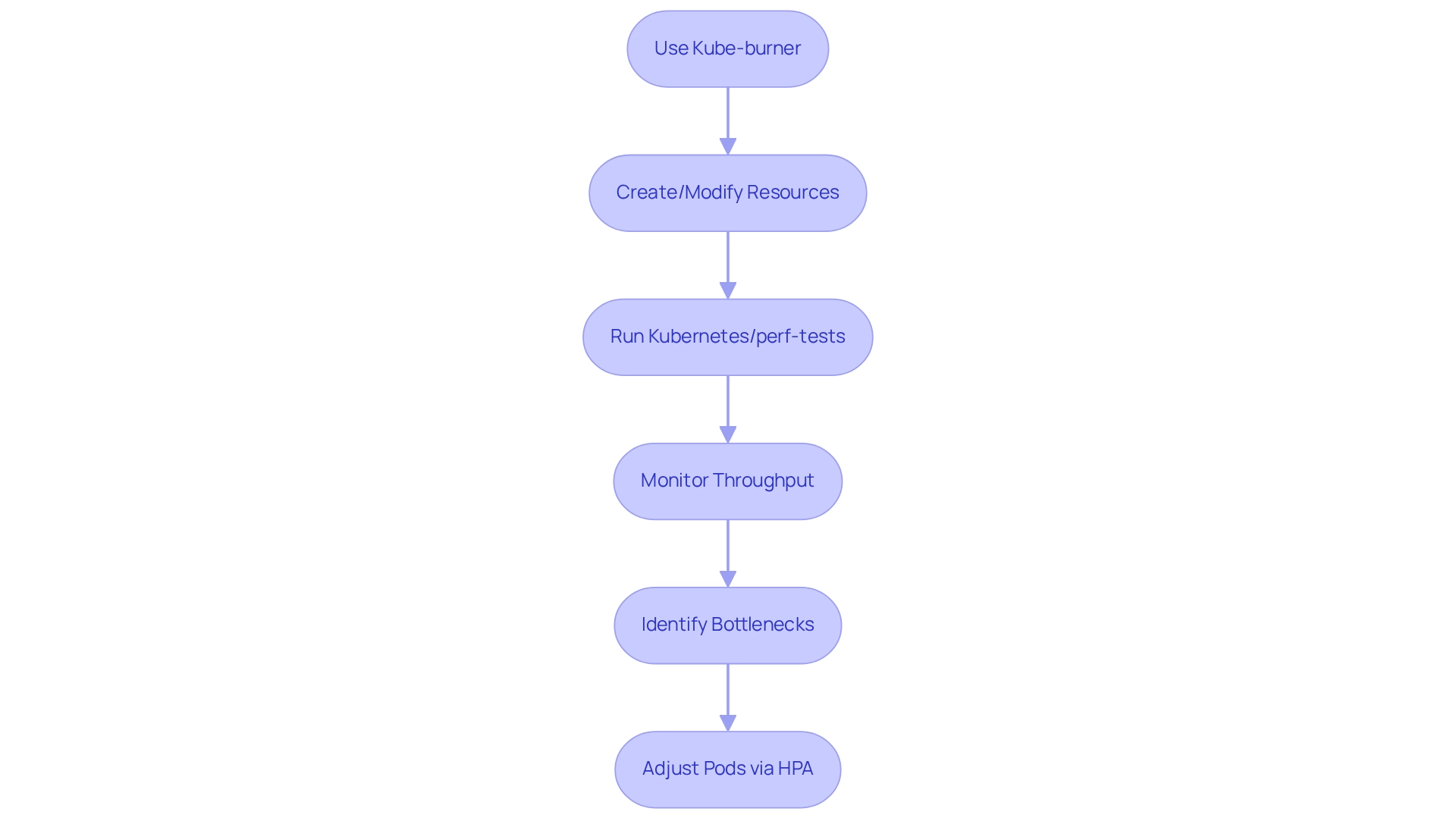

Kube-burner is a robust open-source tool for orchestrating scale and performance tests on Kubernetes clusters. It lets users create, delete, and modify Kubernetes resources at large scale, so teams can evaluate how a cluster behaves under a variety of load conditions.
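Creating thousands of objects quickly can overwhelm the API server, so Kube-burner job definitions expose `qps` and `burst` settings to throttle requests (check the Kube-burner reference for your version). A token bucket is a common way to enforce such a "steady rate plus short burst" limit. The sketch below simulates one in plain Python to show when each creation request would be allowed. It is an illustration of the technique, not Kube-burner's own code, and it assumes the bucket starts full.

```python
def token_bucket_schedule(n_requests, qps, burst):
    """Return the simulated time (seconds) at which each request may be sent.

    The bucket holds at most `burst` tokens, starts full, and refills at `qps`
    tokens per second. Each request consumes one token, and requests are sent
    as soon as the limiter allows.
    """
    if qps <= 0 or burst < 1:
        raise ValueError("qps must be positive and burst at least 1")
    tokens = float(burst)
    now = 0.0
    send_times = []
    for _ in range(n_requests):
        if tokens < 1.0:
            # Wait exactly long enough for one whole token to accumulate.
            now += (1.0 - tokens) / qps
            tokens = 1.0
        tokens -= 1.0
        send_times.append(now)
    return send_times


if __name__ == "__main__":
    # Five object creations with qps=2 and burst=3.
    print(token_bucket_schedule(5, qps=2, burst=3))  # [0.0, 0.0, 0.0, 0.5, 1.0]
```

The first `burst` requests go out immediately, and every later request is spaced `1/qps` seconds apart. Raising `burst` shortens the start of a run without changing its sustained rate.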

By simulating real-world scenarios, these tests help identify bottlenecks and tune resource allocation, so that systems can handle expected traffic loads. They also let teams measure throughput, the number of requests a system handles per unit of time, which is central to understanding efficiency. High throughput indicates that a system can sustain a large volume of requests, which matters for maintaining performance at peak load.
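Throughput as defined above is completed requests divided by elapsed time. The minimal sketch below assumes you have a list of non-negative request-completion timestamps in seconds, for example exported from a load-test run. The function names are hypothetical.

```python
def throughput(completion_times, window_start, window_end):
    """Requests completed per second inside [window_start, window_end)."""
    duration = window_end - window_start
    if duration <= 0:
        raise ValueError("window must have positive length")
    completed = sum(1 for t in completion_times if window_start <= t < window_end)
    return completed / duration


def per_second_throughput(completion_times):
    """Completions in each whole-second bucket, starting at second 0."""
    if not completion_times:
        return []
    buckets = [0] * (int(max(completion_times)) + 1)
    for t in completion_times:
        buckets[int(t)] += 1  # assumes non-negative timestamps
    return buckets


if __name__ == "__main__":
    times = [0.1, 0.5, 1.2, 1.9, 2.5]
    print(throughput(times, 0.0, 2.0))   # 2.0 requests/s
    print(per_second_throughput(times))  # [2, 2, 1]
```

A per-second series like this makes it easy to see whether throughput holds steady as load ramps up or drops off at peak.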

For instance, a recent deployment of the Horizontal Pod Autoscaler (HPA), the Kubernetes feature that adjusts the number of pods based on metrics such as CPU usage, scaled a PHP application up to four replicas when CPU usage exceeded 80%. The HPA also reduced the number of pods when the load generator was scaled down, keeping resource usage efficient and the system stable as load varied. Scale tests run with tools like Kube-burner are a natural way to verify this kind of autoscaling behavior.
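The HPA's core rule, as described in the Kubernetes autoscaling documentation, is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. No change is made when the ratio is within a tolerance (0.1 by default). The Python sketch below applies that rule and clamps the result to the configured minimum and maximum. It deliberately ignores stabilization windows, pod readiness, and missing metrics, so treat it as an approximation of the controller rather than a reimplementation.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas, max_replicas, tolerance=0.1):
    """Approximate the HPA scaling rule for a single utilization metric."""
    ratio = current_utilization / target_utilization
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Never go outside the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Treat 80% CPU as the target and four replicas as the ceiling, as above.
    print(desired_replicas(2, 120, 80, 1, 4))  # 3: scale up
    print(desired_replicas(3, 200, 80, 1, 4))  # 4: capped at max_replicas
    print(desired_replicas(4, 20, 80, 1, 4))   # 1: load generator scaled down
```

Running a scale test and comparing the observed replica counts with this rule is a quick sanity check that the autoscaler is configured as intended.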

In addition, Kube-burner integrates with other open-source tools, such as Prometheus for collecting metrics during a run, giving users a more complete toolkit for testing and scale evaluation. Companies are increasingly focusing on smaller, targeted AI implementations, a trend noted by Michael Dell. As that happens, optimizing the efficiency of Kubernetes clusters becomes even more important, which makes Kube-burner a valuable resource for developers aiming to improve their software's efficiency.

To fully leverage the advantages of Kube-burner, teams should consider integrating it into their evaluation strategies to ensure robust application functionality under diverse conditions.

ClusterLoader2: Optimize Kubernetes Cluster Performance

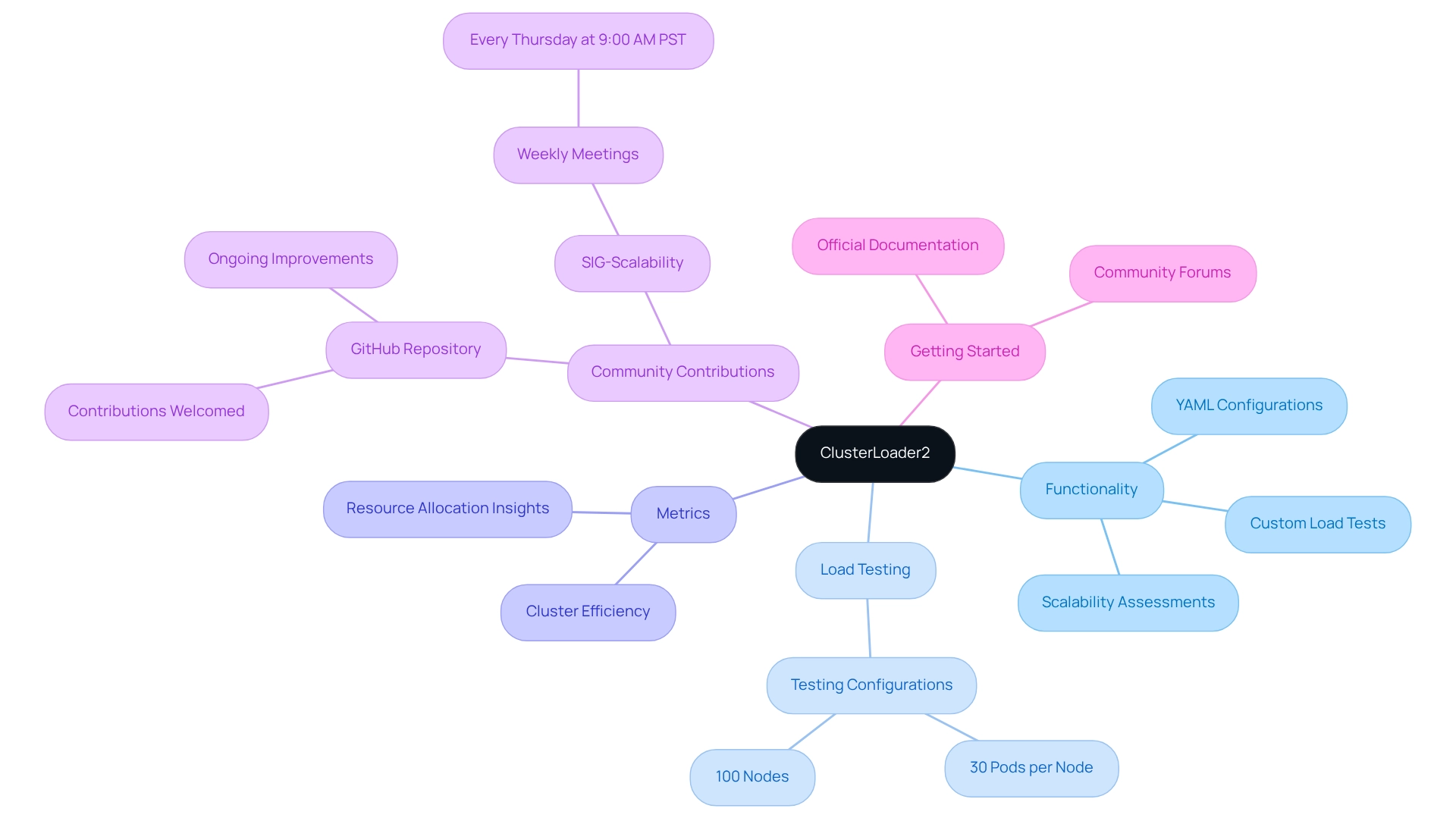

ClusterLoader2, the load-testing framework maintained in the kubernetes/perf-tests repository, is designed to assess the scalability and performance of Kubernetes clusters. Its flexibility comes from letting teams define custom load tests in YAML configuration files, so assessments can be tailored to specific scenarios and yield comprehensive insights.

By delivering detailed metrics on cluster performance, ClusterLoader2 enables teams to make informed, data-driven decisions about resource allocation and scaling strategies. For instance, tests run on full 100-node clusters with 30 pods per node provide a solid basis for understanding how a cluster behaves under load. Such metrics are vital for improving application performance and for ensuring that clusters can handle diverse workloads. As container orchestration evolves, the importance of scalability assessment tools like ClusterLoader2 becomes increasingly clear. Contributions to the kubernetes/perf-tests GitHub repository are encouraged, fostering a collaborative environment for ongoing improvement and experimentation. This community-driven approach strengthens the tool's capabilities and keeps it aligned with current scalability-testing practice, giving users access to effective strategies for optimizing efficiency.
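The density figures above translate directly into object counts, and latency metrics from a load test are usually judged by percentiles. The sketch below computes the pod total for a 100-node, 30-pods-per-node run and checks a 99th-percentile pod startup latency against a threshold. The helper names, the sample latencies, and the 5-second threshold are assumptions for illustration, not ClusterLoader2 output or configuration.

```python
import math


def total_pods(nodes, pods_per_node):
    """Pods required to fill every node to the target density."""
    return nodes * pods_per_node


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list (pct in (0, 100])."""
    if not values or not 0 < pct <= 100:
        raise ValueError("need values and 0 < pct <= 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


def meets_latency_target(latencies_s, pct=99, threshold_s=5.0):
    """True if the chosen percentile of pod startup latency is within the threshold."""
    return percentile(latencies_s, pct) <= threshold_s


if __name__ == "__main__":
    print(total_pods(100, 30))  # 3000 pods for the 100-node density run
    # Made-up startup latencies (seconds) standing in for measured values.
    sample = [1.8, 2.1, 2.4, 2.2, 3.0, 2.7, 1.9, 4.6, 2.5, 2.0]
    print(meets_latency_target(sample))  # True
```

Judging by a high percentile rather than the mean keeps a handful of slow pod starts from hiding behind many fast ones.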

To begin using ClusterLoader2, developers can refer to the official documentation and community forums for guidance on setting up their initial load tests. Engaging with the Special Interest Group (SIG-Scalability), which meets every Thursday at 9:00 AM PST, can also provide valuable insights and support from fellow cloud-native technology enthusiasts.

Prometheus: Monitor and Analyze Kubernetes Performance Metrics

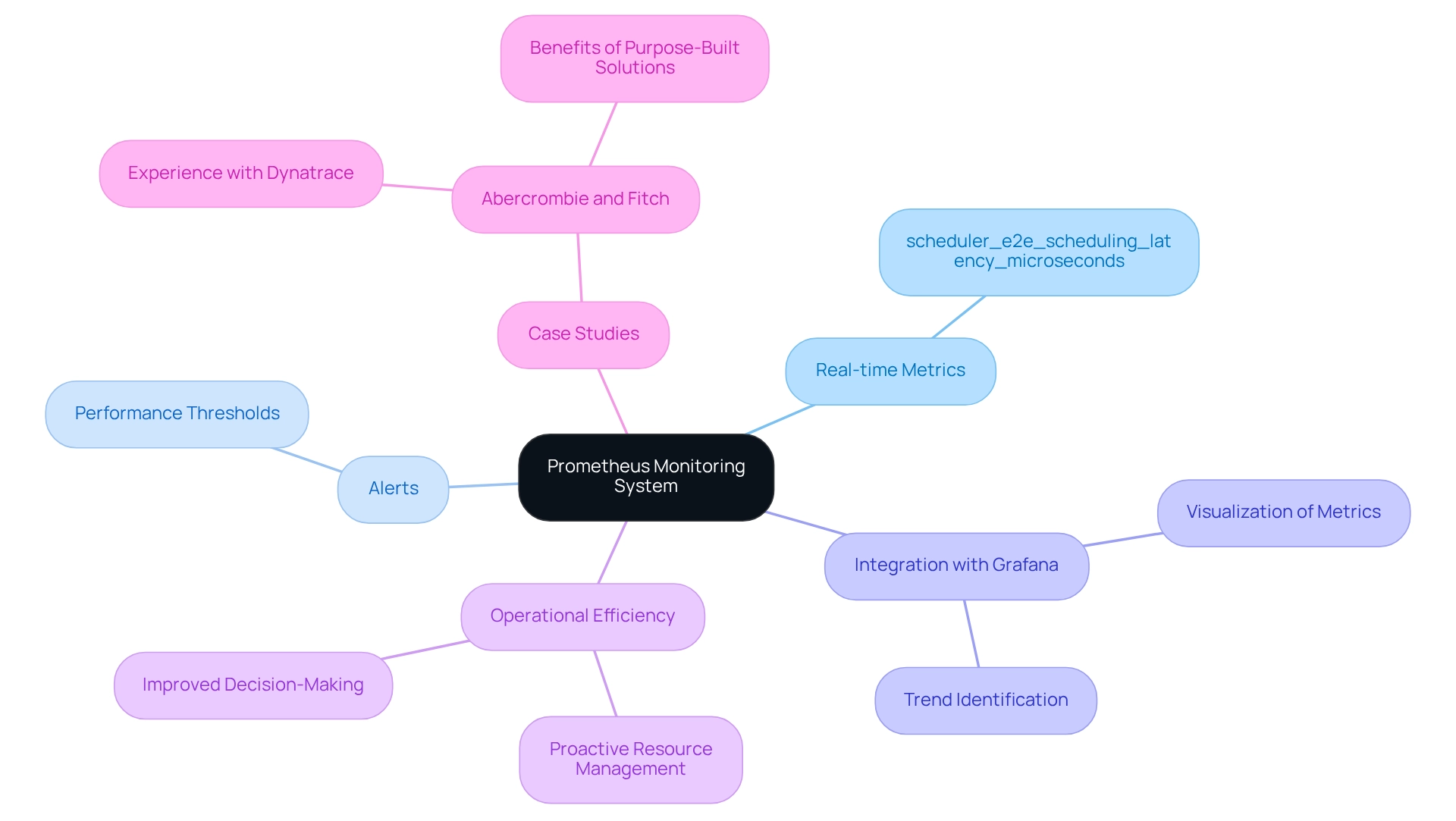

Prometheus is a powerful open-source monitoring system that collects and stores metrics as time-series data. It offers real-time insight into cluster operations during Kubernetes performance tests. It lets developers define alerts on specific performance thresholds, which supports proactive resource management. As organizations increasingly focus on real-time metrics collection in 2025, this kind of monitoring has become essential for operational efficiency and system reliability. For example, the scheduler_e2e_scheduling_latency_microseconds metric, exposed by the scheduler in older Kubernetes releases, measures the total end-to-end latency of scheduling workload pods. It underscores the need for prompt monitoring during performance tests.
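Scheduling-latency metrics like this one are typically exported as Prometheus histograms. Percentiles are then estimated from the cumulative buckets with PromQL's `histogram_quantile()`, which interpolates linearly inside the bucket that contains the requested rank. The Python sketch below reproduces that estimation for a single series so the arithmetic is visible. The bucket boundaries and counts are made-up example values, and edge cases Prometheus handles (NaN values, non-monotonic buckets, negative bounds) are left out.

```python
import math


def histogram_quantile(q, buckets):
    """Estimate the q-quantile from cumulative (upper_bound, count) buckets.

    `buckets` must be sorted by upper bound, have non-negative bounds, and end
    with +Inf, mirroring the `le` labels of a Prometheus histogram.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    prev_bound, prev_count = 0.0, 0
    for bound, count in buckets:
        if count >= rank:
            if math.isinf(bound):
                # Rank lands in the +Inf bucket: report the highest finite bound.
                return prev_bound
            # Linear interpolation within the bucket that holds the rank.
            return prev_bound + (bound - prev_bound) * (rank - prev_count) / (count - prev_count)
        prev_bound, prev_count = bound, count
    return prev_bound


if __name__ == "__main__":
    # Made-up cumulative buckets: upper bound in seconds, observations so far.
    buckets = [(0.1, 10), (0.5, 60), (1.0, 90), (math.inf, 100)]
    print(histogram_quantile(0.5, buckets))   # about 0.42
    print(histogram_quantile(0.95, buckets))  # 1.0
```

The result is only as precise as the bucket layout. With wide buckets, the interpolated p99 can be far from the true value, so bucket boundaries are worth choosing around the latencies you care about.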

Pairing Prometheus with Grafana lets teams visualize metrics effectively, making it easier to spot trends and potential issues before they affect application availability. The combination improves monitoring and supports informed decision-making through comprehensive data analysis during performance tests.

Case studies of container-aware monitoring show why this matters in practice. Abercrombie and Fitch's experience with Dynatrace, a commercial monitoring platform, is one example. The IT Director noted, "Most alternative solutions are unaware of containerized environments and find it difficult to monitor Red Hat OpenShift. Dynatrace was purpose-built for these environments, giving us instant answers and out of the box value from day one." This highlights how monitoring tools designed for containers can significantly improve the operational management of Kubernetes environments.

As Kubernetes evolves, integrating Prometheus with tools like Grafana remains vital for ensuring the performance and reliability of cloud-native systems.

KubeEdge: Enhance Performance Testing for Edge Computing

KubeEdge is an innovative open-source framework that extends Kubernetes to edge computing environments. It lets developers manage edge nodes and the applications running on them seamlessly. This makes it well suited to deploying and evaluating applications where efficiency and reliability are crucial.

By supporting performance assessments in edge scenarios, KubeEdge helps applications maintain high performance even in distributed, resource-constrained settings. For instance, one notable scalability test used a deployment scheme built on the kubernetes/perf-tests tooling. It exercised the Kubernetes control plane while connecting hollow (simulated) edge nodes to CloudCore, with the KubeEdge control plane deployed across five CloudCore instances. This illustrates a structured approach to testing large-scale Kubernetes environments.

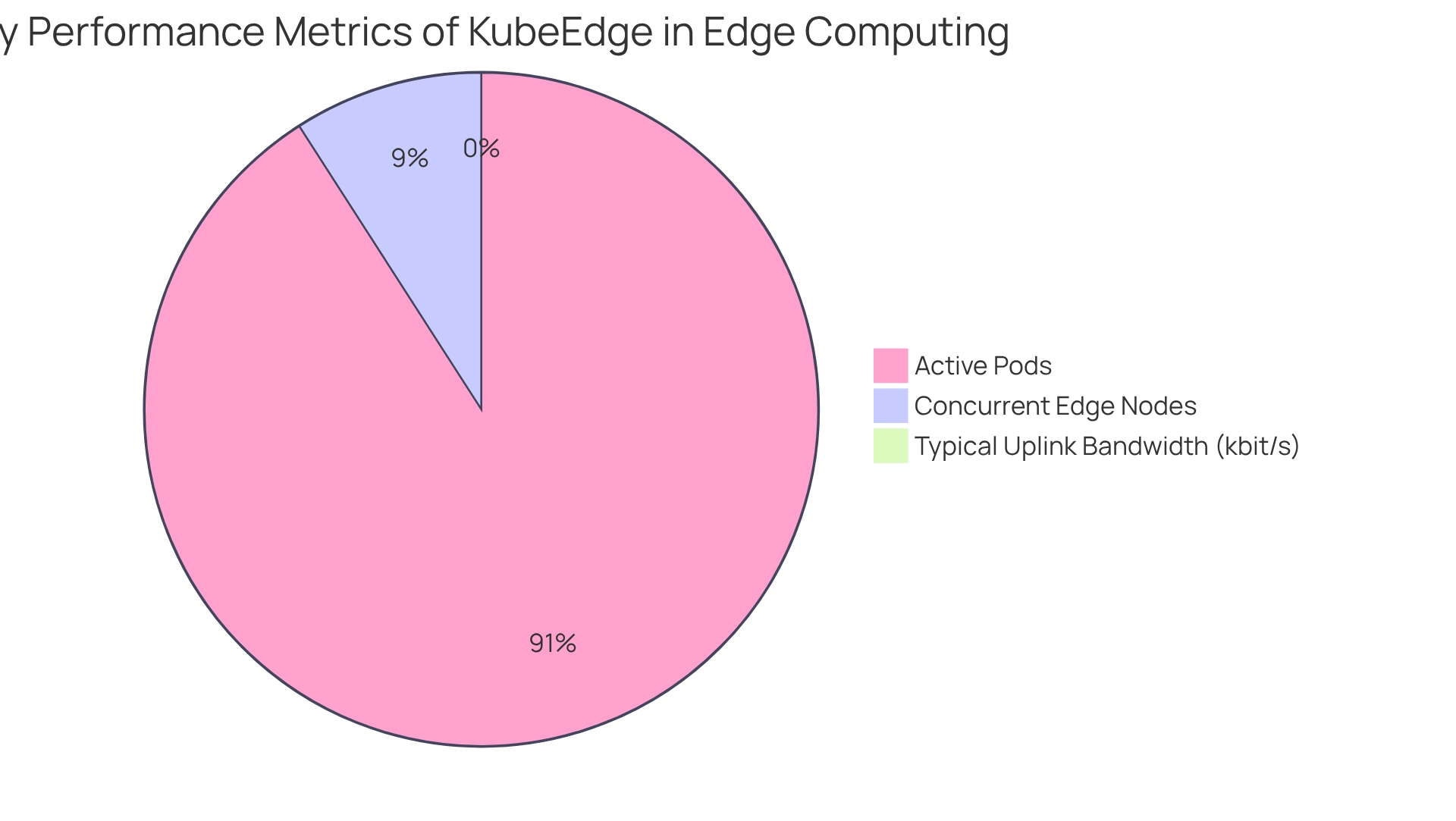

Recent scalability tests show that KubeEdge can scale to 100,000 concurrent edge nodes while managing more than 1,000,000 active pods on those nodes. This capability is particularly valuable for industries that depend on reliable software at the edge. The typical uplink bandwidth to each edge node is only about 0.25 kbit/s, which underscores how constrained these environments are and why efficiency matters. As edge computing continues to evolve, KubeEdge stands out as a vital resource for performance assessments, helping applications remain robust across diverse operational contexts.
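The scale figures above are easier to reason about as averages. Here is a quick back-of-the-envelope calculation in Python using only the numbers quoted above. It assumes pods are spread evenly across nodes and that every node sends at the typical uplink rate at the same time, which is a simplification.

```python
EDGE_NODES = 100_000
ACTIVE_PODS = 1_000_000
UPLINK_KBIT_PER_NODE = 0.25  # typical per-node uplink, kbit/s

# Average pods per edge node, assuming an even spread.
pods_per_node = ACTIVE_PODS / EDGE_NODES
# Aggregate uplink if every node used the typical rate simultaneously, in Mbit/s.
aggregate_uplink_mbit = EDGE_NODES * UPLINK_KBIT_PER_NODE / 1000

print(f"{pods_per_node:.0f} pods per node on average")    # 10 pods per node on average
print(f"{aggregate_uplink_mbit:.1f} Mbit/s aggregate uplink")  # 25.0 Mbit/s aggregate uplink
```

Roughly ten pods per node over a fraction of a kilobit per second is why edge control traffic has to be kept lean.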

Apache JMeter: Conduct Load Testing for Kubernetes Applications

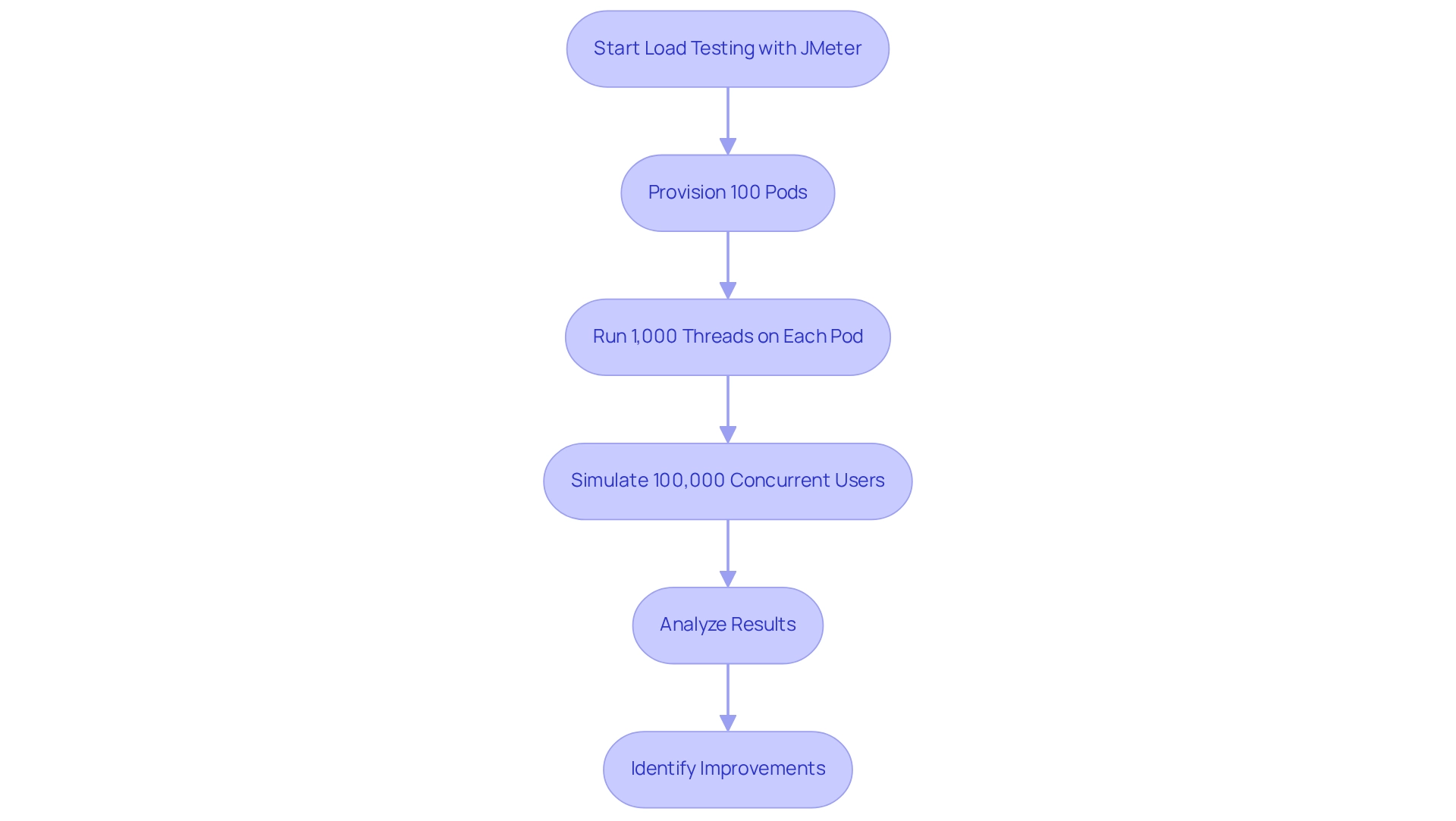

Apache JMeter is a robust open-source tool for load testing web applications, including those running in container orchestration environments. It lets developers simulate many users interacting with an application at once, yielding vital insight into how it behaves under pressure. By running JMeter on Kubernetes, teams can automate their load tests and confirm that applications remain resilient and handle high traffic without compromising quality.

In one recent load-testing scenario, 100 pods were provisioned, each running a JMeter slave (worker) with 1,000 threads, which simulated 100,000 concurrent users (100 × 1,000). This demonstrates JMeter's scalability in high-demand situations and directly addresses developers' need to verify behavior under stress. As Shishir Khandelwal from the DevOps team remarked, "We needed a more automated and scalable solution," which underscores the role tools like JMeter play in meeting that need.
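The arithmetic behind that setup is simple: concurrent users equals worker pods times threads per worker. In JMeter's distributed mode, each remote server runs the full test plan, so the plan's thread count applies per worker. The sketch below computes the number of worker pods for a target user count. It also assembles a non-GUI distributed command line using JMeter's standard options: `-n` (non-GUI), `-t` (test plan), `-R` (remote servers), and `-l` (results file). The test-plan filename and worker hostnames are hypothetical. In a Kubernetes setup they would typically come from your Helm chart or a headless Service.

```python
import math


def workers_needed(target_users, threads_per_worker):
    """Worker pods required so that workers x threads covers the target users."""
    return math.ceil(target_users / threads_per_worker)


def jmeter_distributed_cmd(test_plan, worker_hosts, results_file):
    """Argument list for a non-GUI JMeter run started from the master."""
    return [
        "jmeter",
        "-n",                          # non-GUI mode
        "-t", test_plan,               # test plan (.jmx)
        "-R", ",".join(worker_hosts),  # remote servers that each run the plan
        "-l", results_file,            # where sample results are written
    ]


if __name__ == "__main__":
    n = workers_needed(100_000, 1_000)  # 100 workers, as in the scenario above
    # Hypothetical DNS names for worker pods behind a headless Service.
    hosts = [f"jmeter-worker-{i}.jmeter-workers" for i in range(n)]
    cmd = jmeter_distributed_cmd("load-test.jmx", hosts, "results.jtl")
    print(n, cmd[:4])
```

Returning an argument list rather than a shell string lets it be passed straight to `subprocess.run` without quoting issues.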

Looking ahead, planned improvements to this JMeter setup focus on parameterizing additional elements of its Helm chart. This will give direct control over thread counts and heap memory allocation for the JMeter master, making the setup more flexible and easier to use so teams can tailor their load tests to specific application requirements.

Real-world examples like this one show JMeter's effectiveness in high-traffic scenarios on Kubernetes. As teams increasingly rely on automated load testing, running JMeter on Kubernetes becomes essential for maintaining application performance and reliability.

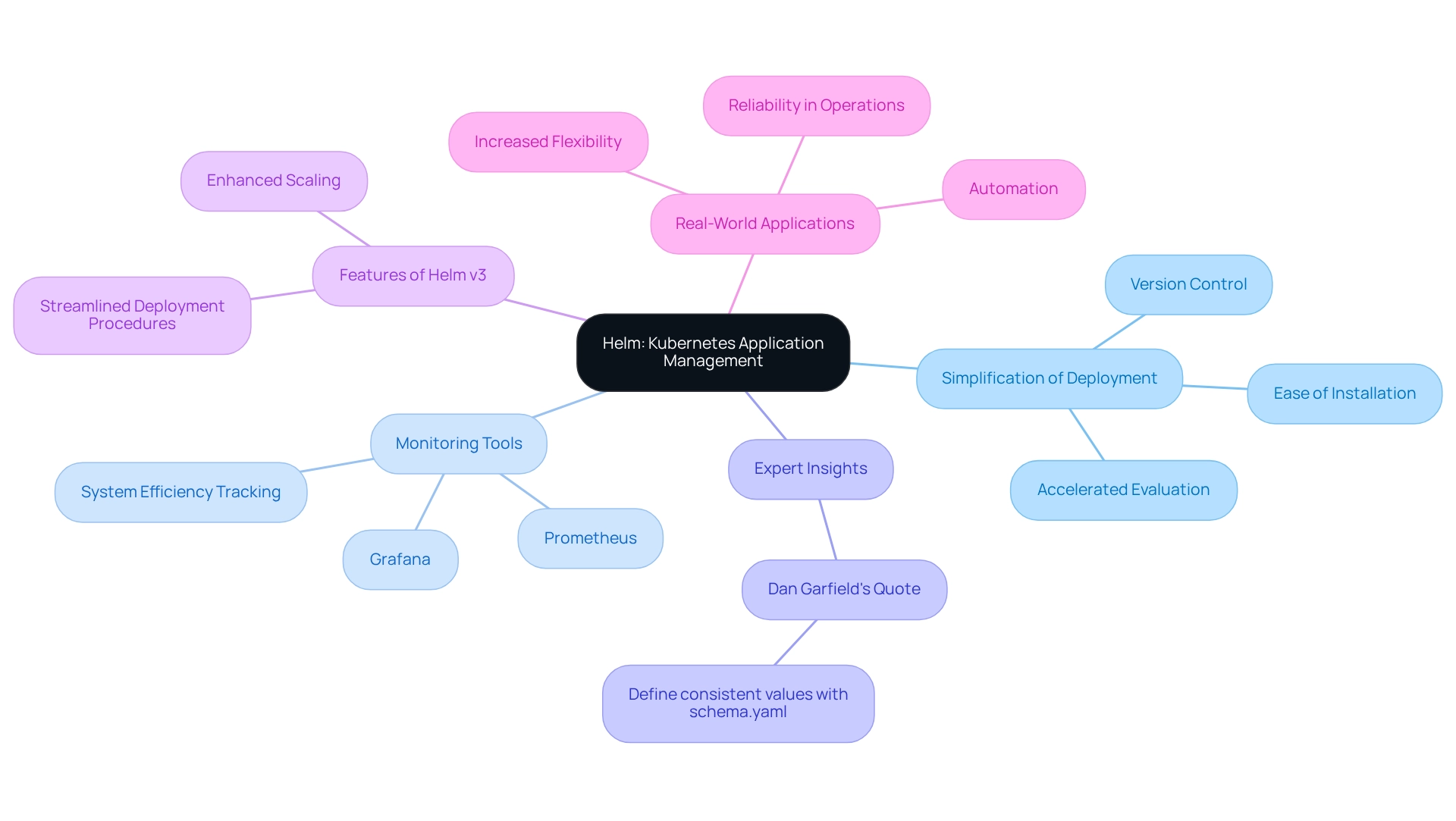

Helm: Simplify Kubernetes Application Management for Better Performance Testing

Helm is an open-source package manager for Kubernetes. Helm charts let developers define, install, and upgrade applications with ease, which significantly speeds up the setup of test environments. Teams can quickly deploy different versions of an application and evaluate their quality under diverse conditions. This strengthens their optimization strategies and improves the overall behavior of their clusters.
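When a chart is installed with several values files (for example `helm install -f values.yaml -f perf-test.yaml`), later files override earlier ones. Nested maps are merged key by key, while scalars and lists are replaced outright. That layering is what lets one chart be reused across test scenarios. The Python sketch below mimics it with plain dicts. The keys shown are hypothetical chart values, and Helm's special case of using `null` to delete a key is not modeled.

```python
import copy


def merge_values(base, override):
    """Return a new dict with `override` layered on top of `base`.

    Nested dicts are merged recursively; any other value (scalar or list)
    in `override` replaces the one in `base`. Inputs are not modified.
    """
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = merge_values(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


# Hypothetical chart defaults and a performance-test overlay.
DEFAULTS = {
    "replicaCount": 2,
    "image": {"repository": "example/app", "tag": "1.4.0"},
    "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
}
PERF_TEST = {
    "replicaCount": 10,
    "image": {"tag": "1.5.0-rc1"},
    "resources": {"limits": {"cpu": "1"}},
}

if __name__ == "__main__":
    print(merge_values(DEFAULTS, PERF_TEST))
```

Keeping each test scenario as a small overlay makes it obvious which settings differ between runs, which helps when comparing results.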

Monitoring tools like Prometheus and Grafana also play a vital role in tracking Helm deployments. They provide insight into system performance during evaluations, which is critical for identifying bottlenecks and ensuring the accuracy of test results. Helm v3 removed the server-side Tiller component and added features such as JSON Schema validation of chart values, making application management simpler and more secure for teams.

Expert insights underscore the value of package managers such as Helm in streamlining Kubernetes management. Dan Garfield, VP of Open Source, highlights the importance of enforcing consistent values with a schema file, which helps maintain application integrity during testing. Helm 3 charts can ship a values.schema.json file for this purpose. This practice keeps configurations stable and reduces the likelihood of errors that could compromise the outcome of performance tests.

Case studies show that organizations using Helm have gained flexibility, automation, and reliability in their Kubernetes testing operations. By adopting Helm, these teams have optimized their deployment workflows for performance tests, resulting in better testing outcomes.

Looking toward 2025, Helm continues to evolve, with updates aimed at streamlining deployment procedures. These updates let developers concentrate on refining their software rather than navigating intricate configurations. Real-world uses of Helm charts for optimization show the tangible benefits of this tool within the container orchestration landscape.

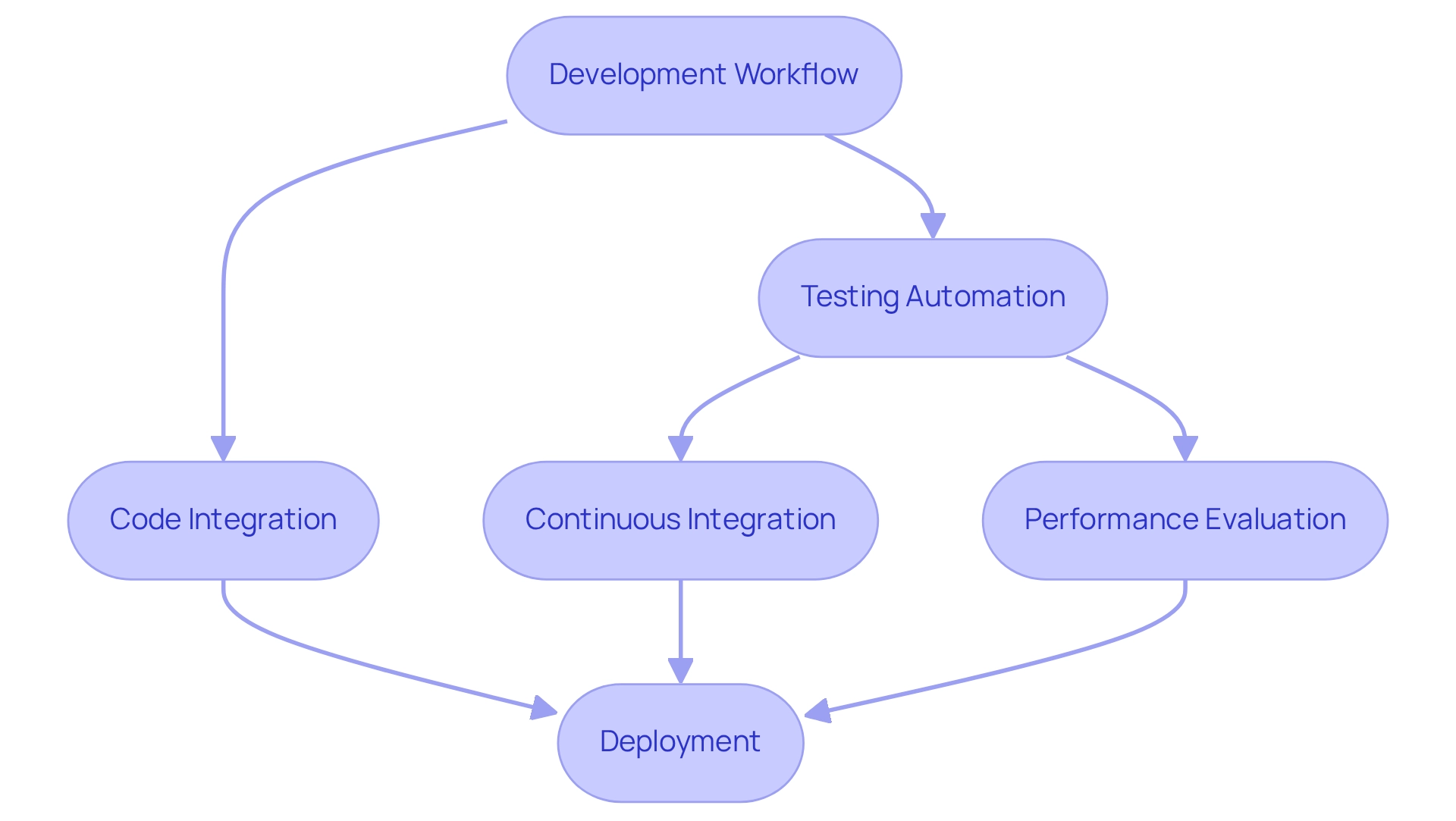

Skaffold: Automate Kubernetes Development and Performance Testing

In the ever-evolving landscape of software development, coding challenges can often impede progress. Developers frequently grapple with the complexity of building, testing, and deploying software on Kubernetes. Skaffold, a free and open-source command-line tool from Google, streamlines these processes. By automating the development workflow, it lets developers concentrate on coding while testing is built into the development cycle. This automation accelerates development and keeps quality a top priority, ultimately leading to better applications.
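At the heart of a Skaffold-style dev loop is a simple cycle: detect changed source files, rebuild the affected image, and redeploy. The sketch below shows only the change-detection step, comparing two snapshots of file modification times. It is a simplified illustration of the idea, not how Skaffold itself is implemented, and the `snapshot` and `changed_paths` names are hypothetical.

```python
import os


def snapshot(root):
    """Map every file under `root` to its last-modification time."""
    mtimes = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtimes[path] = os.stat(path).st_mtime
            except FileNotFoundError:
                continue  # file vanished between listing and stat
    return mtimes


def changed_paths(before, after):
    """Files that were added, removed, or modified between two snapshots."""
    added = after.keys() - before.keys()
    removed = before.keys() - after.keys()
    modified = {p for p in before.keys() & after.keys() if before[p] != after[p]}
    return added | removed | modified
```

A watcher would call `snapshot()` periodically and trigger a rebuild and redeploy whenever `changed_paths()` returns a non-empty set. This is the polling approach, which trades a little latency for portability compared with filesystem notifications.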

Real-world examples underscore the effectiveness of Skaffold in enhancing development cycles. Teams utilizing Skaffold have reported a significant reduction in deployment times, enabling quicker iterations and more comprehensive evaluations. Additionally, the tool’s automation capabilities facilitate continuous integration and delivery, which are crucial for maintaining quality standards in dynamic environments.

Reported figures suggest that organizations using automation tools like Skaffold see up to a 30% increase in development efficiency, which highlights the importance of integrating testing early in the development process. Sandra Svanidzaitė, PhD, CBAP, in a summary of user stories in agile software development, likewise stresses the value of incorporating user feedback to improve software functionality.

Furthermore, with Kodezi's AI-driven automated code debugging, developers can quickly identify and resolve issues in their codebase. This helps ensure that their software performs well and adheres to current security best practices and coding standards. Such rapid problem-solving is vital for maintaining high-quality software in the fast-paced world of Kubernetes development.

As the realm of container orchestration development continues to evolve, the significance of automation tools like Skaffold becomes increasingly pronounced. By automating quality testing and leveraging advanced debugging features, developers can ensure their software meets the highest standards of excellence and efficiency. This, in turn, fosters innovation and productivity within their workflows.

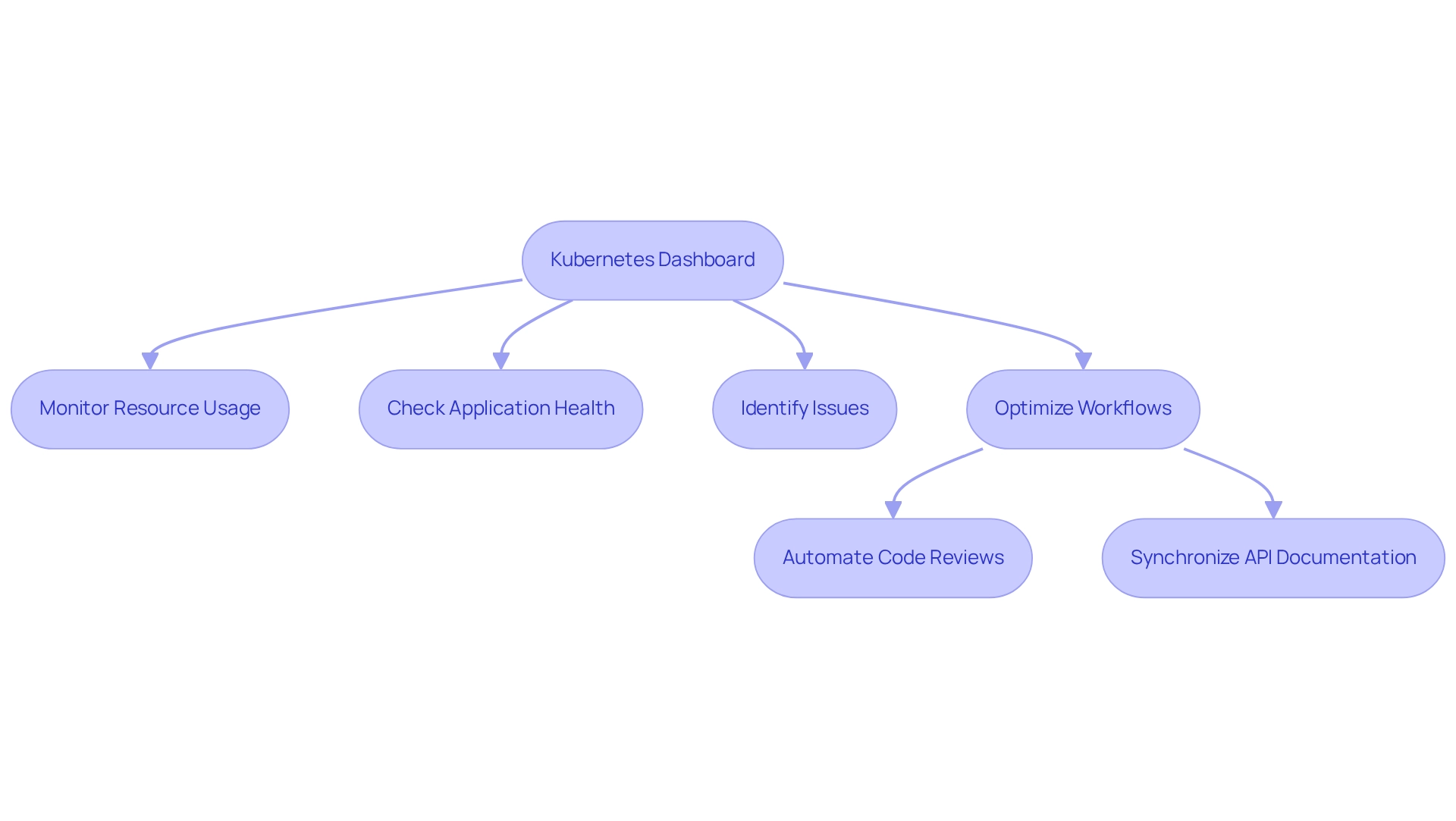

Kubernetes Dashboard: Visualize and Monitor Cluster Performance

The Kubernetes Dashboard is a web-based user interface that lets developers manage applications running in the cluster while closely monitoring their performance. It offers insight into resource usage, application health, and other critical metrics, allowing teams to identify and resolve issues quickly. Using the Dashboard alongside Kodezi's developer tools, including the Kodezi CLI, can help developers understand cluster efficiency and optimize their workflows. Kodezi automates code reviews and keeps API documentation synchronized with code changes, which supports informed decisions about resource allocation and optimization strategies.

Tracking the percentage of unavailable pods is crucial for maintaining service health, which underscores the Dashboard's role in proactive operations management. Industry leaders emphasize the importance of visualization in management, with Coralogix stating, "Full-stack observability for 70% less 24/7 support, no extra cost," highlighting the value of effective visualization tools in improving operational efficiency and response times.
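The unavailable-pod percentage can be derived from a Deployment object: `spec.replicas` gives the desired count (defaulting to 1), and `status.availableReplicas` gives how many pods are available. The minimal sketch below assumes the object has already been fetched as a dict, for example from `kubectl get deployment my-app -o json`, where `my-app` is a hypothetical name. The sample values are made up.

```python
def unavailable_percentage(deployment):
    """Percentage of a Deployment's desired pods that are not yet available."""
    desired = deployment.get("spec", {}).get("replicas", 1)  # Kubernetes default is 1
    # availableReplicas is omitted from status when it is zero.
    available = deployment.get("status", {}).get("availableReplicas", 0)
    if desired == 0:
        return 0.0
    # During a rolling update, surge pods can briefly push available above desired.
    unavailable = max(desired - available, 0)
    return 100.0 * unavailable / desired


if __name__ == "__main__":
    sample = {"spec": {"replicas": 10}, "status": {"availableReplicas": 8}}
    print(unavailable_percentage(sample))  # 20.0
```

Sampling this value repeatedly during a load test shows whether pods drop out of service as pressure increases, which is the same signal the Dashboard surfaces visually.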

Real-world examples demonstrate how teams have harnessed the Dashboard for resource optimization, achieving significant improvements in application efficiency and resource allocation. For instance, Kodezi's case study on seamless code translation showcases how developers can effortlessly switch between different programming frameworks and languages, optimizing their resource management without extensive rewriting. Furthermore, with the rise of advanced visualization tools for container orchestration in 2025, the Dashboard remains a fundamental resource for developers seeking to enhance their clusters effectively. Case studies reveal that organizations utilizing the Dashboard, along with Kodezi's automation features, have successfully improved their cluster monitoring, leading to better resource management and overall operational success.

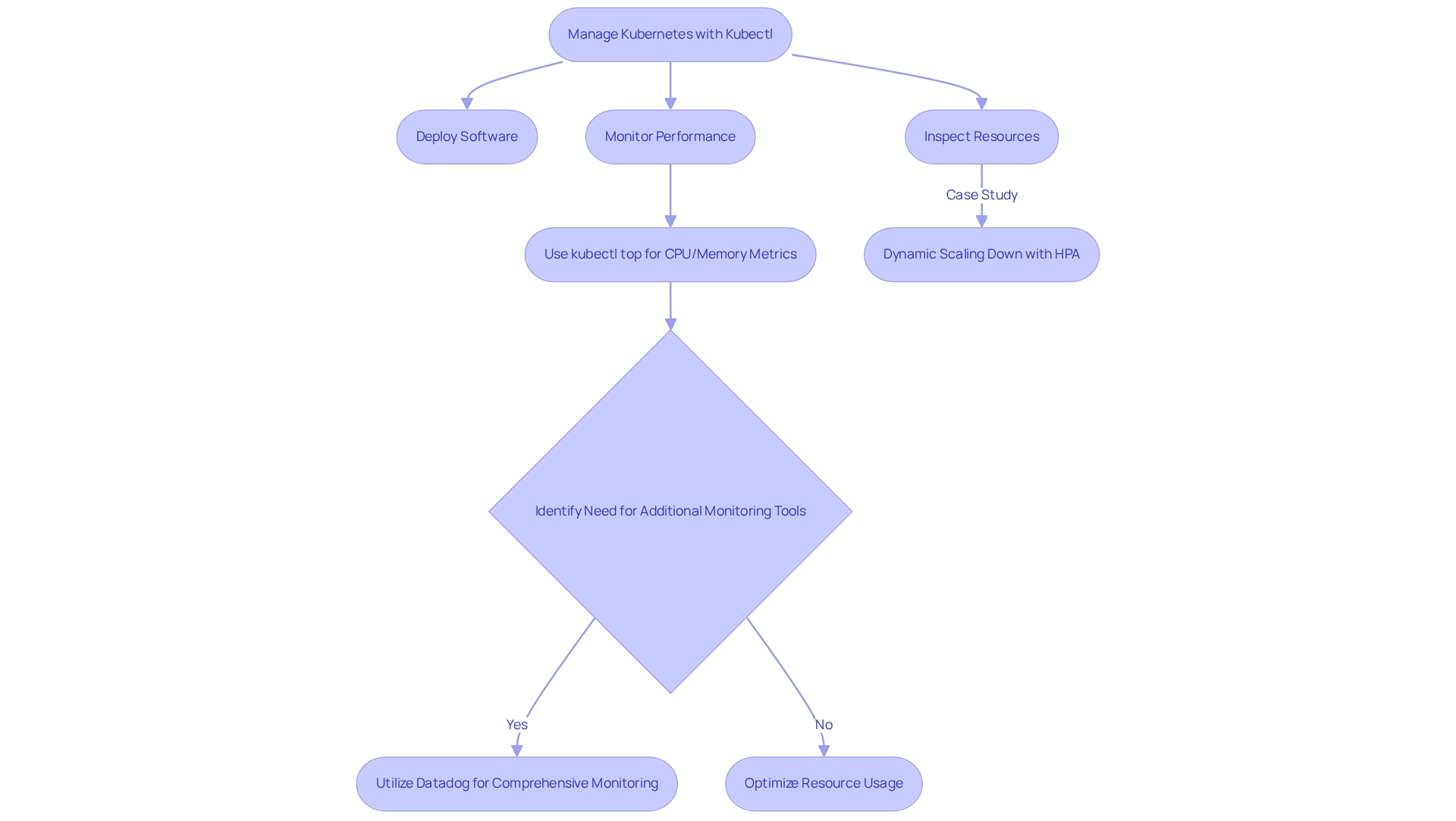

Kubectl: Command-Line Tool for Effective Kubernetes Performance Testing

Managing container orchestration clusters presents significant challenges for developers. Kubectl serves as the primary command-line interface that addresses these challenges, enabling efficient software deployment, resource inspection, and cluster operation oversight. Its versatility is particularly beneficial for testing efficiency, allowing for quick command execution and automation of various testing processes. By utilizing Kubectl, developers can effectively manage software efficiency and resources, ensuring their container orchestration environments are finely tuned for optimal performance.

However, kubectl top has limits. It relies on the Metrics Server being installed in the cluster and reports only CPU and memory usage, with no insight into network traffic, disk I/O, or application-level performance. This limitation highlights the need for more comprehensive monitoring solutions. As Datadog states, "the next and final segment of this series explains how to utilize Datadog for overseeing container orchestration clusters, and demonstrates how you can begin gaining insight into every aspect of your containerized environment in minutes."
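Because `kubectl top` is limited to CPU and memory, teams often script around its output during tests. The sketch below parses the tabular output of `kubectl top pod` into numbers. CPU appears in millicores such as `250m`, and memory uses binary suffixes such as `128Mi`. It assumes the single-namespace format (no NAMESPACE column), the sample text is made-up illustrative output, and only the quantity suffixes listed in the code are handled.

```python
# Multipliers for the Kubernetes quantity suffixes this sketch supports.
SUFFIXES = {
    "m": 1e-3,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30,
    "k": 1e3, "M": 1e6, "G": 1e9,
}


def parse_quantity(text):
    """Convert a quantity string such as '250m' or '128Mi' to a float."""
    # Check longer suffixes first so 'Mi' is never mistaken for 'M'.
    for suffix in sorted(SUFFIXES, key=len, reverse=True):
        if text.endswith(suffix):
            return float(text[: -len(suffix)]) * SUFFIXES[suffix]
    return float(text)


def parse_top_pods(output):
    """Return {pod_name: (cpu_cores, memory_bytes)} from `kubectl top pod` text."""
    usage = {}
    for line in output.strip().splitlines():
        fields = line.split()
        if not fields or fields[0] == "NAME":
            continue  # skip blank lines and the header row
        name, cpu, memory = fields[:3]
        usage[name] = (parse_quantity(cpu), parse_quantity(memory))
    return usage


# Made-up sample output for illustration.
SAMPLE = """\
NAME                     CPU(cores)   MEMORY(bytes)
web-5d9c7b8f4-2xk7p      250m         128Mi
worker-6f8d9c7b5-q9z4r   1200m        1Gi
"""

if __name__ == "__main__":
    for pod, (cpu, mem) in parse_top_pods(SAMPLE).items():
        print(f"{pod}: {cpu:.2f} cores, {mem / 2**20:.0f} MiB")
```

Collecting these readings at intervals gives a rough CPU and memory timeline for a test run. Network and disk metrics still require a fuller monitoring stack.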

A case study on dynamic scaling down with the Horizontal Pod Autoscaler (HPA) shows how it can reduce the number of pods as load falls, matching resource usage to application demand. This underscores the role of command-line tools like kubectl, which is used to create and inspect such autoscalers, in managing efficiency and performance during Kubernetes performance tests. It also shows the need for additional monitoring tools to gain a complete picture of cluster performance.

Conclusion

In the evolving landscape of Kubernetes performance optimization, developers face significant coding challenges. Kodezi emerges as a pivotal tool, offering an automated approach to OpenAPI specification generation that enhances API documentation and streamlines performance testing. This capability allows teams to concentrate on building high-quality applications while ensuring that performance tests accurately reflect actual API behavior.

Furthermore, the integration of tools such as Kube-burner, ClusterLoader2, and Prometheus empowers developers to conduct thorough performance assessments. Kube-burner's ability to simulate real-world scenarios and identify bottlenecks is complemented by ClusterLoader2's customizable load testing capabilities. In addition, Prometheus, paired with Grafana, provides real-time metrics essential for proactive resource management and performance monitoring. Collectively, these tools enable developers to optimize their Kubernetes clusters effectively.

As the demand for high-performance applications continues to rise, leveraging frameworks like KubeEdge and automation solutions such as Skaffold becomes increasingly important. These resources not only enhance application performance but also improve developer productivity by simplifying workflows and enabling rapid iterations. The Kubernetes Dashboard and Kubectl command-line interface play crucial roles in visualizing and managing resource allocation, further supporting the overall goal of optimizing application performance.

In conclusion, the landscape of Kubernetes development is evolving rapidly, and staying ahead requires a strategic approach to performance testing and optimization. By embracing tools like Kodezi and its complementary resources, developers can enhance their capabilities, streamline their processes, and ultimately deliver robust applications that meet the high standards of today’s digital interactions. The journey toward Kubernetes excellence is not just about adopting new technologies; it’s about fostering an environment where innovation and efficiency thrive.

Frequently Asked Questions

What is Kodezi and how does it help with API documentation?

Kodezi is an OpenAPI Specification generator that automates the process of managing API documentation. It allows developers to quickly create OpenAPI 3.0 specifications from their codebase, ensuring that evaluations accurately reflect actual API behavior.

What features does Kodezi offer for API validation and collaboration?

Kodezi enables users to generate and host a Swagger UI site, which facilitates seamless validation of API endpoints and fosters collaboration among team members.

What are the benefits of using Kodezi for API development?

Kodezi saves valuable time and upholds high standards in API development, which supports effective evaluations in container orchestration environments, particularly when performance-testing services on Kubernetes.

How does Kube-burner assist in container orchestration evaluations?

Kube-burner is an open-source utility that helps developers create, remove, and modify Kubernetes resources at large scale, enabling comprehensive evaluations of cluster behavior through performance and scale tests.

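What is the purpose of kubernetes/perf-tests?

kubernetes/perf-tests is the Kubernetes project's repository of performance and scalability testing tools, including ClusterLoader2. Tests built with these tools simulate real-world scenarios to identify bottlenecks and optimize resource allocation, helping ensure systems can handle expected traffic loads efficiently.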

Can you provide an example of how Kube-burner works?

A related example is the Horizontal Pod Autoscaler (HPA), a Kubernetes feature that automatically adjusts the number of pods based on CPU usage metrics, for instance scaling a PHP application when CPU usage exceeds a set threshold. Scale tests with tools like Kube-burner can verify that this behavior holds under load.

What is ClusterLoader2 and how does it contribute to Kubernetes evaluations?

ClusterLoader2 is a tool designed to assess the scalability and efficiency of Kubernetes clusters. It allows teams to define custom load tests via YAML configurations, providing detailed metrics that inform resource allocation and scaling strategies.

How can developers start using ClusterLoader2?

Developers can begin using ClusterLoader2 by referring to the official documentation and community forums for guidance on setting up initial load tests. Engaging with the Special Interest Group (SIG-Scalability) for support and insights is also encouraged.