Introduction

Code optimization is a crucial aspect of software development that can greatly impact efficiency and productivity. It involves various techniques, such as profiling, benchmarking, and selecting the right data types and structures, to enhance code performance. By optimizing algorithms, minimizing redundant operations, utilizing loops and iterations effectively, and optimizing cache usage and memory management, developers can significantly improve code execution and resource utilization.

Additionally, leveraging built-in functions and libraries, reducing network latency, employing asynchronous programming, and regularly refactoring and maintaining code are key strategies for achieving optimal results. Testing and validating optimizations are equally important to ensure that enhancements do not introduce new complications. In this article, we will explore the importance of code optimization and delve into best practices for achieving maximum efficiency and productivity in software development.

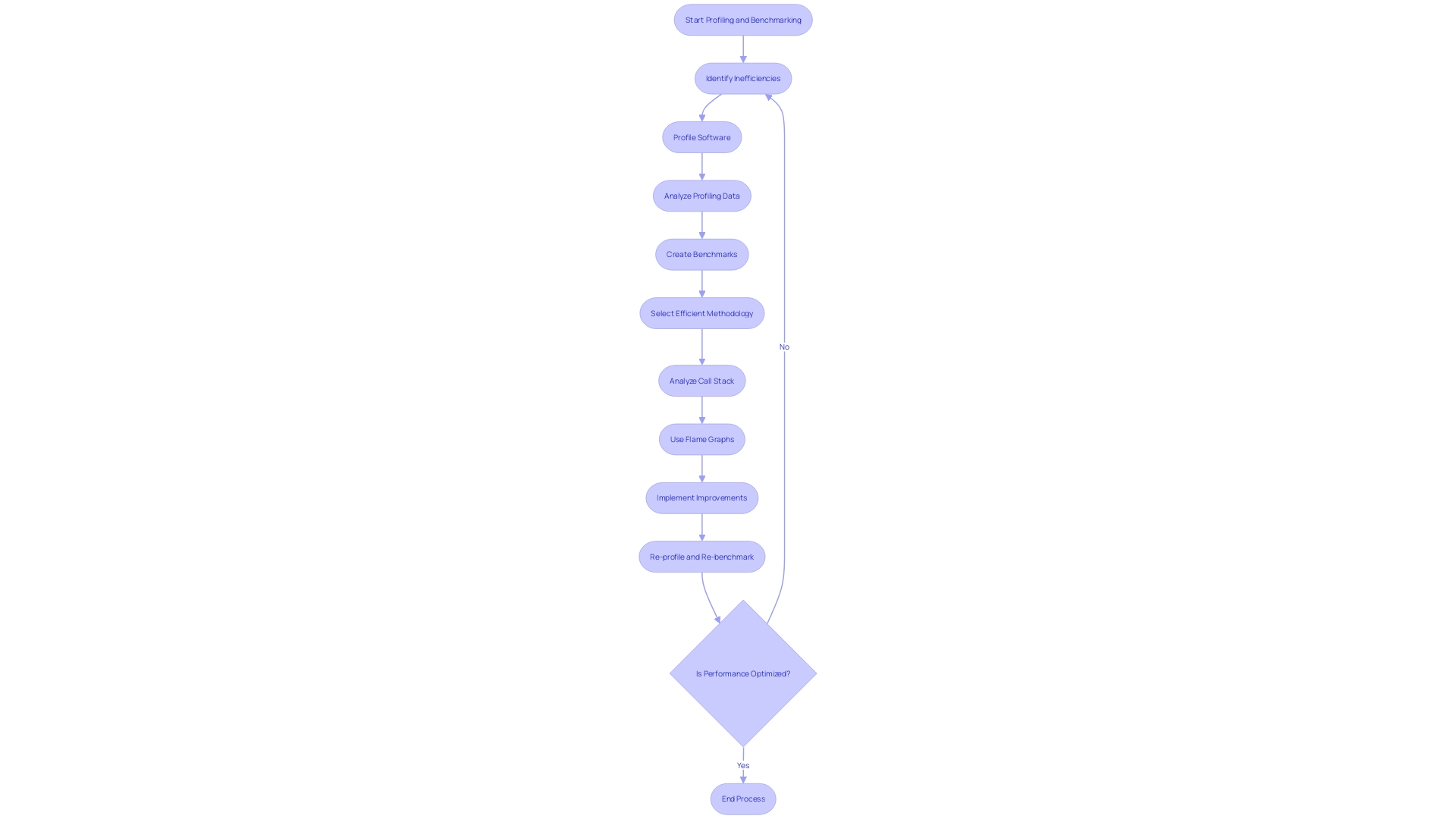

The Importance of Profiling and Benchmarking

Understanding and improving how a program executes is an intricate process that relies on both profiling and benchmarking. Profiling is like a thorough health check-up for your program: it identifies inefficiencies and pinpoints the areas that would benefit most from optimization. For instance, Power Home Remodeling used profiling tools like Stackprof within their Ruby on Rails architecture to scrutinize their test suite, resulting in improved factory efficiency and API interactions.
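
As a rough illustration of what a profiling run looks like, here is a minimal sketch using Python's standard `cProfile` and `pstats` modules. The function `slow_sum_of_squares` and the helper `profile_call` are hypothetical names made up for this example; they are not taken from any project mentioned above.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Deliberately naive: builds a full list before summing it."""
    squares = [i * i for i in range(n)]
    return sum(squares)


def profile_call(func, *args):
    """Run func under cProfile and return (result, report_text)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and keep only the five most expensive entries.
    stats.sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()


result, report = profile_call(slow_sum_of_squares, 100_000)
print(report)
```

The report lists how often each function was called and how much time it accounted for, which is usually enough to decide where to look first.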

Benchmarking complements profiling by measuring the execution speed of specific code segments, providing a yardstick for comparing alternatives. It is about assessing different approaches and selecting the most time-efficient one. OpenRCT2, an open-source project, exemplifies the benefits of benchmarking: the project employed SIMD (Single Instruction Multiple Data) to speed up complex tasks and demonstrated a noteworthy improvement in efficiency.
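
A small benchmark along these lines can be sketched with the standard `timeit` module. The two string-building functions below are hypothetical stand-ins for "two approaches to the same task"; actual timings depend on the machine and the Python version, so none are shown here.

```python
import timeit


def concat_with_plus(words):
    """Build the result by repeated string concatenation."""
    out = ""
    for word in words:
        out += word
    return out


def concat_with_join(words):
    """Build the result with a single str.join call."""
    return "".join(words)


words = ["x"] * 10_000

# Both candidates must agree before their speed is worth comparing.
assert concat_with_plus(words) == concat_with_join(words)

CALLS_PER_RUN = 50
for fn in (concat_with_plus, concat_with_join):
    # Take the best of several runs to reduce noise from other processes.
    best = min(timeit.repeat(lambda: fn(words), number=CALLS_PER_RUN, repeat=5))
    print(f"{fn.__name__}: {best / CALLS_PER_RUN * 1e6:.1f} us per call")
```

Checking that the candidates return the same result before timing them keeps the comparison honest.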

Visual aids like Flame Graphs offer an additional layer of analysis, showing the call stack and the time each function consumes, which makes bottlenecks stand out at a glance. Differential Flame Graphs take this a step further by contrasting two such graphs, spotlighting any shifts in performance between them.

The importance of thorough performance evaluations in both academic and industrial contexts cannot be overstated. These evaluations delve into the system's inner workings, offering clarity on its behavior and limitations, which is integral for ongoing refinement. As the software industry advances with tools like AI impacting software development, evaluating the quality of the software becomes increasingly crucial, with AI's influence resulting in significant changes in code churn and reuse patterns.

Choosing the Right Data Types and Structures

To improve program execution and solve problems efficiently, selecting appropriate data types and data structures is not just a recommended practice but a crucial factor in optimization. For instance, a well-chosen data type can both reduce memory usage and improve execution speed. Among data structures, using arrays or dictionaries where suitable can be a game-changer for your code's efficiency.
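
The following sketch illustrates both points with Python's standard library only. The variable names are hypothetical, and the exact byte counts printed depend on the platform, so none are claimed here.

```python
import sys
from array import array

ids_list = list(range(100_000))

# Data structure choice: a set answers membership queries in O(1) on average,
# while a list has to scan its elements one by one (O(n)).
ids_set = set(ids_list)
assert (99_999 in ids_list) == (99_999 in ids_set)

# Data type choice: array("i", ...) stores raw C ints contiguously, whereas a
# list stores a pointer per element to a separate Python int object.
ids_typed = array("i", ids_list)

list_bytes = sys.getsizeof(ids_list)    # container only, excludes the int objects
typed_bytes = sys.getsizeof(ids_typed)  # container including the raw values
print(f"list container: {list_bytes} bytes, typed array: {typed_bytes} bytes")
```

Note that `sys.getsizeof` on the list does not count the integer objects it points to, so the real gap in memory use is larger than the printed numbers suggest.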

Consider the improvement achieved in the OpenRCT2 project, a free and open-source remake of RollerCoaster Tycoon 2, where the use of SIMD (Single Instruction Multiple Data) resulted in a significant boost in speed. SIMD applies a single operation to multiple data points concurrently, which highlights the influence of data layout and access patterns on efficiency.

Furthermore, the choice of processing engines and storage systems plays a crucial role in optimizing large-scale data pipelines and minimizing costs. The choice of data structures directly affects the scalability, resource management, and problem-solving abilities of software applications, as emphasized by news sources highlighting the essential nature of these concepts in efficient coding.

Evaluating a system's internal mechanisms and policies in depth can reveal interesting properties of its design and lead to better systems, as industry professionals have pointed out. Access patterns, the nature of data collections, and their complexities are all factors that influence the performance of code, as explained by Micha Gorelick and Ian Ozsvald in 'High Performance Python.'

Ultimately, optimization is not merely a set of steps to follow but a journey of exploration, where each problem may require a unique set of techniques. This method of optimization is a creative investigation that improves not only the framework at hand but also the developer's anticipation for building better structures in the future.

Optimizing Algorithms for Performance

The essence of algorithm optimization in software development cannot be overstated, particularly when addressing the complexities of modern computing environments. From websites to applications operating on a multitude of servers and systems, the need for efficient algorithms that make judicious use of time and computational resources is paramount. Through the strategic application of techniques like memoization, dynamic programming, and divide and conquer methods, developers can significantly enhance the time and space complexity of the algorithms, leading to more rapid execution and diminished resource usage.
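
To make memoization concrete, here is a minimal sketch using `functools.lru_cache` from the standard library. The Fibonacci functions are a textbook illustration chosen for this example, not something drawn from the projects discussed in this article.

```python
from functools import lru_cache


def fib_naive(n):
    """Exponential time: the same subproblems are recomputed many times."""
    if n < 2:
        return n
    return fib_naive(n - 1) + fib_naive(n - 2)


@lru_cache(maxsize=None)
def fib_memo(n):
    """Linear time: each subproblem is computed once and then served from the cache."""
    if n < 2:
        return n
    return fib_memo(n - 1) + fib_memo(n - 2)


print(fib_naive(15))  # small input only; the naive version slows down quickly
print(fib_memo(30))
```

The memoized version trades a small amount of memory for a large reduction in repeated work, which is the usual bargain with this technique.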

Take, for example, the brute-force approach—a naive yet universal solution for many problems. While straightforward, its inefficiency becomes evident when scaling up. A sorting algorithm that operates effortlessly on a small data set may falter on inputs of millions. Thus, it is crucial to possess a formal and mathematical methodology to properly assess and compare algorithms under varying conditions.
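
The same contrast shows up outside of sorting. As a hedged sketch, the two hypothetical functions below answer the question "do any two values add up to a target?", first with a brute-force O(n²) scan over all pairs and then with a single O(n) pass that remembers what it has seen.

```python
def has_pair_brute_force(values, target):
    """Check every pair of positions: O(n^2) comparisons."""
    for i in range(len(values)):
        for j in range(i + 1, len(values)):
            if values[i] + values[j] == target:
                return True
    return False


def has_pair_single_pass(values, target):
    """Remember earlier values in a set: O(n) time on average, O(n) extra space."""
    seen = set()
    for value in values:
        if target - value in seen:
            return True
        seen.add(value)
    return False


print(has_pair_brute_force([3, 8, 11, 4], 15))
print(has_pair_single_pass([3, 8, 11, 4], 15))
```

On a handful of values the two are indistinguishable; on millions of values the pairwise scan performs on the order of n² / 2 comparisons, while the single pass performs about n set lookups.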

In the dynamic field of AI research, advancements like FunSearch demonstrate the power of efficient algorithms. FunSearch, a tool for discovering new functions in mathematical sciences, exemplifies the innovative use of algorithms to push the boundaries of knowledge. Similarly, developers are utilizing AI pair-programming tools like GitHub Copilot, which, by providing code suggestions, have been shown to improve productivity across the board, from beginners to experienced programmers. These tools not only reduce task time and cognitive load but also amplify the quality and enjoyment of the coding experience.

To truly master the art of software development, one must understand how to design, analyze, and optimize algorithms. This proficiency is not only vital for creating scalable and efficient applications but is also a distinguishing factor in coding interviews. As we forge ahead, the role of efficient algorithms in the development process will undoubtedly continue to grow, underscoring the need for developers to refine their algorithmic thinking and optimization skills.

Minimizing Redundant Operations and Computations

When improving performance, getting rid of unnecessary computations is not only about increasing speed; it's about optimizing resource utilization. A prime example of this approach is the meticulous work on modular multiplication optimization, followed by general algorithm enhancements. This methodical process, while executed in a non-linear fashion, is presented systematically for clarity. In a computing environment constrained by a CPU clock rate of 740 kHz and a limited instruction set, where an instruction can take up to 16 clock ticks, efficiency is paramount. We're talking about a setting where you can execute at most about 92,500 instructions per second. In such scenarios, strategies like caching and lazy evaluation become indispensable, as they help to bypass unnecessary calculations, particularly when you can't rely on pre-computed data tables because an arbitrary number of computed digits must be supported. This constraint requires a general solution that avoids any customization for particular results, thereby ensuring that the program remains versatile and effective.
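
A minimal sketch of these two ideas in Python, under the assumption that a stream of modular powers is needed: the hypothetical generator `powers_mod` produces values lazily (nothing is computed until it is requested) and reuses the previous result instead of recomputing each power from scratch. It is an illustration only, not the optimization from the work referred to above.

```python
from itertools import islice


def powers_mod(base, modulus):
    """Lazily yield base**0, base**1, base**2, ... reduced modulo modulus.

    Each value is derived from the previous one with a single modular
    multiplication, so no power is ever recomputed from scratch.
    """
    value = 1 % modulus
    while True:
        yield value
        value = (value * base) % modulus


# Only the five requested values are ever computed.
first_five = list(islice(powers_mod(10, 7), 5))
print(first_five)  # [1, 3, 2, 6, 4]
```

Because the generator is unbounded, it supports an arbitrary number of requested values without a pre-computed table.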

Not only do these optimizations contribute to execution speed, but they also play a critical role in energy conservation. Data centers, powered by servers that sustain our technology infrastructure, are now responsible for over one percent of global power usage. As workloads escalate, so does the number of servers, each adding incrementally to the energy footprint. Through optimization, we can mitigate this impact, as demonstrated by studies showing that optimized source files can significantly reduce power consumption. The complex relationship between computer architecture and energy use highlights the significance of optimization not only for efficiency, but for sustainability as well. In the words of Guillermo Rauch, 'Why waste time, effort, and money on programming that may become obsolete or might never be needed?' By focusing on eliminating redundancy and honing in on essential computations, developers can create software that not only runs faster but also consumes less power, making every instruction count.

Effective Use of Loops and Iterations

Improving loops is a vital element of enhancing program efficiency. Techniques such as loop unrolling can expand loops at compile time, reducing overhead and accelerating execution. Likewise, loop fusion merges several loops that share the same iteration space into one loop, reducing loop overhead and improving cache efficiency. Parallelizing loops across multiple cores can also significantly speed up processing, especially for tasks that can be executed concurrently. It's crucial, however, to maintain a careful balance, ensuring code remains manageable and comprehensible even as efficiency is fine-tuned. Inefficient algorithms can impede performance, especially on large data sets or elaborate tasks. By selecting algorithms with lower computational complexity and employing efficient data structures, developers can streamline execution. For instance, replacing a sorting algorithm with a time complexity of O(n²) with one that runs in O(n log n) can result in significant gains in efficiency. Although languages like Python offer simplicity and readability, they sometimes compromise on raw execution speed. In such cases, understanding and applying these optimization strategies becomes even more critical to maximize efficiency.
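
Loop fusion is easy to show in a few lines. In the sketch below, both hypothetical functions compute the sum and the sum of squares of a sequence. The first uses two loops over the same iteration space, and the second fuses them into one pass.

```python
def stats_two_passes(values):
    """Two separate loops over the same data."""
    total = 0
    for v in values:
        total += v

    total_sq = 0
    for v in values:
        total_sq += v * v

    return total, total_sq


def stats_fused(values):
    """One fused loop: each element is visited once and used for both results."""
    total = 0
    total_sq = 0
    for v in values:
        total += v
        total_sq += v * v
    return total, total_sq


print(stats_two_passes([1, 2, 3]))  # (6, 14)
print(stats_fused([1, 2, 3]))       # (6, 14)
```

In compiled languages an optimizing compiler may perform this transformation itself; in an interpreted language such as Python it generally has to be done by hand, and it is worth measuring whether the fused version is actually faster for the workload in question.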

Cache Optimization and Memory Management

Optimizing cache usage and managing memory effectively are pivotal strategies for improving program performance. Leveraging CPU caches adeptly can slash memory access times, thereby accelerating execution. Programmers can enhance program efficiency by minimizing cache misses and adopting cache-aware structures, in addition to employing strategies like prefetching and optimizing loop nests. Efficient memory management, such as avoiding memory leaks and optimizing memory allocation strategies, contributes to streamlining operations. In dynamic environments like modern data centers, where memory-intensive tasks like memcpy(), memmove(), hashing, and compression are commonplace, optimizing these operations is critical. These tasks, part of the 'data center tax', can monopolize CPU resources better allocated to running applications. Tools like TypeScript 4.9 are aiding developers in creating more efficient, type-safe code, further mitigating resource waste. Implementing full-page caching and object caching, as demonstrated in optimizing WordPress and WooCommerce for large-scale projects, shows notable enhancements in page generation times—a crucial aspect for website efficiency.
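
Object caching, mentioned above, can be sketched as a small time-to-live cache. The class `TTLCache` and its method `get_or_compute` are hypothetical names invented for this example; they do not correspond to any WordPress or WooCommerce API, and a production cache would also need eviction and thread safety.

```python
import time


class TTLCache:
    """Keep computed values for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so that tests can control time
        self._entries = {}       # key -> (expires_at, value)

    def get_or_compute(self, key, compute):
        """Return the cached value for key, calling compute() only on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = compute()
        self._entries[key] = (now + self._ttl, value)
        return value


page_cache = TTLCache(ttl_seconds=60)
html = page_cache.get_or_compute("home", lambda: "<html>rendered page</html>")
print(html)
```

A second call with the same key inside the 60-second window returns the stored string without invoking the rendering function again.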

Utilizing Built-In Functions and Libraries

Harnessing the capabilities of built-in functions and libraries is akin to tapping into a reservoir of expertly crafted tools designed for peak performance. Programming languages come equipped with these powerful features, offering developers pre-optimized solutions that are ready to deploy. By utilizing these powerful functions and libraries, one can bypass the laborious process of crafting customized solutions from the beginning, thus expediting development schedules and improving the effectiveness of the software foundation.

The journey of program optimization is similar to mastering an art form: it's an ongoing process of refinement and discovery. It's crucial to adopt a mindset of continuous measurement and profiling to discern the true impact of any changes made. The pursuit of optimization is relentless, and while initial gains can be significant, diminishing returns often set in. In this regard, developers are encouraged to explore various avenues, as sometimes an entirely different approach can lead to breakthrough improvements in performance.

In Python, for instance, a single line of code can sort a dictionary's items by multiple criteria using the sorted function and a lambda. Such succinct expressions epitomize the elegance and utility of one-liners. The all function stands out as another gem, capable of determining whether every element in an iterable satisfies a particular condition. These streamlined tools not only simplify the coding process but also contribute to the overall efficiency and maintainability of applications.
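
Both built-ins can be shown in a few lines. The `scores` dictionary and the pass mark of 50 are made-up sample data for this sketch.

```python
scores = {"carol": 88, "alice": 92, "bob": 88}

# Sort by score (highest first), breaking ties alphabetically by name.
ranked = sorted(scores.items(), key=lambda item: (-item[1], item[0]))
print(ranked)  # [('alice', 92), ('bob', 88), ('carol', 88)]

# all() stops at the first element that fails the condition.
all_passed = all(score >= 50 for score in scores.values())
print(all_passed)  # True
```

Returning a tuple from the key function is what allows a single `sorted` call to handle several criteria at once.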

As we explore further into the principles of 'Clean Code', it becomes evident that functions have a crucial role in creating software that is not only functional but also graceful and easy to maintain. Properly designed functions can transform the coding landscape, making it more organized and intuitive. It's a skill that serves programmers at every level, providing a pathway to more advanced and resilient applications. When confronted with repetitive lines of software, the development of a function can be a game-changer, providing long-term advantages and recognizing the intricate craft of composing high-quality, optimized software.

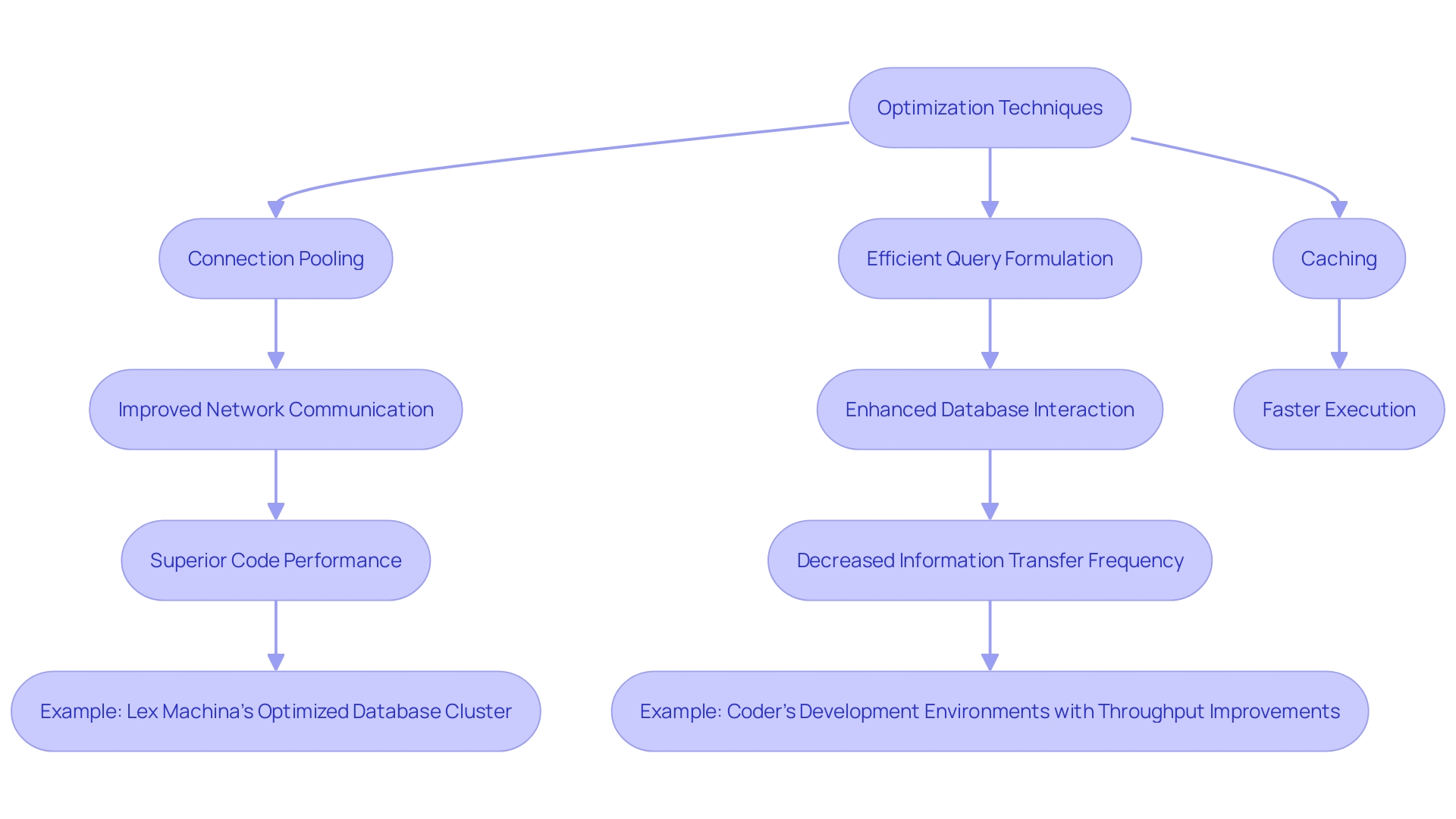

Reducing Network Latency and Optimizing Database Queries

When dealing with intricate interactions between networks or databases, sophisticated approaches like connection pooling, efficient query formulation, and judicious use of caching become crucial. These approaches not only enhance network communication and database interaction but also decrease information transfer and the frequency of network requests, resulting in faster execution and superior code performance. For instance, Lex Machina, which processes vast amounts of legal information, optimized their large Postgres database cluster to handle numerous databases for different stages and customer needs, improving their operational efficiency.
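
One common form of efficient query formulation is replacing many small queries with a single batched one. The sketch below uses Python's built-in `sqlite3` module with an in-memory database. The `users` table and both helper functions are hypothetical, and the example is unrelated to the Postgres setup described above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany(
    "INSERT INTO users (id, name) VALUES (?, ?)",
    [(1, "ada"), (2, "grace"), (3, "linus")],
)


def names_one_by_one(conn, ids):
    """One round trip per id: simple, but the cost grows with len(ids)."""
    return [
        conn.execute("SELECT name FROM users WHERE id = ?", (i,)).fetchone()[0]
        for i in ids
    ]


def names_batched(conn, ids):
    """A single query for all ids, using parameter placeholders (never string-built values)."""
    placeholders = ",".join("?" for _ in ids)
    rows = conn.execute(
        f"SELECT id, name FROM users WHERE id IN ({placeholders})", list(ids)
    ).fetchall()
    by_id = dict(rows)
    return [by_id[i] for i in ids]  # restore the caller's ordering


print(names_one_by_one(conn, [3, 1]))  # ['linus', 'ada']
print(names_batched(conn, [3, 1]))     # ['linus', 'ada']
```

With an in-memory database the difference is negligible, but over a network each avoided round trip saves a full request latency.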

Furthermore, with the arrival of new internet standards like L4S (Low Latency, Low Loss, Scalable Throughput), developers have tools to minimize latency and maintain high throughput without compromising information transfer rates. L4S aims to reduce queue times and rapidly adjust to congestion, ensuring that devices are immediately aware of and responsive to network conditions.

In practice, even applications like a simple Lambda function retrieving NFL stadium data can see gains through optimization. This function, needing less than 10ms per request, exemplifies how even minor enhancements can scale up to handle high transaction volumes effectively.

To further illustrate the impact of optimization, Coder's development environments saw throughput improvements over 5x with their latest release, emphasizing the real-world benefits of these techniques. Therefore, programmers must constantly adapt their methods to enhance performance in the presence of expanding datasets and intricate interactions.

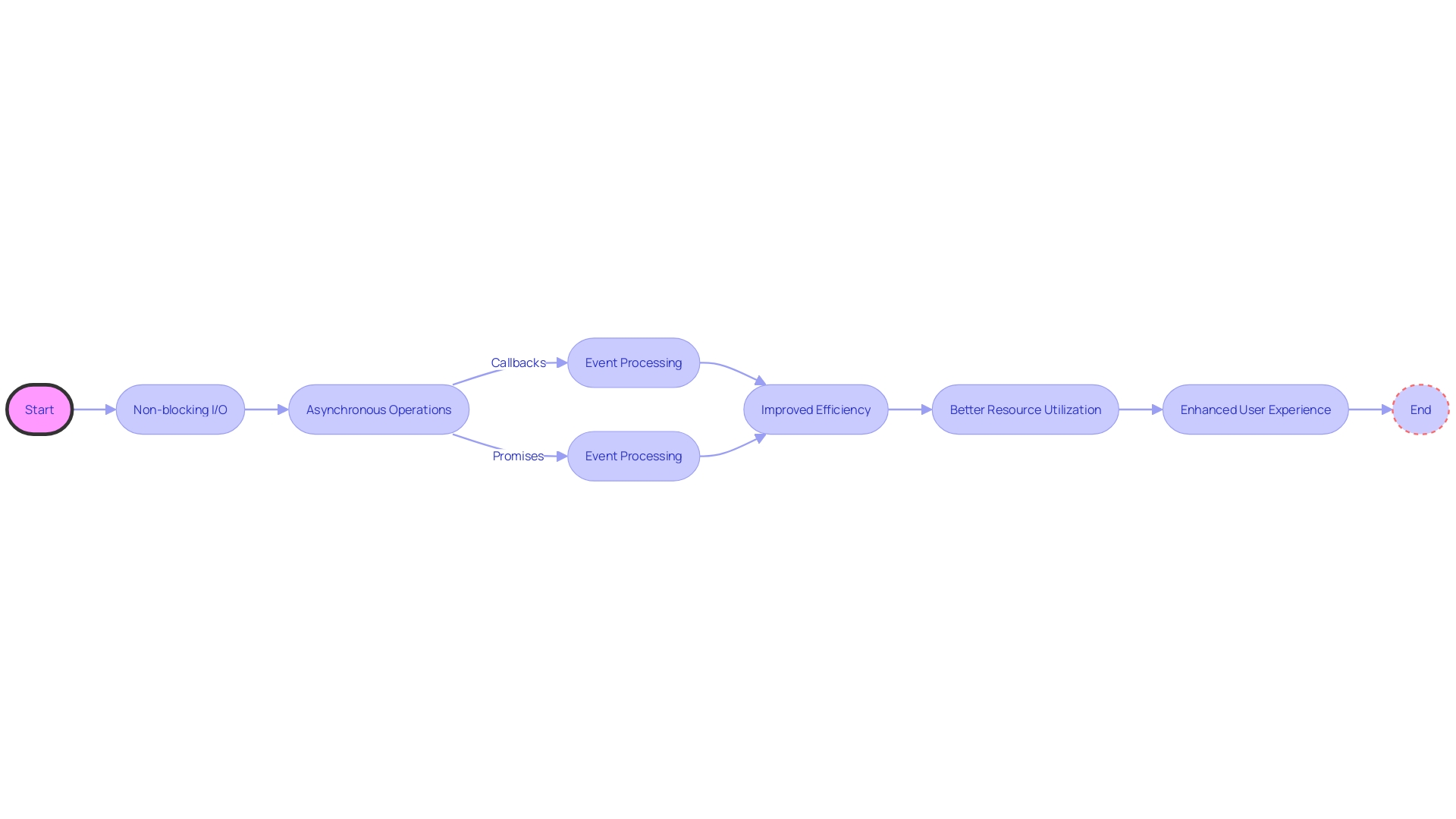

Asynchronous Programming for Improved Responsiveness

Asynchronous programming provides a strong solution for improving program efficiency, especially when working with external services or performing lengthy tasks. By adopting non-blocking I/O and asynchronous operations, a program can make progress on several tasks concurrently, leading to better use of resources. Instead of the usual blocking calls, employing callbacks or promises enables the creation of software that is both highly responsive and efficient. This approach is especially critical in distributed systems that handle vast quantities of data, where the design of data processing has a direct impact on user experience. Using asynchronous methods, events are processed immediately as they arise, without the need for time coordination, which is essential in microservices where swift responses to requests are crucial. The Agile development methodology complements this by emphasizing collaboration, adaptability, and rapid iterations to deliver working software efficiently, a principle that aligns well with the non-linear nature of asynchronous processing.
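
In Python, this style is available through the standard `asyncio` module. The sketch below is a minimal illustration in which `asyncio.sleep` stands in for a slow network call. The coroutine names `fetch` and `main` and the 0.2-second delays are assumptions made for the example.

```python
import asyncio
import time


async def fetch(name, delay):
    """Simulate a non-blocking I/O operation that takes `delay` seconds."""
    await asyncio.sleep(delay)  # yields control so other tasks can run
    return f"{name} done"


async def main():
    start = time.perf_counter()
    # The three waits overlap instead of running one after another.
    results = await asyncio.gather(
        fetch("a", 0.2),
        fetch("b", 0.2),
        fetch("c", 0.2),
    )
    elapsed = time.perf_counter() - start
    return results, elapsed


results, elapsed = asyncio.run(main())
print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Run sequentially, the three calls would wait for the sum of their delays; run with `asyncio.gather`, the total wait is close to the longest single delay.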

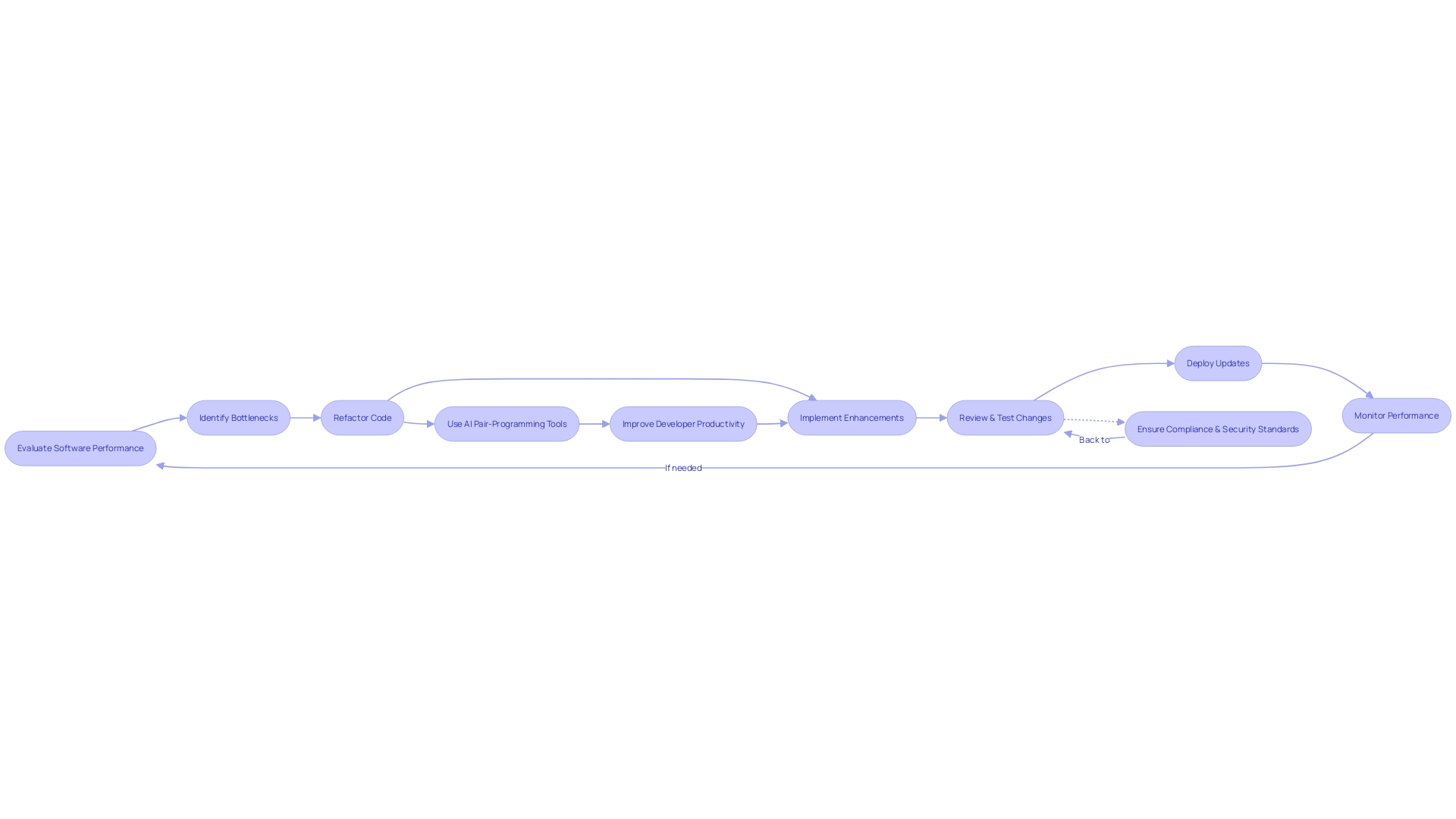

Regular Code Refactoring and Maintenance

Ensuring the efficiency of the program remains intact over time is not just advantageous; it's crucial, particularly in industries like banking where M&T Bank operates. With digital transformation at its peak, banks are fast adopting new technologies, necessitating software that meets the highest security standards and regulatory compliance. M&T Bank, with its rich history and commitment to innovation, has embraced the establishment of Clean Code standards to maintain and enhance software performance.

Refactoring plays a pivotal role in this process. It involves reworking the internal structure of the program without altering its external behavior, with the goal of making it more understandable, maintainable, and efficient. This means simplifying intricate code so that extensive systems are easier to navigate and modify, leading to better readability and organization. Effective refactoring can uncover bottlenecks and remove them, avoiding the accumulation of technical debt that can impede future development endeavors. It's truly a practice that benefits all involved, from the developers who create the software to the end-users who rely on its seamless functionality.
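
A small before-and-after sketch may help. The invoice functions and the 10% member discount are invented for illustration; the point is that the refactored version changes the internal structure while returning exactly the same results.

```python
# Before: the pricing rule is buried inside the loop as a magic number.
def invoice_total_before(prices, is_member):
    total = 0
    for price in prices:
        if is_member:
            total += price - price * 0.1
        else:
            total += price
    return round(total, 2)


# After: the rule has a name and lives in one place.
MEMBER_DISCOUNT = 0.10


def discounted(price, is_member):
    """Apply the member discount to a single price."""
    return price * (1 - MEMBER_DISCOUNT) if is_member else price


def invoice_total_after(prices, is_member):
    return round(sum(discounted(p, is_member) for p in prices), 2)


print(invoice_total_before([10.0, 20.0], True))  # 27.0
print(invoice_total_after([10.0, 20.0], True))   # 27.0
```

Keeping the old function around long enough to compare outputs is a cheap way to confirm that external behavior has not changed.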

According to an industry expert, a thorough performance evaluation offers a comprehensive understanding of a system's behavior, measuring not just the overall behavior but also its internal mechanisms and policies. This insight emphasizes the significance of ongoing assessment and enhancement of software to guarantee it meets quality benchmarks and remains robust in the face of evolving demands.

Statistics also validate the significance of tools like AI pair-programming systems, which have revolutionized the way developers work. By providing suggestions for predictive coding, these tools have been proven to improve all aspects of developer productivity, from reducing task time to enhancing the quality of the code. For instance, GitHub Copilot is cited as a game-changer, particularly benefiting junior developers who experience the most significant productivity boosts.

Ultimately, regular programming refactoring and upkeep are more than just coding best practices; they are strategic imperatives that resonate across industries, driving efficiency, reliability, and security in an era defined by rapid technological advancement.

When to Consider a Complete Code Rewrite

When a software application becomes too complex to improve or sustain, developers might encounter the challenging job of a complete code rewrite. This extensive undertaking is not just about beginning from scratch; it's about integrating all the complex knowledge acquired from the prior framework. As developers embark on such a project, they often encounter unexpected complexities, such as undocumented behaviors or 'easter eggs' that can significantly hinder progress.

The reconstruction of a desktop application for the web, for example, can unveil a myriad of hidden challenges. Dividing a monolithic structure into smaller, manageable elements may appear to be an optimal approach, but it can turn into a long-term endeavor that extends over many years, particularly for enterprise-level setups.

Refactoring software is a delicate process that involves enhancing the internal structure without altering the external functionality. It's crucial for maintaining a codebase that is easy to understand, maintain, and modify. Effective refactoring is supported by comprehensive documentation, which serves as both a guide and a record of the existing system's intricacies.

The Linux Foundation's Management & Best Practices resources emphasize the significance of best practices and standards in open source development. These guidelines can serve as a beacon for developers navigating the complex journey of rewriting software.

Moreover, software development research suggests a shift in the industry's focus towards multi-core processors and architectural enhancements to improve performance, as opposed to relying on increased clock speeds. This change emphasizes the significance of optimizing programming to guarantee it performs efficiently on modern hardware.

The risks associated with modifying code are real, as changes can unintentionally break systems that are already in operation. However, developers can mitigate these risks through careful planning and execution. Refactoring tasks may vary in scope, affecting multiple subsystems or being confined to a single component, but all share the common goal of enhancing the system's overall performance and maintainability.

Lastly, the impact of AI on software development is becoming increasingly significant. Studies on the impact of AI indicate alterations in quality metrics for software, such as churn rate and reuse. As technical leaders look ahead to 2024, understanding and measuring the effects of AI on software quality is becoming crucial for maintaining high standards in software development.

Best Practices for Testing and Validating Optimizations

When optimizing code efficiency, a meticulous testing and validation process is paramount to ensure enhancements do not lead to new complications. Employing techniques like unit testing and performance profiling is crucial to authenticate the efficacy of each optimization. A case in point is the optimization of an automated transformation in Pentaho, a business intelligence suite. The initial workflow consisted of a laborious insertion process into a database table that presented challenges as the volume increased. Through careful testing and validation, such issues are identified and addressed, ensuring the capacity to manage high data volumes effectively.
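
One simple way to validate an optimization is to keep the original implementation as a reference and check that the optimized version agrees with it on many inputs. The sketch below is a hypothetical example unrelated to the Pentaho workflow mentioned above: it compares a quadratic de-duplication routine with a faster one on randomly generated lists. In a real project this check would normally live in the unit test suite.

```python
import random


def dedupe_reference(items):
    """Original version: preserves order, but `x not in out` makes it O(n^2)."""
    out = []
    for x in items:
        if x not in out:
            out.append(x)
    return out


def dedupe_optimized(items):
    """Optimized version: dict keys preserve insertion order (Python 3.7+)."""
    return list(dict.fromkeys(items))


def check_equivalence(trials=200, seed=42):
    """Compare both versions on reproducible random inputs, including empty lists."""
    rng = random.Random(seed)
    for _ in range(trials):
        data = [rng.randint(0, 20) for _ in range(rng.randint(0, 50))]
        assert dedupe_optimized(data) == dedupe_reference(data), data
    return trials


print(f"{check_equivalence()} random cases passed")
```

A fixed seed keeps any failure reproducible, and once equivalence is established, a benchmark can confirm that the optimized version is actually faster.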

Performance testing is critical in assessing a system's speed, scalability, and stability. It involves various tools and techniques to simulate high-load scenarios and identify bottlenecks. As the SWEBOK highlights, testing is key in software engineering, confirming functionality against requirements and detecting defects early on. As software intricacy increases, testing remains an indispensable process in maintaining high-performance standards. Moreover, with new programming languages like Go, Rust, and Zig gaining traction for their efficiency, teams must evaluate the potential benefits against the proficiency of their developers in these languages. Ultimately, ensuring a seamless, fast, and efficient user experience is a decisive factor in a software application's success, and systematic testing is the gateway to achieving such excellence.

Conclusion

In conclusion, code optimization plays a vital role in software development, enhancing efficiency and productivity. Through techniques such as profiling and benchmarking, developers can identify areas for improvement and optimize algorithms for better performance. Selecting the right data types and structures, minimizing redundant operations, and utilizing loops and iterations effectively are essential strategies for code optimization.

Cache optimization and memory management are pivotal in improving code execution, while leveraging built-in functions and libraries can accelerate development timelines and enhance code efficiency. Reducing network latency and optimizing database queries are crucial for faster execution and superior code performance. Asynchronous programming allows for better resource utilization, especially in distributed systems, while regular code refactoring and maintenance ensure long-term performance and reliability.

When considering a complete code rewrite, developers must carefully plan and execute the process, taking into account the complexities and challenges that may arise. Testing and validating optimizations are equally important to ensure that enhancements do not introduce new complications. By employing techniques like unit testing and performance profiling, developers can authenticate the efficacy of optimizations and maintain high-performance standards.

Overall, code optimization is an ongoing journey of refinement and exploration, with the goal of achieving maximum efficiency and productivity in software development. By following best practices and embracing the continuous pursuit of improvement, developers can create high-quality, optimized code that meets the demands of modern computing environments.