Introduction

In today's fast-paced software development landscape, keeping a codebase secure and efficient matters more than ever. Safeguarding code and data spans many areas, from embracing security by design to enforcing strong password management. Access control, error handling and logging, system configuration and patch management, threat modeling, cryptographic practices, input validation and output encoding, authentication and authorization, logging and audit practices, quality assurance and vulnerability management, secret management, source code management, CI/CD pipeline hardening, branch protection policies, access permissions, server-level policies, and continuous monitoring with incident response planning all play a part in building a secure codebase.

By prioritizing these areas and incorporating best practices, developers can better protect the integrity, confidentiality, and availability of their software while reducing security risk.

Security by Design

Embracing security by design is not an idealistic approach; it is a necessary strategy in a world where traditional, bolt-on security methods fall short. Consider what changes when protection is not an afterthought but a core element of the software development process. As Joel asserts, we must change our mindset and give security the priority it deserves, which lets developers build more secure systems.

Apple's new Private Cloud Compute is one example of this shift, with security treated as a priority from the start. The same commitment is reflected in CISA's updated Secure by Design whitepaper, which reaffirms the original key principles and expands its scope to include AI model developers. It stresses that software manufacturers should take responsibility for customer security outcomes by making products secure by default, adopting development practices that prioritize security, and following business practices that promote it.

Google's experience shows that focusing on the developer ecosystem can significantly reduce common classes of vulnerabilities. The key is promoting safe coding practices that prevent bugs from being introduced, recognizing that even the most skilled developers make mistakes when they repeatedly face potentially vulnerable coding patterns.

The DevSecOps mission statement reflects this philosophy, aiming to empower engineering teams to secure their digital products with the right tools and automation. This shift toward designing security in is not only about improving individual products; it also involves examining industry patterns to identify which companies are making real progress in their protective measures.

Closed-source applications are sometimes credited with added protection because their source code is hidden from unauthorized parties. Obscurity alone does not remove vulnerabilities, however, and it does not reduce the need for a secure-by-design strategy: weaknesses in how information is protected, whether or not a program's source is open, can cause severe system failures and unauthorized data disclosure that affects customers on a large scale.

As these trends show, the focus is gradually shifting from merely detecting defects to eliminating entire classes of similar vulnerabilities. This proactive approach is the foundation of a secure development lifecycle (SDLC) framework, which integrates security considerations from the ground up rather than patching them in as an afterthought.

Password Management

A strong password strategy is essential for protecting a program and its users. Beyond traditional methods, consider practices that help passwords withstand modern attacks: requiring strong passwords, storing them with appropriate cryptographic protection, and enforcing stringent password policies.

Historical data breaches, such as those experienced by LinkedIn in 2012, Yahoo in 2012-13, and GoDaddy in 2021, underscore the perils of weak password practices. These breaches vividly demonstrate the vital role passwords play in securing digital identities. For software developers, safeguarding user data hinges on robust identity verification mechanisms, of which passwords are a crucial component.

To protect stored passwords, hash them rather than storing them in plain text. A cryptographic hash converts a password into a fixed-size value that cannot feasibly be reversed; for passwords, use a deliberately slow, salted algorithm such as Argon2, bcrypt, scrypt, or PBKDF2 so that stolen hashes are expensive to crack. This one-way approach is much safer than two-way encryption, which anyone who obtains the key can reverse.
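
As a minimal sketch of the salted, slow hashing described above, the Python below uses PBKDF2-HMAC-SHA256 from the standard library (`hashlib.pbkdf2_hmac`), chosen only because it needs no third-party packages; Argon2id or bcrypt are common alternatives available through external libraries. The function names, the storage format, and the iteration count are assumptions for illustration, not a prescribed scheme.

```python
import hashlib
import hmac
import secrets

# Assumed work factor; check current guidance (e.g. the OWASP Password
# Storage Cheat Sheet) and your hardware before choosing a value.
ITERATIONS = 600_000
SALT_BYTES = 16


def hash_password(password: str) -> str:
    """Return a self-describing record: algorithm$iterations$salt_hex$hash_hex."""
    salt = secrets.token_bytes(SALT_BYTES)  # fresh random salt for every password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return f"pbkdf2_sha256${ITERATIONS}${salt.hex()}${digest.hex()}"


def verify_password(password: str, stored: str) -> bool:
    """Recompute the hash with the stored salt and compare in constant time."""
    algorithm, iterations, salt_hex, hash_hex = stored.split("$")
    if algorithm != "pbkdf2_sha256":
        return False
    candidate = hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), bytes.fromhex(salt_hex), int(iterations)
    )
    # compare_digest avoids leaking where the first mismatching byte is.
    return hmac.compare_digest(candidate, bytes.fromhex(hash_hex))
```

Storing the algorithm and iteration count alongside the salt makes it possible to raise the work factor later and rehash passwords as users log in.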

Furthermore, the password challenge faced by British Telecom (BT) emphasizes the trade-off between user experience and safeguarding. They navigated the complexities of credential management and moved towards a passwordless system, indicating a change in the protection paradigm.

High-profile incidents like the SolarWinds attack have only heightened the urgency for dynamic credential management. The challenge lies in reconciling the often conflicting goals of enhancing protection without impeding the user experience.

Recent statistics reveal an alarming trend: up to 90% of the code running in production systems may originate from open-source packages, as per the Python Package Index (PyPI). While these packages offer invaluable resources, they also pose potential security risks, making it imperative to keep them up-to-date and closely monitored.

In light of these developments, developers are encouraged to continuously educate themselves on the evolving landscape of cybersecurity threats and defenses. By embracing a culture of ongoing learning and implementing state-of-the-art password management practices, software teams can ensure the integrity and trustworthiness of their codebases.

Access Control

Implementing effective access control is a cornerstone of securing a codebase. Role-based access control (RBAC) is a popular method that focuses on assigning permissions to roles rather than individuals. These roles correspond to various job functions within an organization and are designed to reflect the responsibilities and necessary access each role entails. Notably, roles are distinct from team structures; for instance, a team may have managers and their reports who require different levels of access. The flexibility of RBAC allows for roles to be assigned temporarily, accommodating scenarios such as cross-functional projects or on-call duties.
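
The RBAC model above can be sketched in a few lines of Python. The role names and permission strings are hypothetical; the point is that permissions attach to roles, and a user's effective permissions are the union of the roles they currently hold, so a temporary assignment such as on-call duty is simply a role that is added and later removed.

```python
from dataclasses import dataclass, field

# Hypothetical role definitions: permissions belong to roles, never to individuals.
ROLE_PERMISSIONS: dict[str, set[str]] = {
    "developer": {"repo:read", "repo:write"},
    "release_manager": {"repo:read", "release:publish"},
    "on_call": {"prod:logs:read", "prod:restart"},
}


@dataclass
class User:
    name: str
    roles: set[str] = field(default_factory=set)


def permissions_for(user: User) -> set[str]:
    """Union of the permissions granted by every role the user currently holds."""
    granted: set[str] = set()
    for role in user.roles:
        granted |= ROLE_PERMISSIONS.get(role, set())  # unknown roles grant nothing
    return granted


def is_allowed(user: User, permission: str) -> bool:
    return permission in permissions_for(user)
```

For example, adding `"on_call"` to a developer's roles for a rotation grants `prod:restart`, and discarding it afterwards revokes that access without touching any per-user settings.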

Attribute-based access control (ABAC), on the other hand, operates on a more granular level, where access decisions are made based on attributes of users, resources, or the environment. Imagine an amusement park with rides that have different height requirements: similarly, ABAC uses attributes like user status or resource classification to determine access permissions.
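
An ABAC check, by contrast, evaluates attributes of the subject, the resource, and the environment at decision time, much like checking a rider's height at the gate. In this hypothetical Python sketch, the attributes (department, numeric clearance and classification levels, employment status, and a business-hours window) are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Subject:
    department: str
    clearance: int          # hypothetical numeric clearance level
    employment_status: str  # e.g. "active" or "suspended"


@dataclass(frozen=True)
class Resource:
    owner_department: str
    classification: int     # minimum clearance required to read


def can_read(subject: Subject, resource: Resource, now: time) -> bool:
    """Grant access only when every attribute-based condition holds."""
    return (
        subject.employment_status == "active"
        and subject.clearance >= resource.classification
        and subject.department == resource.owner_department
        and time(8, 0) <= now <= time(18, 0)  # environment attribute: business hours
    )
```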

Both RBAC and ABAC are integral to maintaining a secure and efficient workflow. For instance, Discord's exploration of access control systems highlighted the need for a tool that prioritized security and the principle of least privilege, while also being intuitive and self-serve to facilitate adoption within the organization.

The requirement for strong access control goes beyond internal systems to customer-facing applications. As emphasized by industry professionals, the responsibility for ensuring accessibility of programs lies with the customer, and it is crucial to include such requirements in contracts.

From a broader industry perspective, consultants emphasize that access control is not only a technical challenge but also an organizational strategy to protect assets. Effective systems must be underpinned by solid policy, user training, and regular auditing, ensuring they remain effective over time. Recent research by ASIS has delved into the current state of access control, offering insights into best practices and benchmarks within the field.

The evolution from physical locks to sophisticated digital access systems exemplifies the ongoing transformation in security practices. As the need for online access grows, so does the complexity of ensuring that users are who they claim to be. This underscores the importance of rigorous access control mechanisms to safeguard sensitive information and systems in an increasingly interconnected world.

Error Handling and Logging

Strong error management is a foundation of reliable application development, impacting not just the system's stability but also its resilience to malicious exploitation. Effective error handling transcends the local scope of a class or function, extending to the system's periphery, where it plays a pivotal role in safeguarding the integrity of the entire application. The evolution of error management strategies has shown us that while early programming languages like C and C++ relied on return codes to signal success or failure, this method often led to a tangled web of checks that obfuscated the true flow of control and increased the risk of overlooking critical errors.

Recognizing these shortcomings, the programming community embraced the concept of exceptions—a paradigm shift aimed at centralizing error handling and streamlining the control flow. Exceptions delegate the responsibility of managing errors to designated handlers, thereby promoting cleaner code and reducing the likelihood of unaddressed errors that could compromise system reliability.
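
A minimal sketch of centralized exception handling, assuming a hypothetical request dispatcher rather than any particular web framework: expected client errors produce a safe message, while unexpected exceptions are logged in full on the server and the caller receives only an opaque incident ID, so internal details such as file paths or queries are not disclosed.

```python
import logging
import uuid
from typing import Any, Callable

logger = logging.getLogger("app")


class ValidationError(Exception):
    """Raised for bad client input; its message is safe to show the caller."""


def handle_request(handler: Callable[[Any], Any], payload: Any) -> dict:
    """Run a handler and translate exceptions into safe responses in one place."""
    try:
        return {"status": 200, "body": handler(payload)}
    except ValidationError as exc:
        # Expected, client-caused error: the message is deliberately user-facing.
        return {"status": 400, "body": {"error": str(exc)}}
    except Exception:
        # Unexpected error: keep the full traceback server-side under a correlation
        # ID, and return only that ID so internal details never reach the client.
        incident_id = uuid.uuid4().hex
        logger.exception("unhandled error, incident_id=%s", incident_id)
        return {"status": 500, "body": {"error": "internal error", "incident_id": incident_id}}
```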

Moreover, how we design programs for modularity, with loose coupling and high cohesion, directly affects how easily errors can be contained and understood. As developers, we must be acutely aware of the broader context in which our code operates. This includes understanding the volatile nature of requirements and how they can influence error management strategies.

Critically, performance is also a key consideration in error management. The approaches we adopt must not only be secure but also efficient, ensuring that the system's responsiveness is not adversely affected. As one industry expert pointedly notes, focusing on output quality rather than quantity is essential for long-term productivity in development. By concentrating on robust and reliable code, developers can avoid the pitfalls of recurring errors that drain time and resources.

In this light, error handling and logging are about more than catching and recording issues; they are about crafting a resilient codebase that can withstand the complexities of modern software environments. These practices are not a byproduct of development; they are a fundamental part of creating safe, efficient systems that stand the test of time and usage.

System Configuration and Patch Management

To fortify your systems against security threats, meticulous attention to system configurations and timely application of patches are paramount. Effective configuration management ensures that systems operate in a state that is safe and consistent with organizational standards. With the rapid technological advancements and the shift towards digital banking, as seen in M&T Bank's approach, it's clear that maintaining secure and compliant code is not just a technical necessity but also a business imperative to prevent financial losses and reputational harm.

A patch is a piece of code that updates a program or its supporting data to fix or improve it, for example by closing security vulnerabilities, fixing other bugs, or improving usability or performance. Patch management is therefore central to preventing vulnerability exploitation. As studies on software maintenance and Google's experience with developer ecosystems show, adhering to safe coding practices can drastically reduce the rate of common defects.

In patch management, the question is not only whether a program contains vulnerable code, but whether that code is being exploited. With cyberattacks on the rise, particularly those targeting software supply chains as described in the ESF report, organizations must manage their software effectively, which includes understanding and using software bills of materials (SBOMs).

To build a resilient foundation for patch management, it's essential to inventory and prioritize your assets. This encompasses all devices and applications in use. For example, creating a complete inventory is akin to mapping your digital ecosystem, which sets the stage for efficient patching practices. By doing so, you can ensure that your organization's maintenance efforts are both effective and under control, ultimately contributing to the overall productivity of teams.
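
For the Python portion of a software inventory, the standard library's `importlib.metadata` can list installed distributions. The sketch below pairs that inventory with a hypothetical patch policy (`MINIMUM_PATCHED`, with made-up package names) and flags anything older than the minimum patched version. The version parser is deliberately naive and is an assumption; real tooling should use a proper version library and an authoritative advisory source. A complete asset inventory would also cover devices, operating systems, and non-Python software.

```python
from importlib.metadata import distributions

# Hypothetical patch policy: minimum versions that contain required security fixes.
# In practice this would be fed from vendor advisories or a vulnerability database.
MINIMUM_PATCHED = {"example-lib": "1.4.2", "example-parser": "3.0.1"}


def parse_version(text: str) -> tuple[int, ...]:
    """Naive numeric parse: "2.31.0" -> (2, 31); pre-release tags are ignored."""
    parts: list[int] = []
    for piece in text.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    while parts and parts[-1] == 0:  # treat "2.32" and "2.32.0" as equal
        parts.pop()
    return tuple(parts)


def inventory() -> dict[str, str]:
    """Installed Python distributions visible to this interpreter: name -> version."""
    found: dict[str, str] = {}
    for dist in distributions():
        name = dist.metadata["Name"]
        if name:
            found[name.lower()] = dist.version
    return found


def needs_patch(installed: dict[str, str], policy: dict[str, str]) -> list[str]:
    """Names of inventoried packages older than the policy's minimum patched version."""
    return sorted(
        name
        for name, version in installed.items()
        if name in policy and parse_version(version) < parse_version(policy[name])
    )
```

Running `needs_patch(inventory(), MINIMUM_PATCHED)` gives a prioritized list to feed into the patching workflow.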

Threat Modeling

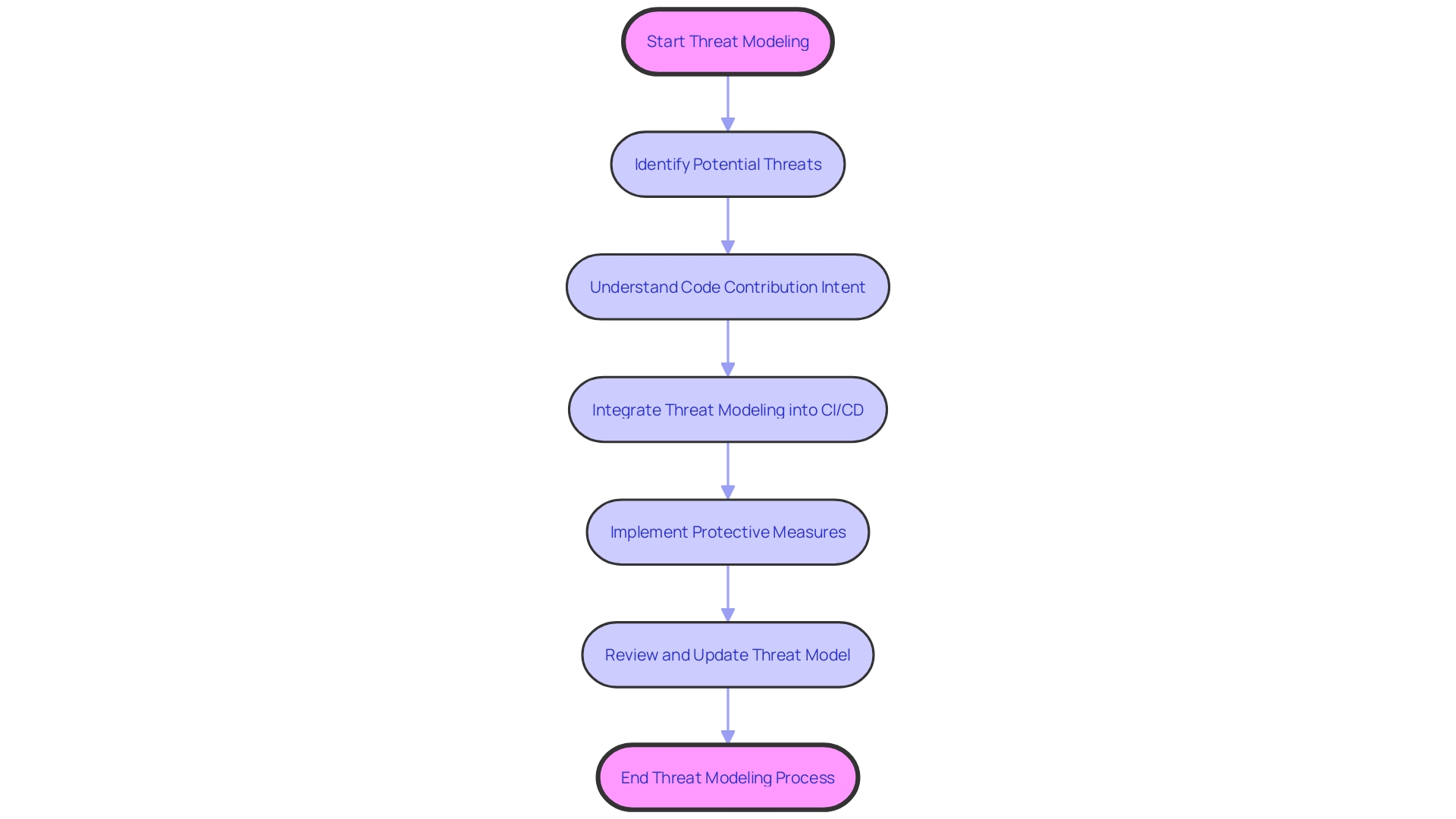

Recognizing and addressing threats in software development is a vital undertaking, and threat modeling is at the forefront of this work. Recent investigations revealed vulnerabilities in the public GitHub repositories of large open-source projects, making the need for robust threat modeling clear. Before they were fixed, these vulnerabilities put millions of users at risk, underscoring the importance of maintaining a secure codebase.

Threat modeling involves not only identifying potential threats but also understanding the intent behind code contributions. Distinguishing a genuine threat actor from a security researcher, for example, can be difficult. Phylum's practice of erring on the side of caution with packages that show malware-like behavior illustrates how intricate threat identification can be.

Moreover, the integration of threat modeling into CI/CD environments is critical, as these systems use sensitive credentials and play a vital role in the building, testing, and delivery of code to production. Keeping a high level of credential hygiene is a significant challenge that requires a methodical approach to threat modeling.

Discussions of threat modeling in the development process highlight the need to address security implications at the earliest stages of design. As the Internet has made services more interconnected, the need for strong protection has grown. Both developers and security professionals need a solid understanding of these practices to secure data and sensitive business information effectively.
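
One widely used way to address threats at design time, not named in the text above, is STRIDE applied per element of a data-flow diagram. The Python sketch below enumerates candidate threats from the commonly cited STRIDE-per-element mapping; the element names are hypothetical, and the output is a checklist for review, not a finished threat model.

```python
from dataclasses import dataclass

# STRIDE threat categories, keyed by their mnemonic letter.
STRIDE = {
    "S": "Spoofing",
    "T": "Tampering",
    "R": "Repudiation",
    "I": "Information disclosure",
    "D": "Denial of service",
    "E": "Elevation of privilege",
}

# Categories that typically apply to each data-flow-diagram element type.
APPLICABLE = {
    "external_entity": "SR",
    "process": "STRIDE",
    "data_store": "TRID",
    "data_flow": "TID",
}


@dataclass(frozen=True)
class Element:
    name: str
    kind: str  # one of the keys in APPLICABLE


def enumerate_threats(elements: list[Element]) -> list[tuple[str, str]]:
    """Produce (element name, threat category) pairs to review during design."""
    return [
        (element.name, STRIDE[letter])
        for element in elements
        for letter in APPLICABLE[element.kind]
    ]
```

Modeling a CI runner as a process and its deploy-token store as a data store, for instance, yields ten prompts to work through, including information disclosure of the stored credentials.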

Backing up these concerns, recent studies suggest an increasing emphasis on safety among organizations, although the incorporation of protective measures into formal, standardized procedures is frequently inadequate. This gap in implementation highlights the need for future research to enhance the understanding and application of these methods in real-world scenarios.

The 'CS2023' guide on computer science curricula highlights the importance of safeguarding in education, alluding to the necessity for future developers to have a strong grasp of threat modeling and protective measures. This guide, treated as authoritative by accrediting organizations, will influence the content of computer science curricula for the next decade.

Fundamentally, threat modeling is an essential element of software development that helps in the proactive recognition and reduction of risks to software. It serves as a foundational practice that supports the creation of a secure codebase, ensuring that consumer data and business operations are protected from potential threats.

Cryptographic Practices

Mastering cryptographic techniques is crucial for safeguarding sensitive information and ensuring data integrity in our interconnected digital landscape. Encryption, which transforms readable data into an unreadable form that only key holders can reverse, is a pillar of data protection. Still, consider whether encryption is the right tool: it is vital for protecting data, but not for every scenario. Passwords, for example, call for irreversible hashing instead.

Hashing converts data into a fixed-size digest and is typically used to verify data integrity. Digital signatures go further, authenticating the sender and confirming that the content has not been tampered with. These practices are not static; they evolve as threats grow more sophisticated. Microsoft's addition of ML-KEM, a post-quantum key-encapsulation algorithm standardized by the National Institute of Standards and Technology (NIST), to its SymCrypt cryptographic library shows the move toward future-proof cryptographic practices.
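
To make the hashing and signing distinction concrete, here is a standard-library Python sketch. A plain SHA-256 digest detects changes, but anyone can recompute it; an HMAC tag additionally requires a shared secret key. HMAC is used here only as a symmetric stand-in for digital signatures, which rely on public-key algorithms (such as Ed25519 or ECDSA) that are not in Python's standard library. The function names are illustrative.

```python
import hashlib
import hmac
import secrets


def sha256_hex(data: bytes) -> str:
    """Integrity fingerprint: any change to the data changes the digest."""
    return hashlib.sha256(data).hexdigest()


def sign(key: bytes, message: bytes) -> str:
    """HMAC-SHA256 tag: shows integrity and that the sender knew the shared key."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(key: bytes, message: bytes, tag: str) -> bool:
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(sign(key, message), tag)


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # in practice, loaded from a secret manager
    tag = sign(key, b"release-1.4.2.tar.gz")
    print(verify(key, b"release-1.4.2.tar.gz", tag))
```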

To make sure these cryptographic methods are implemented correctly, regular security training is indispensable; it keeps teams current on emerging threats and best practices. A comprehensive data protection policy also provides a strategic structure for handling and safeguarding data, which matters for both security and regulatory compliance.

Google's experience likewise underscores the value of building security in from the ground up, with safe coding practices that prevent common vulnerabilities. Similarly, adopting the SLSA framework can strengthen the software supply chain by giving producers and consumers a shared vocabulary for understanding supply-chain assurances.

Building secure software is an iterative process in which privacy and security requirements are continually refined. Threat modeling, one part of this process, helps identify and categorize potential risks and guides the implementation of robust security measures throughout the program's lifecycle.

Input Validation and Output Encoding

Building a codebase that resists vulnerabilities is like running a well-oiled company where every department works in harmony. Just as a company's transport department moves information smoothly between departments, the Incoming Communication Layer in an application receives external inputs, transforms them, and routes them to the heart of the system: its business logic. This layer is the natural place to validate inputs, an essential practice for warding off injection attacks, the corporate spies of the cyber world, always probing for a way in.

Input validation checks external data against predetermined criteria, ideally an allowlist of expected formats, before the system accepts it. It is the receptionist who diligently verifies every visitor's ID. Output encoding is the company's careful spokesperson: it escapes data for the context in which it is emitted (HTML, a URL, a shell command, and so on), so that the information reaching the outside world is accurate and cannot be misconstrued or manipulated into executable instructions.
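
A minimal Python sketch of both ideas, with an assumed username policy: validation accepts only input that fully matches an allowlist pattern and rejects everything else, and output encoding uses the standard library's `html.escape` so that user-supplied text is rendered as text rather than markup. The function names and the pattern are hypothetical.

```python
import html
import re

# Assumed allowlist policy: 3 to 32 letters, digits, underscores, or hyphens.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_-]{3,32}")


def validate_username(raw: str) -> str:
    """Reject anything outside the allowlist instead of trying to clean it up."""
    if not USERNAME_PATTERN.fullmatch(raw):
        raise ValueError("invalid username")
    return raw


def render_greeting(display_name: str) -> str:
    """Encode for the HTML context so the data cannot be interpreted as markup."""
    return f"<p>Hello, {html.escape(display_name)}!</p>"
```

Encoding is context specific: `html.escape` suits HTML element content, while URLs, JavaScript, and SQL each need their own encoding or, for SQL, parameterization.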

To see why these practices matter, consider SQL, a cornerstone of database communication that can create, read, update, and delete data with ease. If user input is concatenated directly into a query, SQL becomes the unwitting medium for an attacker's commands, leading to unauthorized data exposure or loss, like a misinformed employee accidentally sharing confidential information. Validation helps, but the primary defense is parameterized queries, which keep data separate from the query text.
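
The difference between string-built and parameterized SQL can be shown with Python's built-in `sqlite3` module and an in-memory database. The table and function names are hypothetical; the `?` placeholder is the style `sqlite3` uses, and other database drivers may use different placeholder syntax.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """In-memory demo database with two users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "alice@example.com"), ("bob", "bob@example.com")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # VULNERABLE: the input is spliced into the SQL text and can change the query.
    return conn.execute(f"SELECT email FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized: the driver passes `name` as a value, never as SQL.
    return conn.execute("SELECT email FROM users WHERE name = ?", (name,)).fetchall()
```

With the input `x' OR '1'='1`, the unsafe version returns every row, while the parameterized version searches for a user literally named `x' OR '1'='1` and finds none.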

Secure coding is not just about following a set of rules; it is about understanding potential threats, such as typosquatting or malicious packages, and implementing strategies to counter them. The coding world also values the 'eat your own dog food' philosophy, in which developers use their own programs to understand and fix their shortcomings. This hands-on approach leads to a more refined user interface and a more robust system, much like a company whose employees are also its customers.

Staying current is crucial in a field that changes this quickly. With AI increasingly integrated into coding and open source on the rise, the landscape keeps evolving. A software bill of materials (SBOM) is becoming essential for software supply-chain management, documenting the source, purpose, and security status of every code component.

Developers are often likened to architects, tasked with constructing the most straightforward yet effective solution from the tools and resources available. It's a balance between innovation and practicality, where the simplest solution is not a sign of laziness but of efficiency.

In conclusion, input validation and output encoding are not just technical terms; they are the strategic policies and procedures that ensure a program is protected like a fortress while communicating with the world outside its walls in a clear and secure language.

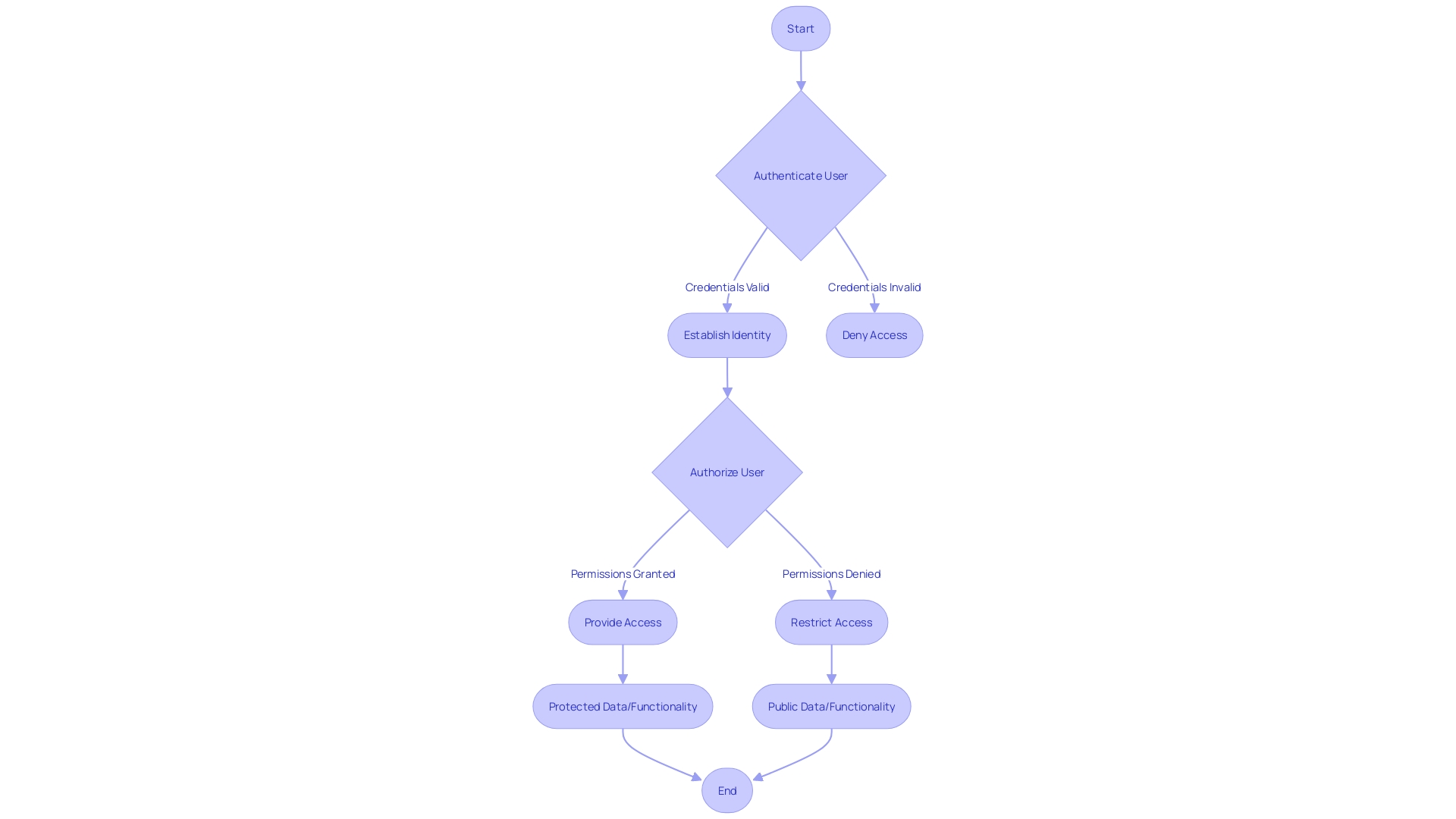

Authentication and Authorization

Authentication and authorization are crucial for verifying user identities and controlling their access, and they form the foundation of application security. Authentication validates credentials, such as usernames and passwords or biometric data, to establish who a user is. Authorization then determines what that authenticated user is permitted to do. Both processes protect data and functionality from unauthorized use and are essential to any secure codebase standard.
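
The split between the two steps can be sketched in Python: `authenticate` answers who the caller is by checking an HMAC-signed token, and `authorize` answers what that identity may do by consulting a permission table. Everything here (the token format, `SERVER_KEY`, `PERMISSIONS`) is a hypothetical illustration; it omits expiry, revocation, and key rotation, which a production session or token scheme would need.

```python
import hashlib
import hmac
import secrets
from typing import Optional

SERVER_KEY = secrets.token_bytes(32)  # in practice, loaded from a secret manager

# Hypothetical permission table, consulted only after authentication succeeds.
PERMISSIONS = {"alice": {"report:read", "report:write"}, "bob": {"report:read"}}


def _mac(user_id: str) -> str:
    return hmac.new(SERVER_KEY, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(user_id: str) -> str:
    """Called after a successful login; binds the user ID to a server-side MAC."""
    return f"{user_id}.{_mac(user_id)}"


def authenticate(token: str) -> Optional[str]:
    """Authentication: who is this? Returns the user ID only if the MAC is valid."""
    user_id, _, tag = token.rpartition(".")
    if user_id and hmac.compare_digest(_mac(user_id), tag):
        return user_id
    return None


def authorize(user_id: str, permission: str) -> bool:
    """Authorization: is this identity allowed to perform this action?"""
    return permission in PERMISSIONS.get(user_id, set())
```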

Reddit's advertising platform is a prime example: every action requires a swift authorization check to keep the user experience fluid, and any disruption in the authorization service could stall the platform entirely. Likewise, software enterprises aim for secure yet seamless user experiences, but fragmented authentication methods often introduce risk and erode customer trust.

In Single Page Applications (SPAs) built with JavaScript frameworks such as React, Vue, or Angular, security becomes more challenging because of the many libraries and dependencies involved. Each can carry vulnerabilities that developers may not fully track or understand, given their complexity and interdependencies.

Statistics highlight the importance of strong protective measures. One study found that 96% of commercial codebases use open-source components and that 76% of the application code was open source. These figures show how extensive software supply chains are and why secure software signing and trustworthy package registries are necessary.

To ensure clarity for developers and users alike, terms like 'login' for authentication and 'permissions' for authorization are recommended. Clear terms help demystify the security processes, making them more accessible and easier to manage.

Logging and Audit Practices

In the realm of software development, the need for stringent codebase monitoring has never been more pressing. As we witness the digital transformation of industries like banking, with venerable institutions such as M&T Bank steering towards all-digital customer experiences, the urgency for secure coding practices escalates. The banking sector's rapid technological adoption brings with it an increased necessity for measures capable of safeguarding sensitive data against potential cyber threats.

As software systems grow more complex, observability becomes critical to managing them. Effective logging, one part of observability, acts as the system's black box, meticulously recording activities and events; it is crucial for spotting anomalies and investigating incidents within the codebase. The OpenTelemetry (OTel) project offers a cohesive approach: it defines core concepts and protocols, provides SDK libraries for many programming languages, and ships a collector that transforms and exports telemetry data. Its vendor-agnostic design sets it apart from legacy frameworks.

From Google's experience, focusing on developer ecosystems by instilling safe coding practices can drastically diminish common defect rates across a multitude of applications. Such an approach to security and reliability engineering involves educating developers and implementing systems that prevent bugs from arising, particularly those that stem from recurring insecure coding patterns.

Amid evolving regulations and global efforts to strengthen software supply chains, suppliers must keep up with requirements such as secure-by-design practices, software liability, and third-party certification. These measures are crucial for mitigating software supply-chain attacks, as emphasized by the US Executive Order on Improving the Nation's Cybersecurity and other international initiatives.

As we navigate this landscape, we must hold our logging and auditing strategies to the highest standard. This includes asking critical questions before logging any information, such as what an entry would reveal, who can read it, and how long it is kept, and ensuring that our logs provide a comprehensive yet discerning record of system activity. Doing so strengthens our defenses against breaches while preserving the integrity and functionality of our programs.
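
One way to act on those questions is to enforce them in the logging pipeline itself. The Python sketch below, using only the standard `logging`, `json`, and `re` modules, writes one JSON object per audit event and masks values for a few assumed sensitive keys. The key list and the logger name `audit` are illustrative; pattern-based redaction is a safety net, not a substitute for keeping secrets out of log calls in the first place.

```python
import json
import logging
import re

# Assumed key names whose values must never reach the logs; extend as needed.
SENSITIVE = re.compile(r"(password|token|api_key)=\S+", re.IGNORECASE)


class RedactingJsonFormatter(logging.Formatter):
    """Emit one JSON object per record, masking sensitive key=value pairs."""

    def format(self, record: logging.LogRecord) -> str:
        message = SENSITIVE.sub(lambda m: f"{m.group(1)}=[REDACTED]", record.getMessage())
        return json.dumps({
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": message,
        })


def make_audit_logger(stream=None) -> logging.Logger:
    """Configure the 'audit' logger; stream=None means sys.stderr."""
    logger = logging.getLogger("audit")
    handler = logging.StreamHandler(stream)
    handler.setFormatter(RedactingJsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep audit events out of the general application log
    return logger
```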

Quality Assurance and Vulnerability Management

Quality assurance and vulnerability management are essential to building a highly secure codebase. Achieving this requires code review, systematic testing, and vigilant vulnerability scanning.

In code review, every line of code is scrutinized by fellow developers to catch errors and improve the overall quality. This peer evaluation is critical, as even seasoned programmers can overlook potential security flaws. For example, M&T Bank, with its extensive history and commitment to safe banking practices, has adopted Clean Code standards to maintain and enhance software performance, demonstrating the value of rigorous code review practices.

Testing is another pillar of a secure codebase. By implementing comprehensive testing strategies—including unit, integration, system, and acceptance testing—developers can detect and address defects early in the development process. Google's experience shows that focusing on the developer ecosystem, including safe coding practices, can significantly reduce common defects. Such proactive measures are more effective than trying to remediate issues post-deployment, which can be a challenge.
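
Security properties can be pinned down with ordinary unit tests so that regressions are caught early. The hypothetical example below tests a redirect-target validator (`is_safe_redirect`, with an assumed host allowlist) against known open-redirect tricks using Python's built-in `unittest`; the validator is a simplified sketch, not a complete defense.

```python
import unittest
from urllib.parse import urlparse

ALLOWED_HOSTS = {"app.example.com"}  # hypothetical allowlist


def is_safe_redirect(url: str) -> bool:
    """Allow relative paths, or absolute HTTPS URLs on an allowlisted host."""
    parsed = urlparse(url)
    if not parsed.scheme and not parsed.netloc:
        # Relative path: must start with a single "/" (not "//" or "/\", which
        # browsers may treat as protocol-relative URLs pointing at another host).
        return url.startswith("/") and not url.startswith(("//", "/\\"))
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS


class RedirectSecurityTests(unittest.TestCase):
    def test_allows_relative_path(self):
        self.assertTrue(is_safe_redirect("/dashboard"))

    def test_allows_allowlisted_https_host(self):
        self.assertTrue(is_safe_redirect("https://app.example.com/home"))

    def test_rejects_open_redirect_variants(self):
        for url in ["https://evil.example.net", "//evil.example.net",
                    "javascript:alert(1)", "http://app.example.com",
                    "https://app.example.com.evil.example.net", "/\\evil.example.net"]:
            with self.subTest(url=url):
                self.assertFalse(is_safe_redirect(url))


def run_security_tests() -> bool:
    """Run the suite programmatically (e.g. from CI) and report overall success."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(RedirectSecurityTests)
    return unittest.TextTestRunner(verbosity=0).run(suite).wasSuccessful()
```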

Vulnerability scanning is an essential part of the process, especially since 96% of contemporary applications incorporate open-source components, which are a frequent source of vulnerabilities. With tools that automatically scan for Common Vulnerabilities and Exposures (CVEs), developers can check that the components they integrate into their programs are free of known vulnerabilities.

The responsibility of creating a reliable and robust application lies in the hands of developers. Through these methods, they can confidently produce software that not only meets functional requirements but also maintains the highest standards of safety.

Secure Secret Management

Ensuring the integrity and confidentiality of your codebase often hinges on how well you manage your secrets, such as API keys, passwords, and database connection strings. Bazaarvoice, a company that powers a vast range of products, emphasizes the significance of prioritizing the protection of such sensitive information. They tackle the pivotal question: "What could happen if someone discovers these secrets?" and implement strict management protocols to prevent breaches.

As the technical infrastructure of organizations rapidly evolves with the adoption of cloud services, CI/CD platforms, and SaaS products, there is an increasing need for robust secret management. This technological diversity can fragment efforts to protect information, leading to secret sprawl, which is the proliferation of untracked or unprotected credentials across IT environments. To combat this, it's essential to employ a comprehensive approach that includes scanning for and locating all secrets within your IT landscape.
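
Locating secrets usually starts with pattern-based scanning. This Python sketch checks text and files line by line against a few illustrative rules: an AWS-style access key ID, private-key headers, and quoted values assigned to names such as `password` or `api_key`. The rule set is an assumption and far smaller than what dedicated secret scanners ship.

```python
import re
from pathlib import Path

# Illustrative detection rules only; real scanners use larger, tuned rule sets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "Private key header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "Hard-coded credential": re.compile(
        r"""(?i)\b(?:password|passwd|secret|api_key)\s*[:=]\s*["'][^"']{8,}["']"""
    ),
}


def scan_text(text: str, source: str = "<text>") -> list[tuple[str, int, str]]:
    """Return (source, line number, rule name) for every suspected secret."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((source, lineno, rule))
    return findings


def scan_tree(root: str) -> list[tuple[str, int, str]]:
    """Walk a directory tree and scan every readable UTF-8 text file."""
    findings: list[tuple[str, int, str]] = []
    for path in Path(root).rglob("*"):
        if path.is_file():
            try:
                findings += scan_text(path.read_text(encoding="utf-8"), str(path))
            except (UnicodeDecodeError, OSError):
                continue  # skip binary or unreadable files
    return findings
```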

DevSecOps, which blends development, security, and operations, holds that security measures should be embedded early in the development lifecycle. As the value of data rises, so does the risk of theft, and keeping applications secure is a constant battle against attackers who are always trying to stay one step ahead. DevSecOps fosters a partnership between the business and IT that creates value while keeping security a foundational element.

It's no secret that developers look for the simplest solutions to problems; what's seen as laziness might actually be strategic efficiency. A developer's role often involves navigating constraints and resources to deliver effective, yet uncomplicated solutions. With up to 90% of the code in production environments relying on open-source packages from systems like PyPI, even minor vulnerabilities can lead to significant safety concerns.

In 2023, incidents affecting GitHub users rose by more than 21% over the previous year, highlighting growing risks in software development environments. DevSecOps is becoming essential, integrating security into the development process from the start rather than adding it as an afterthought. In this proactive climate, managing secrets is not just about safe storage and encryption; it calls for a holistic strategy that secures the entire development and operational workflow.

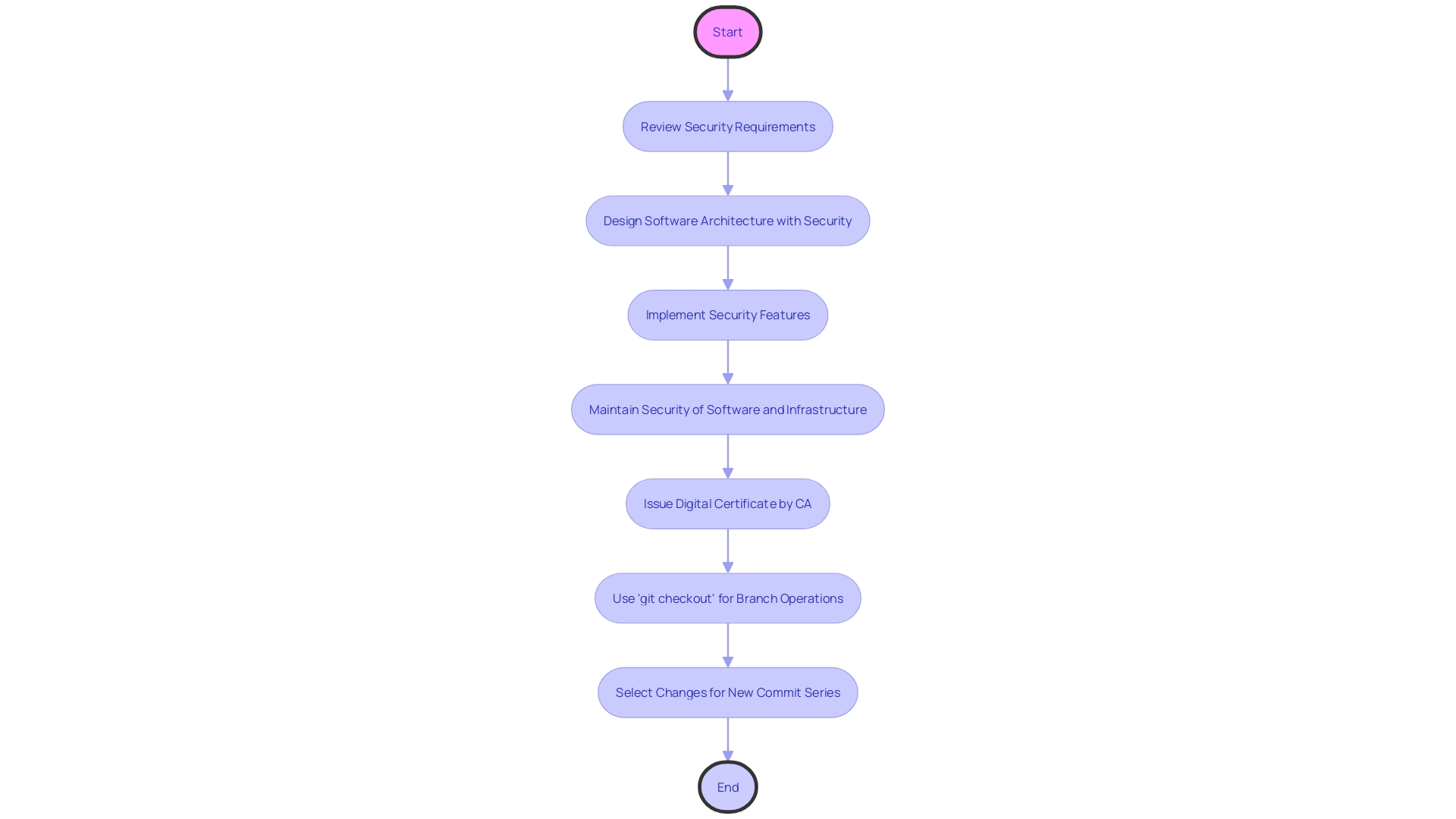

Source Code Management Best Practices

Maintaining code integrity and shielding it from unauthorized changes are pivotal for secure codebase standards. Proper source code management techniques are not only about safeguarding the code but also about aligning it with business objectives, ensuring compliance with strict regulations, and preventing costly security breaches.

For example, M&T Bank, with its long history, faced the challenge of aligning application quality with its digital transformation goals and adopted Clean Code standards to improve maintainability and performance. Similarly, Domain-Driven Design (DDD) helps keep a program aligned with intricate business rules as they change, underscoring the value of deliberate coding practices.

The software supply chain is broad, spanning everything from the development environment to the operating system. In 2023, notable cybersecurity incidents involved exposed secrets, such as API keys found in plaintext, that dependable source code management and a clear understanding of the application's components could have prevented.

Building secure applications starts with defining security and privacy requirements, which evolve over the product's life cycle and are shaped by threat models. This approach is central to the Security Development Lifecycle (SDL), keeping security in constant focus so that issues are addressed early.

Government entities increasingly acknowledge the importance of software security. The White House Office of the National Cyber Director, for instance, has urged the adoption of memory-safe programming languages to improve software security. This is part of a broader trend toward rigorous standards for application quality.

Among practical tools, the Software Bill of Materials (SBOM) is gaining acceptance as a way to bring transparency to the software supply chain. Open-source components are used widely: the 2024 OSSRA report found that 96% of codebases contained open-source elements. This increases the need for automated security testing with solutions such as software composition analysis (SCA) to handle the large number of components and their potential vulnerabilities efficiently.
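
As a rough illustration of what an SBOM records, the Python function below builds a minimal CycloneDX-style document from a name-to-version mapping, such as one produced by an inventory of installed packages. It includes only a few core fields (`bomFormat`, `specVersion`, and per-component `type`, `name`, `version`, `purl`) and has not been validated against the CycloneDX schema; package URL (purl) name normalization is simplified. Dedicated SBOM generators should be preferred in practice.

```python
def build_sbom(components: dict[str, str]) -> dict:
    """Minimal CycloneDX-style BOM: one 'library' component per Python package.

    Simplified sketch: real SBOMs carry hashes, licenses, suppliers, and
    dependency relationships, and purl names need full normalization.
    """
    return {
        "bomFormat": "CycloneDX",
        "specVersion": "1.5",
        "version": 1,  # revision of this BOM document, not of any component
        "components": [
            {
                "type": "library",
                "name": name,
                "version": version,
                "purl": f"pkg:pypi/{name.lower()}@{version}",
            }
            for name, version in sorted(components.items())
        ],
    }
```

Serializing the result with `json.dumps` produces a file that can be published alongside a release and consumed by SCA tooling.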

Ultimately, secure code management is not a standalone practice but a holistic plan: it requires understanding the entire software supply chain, from development to deployment, and aligning it with business objectives and regulatory requirements. Neglecting this can have serious consequences, but with the right approach, organizations can maintain a strong, well-protected codebase that supports their operational and strategic objectives.

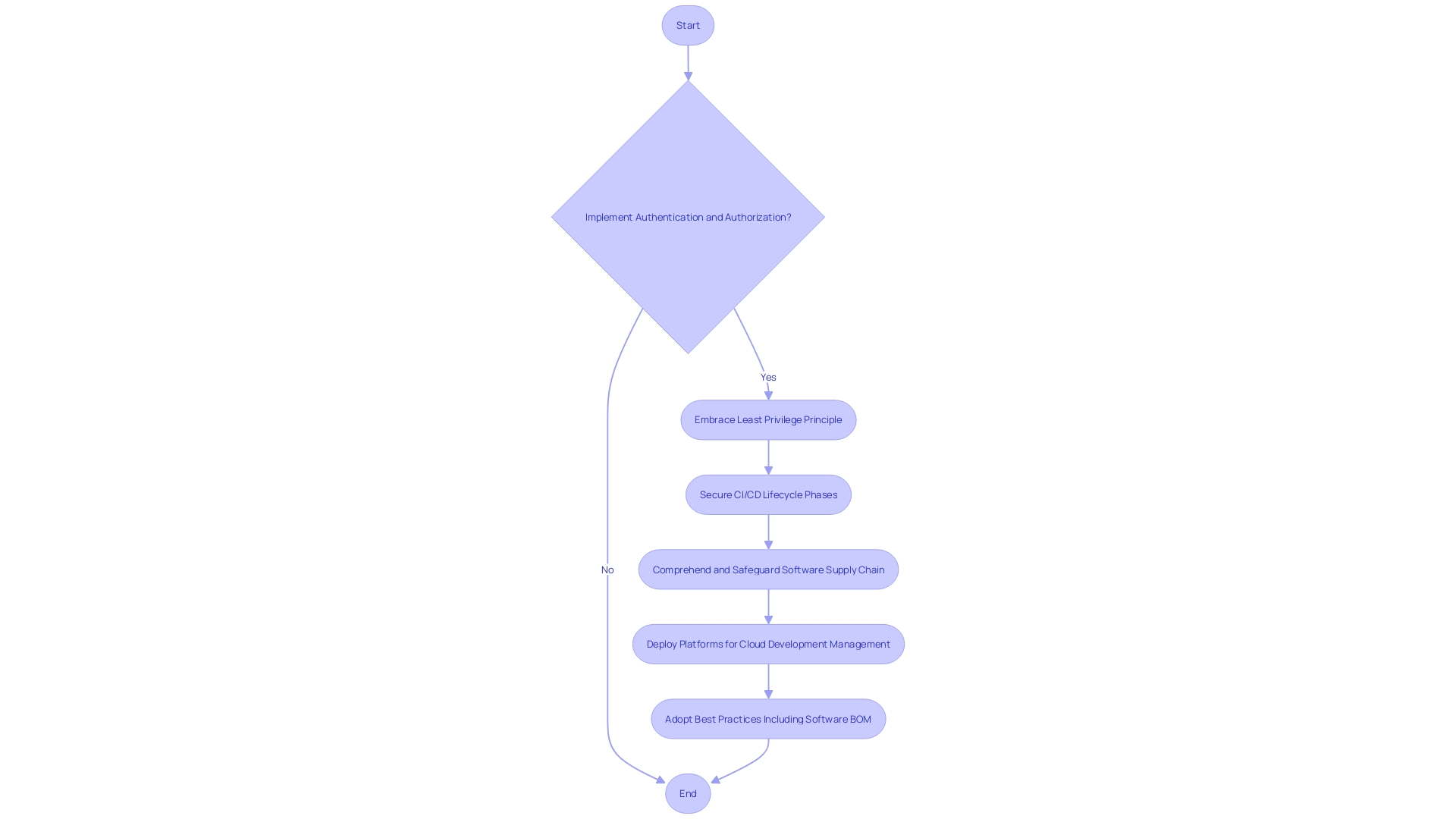

Hardening CI/CD Pipelines

Hardening CI/CD pipelines is not just a technical necessity; it is a business imperative, especially in sectors like banking where security and compliance are paramount. M&T Bank, a long-standing institution in the sector, has recognized the need for a dependable, effective development process in the face of rapid technological change and rigorous regulatory requirements.

To secure your CI/CD pipeline, start with rigorous authentication and authorization controls so that only authorized entities can access and modify the code, configurations, and deployment procedures. Apply the principle of least privilege: each component of the system should have no more access than it needs to perform its function.
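One way to put least privilege into practice is to audit each pipeline job's granted permissions against a declared baseline and fail the pipeline when a job holds more than it needs. In this sketch the job names and the `scope:access` permission strings are hypothetical (they only loosely resemble the scopes CI platforms expose), and the baseline would normally live in version-controlled configuration.

```python
from typing import Dict, Set

# Hypothetical baseline: the permissions each pipeline job actually needs.
REQUIRED: Dict[str, Set[str]] = {
    "build": {"contents:read"},
    "test": {"contents:read"},
    "deploy": {"contents:read", "deployments:write"},
}


def excess_permissions(granted: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Report, per job, every permission granted beyond its baseline.

    Jobs without a baseline are treated as needing nothing (deny by default),
    so everything they hold is reported.
    """
    report: Dict[str, Set[str]] = {}
    for job, perms in granted.items():
        extra = perms - REQUIRED.get(job, set())
        if extra:
            report[job] = extra
    return report


if __name__ == "__main__":
    granted = {
        "build": {"contents:read", "packages:write"},
        "deploy": {"contents:read", "deployments:write"},
    }
    print(excess_permissions(granted))  # {'build': {'packages:write'}}
```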

Moreover, the rise of AI-powered applications such as chatbots makes it even more important to build security into software from the start. In the development lifecycle sometimes described as the CI/CD flywheel, every phase, from writing code to deploying it to production, must be secured.

The importance of a secure CI/CD pipeline is further underscored by the escalating threat landscape, as evidenced by the 21% increase in incidents affecting GitHub users in 2023. The Executive Order on Improving the Nation's Cybersecurity brought the idea of the Software Supply Chain to the fore, emphasizing that understanding and safeguarding every element, from development environments to third-party dependencies, is essential.

In the era of DevSecOps, security is integral from the very start of the development lifecycle. Companies like Sirius Technologies are deploying platforms that manage Cloud Development Environments, keeping their financial services efficient, reliable, and secure while addressing challenges such as productivity and intellectual property management.

With cybersecurity incidents on the rise due to exposed secrets such as API keys and signing keys, creating a Software Bill of Materials (SBOM) becomes imperative, since it provides transparency into the provenance of every component in your software. Adopting these best practices strengthens your software supply chain security and protects against vulnerabilities that can cause severe financial and reputational damage.

Branch Protection Policies

Branch protection policies are vital to securing your codebase: they prevent unauthorized changes and ensure that only reviewed and approved code is merged into important branches. M&T Bank, a seasoned financial institution facing stringent security demands, offers a useful reference point: it implemented Clean Code standards across its teams to maintain a high-quality, compliant codebase during its digital transformation.
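Hosting platforms enforce branch protection for you, but the rule logic is simple to state. This hypothetical sketch models a protected branch that requires a minimum number of approvals from someone other than the author plus a set of passing status checks; `BranchRule`, `PullRequest`, and the `ci/tests` check name are illustrative, not any platform's API.

```python
from dataclasses import dataclass, field
from typing import List, Set


@dataclass
class BranchRule:
    # Hypothetical settings modelled loosely on common branch protection options.
    required_approvals: int = 2
    required_checks: Set[str] = field(default_factory=lambda: {"ci/tests"})
    allow_self_approval: bool = False


@dataclass
class PullRequest:
    author: str
    approvers: Set[str]
    passed_checks: Set[str]


def merge_blockers(pr: PullRequest, rule: BranchRule) -> List[str]:
    """Return why a merge into the protected branch is blocked; an empty list means it may merge."""
    reasons = []
    approvers = set(pr.approvers)
    if not rule.allow_self_approval:
        approvers.discard(pr.author)  # the author's own approval does not count
    if len(approvers) < rule.required_approvals:
        reasons.append(f"needs {rule.required_approvals} approvals, has {len(approvers)}")
    missing = rule.required_checks - pr.passed_checks
    if missing:
        reasons.append("missing checks: " + ", ".join(sorted(missing)))
    return reasons
```

Keeping the rule as data makes it easy to review and version alongside the code it protects.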

Change amplification, where a seemingly simple change requires modifications in many places, is described by John Ousterhout in his book 'A Philosophy of Software Design' and often plagues complex codebases, which makes straightforward policies all the more important. Simplifying the development process, for example so that a single command is enough to get started, reduces complexity and improves maintainability.

Recent cybersecurity incidents have highlighted the importance of security-focused coding practices. Failures in handling confidential information have led to significant breaches, demonstrating the need for comprehensive protective measures throughout the software supply chain. A secure software development lifecycle (SDLC) addresses vulnerabilities proactively, keeping security a priority from inception to deployment.

Finally, software design patterns support robust branch protection indirectly. Creational, structural, and behavioral patterns guide developers toward efficient, maintainable code, which is easier to review, secure, and manage.

When implementing branch protection policies, remember that simplicity and security are not mutually exclusive. In a constantly changing technology landscape, every organization must stay vigilant about code quality and the protection of its systems.

Recommended Access Controls and Permissions

Implementing access controls is a critical step in safeguarding your codebase and data from unauthorized access. By understanding the concept of Role-Based Access Control (RBAC), you can organize users into roles based on their responsibilities, ensuring that access permissions align with their job functions. For example, roles can vary within a team, with managers and their reports requiring different levels of access to services and data. Additionally, roles can be assigned temporarily for specific projects or on-call duties, demonstrating the flexibility of RBAC in managing permissions.
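A minimal RBAC model maps roles to permissions and users to roles. The sketch below also gives each assignment an optional expiry so that temporary roles, such as an on-call rotation, lapse automatically. The role names and permission strings are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical role definitions: role -> permissions it grants.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "engineer": {"repo:read", "repo:write"},
    "manager": {"repo:read", "reports:read"},
    "on_call": {"prod:logs:read", "prod:restart"},
}


class RBAC:
    def __init__(self) -> None:
        # user -> list of (role, expiry); an expiry of None means the assignment is permanent.
        self._assignments: Dict[str, List[Tuple[str, Optional[datetime]]]] = {}

    def assign(self, user: str, role: str, ttl: Optional[timedelta] = None,
               now: Optional[datetime] = None) -> None:
        now = now if now is not None else datetime.now(timezone.utc)
        expiry = now + ttl if ttl is not None else None
        self._assignments.setdefault(user, []).append((role, expiry))

    def is_allowed(self, user: str, permission: str,
                   now: Optional[datetime] = None) -> bool:
        now = now if now is not None else datetime.now(timezone.utc)
        for role, expiry in self._assignments.get(user, []):
            if expiry is not None and now >= expiry:
                continue  # temporary assignment has lapsed
            if permission in ROLE_PERMISSIONS.get(role, set()):
                return True
        return False  # deny by default


if __name__ == "__main__":
    rbac = RBAC()
    rbac.assign("dana", "engineer")
    rbac.assign("dana", "on_call", ttl=timedelta(days=7))  # one-week rotation
    print(rbac.is_allowed("dana", "prod:restart"))  # True while the rotation lasts
```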

Consider an e-commerce store with various data categories. A business analyst who needs to query this data should be able to see only the information they are authorized to view, which underscores the importance of fine-grained access controls to prevent unauthorized data exposure.
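For the analyst scenario, one common technique is field-level filtering: return only the columns a role is cleared to read. This is a hypothetical sketch with invented role and field names; in production the same rule is better enforced in the database itself (for example with views or column-level grants) than in application code alone.

```python
from typing import Dict, List, Set

# Hypothetical mapping of roles to the order fields they may read.
VISIBLE_FIELDS: Dict[str, Set[str]] = {
    "business_analyst": {"order_id", "order_date", "total", "region"},
    "support_agent": {"order_id", "order_date", "customer_email"},
}


def project_for_role(rows: List[Dict[str, object]], role: str) -> List[Dict[str, object]]:
    """Return copies of rows holding only the fields the role may see; unknown roles see nothing."""
    allowed = VISIBLE_FIELDS.get(role, set())
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]
```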

As APIs become integral to business operations, API security is paramount. Flaws in API design can lead to data breaches; recent studies report that 60% of organizations experienced at least one breach caused by such vulnerabilities.

It is not only about managing who can access data; the software itself must be built with security in mind. The DevSecOps approach integrates security early in the development process, and the Software Supply Chain spans every element from writing code to deployment. With significant cybersecurity incidents stemming from exposed secrets such as API keys, safeguarding credentials is clearly as important as securing the code itself.

In web applications, permissions must be checked at every layer: frontend, backend, and database. Authentication (verifying who a user is) and authorization (deciding what that user may do) are distinct concepts, but they must work together to provide a secure and seamless experience, much like entering Disneyland at the gate and then being admitted to individual attractions.
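To illustrate the distinction, this sketch authenticates a request token and then authorizes the specific action on the server, regardless of what the frontend displayed. The token store, user names, and `orders:read` permission are hypothetical; a real service would verify signed tokens or server-side sessions.

```python
from typing import Dict, List, Optional, Set

# Hypothetical in-memory stores; a real service would use a session store or verify signed tokens.
SESSIONS: Dict[str, str] = {"token-abc": "alice", "token-xyz": "bob"}  # token -> user
PERMISSIONS: Dict[str, Set[str]] = {"alice": {"orders:read"}}            # user -> permissions


class AuthError(Exception):
    """Carries an HTTP-style status: 401 = not authenticated, 403 = not authorized."""

    def __init__(self, status: int, message: str) -> None:
        super().__init__(message)
        self.status = status


def authenticate(token: Optional[str]) -> str:
    """Authentication: establish who is calling."""
    user = SESSIONS.get(token) if token else None
    if user is None:
        raise AuthError(401, "not authenticated")
    return user


def authorize(user: str, permission: str) -> None:
    """Authorization: decide what this caller may do."""
    if permission not in PERMISSIONS.get(user, set()):
        raise AuthError(403, f"{user} lacks {permission}")


def get_orders(token: Optional[str]) -> List[dict]:
    user = authenticate(token)
    # Enforced on the backend even if the frontend already hid the orders page.
    authorize(user, "orders:read")
    return [{"order_id": 1, "owner": user}]
```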

Findings from the 2024 OSSRA report on the prevalence of open-source components in software reinforce the need for a Software Bill of Materials (SBOM) to track these components and manage their risks. ASIS research on access control best practices likewise stresses the need for robust access controls to protect the organization.

Server-level Policies for Globally Enforced Best Practices

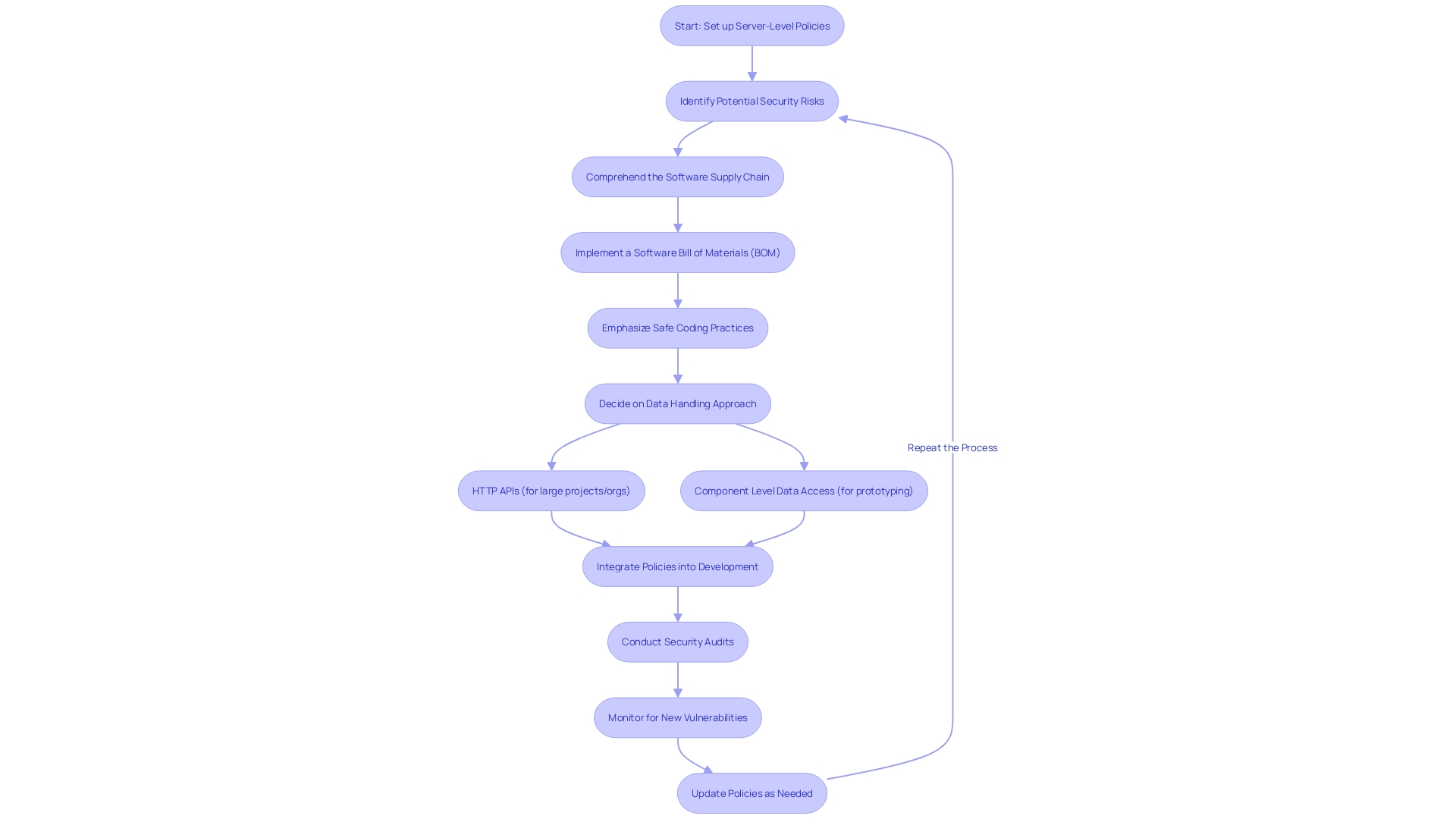

Server-level policies are a linchpin in safeguarding a codebase, especially given the proliferation of threats such as typosquatting attacks (malicious packages published under names that mimic popular ones). Server-level policies let organizations enforce best practices tailored to their technology stack, such as Node.js, and ensure robust code security.
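As one example of a server-level policy for a Node.js stack, a registry proxy or CI check could flag dependencies whose names sit a small edit distance from popular packages, a common typosquatting signature. The allowlist, the threshold of 2, and the function names here are assumptions for illustration.

```python
import json
from typing import List, Tuple

# Hypothetical allowlist of popular package names to compare against.
POPULAR = {"express", "lodash", "react", "axios", "chalk"}


def levenshtein(a: str, b: str) -> int:
    """Classic dynamic-programming edit distance (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        cur = [i]
        for j, cb in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,                # deletion
                           cur[j - 1] + 1,             # insertion
                           prev[j - 1] + (ca != cb)))  # substitution
        prev = cur
    return prev[-1]


def suspicious_dependencies(package_json: str, max_distance: int = 2) -> List[Tuple[str, str]]:
    """Flag dependencies whose names are close to, but not equal to, a popular package."""
    manifest = json.loads(package_json)
    deps = {**manifest.get("dependencies", {}), **manifest.get("devDependencies", {})}
    flagged = []
    for name in sorted(deps):
        if name in POPULAR:
            continue
        for popular in sorted(POPULAR):
            if levenshtein(name, popular) <= max_distance:
                flagged.append((name, popular))
                break
    return flagged


if __name__ == "__main__":
    manifest = '{"dependencies": {"expresss": "^4.18.0", "lodash": "^4.17.21"}}'
    print(suspicious_dependencies(manifest))  # [('expresss', 'express')]
```

Short legitimate names can sit close to popular ones (for example, `chai` is within two edits of `chalk`), so a check like this works best as a prompt for review rather than an automatic block.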

An important element of these policies is understanding the Software Supply Chain, which includes everything from the development environment to the operating system on which the application runs. Recent cybersecurity incidents have highlighted the risks of secrets exposed in codebases, underscoring the need for careful management of sensitive data.

To strengthen server-level policies, organizations should consider a Software Bill of Materials (SBOM), which, like a bill of materials in manufacturing, inventories every component in the software. This transparency helps identify vulnerabilities and instabilities introduced through third-party dependencies.

Moreover, an emphasis on safe coding practices can significantly reduce defect rates. Google's experience shows that focusing on the developer ecosystem can reduce common classes of defects. Safe coding practices prevent bugs by avoiding vulnerable coding patterns altogether, a proactive approach that is more effective than remedial measures such as code review or static analysis.
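A classic safe coding pattern is to make SQL injection structurally impossible by passing user input as bound parameters instead of splicing it into the query text. The sketch uses Python's built-in `sqlite3` module with an in-memory table; the table and function names are illustrative.

```python
import sqlite3
from typing import List, Tuple


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> List[Tuple]:
    # Vulnerable pattern: user input becomes part of the SQL text.
    return conn.execute(f"SELECT id, name FROM users WHERE name = '{name}'").fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> List[Tuple]:
    # Safe pattern: the driver binds `name` as data, never as SQL.
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


def demo_db() -> sqlite3.Connection:
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    return conn
```

With the input `x' OR '1'='1`, the unsafe version returns every row while the safe version returns none.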

Data handling is one area where a clear strategy is crucial. Whether you choose HTTP APIs for larger projects or Component Level Data Access for prototyping, apply the chosen method consistently. This clarity helps developers and also makes security audits easier.

Ultimately, integrating security-focused server-level policies requires vigilance and a commitment to best practices. Drawing on case studies and expert insights, organizations can build a more resilient, well-protected codebase that can withstand the evolving landscape of cyber threats.

Continuous Monitoring and Incident Response Planning

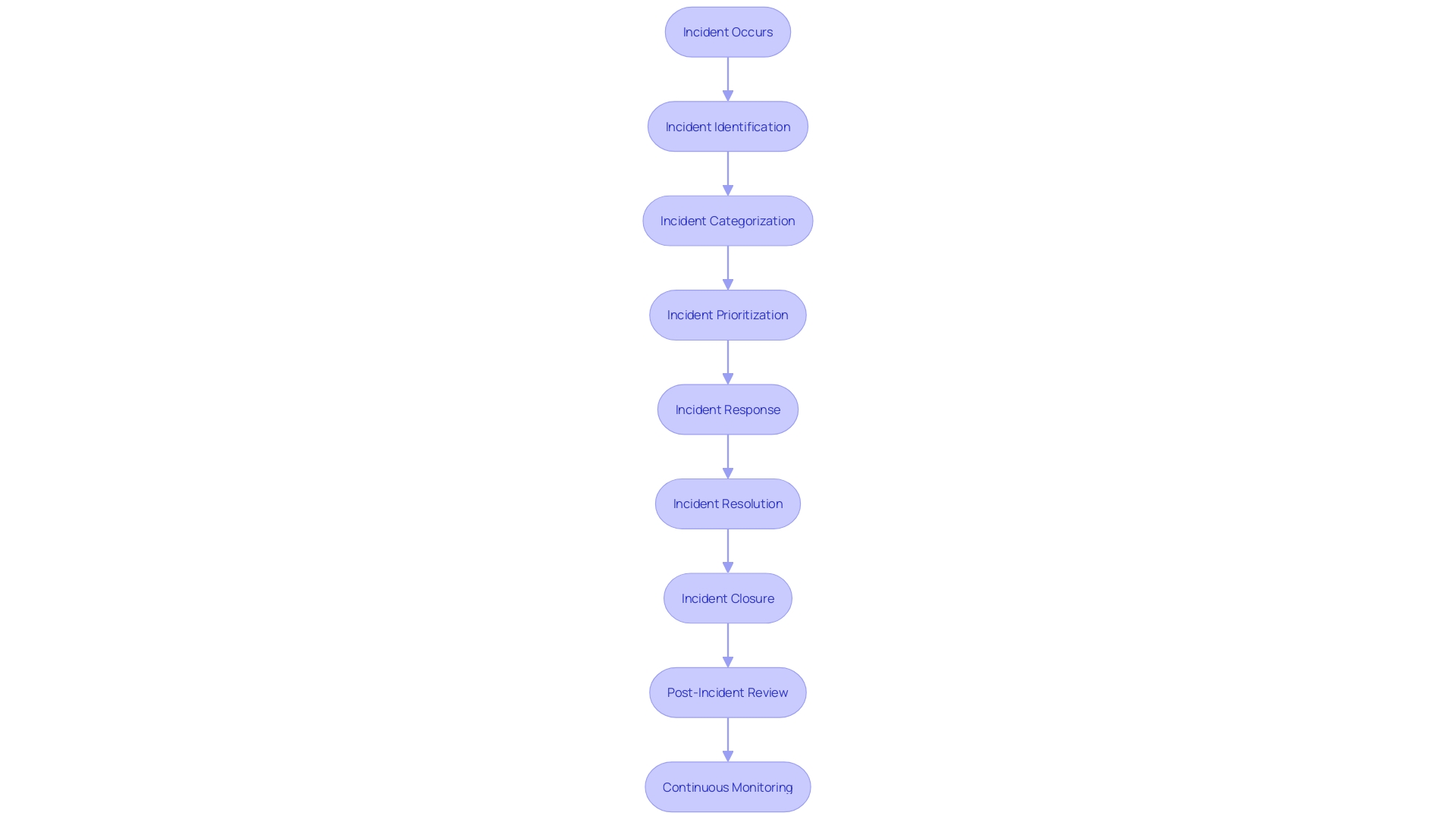

The constantly evolving development landscape has made it harder to maintain a secure and efficient codebase. To detect and respond to potential security incidents quickly, organizations need comprehensive strategies for continuous monitoring and incident response. One such approach is 'CoMELT' (Code, Metrics, Events, Logs, Traces), a telemetry model whose signals indicate the health of software systems. Observability platforms built on CoMELT give developers better debugging capabilities for handling a wide range of incidents.
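The code, log, and trace parts of CoMELT can be tied together at the source by emitting structured log lines that carry a code location and a trace identifier. This sketch uses Python's standard `logging` module; the JSON field names and the `trace_id` attribute (passed via `extra=`) are assumptions rather than any observability platform's schema, and metrics and events are out of scope here.

```python
import json
import logging
import uuid


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON line that pairs the message with code location and trace context."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "message": record.getMessage(),
            # Code: where in the source the event was emitted.
            "code": {"module": record.module, "function": record.funcName, "line": record.lineno},
            # Trace: hypothetical attribute supplied via `extra=`; None when absent.
            "trace_id": getattr(record, "trace_id", None),
        }
        return json.dumps(payload)


def make_logger(name: str = "checkout") -> logging.Logger:
    logger = logging.getLogger(name)
    handler = logging.StreamHandler()
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.setLevel(logging.INFO)
    logger.propagate = False
    return logger


if __name__ == "__main__":
    log = make_logger()
    log.info("payment authorized", extra={"trace_id": uuid.uuid4().hex})
```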

Incident response is an orchestrated process covering the identification, containment, and remediation of incidents to minimize disruption to IT systems and services. An effective incident response framework has two core components: a documented plan that spells out the steps for managing and mitigating incidents, and a dedicated incident response team that carries out the plan as incidents arise.
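The identify, contain, remediate sequence can be encoded so that tooling refuses to skip a step. This is a hypothetical sketch: the phase names follow the process described here plus a closing step, and the strictly linear order is a simplifying assumption, since real incidents sometimes loop back to containment.

```python
from enum import Enum
from typing import List


class Phase(Enum):
    IDENTIFIED = "identified"
    CONTAINED = "contained"
    REMEDIATED = "remediated"
    CLOSED = "closed"


# Allowed transitions: an incident must be contained before it is remediated, and so on.
NEXT = {
    Phase.IDENTIFIED: {Phase.CONTAINED},
    Phase.CONTAINED: {Phase.REMEDIATED},
    Phase.REMEDIATED: {Phase.CLOSED},
    Phase.CLOSED: set(),
}


class Incident:
    def __init__(self, title: str) -> None:
        self.title = title
        self.phase = Phase.IDENTIFIED
        self.history: List[Phase] = [self.phase]

    def advance(self, to: Phase) -> None:
        """Move to the next phase, rejecting skipped or backward steps."""
        if to not in NEXT[self.phase]:
            raise ValueError(f"cannot move from {self.phase.value} to {to.value}")
        self.phase = to
        self.history.append(to)
```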

A practical way to maintain situational awareness is a dashboard that serves as a centralized monitoring tool. It provides real-time insight into operational aspects of the environment, such as the status of feature flags, active promotions, and system elasticity. This proactive monitoring is crucial for planning capacity and throughput adjustments and therefore for keeping services resilient.

Finding the root cause of an incident requires a rigorous investigation of contributing factors, which may range from configuration drift to recent code changes. The goal is to ask iterative questions that dig into the causes of the incident, ultimately identifying the primary and secondary factors involved.

To benchmark the success of an incident response strategy, establish success criteria that are comprehensive, minimal, written, and falsifiable. The criteria should state the desired outcomes concisely and measurably, so that organizations can objectively evaluate the effectiveness of their incident response efforts.
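To show what falsifiable criteria can look like in practice, this sketch encodes two written criteria as checks that either pass or fail on recorded incident data. The thresholds (a one-hour median containment time and a 24-hour resolution limit) and the record fields are illustrative assumptions, not recommended targets.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List


@dataclass
class IncidentRecord:
    detected: datetime
    contained: datetime
    resolved: datetime


def evaluate(incidents: List[IncidentRecord],
             max_median_containment: timedelta = timedelta(hours=1),
             max_resolution: timedelta = timedelta(hours=24)) -> Dict[str, bool]:
    """Evaluate two written, falsifiable criteria; each result is simply True or False."""
    if not incidents:
        raise ValueError("need at least one incident to evaluate")
    containment = [i.contained - i.detected for i in incidents]
    resolution = [i.resolved - i.detected for i in incidents]
    return {
        # Criterion 1: median time from detection to containment stays within the limit.
        "median_containment_ok": median(containment) <= max_median_containment,
        # Criterion 2: every incident is resolved within the limit.
        "all_resolved_within_limit": all(r <= max_resolution for r in resolution),
    }
```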

Furthermore, the Office of Homeland Security Statistics (OHSS), a recent initiative, has begun publishing statistical data on a variety of topics, including cybersecurity, which can inform incident response strategies. Visual tools help as well: a Past Incidents heat map, for example, shows how often incidents occurred over the previous six months, allowing organizations to spot patterns and prepare for similar events.
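The aggregation behind a heat map like this is a count of incidents per cell. This sketch buckets incident dates by month and weekday over roughly the last six months (183 days, an assumption); the cell layout is hypothetical, and rendering is left to whatever dashboard is in use.

```python
from collections import Counter
from datetime import date, timedelta
from typing import Dict, Iterable, Tuple

WEEKDAYS = ("Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun")  # index matches date.weekday()


def incident_heatmap(incident_dates: Iterable[date], today: date,
                     window_days: int = 183) -> Dict[Tuple[str, str], int]:
    """Count incidents per (year-month, weekday) cell within the window ending today."""
    start = today - timedelta(days=window_days)
    cells: Counter = Counter()
    for d in incident_dates:
        if start <= d <= today:
            cells[(f"{d.year:04d}-{d.month:02d}", WEEKDAYS[d.weekday()])] += 1
    return dict(cells)
```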

Conclusion

In conclusion, prioritizing security in software development is crucial for ensuring a secure and efficient codebase. By embracing best practices such as security by design, robust password management, effective access control, error handling and logging, system configuration and patch management, threat modeling, cryptographic practices, input validation and output encoding, authentication and authorization, logging and audit practices, quality assurance and vulnerability management, secure secret management, source code management best practices, hardening CI/CD pipelines, branch protection policies, recommended access controls and permissions, server-level policies, and continuous monitoring and incident response planning, developers can create a secure codebase.

These practices address various aspects of software security, including protecting user data, preventing unauthorized access, detecting and mitigating vulnerabilities, and ensuring the integrity and confidentiality of sensitive information. By incorporating security measures from the early stages of development, developers can build a solid foundation for secure software.

Moreover, staying current on evolving cybersecurity threats and investing in ongoing learning and training are essential for keeping the codebase secure. A proactive approach helps developers prevent many security incidents and minimize the impact of those that do occur.

Overall, prioritizing security in software development not only protects valuable data and resources but also contributes to the overall efficiency and productivity of the development process. By implementing these best practices, developers can create a codebase that is resilient, trustworthy, and capable of withstanding the evolving landscape of cybersecurity threats.

Start implementing best practices and create a resilient and trustworthy codebase with Kodezi!

Frequently Asked Questions

What is 'Security by Design'?

Security by Design refers to integrating security measures into the core framework of software development, rather than treating security as an afterthought. It emphasizes a proactive approach to protection throughout the development lifecycle.

Why is Security by Design important?

In a world where traditional security measures often fall short, embedding security into the development process is crucial for creating robust software infrastructures that can withstand vulnerabilities and cyber threats.

How does Apple demonstrate Security by Design?

Apple's Private Cloud Compute exemplifies this approach by prioritizing proactive safety measures, showcasing a commitment to integrating security into its foundational architecture.

What role do software manufacturers play in security?

Software manufacturers are responsible for customer security outcomes: they should integrate protection by default, adopt secure product development practices, and follow business protocols that prioritize security.

What are RBAC and ABAC in access control?

Role-Based Access Control (RBAC) assigns permissions based on user roles within an organization, allowing flexible access management. Attribute-Based Access Control (ABAC) uses attributes related to users, resources, or the environment to determine access permissions.
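To contrast the two models: RBAC asks which role a user holds, while ABAC evaluates a policy over attributes of the user, the resource, and the environment. In this hypothetical sketch, the attributes and the three rules (same department, sufficient clearance, business hours in UTC) are invented for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Request:
    user_department: str
    user_clearance: int
    resource_owner_department: str
    resource_sensitivity: int
    hour_utc: int  # environment attribute


# Each hypothetical rule is a predicate over attributes; all must hold for access.
Rule = Callable[[Request], bool]
POLICY: List[Rule] = [
    lambda r: r.user_department == r.resource_owner_department,
    lambda r: r.user_clearance >= r.resource_sensitivity,
    lambda r: 8 <= r.hour_utc < 18,  # business hours only
]


def abac_allows(req: Request) -> bool:
    return all(rule(req) for rule in POLICY)
```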

Why is input validation important?

Input validation checks external data against predetermined criteria to prevent injection attacks and unauthorized data exposure, ensuring that only safe inputs are processed by the system.
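As a minimal illustration, allowlist validation describes what valid input looks like and rejects everything else. The username pattern and quantity range below are assumptions chosen for the example.

```python
import re

# Allowlist: describe valid input rather than trying to enumerate bad input.
USERNAME_RE = re.compile(r"[a-z0-9_]{3,32}")


def validate_username(value: str) -> str:
    """Return the username if it matches the allowlist, else raise ValueError."""
    if not USERNAME_RE.fullmatch(value):
        raise ValueError("username must be 3-32 chars of a-z, 0-9 or _")
    return value


def validate_quantity(value: str, lo: int = 1, hi: int = 100) -> int:
    """Parse an integer and enforce a range instead of trusting the client."""
    try:
        qty = int(value)
    except ValueError:
        raise ValueError("quantity must be an integer") from None
    if not lo <= qty <= hi:
        raise ValueError(f"quantity must be between {lo} and {hi}")
    return qty
```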

What is the significance of error handling and logging?

Effective error handling and logging are critical for maintaining application stability and resilience against malicious exploitation. They ensure that errors are managed appropriately and that system activities are recorded for future audits.

How do cryptographic practices contribute to security?

Cryptographic techniques, such as encryption and hashing, protect sensitive information and ensure data integrity. They are essential for safeguarding data against unauthorized access and tampering.
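Here is a short sketch of two of these techniques using only Python's standard library: salted password hashing with PBKDF2-HMAC-SHA256, and an HMAC tag for detecting tampering. The 600,000-iteration count is an assumption based on current OWASP guidance for PBKDF2-SHA256 and should be revisited over time; encryption itself needs a vetted third-party library and is not shown.

```python
import hashlib
import hmac
import os
from typing import Optional, Tuple

ITERATIONS = 600_000  # assumption: revisit as hardware and guidance change


def hash_password(password: str, salt: Optional[bytes] = None) -> Tuple[bytes, bytes]:
    """Return (salt, derived key); a fresh random 16-byte salt is generated when none is given."""
    if salt is None:
        salt = os.urandom(16)
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    _, key = hash_password(password, salt)
    return hmac.compare_digest(key, expected)  # constant-time comparison


def sign(message: bytes, key: bytes) -> bytes:
    """HMAC-SHA256 tag so a receiver holding `key` can detect changes to `message`."""
    return hmac.new(key, message, hashlib.sha256).digest()
```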

What is the role of continuous monitoring in incident response?

Continuous monitoring helps organizations swiftly detect and respond to security incidents. It involves tracking metrics, logs, and events to identify anomalies and execute a predefined incident response plan.

What is vulnerability management?

Vulnerability management refers to the ongoing process of identifying, assessing, and mitigating security vulnerabilities within a software system. It includes methods like code reviews, vulnerability scanning, and systematic testing.

How does secret management enhance security?

Proper management of secrets (e.g., API keys and passwords) involves implementing strict protocols to prevent unauthorized access and ensuring that sensitive information is securely stored and monitored.

What is the Software Bill of Materials (SBOM)?

A Software Bill of Materials (SBOM) is a comprehensive inventory of all components within a software application. It helps organizations understand their software supply chain and manage vulnerabilities effectively.

Why are branch protection policies important?

Branch protection policies prevent unauthorized changes to critical code branches, ensuring that only reviewed and approved code is merged, thereby maintaining the integrity and security of the codebase.

How can organizations improve their incident response strategies?

Organizations can enhance their incident response strategies by establishing clear success criteria, employing a dedicated incident response team, and utilizing monitoring dashboards to maintain situational awareness.

What are the implications of not adopting Security by Design?

Failing to adopt Security by Design can lead to significant vulnerabilities, increased risk of cyberattacks, and potential breaches that could harm organizations' data integrity and reputations.