Introduction

Artificial Intelligence (AI) is revolutionizing how we approach problem-solving and operational efficiency. However, successful AI implementation requires a comprehensive understanding of its requirements and challenges, which range from data management and bias mitigation to regulatory compliance and seamless integration with existing systems. This article delves into the critical aspects of AI development, highlighting the importance of responsible AI principles, security and trustworthiness, and good machine learning practices.

Additionally, it explores the significance of human oversight and ethical considerations, as well as the need for transparency and accountability in AI systems, providing a roadmap for organizations to harness AI's transformative potential effectively.

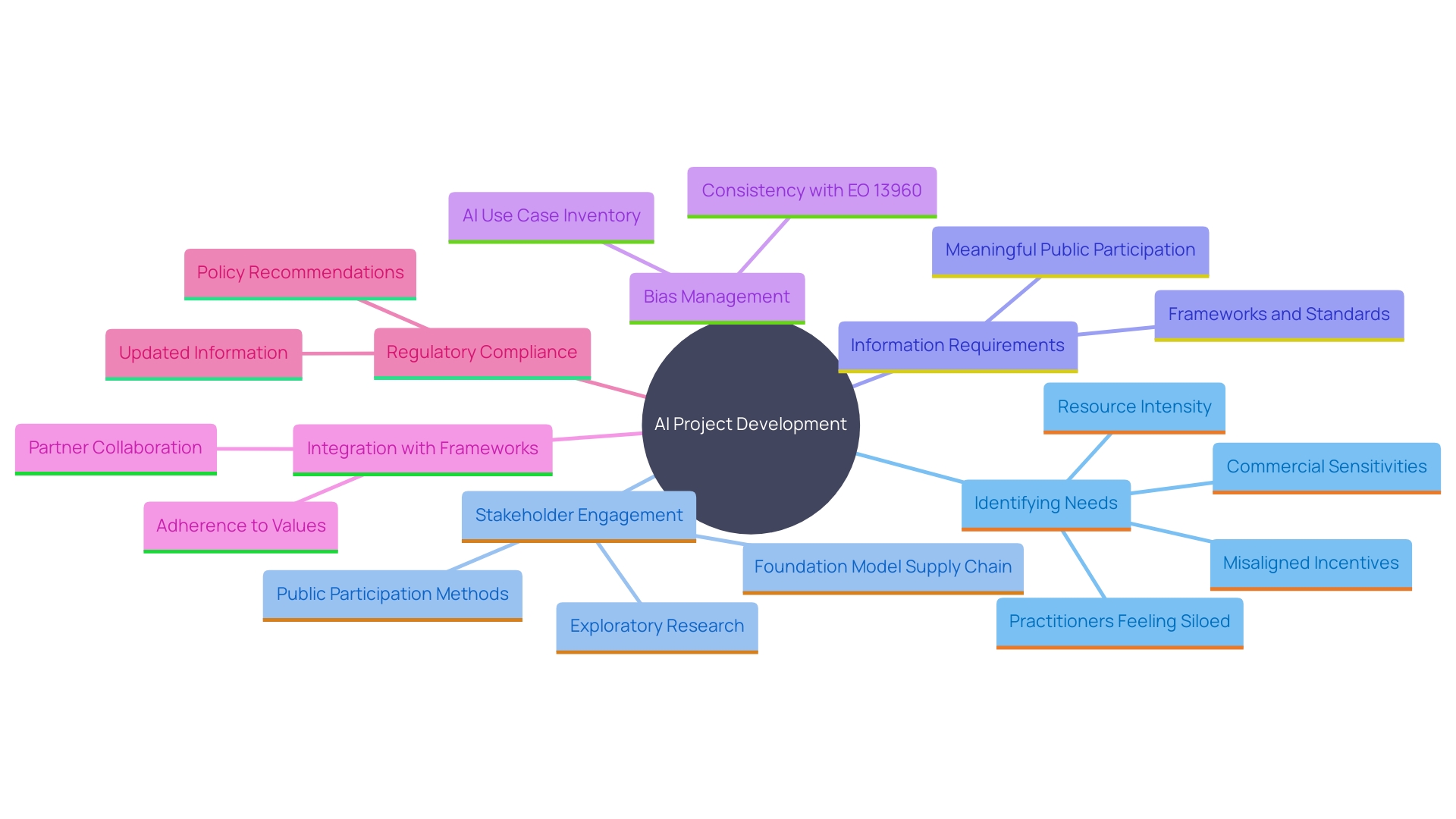

Understanding AI Requirements and Challenges

Identifying the specific needs of your AI project is the cornerstone of successful development. Start by clearly defining the problem you aim to solve and the desired outcomes. This involves gathering input from stakeholders and integrating user feedback into your strategy, ensuring that all perspectives are considered. Understanding the data requirements is crucial: consider the type of data needed, its source, and its accessibility. High-quality data, in both volume and accuracy, is essential to train robust AI models.

One of the biggest challenges in AI development is managing data bias. Diverse and representative data sets can mitigate bias and lead to more equitable outcomes. Moreover, integrating AI with existing systems requires careful planning so that it runs smoothly without interrupting ongoing processes.
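As a rough illustration of checking whether a data set is representative, the sketch below compares each group's share of the records with a reference share and reports the groups that deviate by more than a tolerance. The function name, the `region` attribute, the reference shares, and the 5% tolerance are all hypothetical choices made for this example, not part of any standard.

```python
from collections import Counter


def representation_gaps(records, attribute, reference_shares, tolerance=0.05):
    """Return {group: observed_share - reference_share} for groups whose
    share deviates from the reference by more than `tolerance`."""
    counts = Counter(record[attribute] for record in records)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps


# Hypothetical sample: 60 north, 30 south, 10 east records.
sample = (
    [{"region": "north"}] * 60
    + [{"region": "south"}] * 30
    + [{"region": "east"}] * 10
)
# Assumed reference distribution (for example, from census figures).
reference = {"north": 0.5, "south": 0.3, "east": 0.2}

gaps = representation_gaps(sample, "region", reference)
print(gaps)
```

A positive gap means the group is over-represented relative to the reference, and a negative gap means it is under-represented. A check like this only covers representation; it does not by itself show that a model trained on the data treats groups equitably.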

Evolving regulations are another critical factor to consider. Keeping abreast of the latest AI policies and governance frameworks is essential to ensure compliance and avoid legal pitfalls. The Foundation Model Transparency Index, for instance, evaluates companies on various aspects of transparency, highlighting the importance of clear and ethical AI practices.

Workshops and training sessions, like those conducted by project teams for Health Economics and Safety meetings, are invaluable. They provide insights into AI systems, user training, and governance requirements, contributing to a well-rounded understanding of AI deployment. For example, a pharmaceutical company achieved a 75% increase in operational efficiency by leveraging AI, underscoring the transformative potential of well-implemented AI solutions.

In summary, a comprehensive approach that includes stakeholder engagement, meticulous data management, addressing bias, seamless integration, and adherence to evolving regulations is imperative for the successful development and deployment of AI technologies.

Responsible AI (RAI) Principles and Best Practices

Implementing Responsible AI principles is vital for building trust and ensuring the ethical use of AI technologies. This means ensuring fairness, accountability, and transparency in AI systems. For instance, Azure Machine Learning's Responsible AI dashboard provides tools to assess model fairness across sensitive groups such as gender, ethnicity, and age. Regular audits should assess how AI systems perform across demographic groups and how they affect them. Incorporating diverse perspectives during development can mitigate biases and enhance inclusivity. To build trust, AI systems must function reliably, safely, and consistently, responding appropriately to unexpected conditions and resisting harmful manipulation. The Center for AI Safety emphasizes the need for research and regulation to reduce societal-scale risks from AI, underscoring the importance of these principles in fostering a trustworthy AI ecosystem.
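To make the idea of assessing fairness across sensitive groups more concrete, here is a minimal sketch of one common metric, the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. This is a hand-written illustration with hypothetical data, not the API of the Azure dashboard or of any particular library.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (== 1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for prediction, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(prediction == 1)
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_difference(predictions, groups):
    """Largest gap in selection rate between any two groups (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs for two groups, "a" and "b".
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_difference(preds, groups)
print(rates, gap)
```

Demographic parity is only one of several fairness definitions, and which one is appropriate depends on the use case; an audit would usually look at more than a single number.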

Ensuring AI System Security and Trustworthiness

Security vulnerabilities in AI technologies can have far-reaching consequences, potentially exposing sensitive data to unauthorized access and tampering. To protect these systems, it is essential to establish strong security measures. Regularly updating security controls and carrying out penetration testing helps identify and reduce potential vulnerabilities. As stated by the Cloud Security Alliance, careful scrutiny and safeguards are essential when dealing with machine learning models from untrusted sources.
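One simple safeguard when handling model files from outside sources is to verify the file against a checksum published by the provider before loading it. The sketch below uses only Python's standard `hashlib` and `hmac` modules; the file name, its contents, and the helper names are hypothetical. A matching checksum only shows the file was not altered in transit. It does not show that the model itself is safe.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=65536):
    """Hash a file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def matches_published_checksum(path, expected_sha256):
    """Compare the file's hash with the checksum the provider published."""
    return hmac.compare_digest(sha256_of_file(path), expected_sha256.lower())


# Demo with a stand-in file; a real check would use the downloaded artifact.
with tempfile.TemporaryDirectory() as tmp:
    artifact = Path(tmp) / "model.bin"
    artifact.write_bytes(b"example model weights")
    published = hashlib.sha256(b"example model weights").hexdigest()

    ok = matches_published_checksum(artifact, published)
    tampered = matches_published_checksum(artifact, "0" * 64)

print(ok, tampered)
```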

A notable illustration of why security matters in AI is the detection and analysis of safety properties in LLM-generated code. Researchers have developed methods to automatically identify weaknesses, uncovering common problems such as API misuse that could pose considerable security risks. As noted by Apostol Vassilev, a computer scientist at NIST, current mitigation strategies lack robust assurances, and the community is encouraged to develop better defenses.

Transparency in AI decision-making processes is also vital for fostering trust among users and stakeholders. This is particularly important as AI systems become more integrated into various aspects of society, from autonomous vehicles to medical diagnostics. Making sure that AI algorithms are free from bias is essential; 65% of professionals in the field think regular evaluations are important to avoid biased decision-making. In a survey, 37% of organizations reported using AI-powered endpoint protection solutions, highlighting the growing reliance on AI in cybersecurity.

In summary, safeguarding AI technologies from security weaknesses requires a multi-faceted approach, including robust security protocols, regular updates, and transparency in decision-making processes. By addressing these aspects, we can create more secure and trustworthy AI solutions.

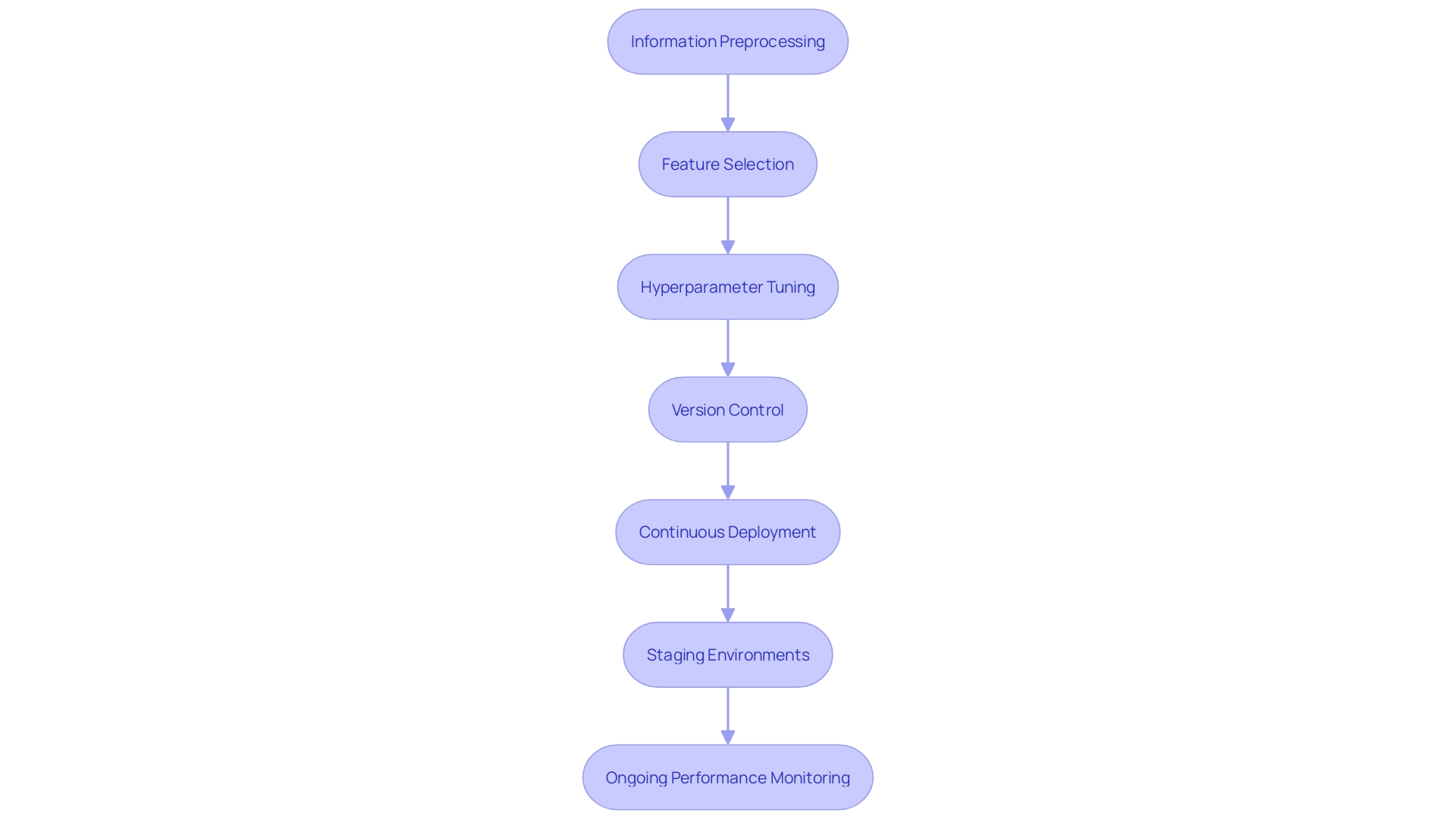

Good Machine Learning Practices for AI Development

Adopting best practices in machine learning can significantly enhance the efficiency and effectiveness of AI systems. Key practices include data preprocessing, feature selection, and hyperparameter tuning. For instance, Aviva, a leading insurance company, has implemented machine learning across more than 70 use cases. Previously, its analytics experts devoted more than 50% of their time to operational tasks because of manual deployment procedures. By adopting advanced MLOps practices, the company streamlined the training and deployment of algorithms, leading to more innovation and improved performance monitoring.
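As a toy illustration of two of the practices named above, the sketch below applies min-max scaling as a preprocessing step and then runs a small grid search to tune a single hyperparameter, the decision threshold of a one-feature classifier. The data, function names, and candidate values are hypothetical. For brevity the candidates are scored on the same six points; a real workflow would score them on held-out validation data.

```python
def min_max_scale(values):
    """Rescale values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def accuracy(threshold, features, labels):
    """Accuracy of the rule 'predict 1 when feature >= threshold'."""
    predictions = [int(x >= threshold) for x in features]
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def grid_search(candidates, features, labels):
    """Try each candidate threshold and return (best_threshold, best_score)."""
    scored = [(accuracy(t, features, labels), t) for t in candidates]
    best_score, best_threshold = max(scored, key=lambda pair: pair[0])
    return best_threshold, best_score


# Hypothetical raw feature values and binary labels.
raw = [10, 20, 30, 40, 50, 60]
labels = [0, 0, 0, 1, 1, 1]

scaled = min_max_scale(raw)
best_threshold, best_score = grid_search([0.25, 0.5, 0.75], scaled, labels)
print(best_threshold, best_score)
```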

Version control for models and data is essential for tracking changes and ensuring reproducibility. Continuous deployment is another crucial practice, automatically releasing the latest, most effective model versions to production. Additionally, staging or shadow deployment environments mimic real-world conditions, allowing for thorough testing before full deployment.
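A shadow deployment can be sketched as follows: every request is answered by the production model, while a candidate model sees the same request and its output is only logged for comparison. The two threshold "models" and the traffic values here are hypothetical stand-ins for real models and real requests.

```python
def shadow_compare(requests, production_model, candidate_model):
    """Run both models on each request; only the production output would be
    served. Return the disagreement rate and the disagreeing cases."""
    disagreements = []
    for request in requests:
        served = production_model(request)   # what the user receives
        shadow = candidate_model(request)    # logged only, never served
        if served != shadow:
            disagreements.append((request, served, shadow))
    rate = len(disagreements) / len(requests) if requests else 0.0
    return rate, disagreements


def production(score):
    """Stand-in for the model currently in production."""
    return int(score >= 0.5)


def candidate(score):
    """Stand-in for the new model under evaluation."""
    return int(score >= 0.4)


traffic = [0.1, 0.45, 0.5, 0.9]
rate, diffs = shadow_compare(traffic, production, candidate)
print(rate, diffs)
```

Reviewing the disagreeing cases before promoting the candidate gives a view of how behavior would change on real traffic without exposing users to the new model.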

Continuous performance monitoring after deployment is essential. This practice enables real-time improvements and ensures models remain effective over time. By following these best practices, organizations can optimize their machine learning workflows, driving innovation and maintaining a competitive edge.
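One lightweight way to monitor a deployed model, assuming ground-truth labels eventually become available, is to track accuracy over a rolling window of recent predictions and raise a flag when it drops below a threshold. The class name, window size, and threshold below are hypothetical choices for illustration.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Track accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # old results fall off the end
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_attention(self):
        """True once the window is full and accuracy is below the threshold."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy < self.threshold


monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
for prediction, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(prediction, actual)

print(monitor.accuracy, monitor.needs_attention())
```

Waiting for a full window before alerting avoids false alarms from the first few predictions, at the cost of reacting more slowly right after deployment.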

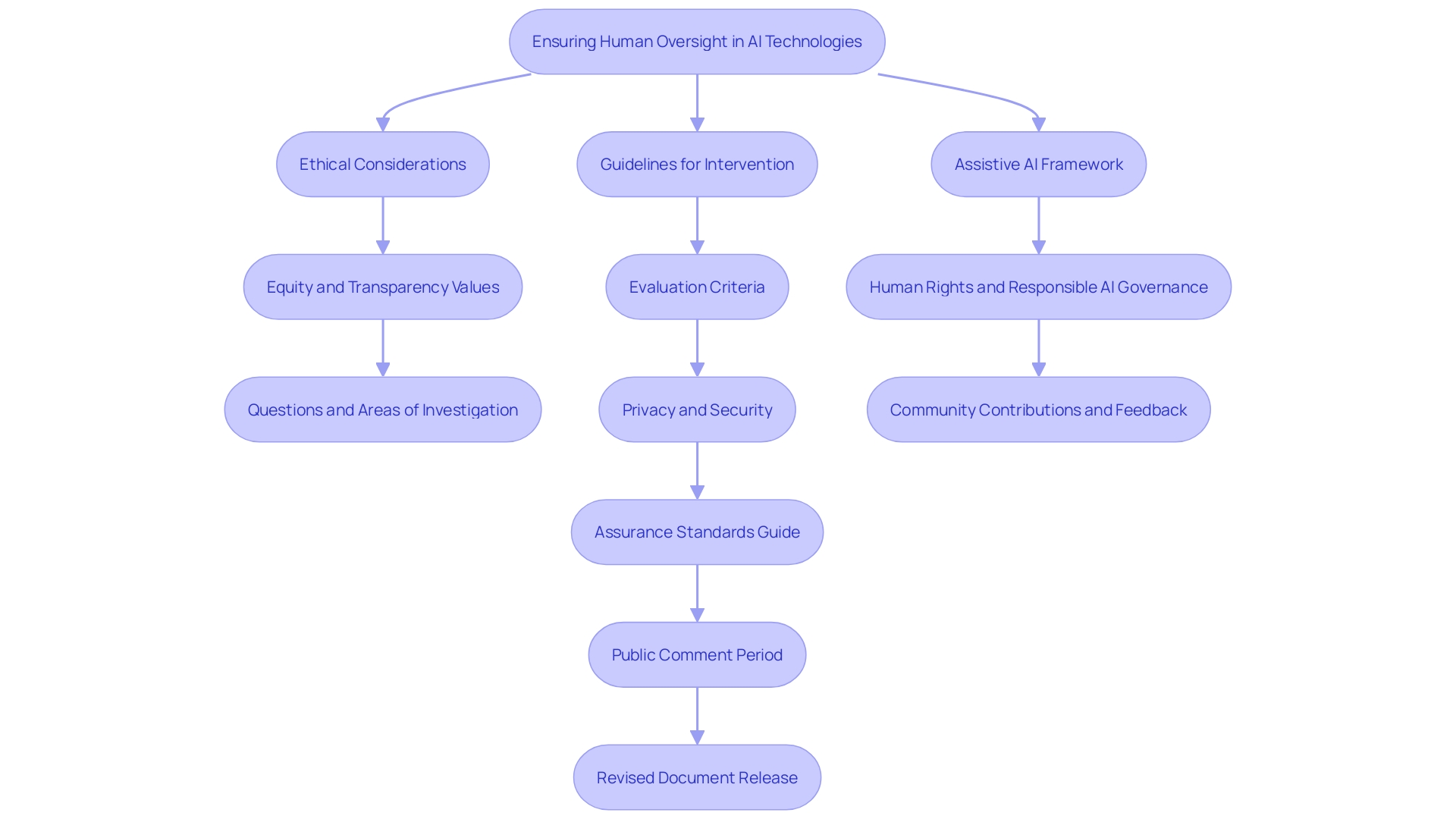

Human Oversight and Ethical Considerations in AI

While AI technologies can automate many processes, human oversight remains essential. Establishing clear guidelines for human intervention in decision-making processes is particularly crucial in high-stakes environments. For example, in military applications, AI must never independently choose to end a human life, as highlighted by Pope Francis, who advocated for increased human oversight over autonomous weapons. Ethical considerations must be integrated into every phase of AI development to ensure solutions uphold human rights and dignity. The international human rights framework provides a rare consensus, making it a pillar for responsible AI governance strategies. Additionally, the implementation of the Assistive AI framework, which focuses on enhancing human decision-making capabilities, underscores the importance of privacy, accountability, and credibility. To establish confidence in AI technologies, safety cases, a tool already prevalent in sectors such as aviation and nuclear power, can be adapted to demonstrate AI safety and dependability in different operational contexts.
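Guidelines for human intervention can be encoded as an explicit routing rule. The sketch below sends a decision to human review whenever it is flagged as high-stakes or the model's confidence falls below a minimum. The `Decision` fields, the labels, and the 0.9 cut-off are hypothetical and would need to be set by the organization's own policy.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    label: str
    confidence: float        # model's confidence in `label`, from 0 to 1
    high_stakes: bool = False


def route(decision, min_confidence=0.9):
    """Return 'human_review' for high-stakes or low-confidence decisions,
    otherwise 'automated'."""
    if decision.high_stakes or decision.confidence < min_confidence:
        return "human_review"
    return "automated"


routine = route(Decision("approve", 0.97))
uncertain = route(Decision("approve", 0.62))
critical = route(Decision("deny", 0.99, high_stakes=True))
print(routine, uncertain, critical)
```

Note that a high-stakes decision goes to a person regardless of how confident the model is, which reflects the principle that some choices should never be fully automated.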

Implementing AI with Transparency and Accountability

Transparency in AI systems is essential for building trust and acceptance among users. By documenting all aspects of the AI development process, including data sources and algorithm choices, stakeholders can gain a clearer understanding of how decisions are made. This transparency is supported by initiatives like AI Impact Assessments (AI-IAs), which are increasingly adopted by companies to align with frameworks such as the NIST AI Risk Management Framework and OSTP's Blueprint for an AI Bill of Rights. For example, Salesforce openly publishes its AI-IA results, which helps build trust with investors, regulators, and the public.
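Documentation of data sources and algorithm choices is easier to keep current when it is stored as structured data alongside the model. The sketch below shows one possible shape for such a record, serialized to JSON. The field names and example values are hypothetical and do not follow any particular AI-IA template.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """A minimal, machine-readable description of a deployed model."""
    name: str
    version: str
    intended_use: str
    algorithm: str
    data_sources: list = field(default_factory=list)
    known_limitations: list = field(default_factory=list)


record = ModelRecord(
    name="claims-triage",  # hypothetical model name
    version="1.2.0",
    intended_use="Prioritize incoming claims for manual handling",
    algorithm="gradient-boosted trees",
    data_sources=["internal claims history", "public postcode data"],
    known_limitations=["not validated for commercial policies"],
)

# sort_keys gives stable output, which keeps version-control diffs readable.
document = json.dumps(asdict(record), indent=2, sort_keys=True)
print(document)
```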

Establishing accountability frameworks is another key step in ensuring that all stakeholders are responsible for the outcomes of AI implementations. Federal agencies are encouraged to collaborate with stakeholders to apply existing liability rules and standards to AI technologies, and to supplement them as needed. This involves investing in technical infrastructure, AI access tools, and personnel to support independent evaluations of AI technologies. For instance, the federal government is urged to support datasets that test for equity and efficacy, as well as the computing infrastructure necessary for rigorous evaluations.

Moreover, transparency in data usage not only enhances the quality of AI models by ensuring they are trained on accurate and unbiased data, but also facilitates compliance with regulations, thereby reducing the risk of legal issues. Transparent AI systems are easier to scrutinize, making it simpler to identify and fix vulnerabilities. This approach is essential in creating a culture of responsibility and ensuring that AI systems are both effective and trustworthy.

Conclusion

The exploration of AI development underscores key requirements and challenges essential for successful implementation. Clear project objectives, a solid understanding of data needs, and stakeholder engagement are foundational to effective AI initiatives. Addressing data bias, ensuring seamless integration, and adhering to evolving regulations are critical steps that can lead to significant operational efficiencies.

The adoption of Responsible AI principles is crucial for building trust within the AI ecosystem. Focusing on fairness, accountability, and transparency enhances the inclusivity and reliability of AI systems. Regular audits and diverse perspectives during development not only mitigate risks but also align with societal expectations for ethical technology use.

Security and trustworthiness are non-negotiable in AI deployment. Robust security protocols, regular updates, and transparency in decision-making processes are vital for protecting sensitive data and fostering user confidence. As AI becomes increasingly integrated into various sectors, prioritizing security measures is essential to safeguard against vulnerabilities.

Moreover, implementing best practices in machine learning and ensuring human oversight optimize AI effectiveness while respecting human rights. Streamlined workflows and continuous monitoring help maintain innovation and competitive advantage.

Ultimately, fostering transparency and accountability is essential for responsible AI implementation. Documenting the development process and establishing clear accountability frameworks enhance compliance with regulations and contribute to a culture of responsibility. This comprehensive approach not only improves AI model quality but also drives meaningful advancements across industries, unlocking the transformative potential of AI technology.

Frequently Asked Questions

What is the first step in developing a successful AI project?

The first step is to clearly define the problem you aim to solve and the desired outcomes. This should involve gathering input from stakeholders and integrating user feedback into your strategy.

Why is stakeholder engagement important in AI development?

Stakeholder engagement ensures that all perspectives are considered, leading to a more comprehensive understanding of the project requirements and desired outcomes.

What role does data quality play in AI projects?

High-quality data, in both volume and accuracy, is essential for training robust AI models. Understanding data requirements, sources, and accessibility is crucial.

How can data bias affect AI development?

Data bias can lead to inequitable outcomes. Ensuring diverse and representative data sets helps mitigate bias and improves the fairness of AI systems.

What preparations are necessary for integrating AI with existing systems?

Careful planning is needed so that AI systems run smoothly without interrupting ongoing processes.

Why is it important to keep up with evolving AI regulations?

Staying updated on AI policies and governance frameworks is essential for ensuring compliance and avoiding legal pitfalls.

What is the Foundation Model Transparency Index?

It evaluates companies on various aspects of transparency, underscoring the importance of clear and ethical AI practices.

How can workshops and training sessions help in AI deployment?

Workshops provide insights into AI systems, user training, and governance requirements, contributing to a well-rounded understanding of AI deployment.

What best practices can enhance the efficiency of machine learning systems?

Key practices include data preprocessing, feature selection, hyperparameter tuning, version control, continuous deployment, and ongoing performance monitoring.

What is the importance of human oversight in AI decision-making?

Human oversight is crucial, particularly in high-stakes environments, to ensure ethical considerations are upheld and decisions align with human rights.

How can transparency in AI systems build trust?

Documenting all aspects of the AI development process helps stakeholders understand decision-making, which builds trust and acceptance among users.

What are AI Impact Assessments (AI-IAs)?

AI-IAs are assessments that companies conduct to evaluate the impact of their AI technologies, helping them align with frameworks like the NIST AI Risk Management Framework.

Why is establishing accountability frameworks essential?

Accountability frameworks ensure that all stakeholders are responsible for the outcomes of AI implementations, promoting responsible governance.

How does transparency in data usage benefit AI development?

Transparency in data usage enhances the quality of AI models, facilitates compliance with regulations, and reduces the risk of legal issues.

What is the significance of security measures in AI technologies?

Strong security measures protect against vulnerabilities that can expose sensitive data. Regular updates and penetration testing are essential for maintaining security.