Introduction

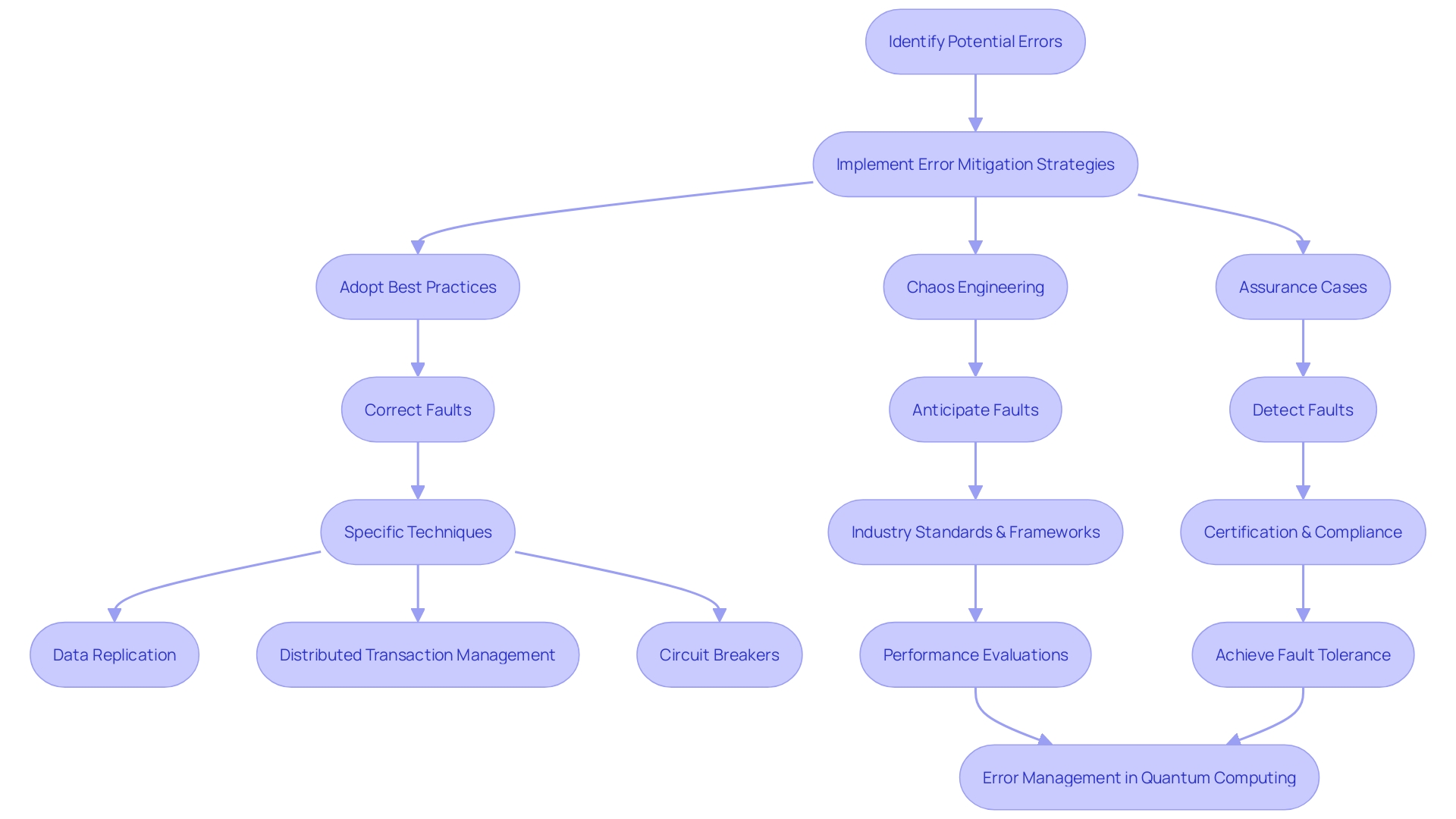

Error mitigation techniques play a crucial role in keeping systems reliable and performing smoothly. From chaos engineering to assurance cases, these strategies are designed to anticipate, detect, and correct faults before they escalate into significant system failures. In critical areas like autonomous systems and AI-based applications, robust assurance cases become particularly important.

Industry standards and frameworks help certify systems and demonstrate compliance with regulations, fortifying trust in system dependability. Best practices in error mitigation include detailed documentation of potential attacks and their countermeasures, coupled with real-time system monitoring. The resilience of microservices relies on self-preservation and consistent performance, while performance evaluations enhance system quality and developers' ability to create better systems.

The field of quantum computing shows the critical need for effective error mitigation techniques to realize its potential advantages. In this article, we will explore the different types of error mitigation techniques, analyze their strengths, and examine the factors influencing their choice. Through a comparative analysis, we aim to provide insights into the best practices for implementing error mitigation and discuss the future directions and challenges in this field.

Overview of Error Mitigation Techniques

Error mitigation techniques play a crucial role in ensuring the dependability and seamless operation of systems. They encompass a variety of strategies, from chaos engineering to assurance cases, designed to anticipate, detect, and correct faults before they escalate into significant failures. Chaos engineering, for example, goes beyond ordinary testing by following a methodical cycle: form a hypothesis about how the system behaves under stress, run a controlled experiment, observe, and analyze the result. It is not about creating mayhem but about learning how to prevent it through carefully planned experiments that complement traditional testing methods.

In critical domains such as autonomous technology and AI-driven applications, assurance cases become especially important. Industry standards such as ISO 26262 for automotive safety, FDA guidelines for medical devices, and the Object Management Group's Structured Assurance Case Metamodel (SACM) for structuring assurance arguments reflect the growing need for robust assurance cases. These frameworks help certify systems and demonstrate compliance with regulations, thereby strengthening trust in system dependability.

Best practices in error mitigation include detailed documentation of potential attacks and their countermeasures, as well as concise, technology-specific guidance for platforms such as Node.js. Strategies like data replication and distributed transaction management, combined with ongoing real-time monitoring, can greatly reduce the risk of data loss and help keep critical business processes running without interruption.

The resilience of microservices, for instance, relies on self-preservation and consistent performance, even under duress. Techniques like circuit breakers prevent cascading failures by no longer sending requests to a failing service, thereby maintaining the integrity of the overall system.
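The circuit-breaker idea can be sketched in a few lines of Python. This is a minimal illustration, not a production library: the class name, the threshold and timeout defaults, and the injectable `clock` parameter are all assumptions made for the example.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker is open and the call is skipped."""


class CircuitBreaker:
    """Minimal breaker: closed, open after repeated failures, half-open after a cooldown."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold  # assumed default
        self.reset_timeout = reset_timeout          # seconds, assumed default
        self.clock = clock                          # injectable so tests can control time
        self.failures = 0
        self.opened_at = None                       # None means the circuit is closed

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                # Still cooling down: fail fast without touching the dependency.
                raise CircuitOpenError("circuit open; request not sent")
            # Cooldown elapsed: half-open, so one trial call is allowed through.
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()       # open (or re-open) the circuit
            raise
        # A success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```

Callers wrap each request as `breaker.call(fetch_profile, user_id)` (where `fetch_profile` is a hypothetical function) and treat `CircuitOpenError` as a signal to degrade gracefully rather than wait on a dependency that is already known to be failing.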

Academic research and industry practice both emphasize the importance of comprehensive performance evaluations for understanding a system's behavior and limits in depth. Performance evaluations not only improve the quality of the system at hand but also improve developers' ability to build better systems in the future.

As Leslie Lamport put it, "A distributed system is one in which the failure of a computer you didn't even know existed can render your own computer unusable." This underscores the significance of fault tolerance in distributed systems: ensuring uninterrupted operation despite component failures. The goal is to achieve a level of resilience that safeguards against disruptions, from minor server issues to major data center outages.

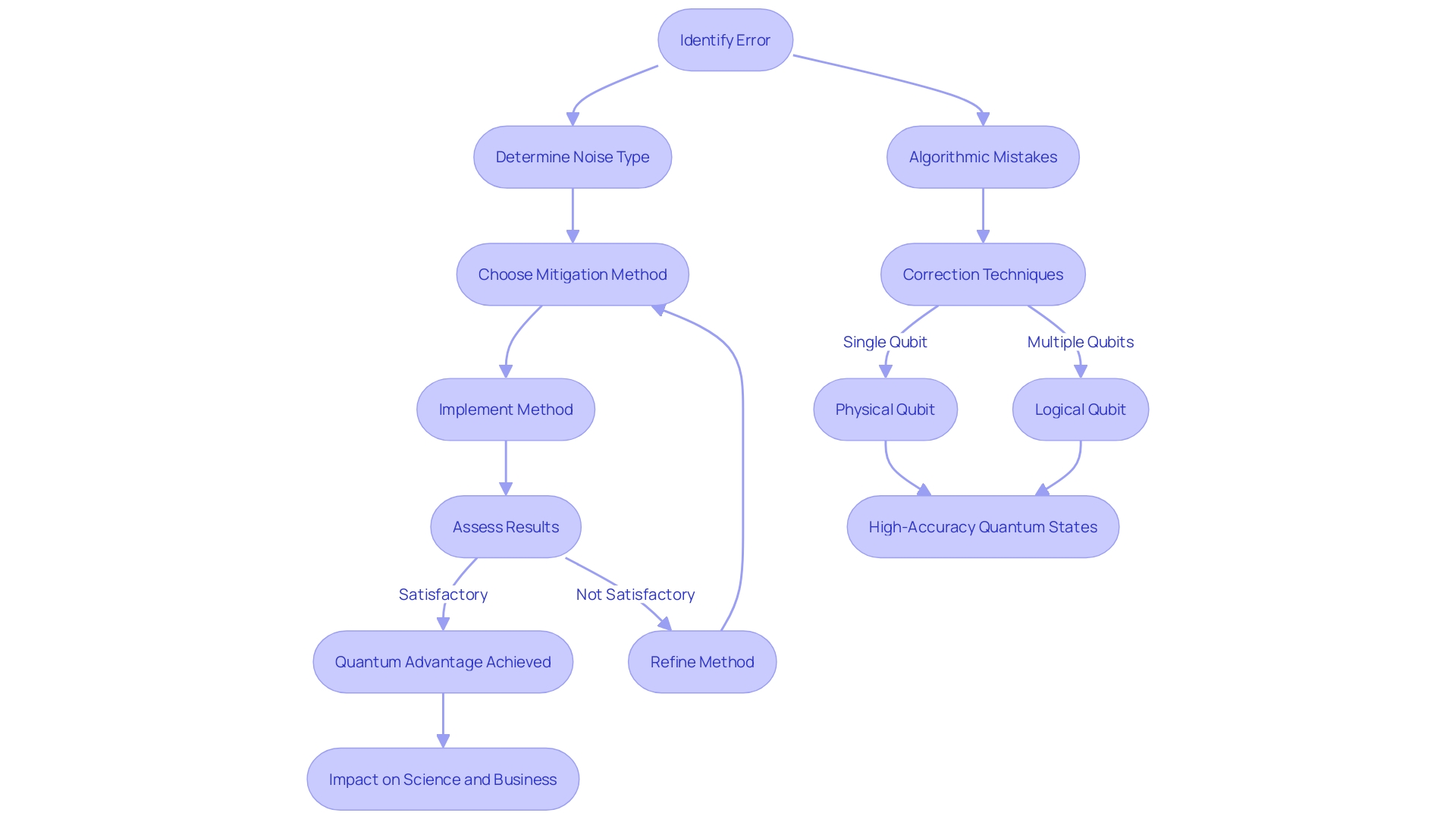

Statistical analyses reveal the various types of noise that can affect systems, including algorithmic errors, and guide the choice of suitable mitigation approaches. The continuing progress in quantum computing, which is highly sensitive to errors and noise, highlights the need for effective error mitigation methods if the technology is to deliver its potential benefits for science and business.

Types of Error Mitigation Techniques

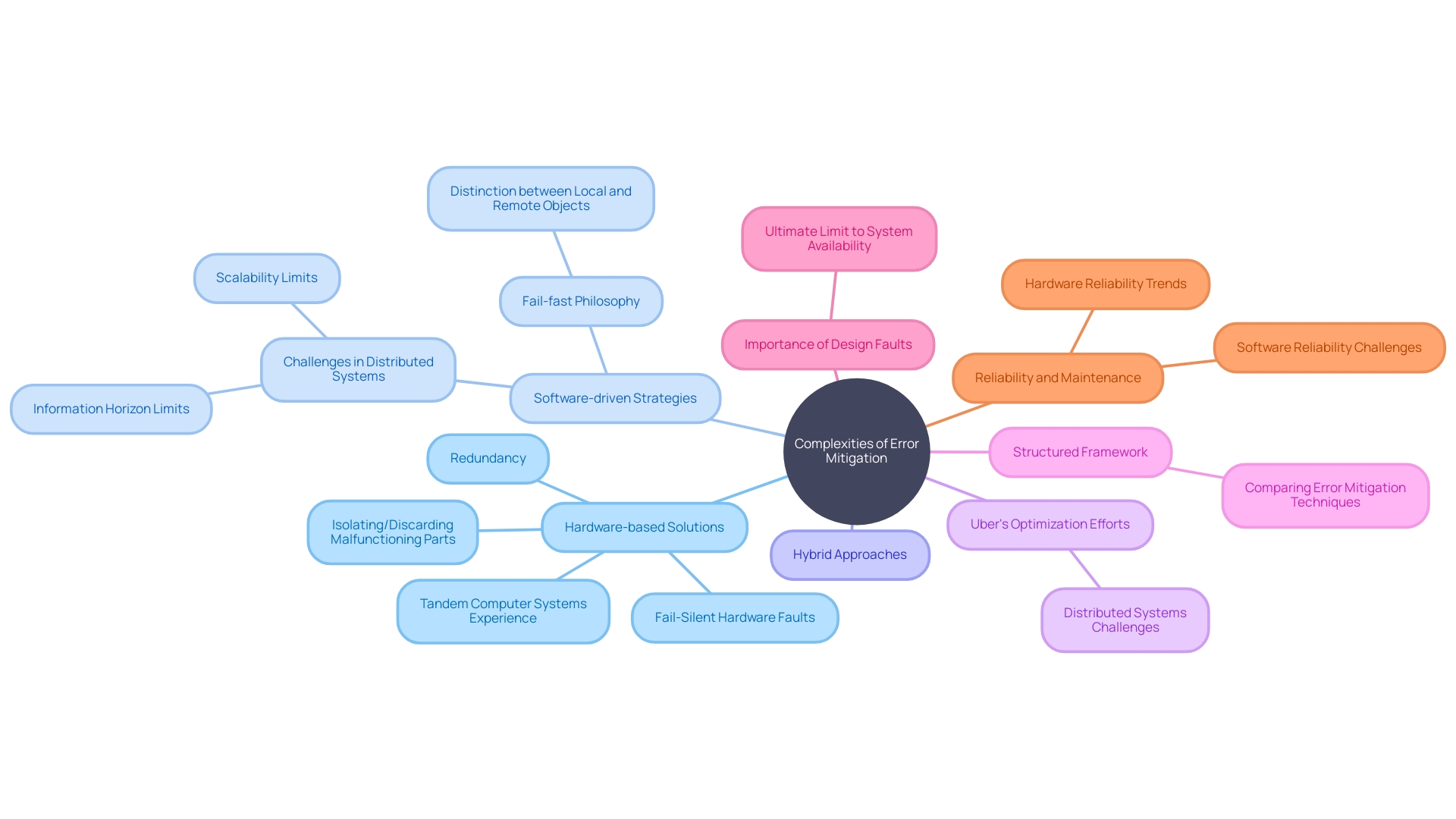

Addressing errors is a complex field that demands a nuanced strategy tailored to the particular difficulties of each situation. Techniques range from hardware-based solutions to software-driven strategies, or a blend of both, known as hybrid approaches. In practice, Uber's efforts to optimize its multi-tenant platform demonstrate the complexities involved. In aiming to improve CPU usage and reduce wasted resources, Uber encountered a web of interconnected challenges, which prompted a thorough assessment of its processes. Similarly, recent academic research reviewing 220 articles published between 2019 and 2024 offers a structured framework for comparing these error mitigation techniques, highlighting their strengths and potential synergies. This framework is useful for setting future research directions and tackling open challenges in the field.

In industry, effective performance evaluation is crucial for sustaining high performance throughout a product's lifecycle. It provides insight into a system's behavior, its limitations, and the underlying issues that must be addressed to improve performance. A well-executed performance evaluation can reveal properties of the system that were not previously apparent, leading to better design and implementation of future systems.

Furthermore, quantum computing highlights the importance of matching mitigation methods to the prevailing forms of noise, such as algorithmic errors. The susceptibility of quantum systems to errors is a substantial obstacle that limits the duration and size of computations. Progress in this area could lead to quantum advantage in both scientific and commercial applications, as discussed in recent research.

The data center industry also recognizes the importance of error mitigation as it works to build scalable infrastructure. News from the sector shows an increasing focus on semiconductor technology, with major acquisitions shaping the market and U.S. government investment aimed at bolstering the domestic chip industry. Error mitigation matters here because it directly affects the efficiency and scalability of data center operations, a crucial factor for future growth.

Case Study: Applying Error Mitigation Techniques on NISQ Devices

Quantum computing promises to solve certain complex problems at speeds out of reach for classical computers. However, realizing this potential depends on overcoming quantum systems' sensitivity to errors caused by interactions with their environment. Researchers have identified the main categories of noise, such as algorithmic errors, that affect Noisy Intermediate-Scale Quantum (NISQ) devices, laying the groundwork for choosing suitable mitigation methods.

Research by Postler, Egan, and their colleagues has highlighted that error correction is not a one-size-fits-all remedy. The choice of technique must match the specific noise that characterizes the quantum system. The field is full of open problems, but there is optimism about devices that can use error mitigation to deliver quantum advantage, with impact on both science and industry.

Advances in quantum error correction are crucial, as the distinction between physical and logical qubits shows. Physical qubits are the actual hardware components, prone to decoherence and operational errors. Logical qubits, by contrast, are encoded in groups of physical qubits working together according to the principles of quantum error correction. Achieving a stable, high-fidelity logical qubit requires passing the break-even threshold, where error correction brings the error rate below a critical point.

The evolution of quantum computers is evident in the shift from using single physical qubits to using multiple physical qubits to encode one unit of information, forming a 'logical' qubit. This approach improves robustness and reduces the likelihood that information is corrupted by unwanted state changes. Google's quantum team has been at the forefront, experimenting with superconducting circuits housed in ultra-cold environments to construct logical qubits. Such developments are instrumental in pushing the boundaries of what quantum computers can achieve despite the inherent challenges of errors and noise.
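Why redundancy helps only below a certain error rate can be illustrated with a purely classical analogy: a repetition code decoded by majority vote. This is an assumption-laden sketch rather than a model of real quantum error correction, which must also handle phase errors and cannot simply copy or read out qubits. The function names are hypothetical, and errors are assumed to be independent with the same probability `p` on every copy.

```python
from math import comb


def logical_error_rate(p, n=3):
    """Probability that a majority vote over n independent copies is wrong,
    when each copy flips with probability p (n must be odd)."""
    if n % 2 == 0:
        raise ValueError("n must be odd so the majority vote cannot tie")
    majority = n // 2 + 1
    # The vote fails when at least a majority of the copies are flipped.
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(majority, n + 1))


def majority_vote(bits):
    """Decode a repetition-code block by majority vote."""
    return int(sum(bits) > len(bits) / 2)
```

In this toy model, three copies with `p = 0.01` give a logical error rate of about 0.0003, well below the physical rate, while for `p` above 0.5 the encoded bit is less reliable than a single copy. The crossover between those regimes plays the role of the break-even point in the analogy.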

Comparative Analysis of Error Mitigation Methods

Comparative analysis is an essential tool for developers to select the optimal method for managing issues in their projects. By examining various techniques, we understand their strengths and how they can be applied to different scenarios. This is especially important in a field as dynamic and evolving as software development, where new challenges and technologies emerge regularly.

Understanding the difference between compile-time and runtime errors is the first step toward robust error mitigation. TypeScript, for example, is designed to catch many errors at compile time thanks to its static type system. Runtime errors, however, such as those triggered by external system failures or unexpected user input, can only be detected while the application is running.

Effective error handling in TypeScript and other programming languages often relies on try-catch blocks and other language-specific mechanisms. These tools let developers anticipate possible errors and handle them properly, helping applications stay secure and dependable. The same principle applies well beyond application code: companies such as D-Wave stress the importance of resilient error management in advancing quantum computing technology.
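The runtime side of this can be sketched briefly. The example below uses Python's `try`/`except` rather than TypeScript's `try`/`catch`, but the pattern is the same: validate untrusted input, convert low-level failures into a domain-specific error, and handle that error at the boundary. The names `InvalidQuantityError`, `parse_quantity`, and `handle_request` are hypothetical.

```python
class InvalidQuantityError(ValueError):
    """Domain-specific error for bad user input (hypothetical name)."""


def parse_quantity(raw):
    """Convert user input to a positive integer or raise InvalidQuantityError."""
    try:
        value = int(raw.strip())
    except (ValueError, AttributeError) as exc:
        # ValueError: not a whole number; AttributeError: input was not a string.
        raise InvalidQuantityError(f"not a whole number: {raw!r}") from exc
    if value <= 0:
        raise InvalidQuantityError(f"quantity must be positive, got {value}")
    return value


def handle_request(raw):
    """Boundary handler: report failures in a structured response instead of crashing."""
    try:
        return {"ok": True, "quantity": parse_quantity(raw)}
    except InvalidQuantityError as exc:
        return {"ok": False, "error": str(exc)}
```

Catching only the specific error type at the boundary keeps unexpected failures visible instead of silently swallowing them.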

The observations of experienced practitioners make it clear that error handling has no one-size-fits-all solution. It requires careful consideration of the project's context and a deep understanding of the underlying system. Years of practice in distributed systems design show that while certain principles apply universally, the implementation of error handling must be tailored to the specific needs of each system.

Overall, choosing the appropriate error mitigation method is a difficult decision that requires weighing multiple aspects, such as the type of errors, the particular needs of the application, and the development environment. By learning from the successes and challenges of industry leaders, developers can equip themselves to make informed decisions and write more resilient code.

Factors Influencing the Choice of Error Mitigation Techniques

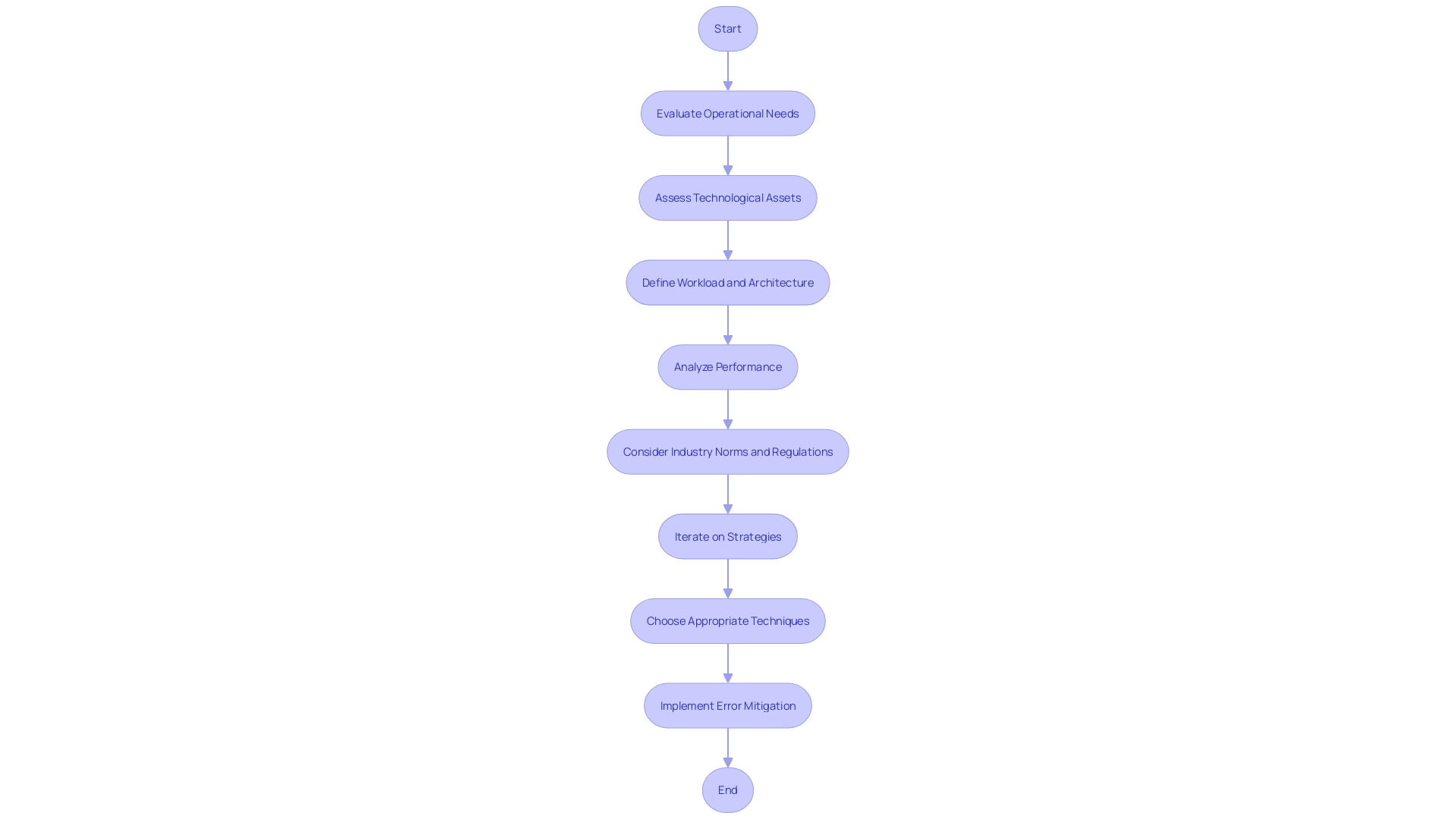

Choosing the appropriate error mitigation techniques for your system is a complex task. It requires a careful evaluation of your particular operational needs, the technology available to you, and the kinds of errors you expect. The process is not unlike designing a multi-die system. You begin by defining the workload and architecture: what applications will run, what the non-functional requirements are (such as latency and throughput), and what the power and performance properties of the hardware components are. This detailed analysis forms a blueprint for your error mitigation strategy.

For example, in autonomous technologies and AI-based solutions, such as autonomous vehicles and advanced healthcare systems, industry standards including ISO 26262 for automotive safety and FDA guidelines for medical devices emphasize the importance of building strong assurance arguments. Following them not only supports regulatory compliance but also serves as a key element in designing reliable, fault-tolerant systems.

Performance evaluation is another crucial factor in selecting error mitigation methods. A thorough performance assessment analyzes the behavior of the system, identifying its limits and the areas most in need of improvement. This depth of understanding not only improves the current system but also sharpens the developer's skills, paving the way for better systems in the future.

Moreover, empirical studies of software maintenance have highlighted the complexity of managing software across its lifecycle, particularly in the maintenance phase. This indicates that error mitigation strategies cannot be one-size-fits-all. They require an iterative approach, much like the 'engineering' process described for training deep learning models. The objective is to align the error mitigation strategy with the project's broader requirements and values, such as safety, so that the implementation is both purposeful and efficient.

In summary, choosing the appropriate error-handling techniques is similar to climbing two mountains at once, an idea known in software engineering as the Twin Peaks model. It is an ongoing process of experimentation that requires a balance between implementing practical solutions and reflecting on the broader goals and principles of the system. By adopting an approach guided by industry standards, performance assessments, and empirical evaluations, your error mitigation efforts can lead to a noticeable decrease in common defects and a system that is more dependable, secure, and efficient.

Best Practices for Implementing Error Mitigation

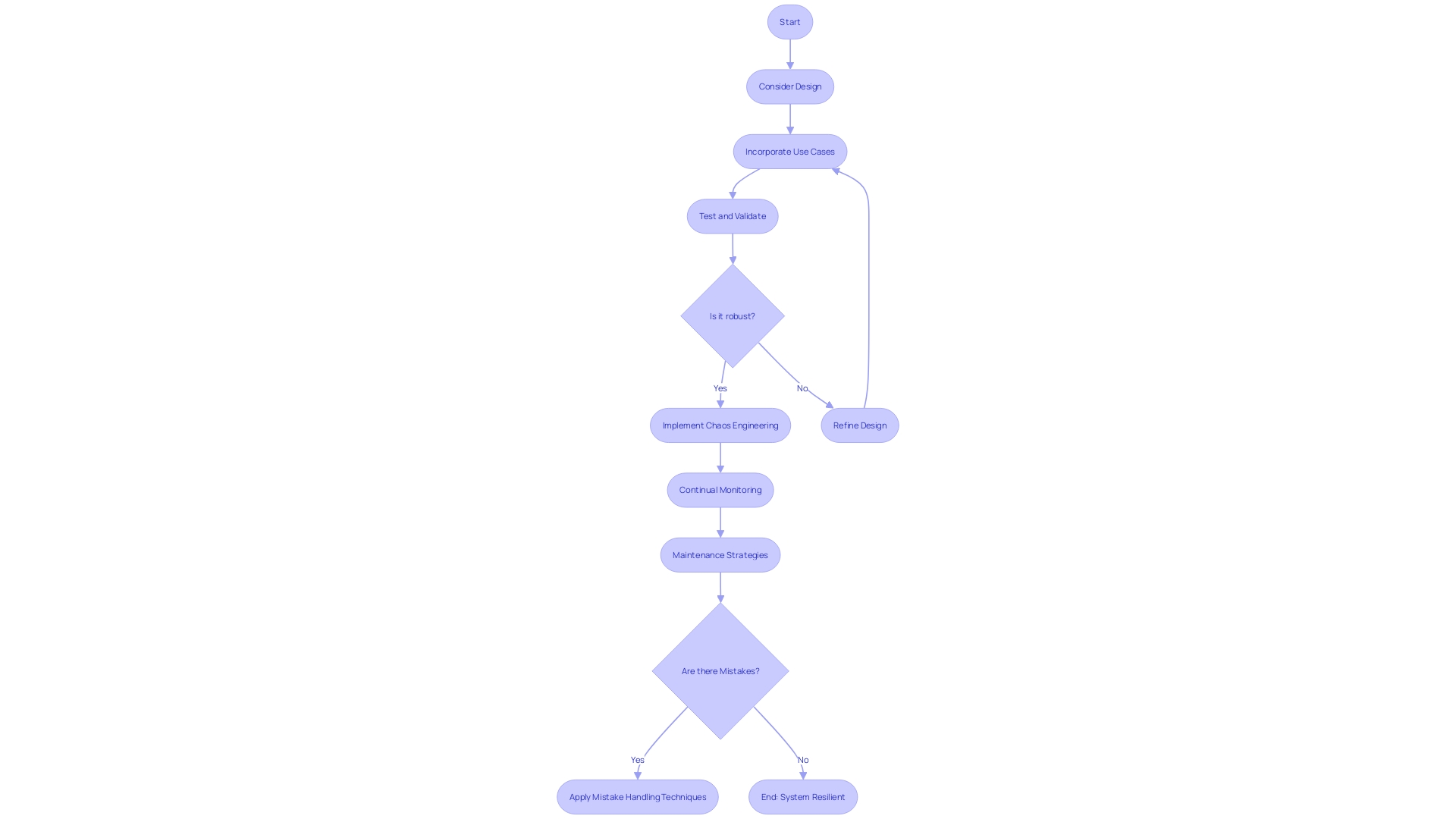

The foundation of a strong architecture is the ability to anticipate and address errors efficiently. This requires not just a firm understanding of the system's design but also strategic procedures and monitoring techniques that uphold its integrity. Design considerations lay the groundwork for error mitigation. By writing use cases that spell out the necessary preconditions, the main flow of interaction, and the postconditions, developers gain a thorough understanding of functional requirements and expected behavior.

Testing and validation are crucial to ensuring the resilience of a system, and this is where chaos engineering can offer substantial advantages. By intentionally introducing faults into the system, developers can observe how it responds and adjust its configuration and design accordingly. Each experiment tests an informed hypothesis about system behavior, with the aim of identifying weaknesses and enabling proactive improvements.
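A fault-injection experiment of this kind can be sketched as follows. This is a toy, in-process illustration under stated assumptions, not a substitute for dedicated chaos tooling: the wrapper, the retry loop standing in for the behavior under test, and all names and parameters are hypothetical.

```python
import random


class InjectedFault(Exception):
    """Marks a failure that the experiment introduced on purpose."""


def with_faults(func, failure_rate, rng):
    """Wrap func so that a fraction of calls fail with InjectedFault."""
    def wrapper(*args, **kwargs):
        if rng.random() < failure_rate:
            raise InjectedFault("injected by chaos experiment")
        return func(*args, **kwargs)
    return wrapper


def run_experiment(service, requests, failure_rate, max_attempts, seed=0):
    """Hypothesis under test: with retries, clients still succeed despite faults.
    Returns the observed success ratio so it can be compared with a target."""
    rng = random.Random(seed)  # seeded so the experiment is repeatable
    faulty = with_faults(service, failure_rate, rng)
    successes = 0
    for request in requests:
        for _ in range(max_attempts):
            try:
                faulty(request)
                successes += 1
                break
            except InjectedFault:
                continue  # client-side retry, the behavior being evaluated
    return successes / len(requests)
```

Stating the expected success ratio before running the experiment, then comparing it with the observed value, is what separates an experiment from simply breaking things.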

Continual monitoring and maintenance strategies are essential to sustaining performance. A practical tip is to establish fallback mechanisms for any critical external services, such as payment gateways or other third-party integrations, to prevent single points of failure that could compromise the system's availability.
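A fallback for a critical external service can be as simple as the sketch below. The provider functions and the choice of which exceptions count as "handled" are assumptions made for illustration; real payment integrations have their own client libraries and error types.

```python
def call_with_fallback(primary, fallback, *args, handled=(ConnectionError, TimeoutError)):
    """Try the primary provider; on a handled failure, use the fallback.
    Returns (result, provider_name) so callers and monitoring can see which path ran."""
    try:
        return primary(*args), "primary"
    except handled:
        return fallback(*args), "fallback"


# Hypothetical providers used only to demonstrate the pattern.
def charge_primary(amount_cents):
    raise ConnectionError("primary gateway unreachable")  # simulated outage


def charge_backup(amount_cents):
    return {"status": "charged", "amount_cents": amount_cents}


result, provider = call_with_fallback(charge_primary, charge_backup, 500)
```

Returning the provider name makes fallback usage visible to monitoring. One design caveat: for operations with side effects such as payments, a timeout does not prove the primary call failed, so a real implementation would also need idempotency safeguards before retrying elsewhere.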

In error handling, complexity shows up both locally, in individual pieces of code, and across the system as a whole. It is important to strike a balance between readable code and thorough error handling within the codebase. Over time, programming languages have moved from return codes to exceptions, reflecting the industry's continuing effort to improve error-handling techniques.

The insights provided by MIT engineers, who have developed automated sampling algorithms, underscore the importance of identifying a range of potential failures. This proactive approach helps in anticipating and addressing vulnerabilities before they lead to catastrophic outcomes.

Applying these error mitigation measures not only improves the resilience of the system but also helps developers build more durable and trustworthy architectures. With effective approaches in place, teams can help ensure that systems keep operating correctly and consistently despite unexpected obstacles.

Future Directions and Challenges in Error Mitigation

New error mitigation methods are changing the way we predict and manage the inaccuracies and difficulties inherent in cutting-edge computing technologies. Innovations in deep learning certification, for example, are paving the way forward, with the research community actively exploring the reliability and safety of models at runtime. Topics such as uncertainty quantification, out-of-distribution detection, feature collapse, and resistance to adversarial attacks are central to shaping the future of robust computing models. The aspiration is to develop models that not only perform regression and uncertainty prediction but can also identify out-of-distribution inputs without relying on regression labels for training.

The influence of error handling is significant, affecting not just system performance but also software design, readability, and the overall development experience. Conventional approaches, such as the return codes used in C and C++, frequently resulted in intricate control flow and placed the responsibility for checking errors on the caller. The move toward exceptions was a significant shift, relocating error-handling responsibilities and simplifying code structure.
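The contrast between the two styles can be shown side by side. The sketch below is written in Python for brevity, although the return-code half mimics the C convention; the function names and the `-1` error code are hypothetical.

```python
# Return-code style: every caller must remember to check the status.
def read_port_rc(config):
    if "port" not in config:
        return -1, None              # error code, no value
    return 0, config["port"]


def connect_rc(config):
    status, port = read_port_rc(config)
    if status != 0:                  # forgetting this check would silently use None
        return status, None
    return 0, f"connected:{port}"


# Exception style: the happy path reads straight through, and errors
# propagate until some caller chooses to handle them.
class ConfigError(Exception):
    pass


def read_port(config):
    if "port" not in config:
        raise ConfigError("missing 'port'")
    return config["port"]


def connect(config):
    return f"connected:{read_port(config)}"
```

In the exception version `connect` contains no error-handling code at all, which is the simplification described above. The trade-off is that failure paths become less visible at each call site.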

Quantum computing, a field in which companies like D-Wave are active, showcases the difficulty of managing errors in cutting-edge technology. Quantum computers, which encode data in qubits that can exist in superpositions of 0 and 1, are highly susceptible to errors because their components are so sensitive. Reducing these errors is crucial for the practical use of quantum computing in industries such as logistics, AI, and drug discovery. Quantum error mitigation methods must be selected carefully based on the dominant noise type to realize the promise of quantum advantage.

The forward-looking statements from industry leaders underline the challenges and potential of these emerging technologies, acknowledging the risks and uncertainties ahead. However, the continuous advancements suggest a promising trajectory for error mitigation techniques, with the potential to revolutionize computing and its applications in science and business.

Conclusion

Error mitigation techniques are crucial for ensuring reliable and smooth system performance. From chaos engineering to assurance cases, these strategies anticipate, detect, and correct faults before they lead to system failures. Robust assurance cases are particularly important in critical areas like autonomous systems and AI-based applications, certifying systems and demonstrating compliance with regulations.

Best practices in error mitigation include detailed documentation, real-time system monitoring, and resilient microservice design. Performance evaluations enhance system quality and improve developers' ability to create better systems. Quantum computing highlights the need for effective error mitigation techniques to harness its potential advantages.

Choosing the right error mitigation technique requires a nuanced approach. Comparative analysis helps developers understand the strengths of different techniques and their applicability in various scenarios.

Factors influencing the choice of error mitigation techniques include operational requirements, available resources, and anticipated error types. Industry standards, performance evaluations, and empirical assessments also play a role.

Robust system architecture relies on effective error prediction and mitigation. This involves understanding the system's design, implementing strategic procedures, and utilizing monitoring techniques. Testing, ongoing monitoring, maintenance, and efficient error handling strategies contribute to successful error mitigation.

Emerging techniques in deep learning certification and quantum error management are transforming how we handle inaccuracies and challenges in advanced computing systems. These innovations have the potential to revolutionize computing in science and business.

In conclusion, selecting the right error mitigation technique is a complex decision. By learning from industry leaders and applying best practices, developers can make informed choices and write more resilient code. Efficient error mitigation reduces defects and ensures a reliable, secure, and efficient system.

Frequently Asked Questions

What are error mitigation techniques?

Error mitigation techniques are strategies designed to anticipate, detect, and correct faults in systems to prevent them from escalating into significant failures. These techniques help ensure that systems operate dependably and without interruption.

What is chaos engineering?

Chaos engineering is a methodical approach to testing where experiments are designed to introduce stress to a system in order to learn how to prevent potential chaos. It aims to identify weaknesses and improve system resilience.

Why are assurance cases important in autonomous technology and AI-driven applications?

Assurance cases are important because they help to certify and demonstrate compliance with industry standards and regulations, such as ISO 26262 for automotive safety or FDA guidelines for medical devices, which is essential for building trust in the dependability of these technologies.

How can the resilience of microservices be maintained?

The resilience of microservices can be maintained using techniques like circuit breakers, which prevent cascading failures by no longer sending requests to a failing service, thereby maintaining overall system integrity.

What role does performance evaluation play in error mitigation?

Performance evaluations play a significant role in understanding a system's behavior and limits in depth. That understanding not only improves the quality of the current system but also improves developers' ability to create better solutions in the future.

What is the significance of fault tolerance in distributed systems?

Fault tolerance in distributed systems is critical to ensure uninterrupted operation despite component failures. This helps safeguard against disruptions, from minor server issues to major data center outages.

What types of error mitigation techniques exist?

Error mitigation techniques range from hardware-based solutions to software-driven strategies, and can include a blend of both, known as hybrid approaches.

Why is comparative analysis important in error mitigation?

Comparative analysis helps developers understand the strengths of various error mitigation techniques and how they can be applied to different scenarios. It's essential for selecting the optimal method for managing issues in projects.

What challenges does quantum computing face regarding error mitigation?

Quantum computing is highly sensitive to errors and noise due to quantum systems' interactions with their environment. Innovations in quantum error correction are crucial to overcome these challenges and achieve quantum advantage.

What factors influence the choice of error mitigation techniques?

Factors include the specific operational needs, technological assets, types of expected errors, industry norms, performance evaluations, and empirical software maintenance studies.

What are some best practices for implementing error mitigation?

Best practices include detailed design considerations, chaos engineering, continuous monitoring, fallback mechanisms for critical services, and the use of strategic procedures to uphold system integrity.

What are the future directions and challenges in error mitigation?

Future directions include innovations in deep learning certification, more effective error-handling techniques, and quantum error mitigation methods tailored to the dominant noise type. Challenges include the inherent difficulties of cutting-edge technologies and their practical implementation in various industries.