Introduction

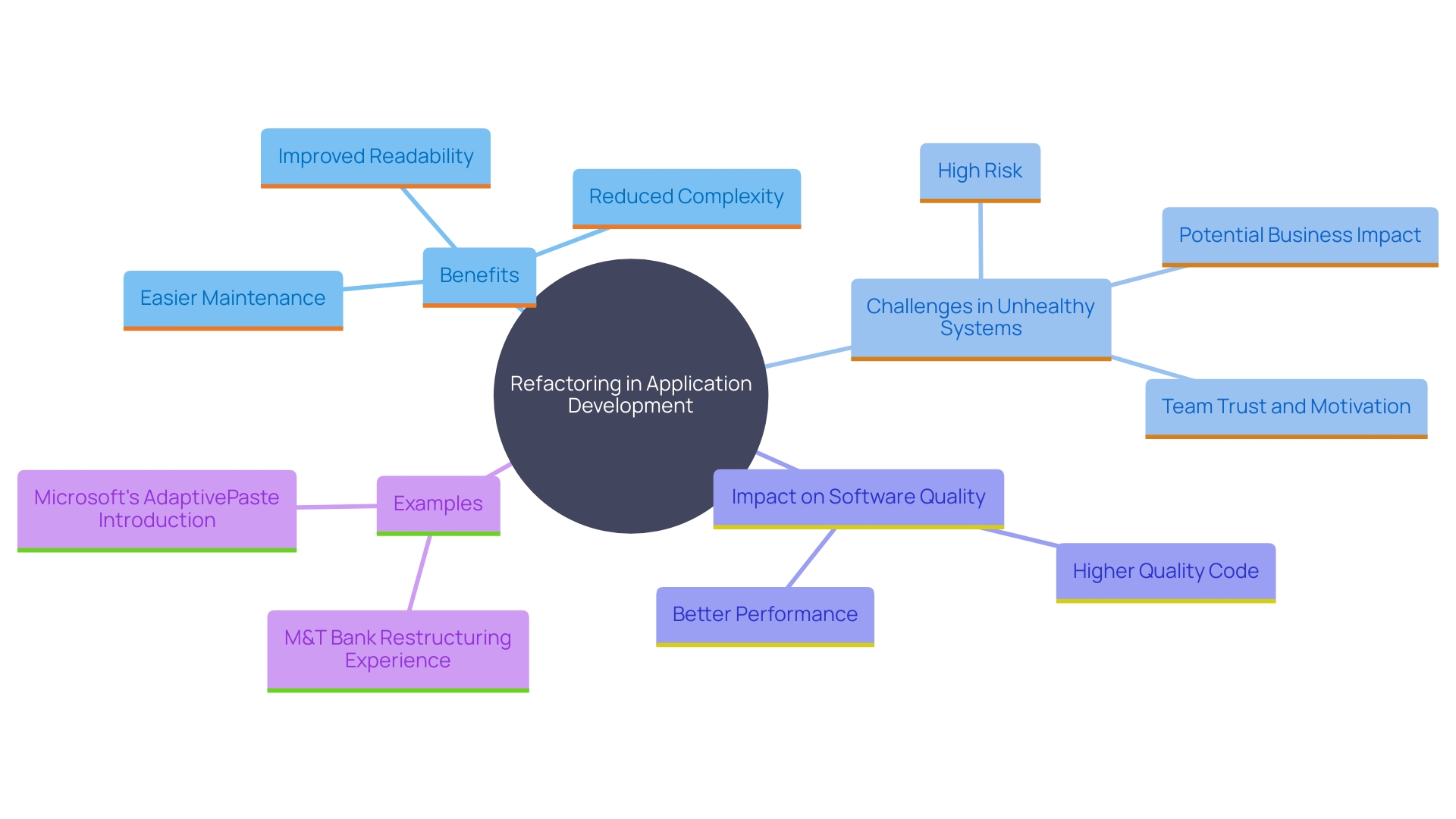

In the rapidly evolving world of software development, maintaining code quality and adaptability is paramount. Refactoring, the process of restructuring existing code without changing its external behavior, emerges as a vital practice to achieve these goals. It enhances readability, reduces complexity, and simplifies maintenance, thereby improving overall software quality.

Particularly in large systems, where navigating and modifying code can become cumbersome, refactoring ensures that the codebase remains manageable and efficient.

Empirical studies, such as the Code Red study, underline the stark contrast in task implementation times between healthy and unhealthy codebases, highlighting the significant business risks of neglecting code quality. Through systematic improvement, refactoring enables teams to adapt to changing requirements, fix hidden bugs, and ensure that the software evolves in tandem with user needs. This process is not just about immediate gains but also about sustaining long-term software health and performance.

As organizations like M&T Bank demonstrate, refactoring is crucial in industries with stringent security and regulatory demands. The banking sector's ongoing digital transformation underscores the necessity of maintaining clean code standards to avoid security breaches and financial losses. Moreover, advancements in software engineering, such as Microsoft's AdaptivePaste, illustrate the continuous evolution of refactoring techniques to enhance coding efficiency.

By understanding and applying best practices in refactoring, developers can ensure their code remains clean, efficient, and easy to maintain, ultimately driving maximum efficiency and productivity in software development.

Why Refactoring is Essential in Software Development

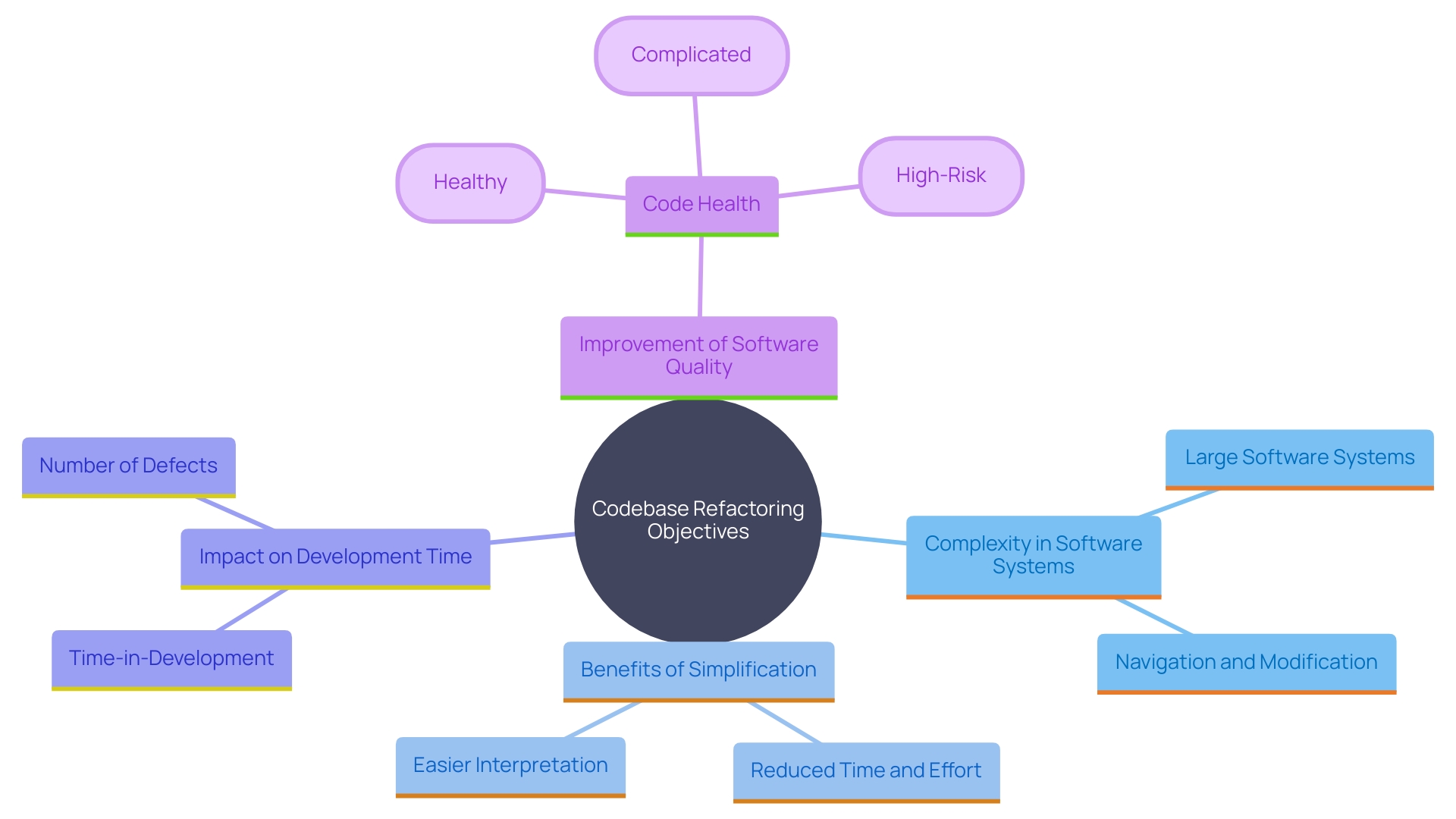

Refactoring is a core practice in software development: it improves the internal structure of code without changing its external behavior. It improves readability, reduces complexity, and makes maintenance easier, which ultimately leads to higher-quality software. Simplifying code is particularly important in large systems, where navigating and modifying it can become arduous. Empirical data from the Code Red study indicates that working in unhealthy code can extend task implementation times by up to tenfold compared with healthy code, which underlines the business risk involved.

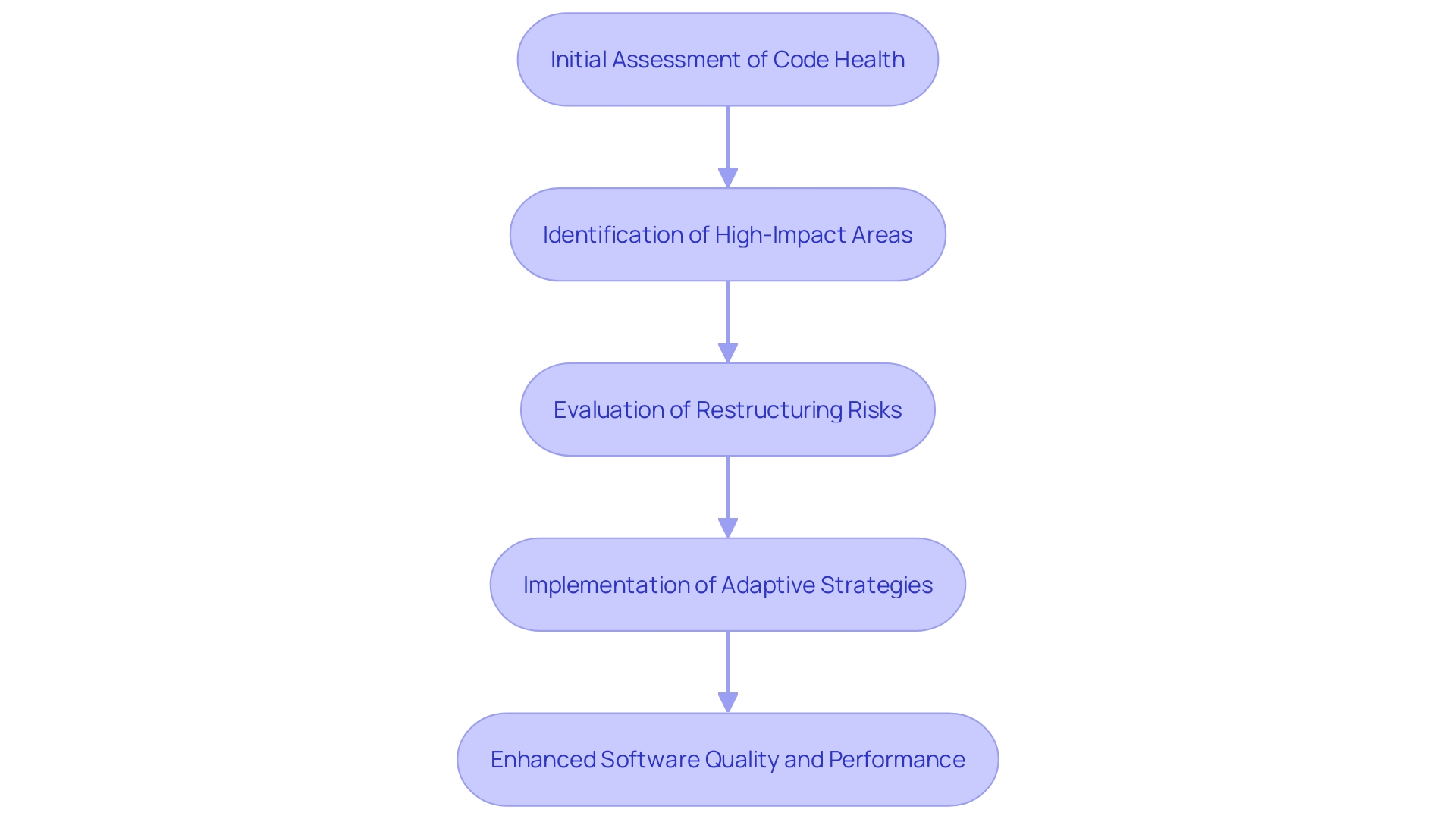

By systematically improving the code, teams can adapt to changing requirements, fix hidden bugs, and ensure that the software evolves alongside user needs. This was evident in a recent project where the refactoring involved organizing global variables, removing unused code, and creating a test function, all of which streamlined future modifications.

M&T Bank's experience highlights the importance of refactoring for upholding software quality and compliance, both essential in a sector with heavy security and regulatory demands. As the banking sector undergoes a massive digital transformation, maintaining clean code standards keeps operations running smoothly and mitigates the risk of security breaches and financial losses.

Refactoring also aligns with modern advances in software engineering. For instance, Microsoft's AdaptivePaste, presented at ESEC/FSE 2023, aims to improve programming efficiency by refining pasted code snippets, addressing the problems that arise from quick copy-and-paste fixes. Understanding and applying refactoring best practices is crucial for developers who want to keep their code clean, efficient, and easy to manage.

Key Refactoring Techniques

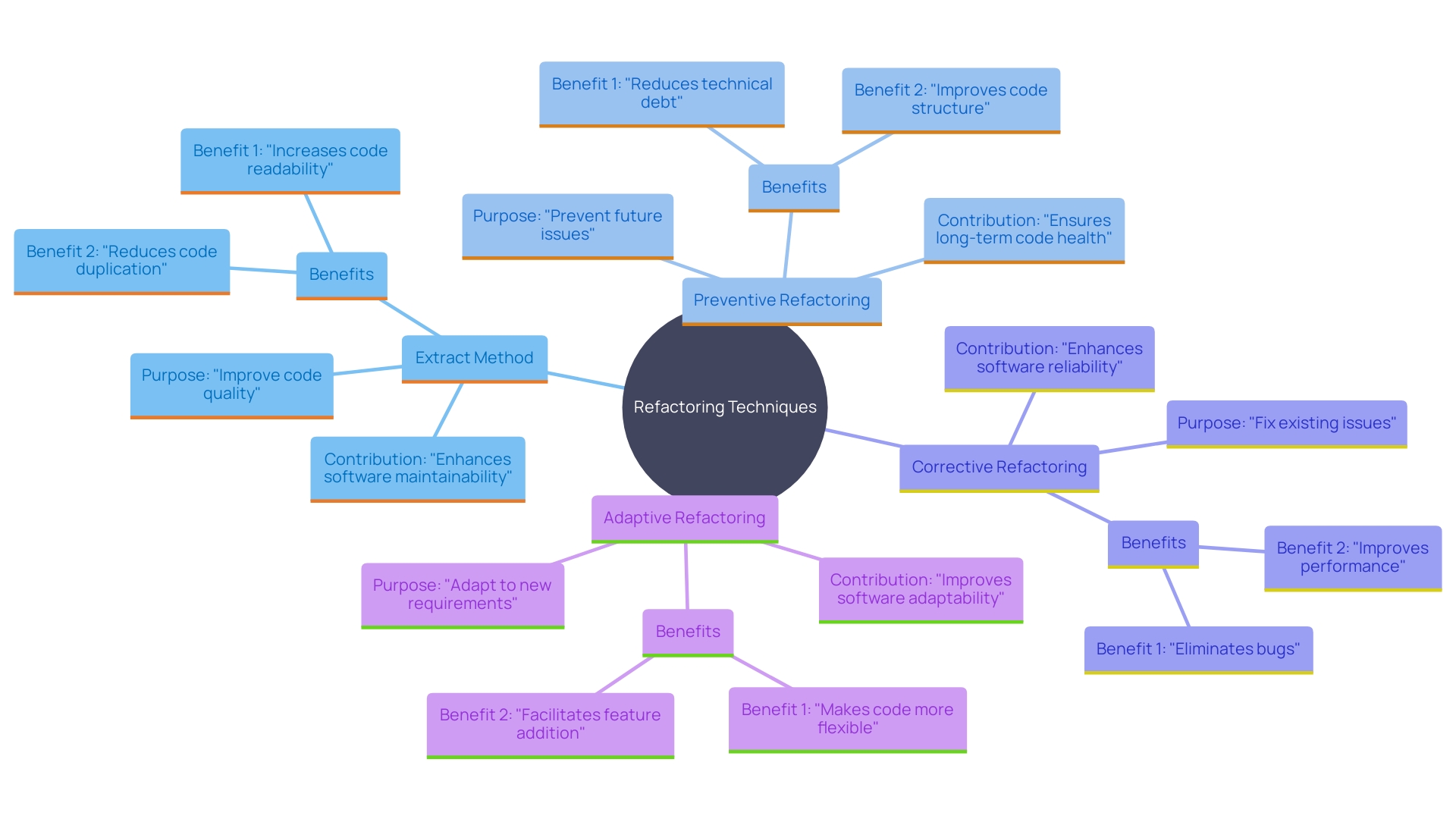

Numerous techniques can be used to refactor code effectively, each serving a distinct purpose. Extract Method, often called the "Swiss army knife" of refactorings, lets developers improve code quality by dividing long methods into smaller, more manageable ones. This technique improves readability and also simplifies testing and maintenance.

Preventive Refactoring involves addressing potential issues before they escalate, thus preventing technical debt. By regularly reviewing and refactoring code, developers can ensure that the codebase remains clean and efficient. This proactive approach catches issues early, making the software easier to maintain and extend.

Corrective Refactoring focuses on improving existing structures by identifying and addressing weaknesses. This can be done through regular code reviews in which potential issues are highlighted and resolved. Building these improvements into the development process keeps the software robust and easy to manage.

Adaptive Refactoring, a technique gaining traction with the rise of AI, involves using machine learning models to adapt and refine code snippets. Tools like GitHub Copilot are transforming this method by proposing enhancements and automating aspects of the code restructuring process.

Refactoring can be challenging, particularly in large codebases where the risk of introducing new bugs is higher. However, the benefits, such as improved readability, maintainability, and reduced complexity, far outweigh the drawbacks. Regular refactoring sessions can significantly enhance the overall health of a codebase, leading to more efficient and predictable development cycles.

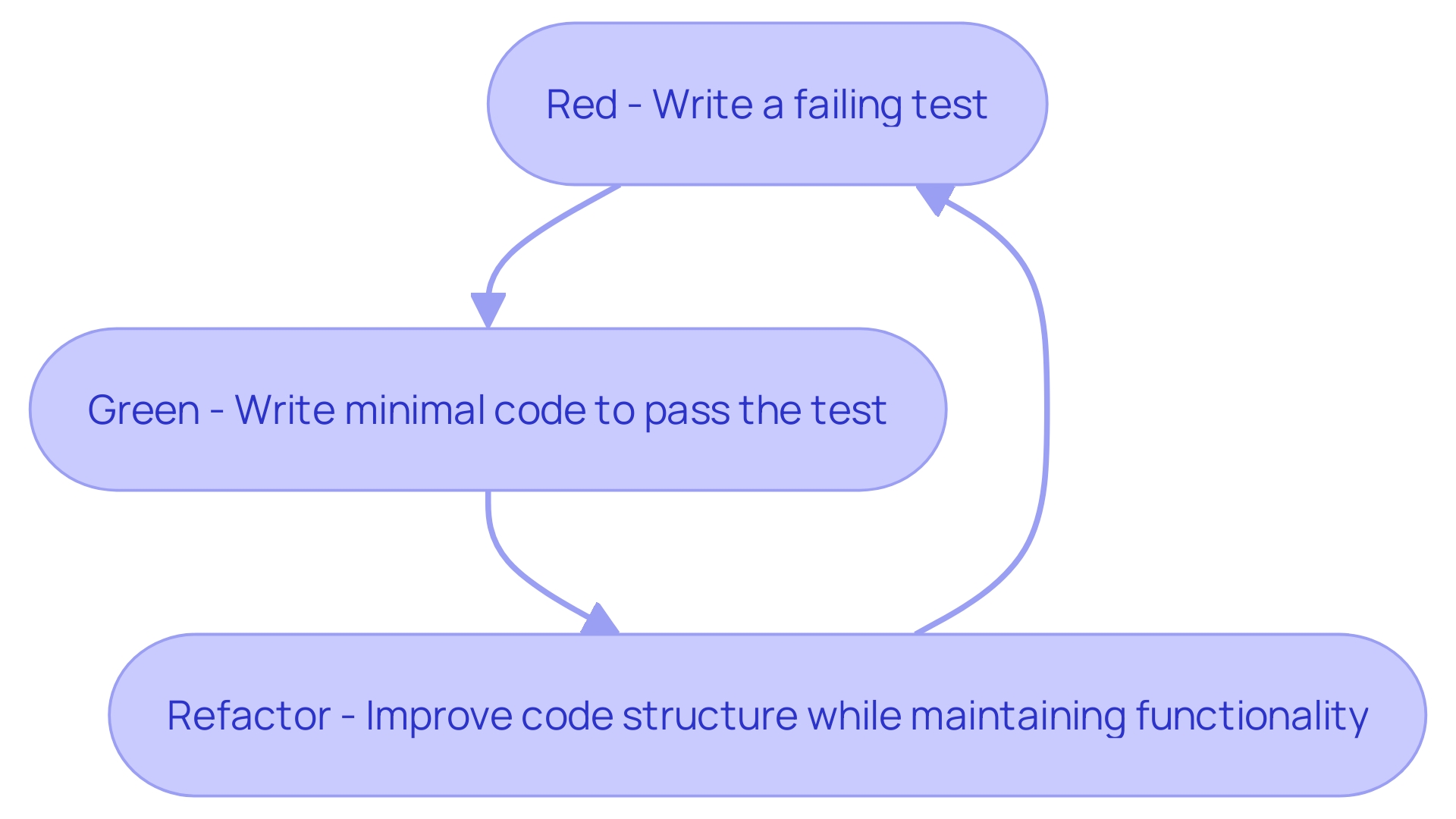

Red-Green-Refactor Method

The red-green-refactor cycle in test-driven development (TDD) is a disciplined method for ensuring software quality and functionality. It begins with writing a failing test (red), which clearly defines the functionality to be implemented. Developers then write the minimal amount of code required to pass this test (green), creating a direct path to the desired functionality. Finally, the code is refactored to improve its structure without altering its behavior.

This cycle promotes modularity, clarity, and independence within the codebase, making it highly testable and maintainable. According to Kazys Rakauskas, starting with a red test is essential as it guides the development process effectively. This approach not only simplifies identifying and resolving defects but also contributes to long-term benefits like improved performance and scalability. For instance, the Code Red study highlights that maintaining a healthy codebase can reduce implementation times significantly, giving a competitive edge in the software industry.
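One pass through the cycle can be sketched in Python as follows. The `slugify` function and its tests are hypothetical, chosen only for illustration; the first-pass version is kept under a separate name so that both stages are visible side by side.

```python
import unittest


def slugify_first_pass(title: str) -> str:
    """Green: the first version that makes the tests pass; clumsy but correct."""
    result = ""
    for word in title.split():
        if result:
            result += "-"
        result += word.lower()
    return result


def slugify(title: str) -> str:
    """Refactor: same behavior as the first pass, with a simpler structure."""
    return "-".join(title.lower().split())


class SlugifyTest(unittest.TestCase):
    """Red: these tests are written first and fail until slugify exists."""

    def test_lowercases_and_joins_words_with_hyphens(self):
        self.assertEqual(slugify("Hello Big World"), "hello-big-world")

    def test_collapses_repeated_whitespace(self):
        self.assertEqual(slugify("  Hello   World "), "hello-world")
```

Running the tests again after the refactor step (for example with `python -m unittest`) is what confirms that the behavior has not changed.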

Refactoring by Abstraction

Refactoring by abstraction improves software quality by identifying common patterns and extracting them into separate functions or classes. This reduces duplication and increases modularity, making the system easier to understand and modify. With a more streamlined structure, developers save time and effort when navigating and updating large systems, because the code becomes more straightforward to read, comprehend, and manage.

The approach encourages cleaner code, helps reduce technical debt, and improves overall maintainability. Research indicates that refactoring techniques such as Extract Method play an important role in improving software quality, with substantial business effects. The relationship between code quality and business value is non-linear, which underlines the benefits of maintaining a well-refactored codebase. Ultimately, understanding and applying refactoring best practices keeps programs efficient and manageable over time.
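As a minimal sketch of the idea, suppose two exporter classes each repeated the same filtering step before formatting their output. The class names and the `active` field below are assumptions made for illustration; the shared step is pulled up into a base class so that it exists only once.

```python
import json
from abc import ABC, abstractmethod


class Exporter(ABC):
    """Shared export workflow pulled up from two near-identical classes."""

    def export(self, records: list[dict]) -> str:
        # The step common to every exporter lives here exactly once.
        cleaned = [r for r in records if r.get("active", True)]
        return self.render(cleaned)

    @abstractmethod
    def render(self, records: list[dict]) -> str:
        """Format-specific part that each subclass supplies."""


class JsonExporter(Exporter):
    def render(self, records: list[dict]) -> str:
        return json.dumps(records, sort_keys=True)


class CsvExporter(Exporter):
    def render(self, records: list[dict]) -> str:
        if not records:
            return ""
        header = sorted(records[0])
        rows = [",".join(str(r[k]) for k in header) for r in records]
        return "\n".join([",".join(header)] + rows)
```

A change to the filtering rule now happens in one place, and a new output format only needs to implement `render`.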

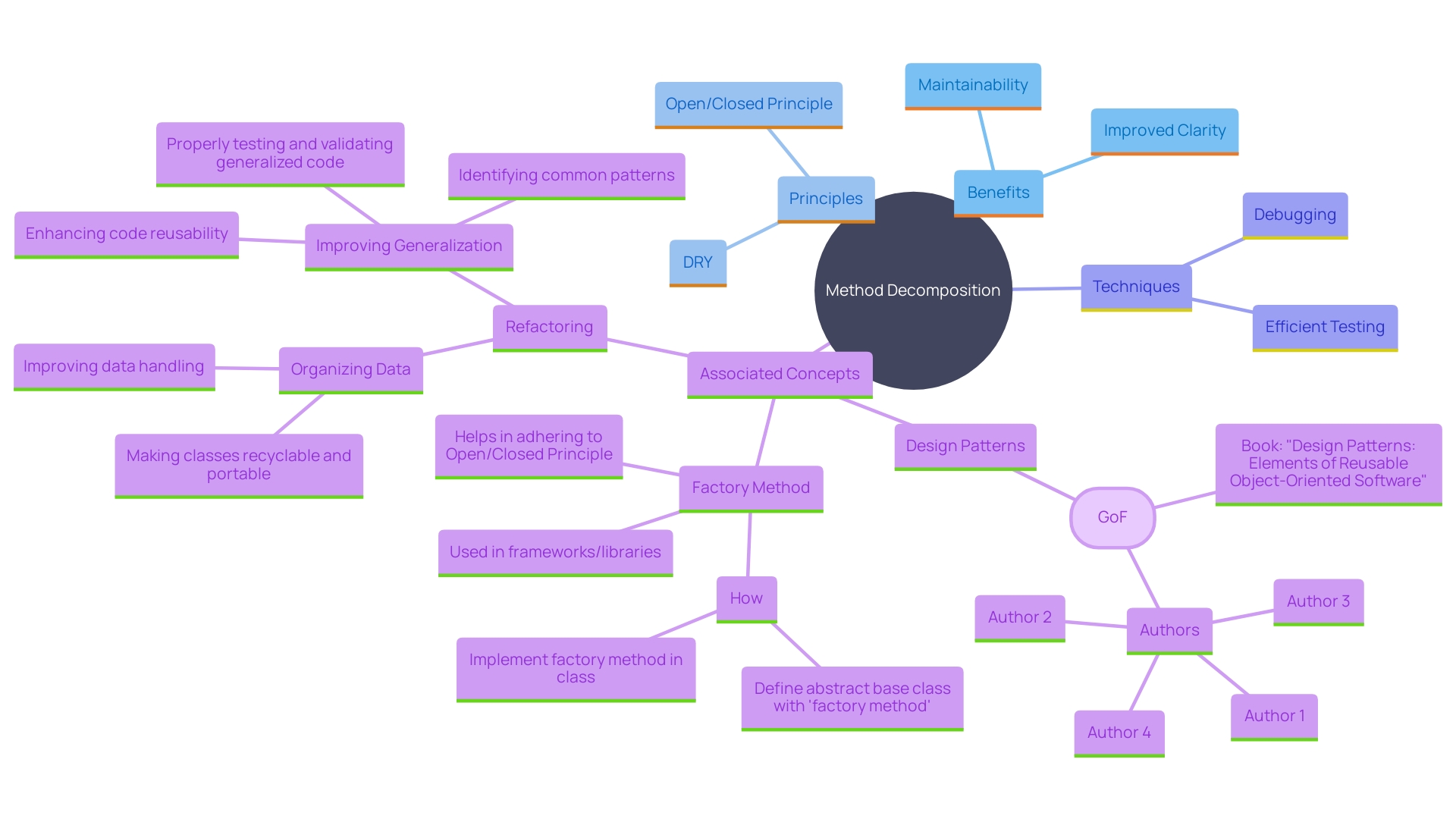

Composing Method

Breaking down complex methods into smaller, more manageable ones is a cornerstone of effective software design. By employing this technique, programmers can significantly improve the clarity and maintainability of their software. This practice, often referred to as method decomposition, not only simplifies the debugging process but also facilitates more efficient testing.

Software systems should be explainable, helping developers and users understand the functionality and behavior of the system. Textual documentation, although beneficial, frequently becomes obsolete and detached from the actual code. Tests, by contrast, are directly connected to the code and can act as a form of documentation. For example, certain tests can be designed to return the object under test, allowing it to be inspected and reused in further tests, which provides a more interactive and informative way to understand the system.

Composing methods by breaking down complex processes aligns with the DRY (Don't Repeat Yourself) principle, which promotes more organized and coherent coding practices. This approach aids in developing scalable and maintainable software systems by minimizing redundancy and ensuring that each component is unique and essential.

Recent advancements in formal methods and tools, such as the improvements seen in the Lean theorem prover, underscore the importance of robust and efficient program refactoring techniques. These tools have made significant strides in simplifying and streamlining the development process, making it easier for programmers to adopt best practices like method decomposition.

In the ever-evolving domain of engineering, adapting to new methodologies and continuously refining code is essential. This not only enhances the quality of the application but also supports a wider community of programmers, including those who may not have formal education in engineering. By focusing on method decomposition and other refactoring techniques, developers can create high-quality, maintainable software that stands the test of time.

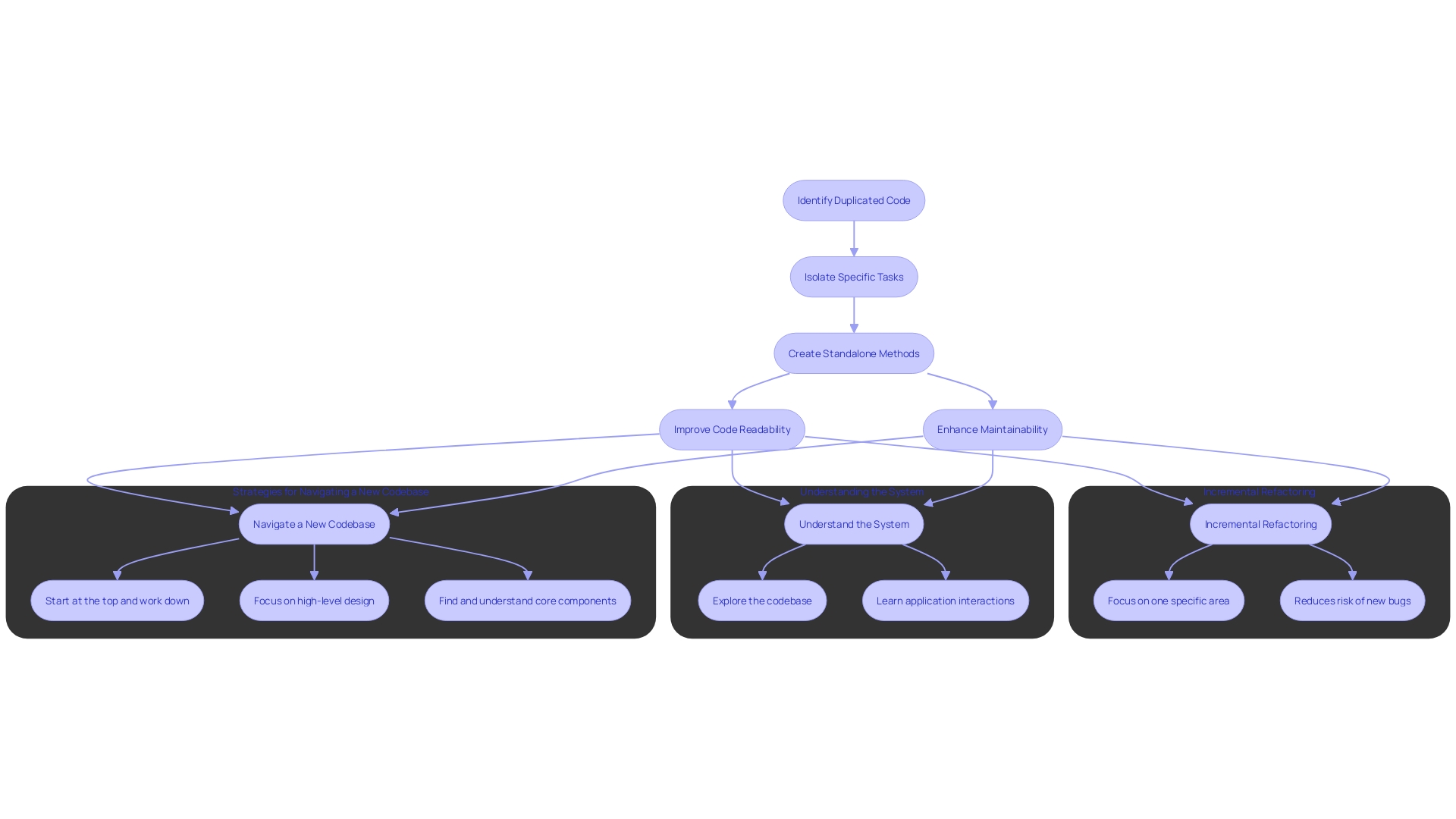

Extract Method

The Extract Method technique involves isolating a specific task within a block of code and moving it into its own standalone method. This encourages reuse and simplifies the original method, making it more concise and easier to understand. Duplicated code, for instance, is a common code smell that complicates maintenance. By extracting the repeated sections into individual methods, you eliminate redundancy and streamline your codebase. The technique also addresses long and complex methods, which are often difficult to read and maintain. Breaking these methods into smaller, focused ones improves readability and manageability.

In large-scale operations, such as those at Google, the impact of tooling that supports such refactoring can be substantial. ML-suggested code edits there have been reported to reduce the time spent on code reviews by hundreds of thousands of engineer hours each year. Feedback from developers also indicates that ML-recommended adjustments greatly improve efficiency, letting them concentrate on more innovative and intricate tasks.

Real-world applications of well-structured code are also evident in case studies. In one, a developer was able to produce unit tests for roughly 3,000 lines of code, attaining a total coverage of 88% with 96 tests in a single day. This demonstrates the efficiency that well-structured code, supported by techniques like Extract Method, makes possible.
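A small before-and-after sketch in Python shows the mechanics. The invoice example, the function names, and the `TAX_RATE` value are all hypothetical and serve only as an illustration.

```python
def invoice_line_before(customer: str, amounts: list[float]) -> str:
    """Before: totalling, tax, and formatting are tangled in one function."""
    total = 0.0
    for amount in amounts:
        total += amount
    tax = total * 0.2
    return f"Invoice for {customer}: net {total:.2f}, tax {tax:.2f}, gross {total + tax:.2f}"


TAX_RATE = 0.2  # hypothetical rate, named once instead of repeated inline


def net_total(amounts: list[float]) -> float:
    """Extracted: sum the line amounts."""
    return sum(amounts)


def tax_on(net: float) -> float:
    """Extracted: compute tax for a net amount."""
    return net * TAX_RATE


def format_invoice(customer: str, net: float, tax: float) -> str:
    """Extracted: presentation only."""
    return f"Invoice for {customer}: net {net:.2f}, tax {tax:.2f}, gross {net + tax:.2f}"


def invoice_line(customer: str, amounts: list[float]) -> str:
    """After: the original function now reads as a list of named steps."""
    net = net_total(amounts)
    return format_invoice(customer, net, tax_on(net))
```

Each extracted function can be tested and reused on its own, while `invoice_line` keeps the same external behavior as the original.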

By understanding and implementing these refactoring practices, developers can ensure that their work is clean, efficient, and easy to maintain. This not only improves the immediate quality of the program but also aids in long-term project sustainability.

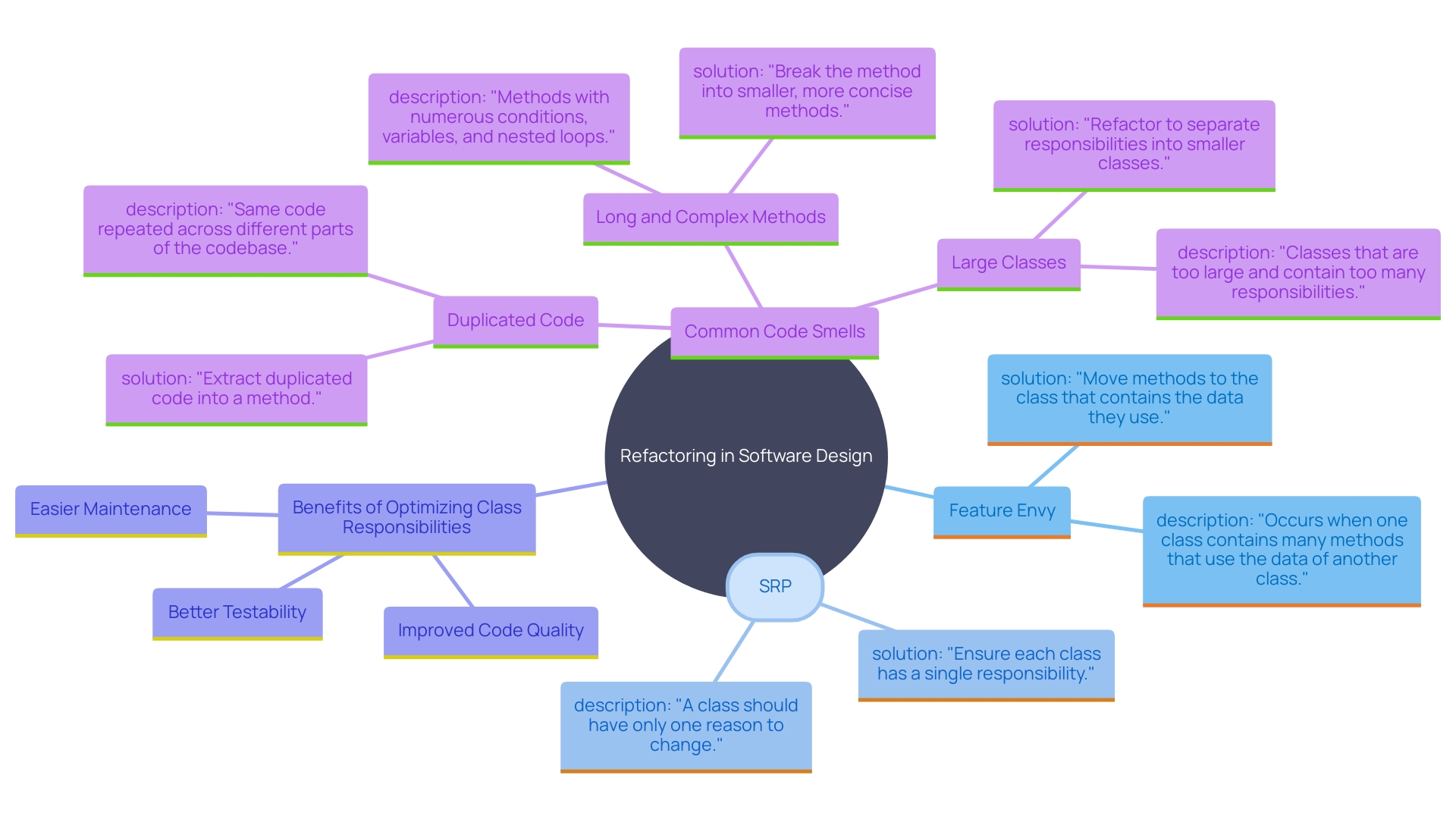

Moving Features Between Objects

Transferring features or methods from one class to another is an effective technique for improving cohesion and clarifying class responsibilities. This refactoring approach addresses Feature Envy, where one class excessively uses the data of another class, leading to unwarranted dependencies. By relocating methods to the class whose data they primarily use, developers improve the structure and make it simpler to maintain and test.

This strategy aligns with adhering to SOLID principles, particularly the Single Responsibility Principle (SRP). Violations of SRP often result in classes with multiple responsibilities, complicating the codebase. Refactoring to ensure each class has a single responsibility not only improves organization but also enhances overall programming quality.
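The following Python sketch illustrates the move. The `Account` and `PaymentService` classes are hypothetical; the point is only that the calculation ends up next to the data it uses.

```python
from dataclasses import dataclass


@dataclass
class Account:
    balance: float
    overdraft_limit: float

    def available_funds(self) -> float:
        """After the move: the calculation lives with the data it uses."""
        return self.balance + self.overdraft_limit


class PaymentServiceBefore:
    def can_pay(self, account: Account, amount: float) -> bool:
        # Feature Envy: this method reaches into Account's fields directly.
        return account.balance + account.overdraft_limit >= amount


class PaymentService:
    def can_pay(self, account: Account, amount: float) -> bool:
        # The service now asks Account a question instead of inspecting it.
        return account.available_funds() >= amount
```

If the rule for available funds changes later, only `Account` needs to be edited, and every caller picks up the new rule.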

An illustrative case is the use of the Grace Hopper Superchip in tightly coupled heterogeneous systems, where optimizing data movement between CPU and GPU significantly boosts performance and energy efficiency. This principle is similar in software design, where efficient data and method relocation across classes lead to a more streamlined and efficient code structure.

Best Practices for Refactoring

Maximizing the benefits of code restructuring requires adherence to best practices that enhance both efficiency and effectiveness. Adhering to these practices guarantees that restructuring efforts result in significant enhancements.

One of the primary benefits of refactoring is simpler code that is easier to read, understand, and maintain. This is particularly important in large software systems, which can become cumbersome to navigate and modify over time. Because developers spend more time reading code than writing it, simpler structures significantly reduce the time and effort needed to understand and change it.

Moreover, well-structured code is essential for long-term maintenance. Poorly organized code can swiftly become a maintenance nightmare, especially in aging systems. Even with efforts to reduce technical debt, codebases tend to become increasingly complex over time. Prioritizing maintenance through refactoring improves the code, can boost its performance, and may lower costs, particularly for large deployments.

Refactoring is also essential for improving code quality, maintainability, and readability. Reorganizing code so that it is more comprehensible and simpler to modify streamlines troubleshooting and issue resolution, ultimately making developers more efficient.

Including refactoring in regular maintenance plans and code reviews helps keep the codebase clean and effective. This proactive method not only tackles potential problems but also ensures that the code is adequately examined and verified, making development more predictable and efficient. Despite the challenges, such as the possibility of introducing new issues, the long-term advantages of reduced technical debt and higher code quality make refactoring an essential practice in application development.

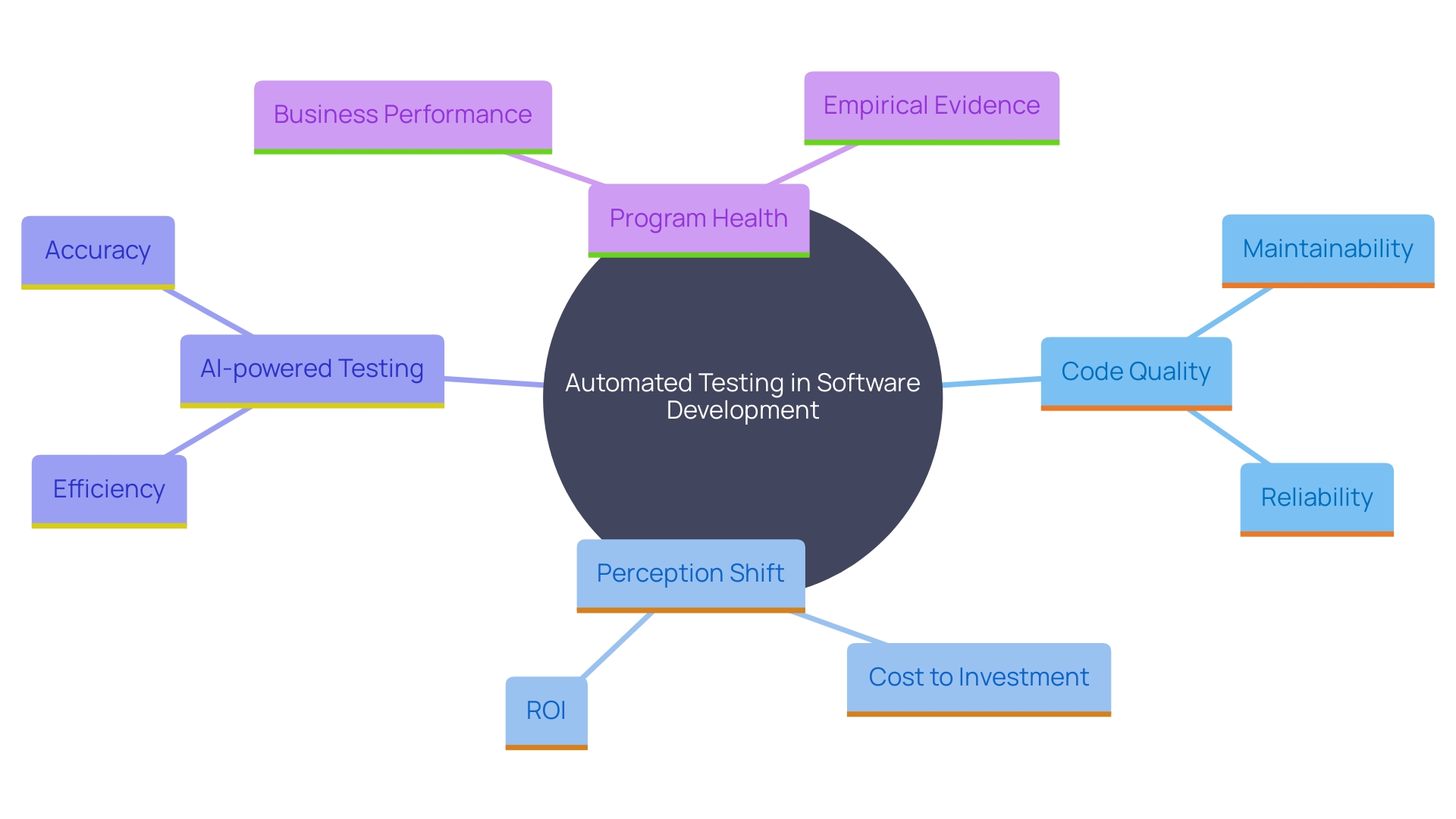

Frequent Testing

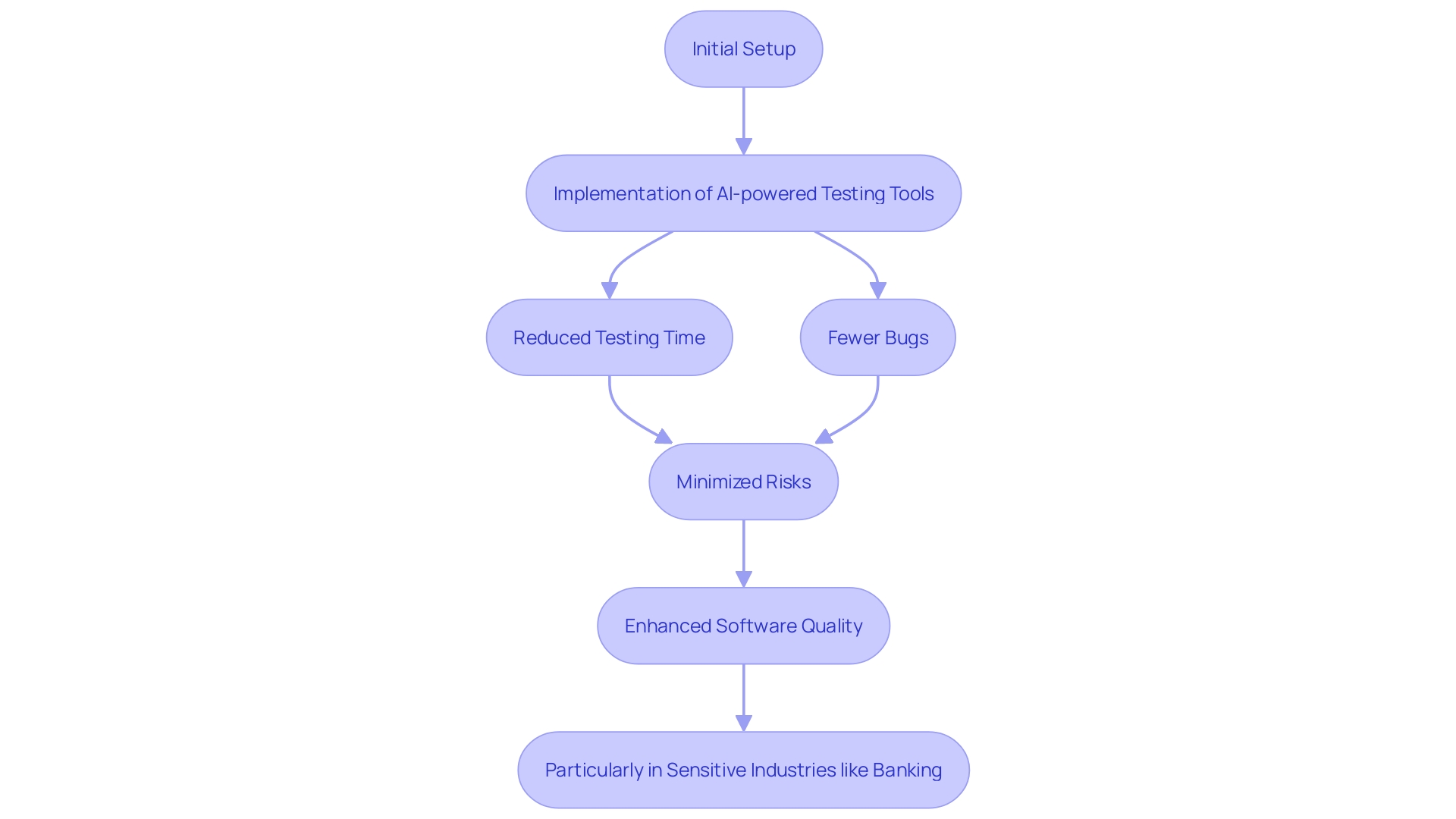

Consistently testing code during refactoring is essential to upholding quality and reliability of applications. Automated tests are crucial in this process, enabling quick validation that changes do not introduce new bugs. This not only preserves existing functionality but also enhances overall efficiency.
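One common way to put this into practice is to pin down the current behavior with a small automated test before touching the code. The sketch below assumes a hypothetical legacy function, `shipping_cost`, whose expected values were recorded from its present behavior.

```python
import unittest


def shipping_cost(weight_kg: float) -> float:
    """Hypothetical legacy function that is about to be refactored."""
    if weight_kg <= 1:
        return 5.0
    if weight_kg <= 5:
        return 5.0 + (weight_kg - 1) * 2.0
    return 13.0 + (weight_kg - 5) * 1.5


class ShippingCostBehaviorTest(unittest.TestCase):
    """Records today's outputs so any refactoring that changes them fails fast."""

    # (weight in kg, expected cost) pairs, including the boundary values.
    CASES = [(0.5, 5.0), (1, 5.0), (3, 9.0), (5, 13.0), (7, 16.0)]

    def test_known_inputs_keep_their_outputs(self):
        for weight, expected in self.CASES:
            with self.subTest(weight=weight):
                self.assertAlmostEqual(shipping_cost(weight), expected)
```

Running this suite after every small change (for example with `python -m unittest`) gives quick feedback that the refactoring has preserved existing functionality.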

In the high-speed world of application development, where programs must evolve rapidly or risk obsolescence, quality assurance (QA) teams are under constant pressure to ensure functionality, quality, and speed of release. Automated testing emerges as a pivotal solution, shifting the perception of software testing from a financial burden to a valuable investment. Contemporary testing techniques, especially those powered by AI, can automatically create test cases based on analysis, requirements, and user behavior information, ensuring thorough test coverage. This approach saves time and resources, addressing the time-consuming nature of manual testing.

Empirical data underscores the importance of maintaining a healthy codebase. For instance, a study on the relationship between code health and business impact found that tasks in unhealthy code can take significantly longer to complete than tasks in healthy code. This highlights the business risk of neglecting code quality and the need for regular, thorough testing.

As technology continues to advance, application testing remains a fundamental process to ensure that programs meet the highest standards of performance and functionality. By identifying and rectifying bugs early, automated testing not only safeguards software reliability but also delivers substantial cost savings and return on investment (ROI).

Refactoring in Small Steps

Implementing changes incrementally allows programmers to manage risk effectively. By dividing modification tasks into smaller, manageable components, programmers can reduce the inherent risks associated with changing a functioning system. This approach ensures that changes are easier to track and understand, significantly reducing the likelihood of introducing issues. For example, when renaming a function, it is crucial to ensure that all references to that function are updated accordingly. If you have a comprehensive suite of tests covering your codebase, any errors will quickly become apparent, making it easier to detect and fix issues before they impact the system.
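For the renaming case, one incremental option is to introduce the new name first and keep the old one as a thin, deprecated wrapper until every caller has been migrated. The function names below are hypothetical.

```python
import warnings


def calculate_total(prices: list[float]) -> float:
    """New, clearer name; all of the logic lives here."""
    return sum(prices)


def calc(prices: list[float]) -> float:
    """Old name, kept temporarily so existing callers keep working."""
    warnings.warn(
        "calc() is deprecated; use calculate_total() instead",
        DeprecationWarning,
        stacklevel=2,  # point the warning at the caller, not at this wrapper
    )
    return calculate_total(prices)
```

Callers can then be updated one at a time, with the test suite run after each step, and the wrapper is deleted once nothing references it.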

In many situations, developers face larger refactoring tasks that affect multiple subsystems. Incremental changes help contain potential issues within a single component, minimizing unforeseen effects on other parts of the system. This is especially important in large software systems, where complexity can lead to failures in essential business operations. By focusing on small, incremental changes, developers can maintain system stability and ensure that each modification is carefully tested and validated.

Incremental changes are also essential when reshaping software to make room for new features. For instance, changing a single-product purchase flow to support multiple items requires careful planning and execution to avoid disrupting existing functionality. By addressing such enhancements in phases, developers keep control over the process and ensure that new features are integrated smoothly into the system.
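A phased version of the purchase-flow change might look like the sketch below, in which a multi-item function is added first and the existing single-product entry point is turned into a thin wrapper around it. All names here (`LineItem`, `checkout_items`, `checkout`) are assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LineItem:
    sku: str
    unit_price: float
    quantity: int = 1


def checkout_items(items: list[LineItem]) -> float:
    """Phase 1: new multi-item flow, added alongside the old one."""
    return sum(item.unit_price * item.quantity for item in items)


def checkout(sku: str, unit_price: float) -> float:
    """Phase 2: the existing single-product entry point delegates to the
    new flow, so current callers see no change in behavior."""
    return checkout_items([LineItem(sku, unit_price)])
```

Existing callers of `checkout` keep working throughout, and they can be moved to `checkout_items` gradually before the wrapper is retired.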

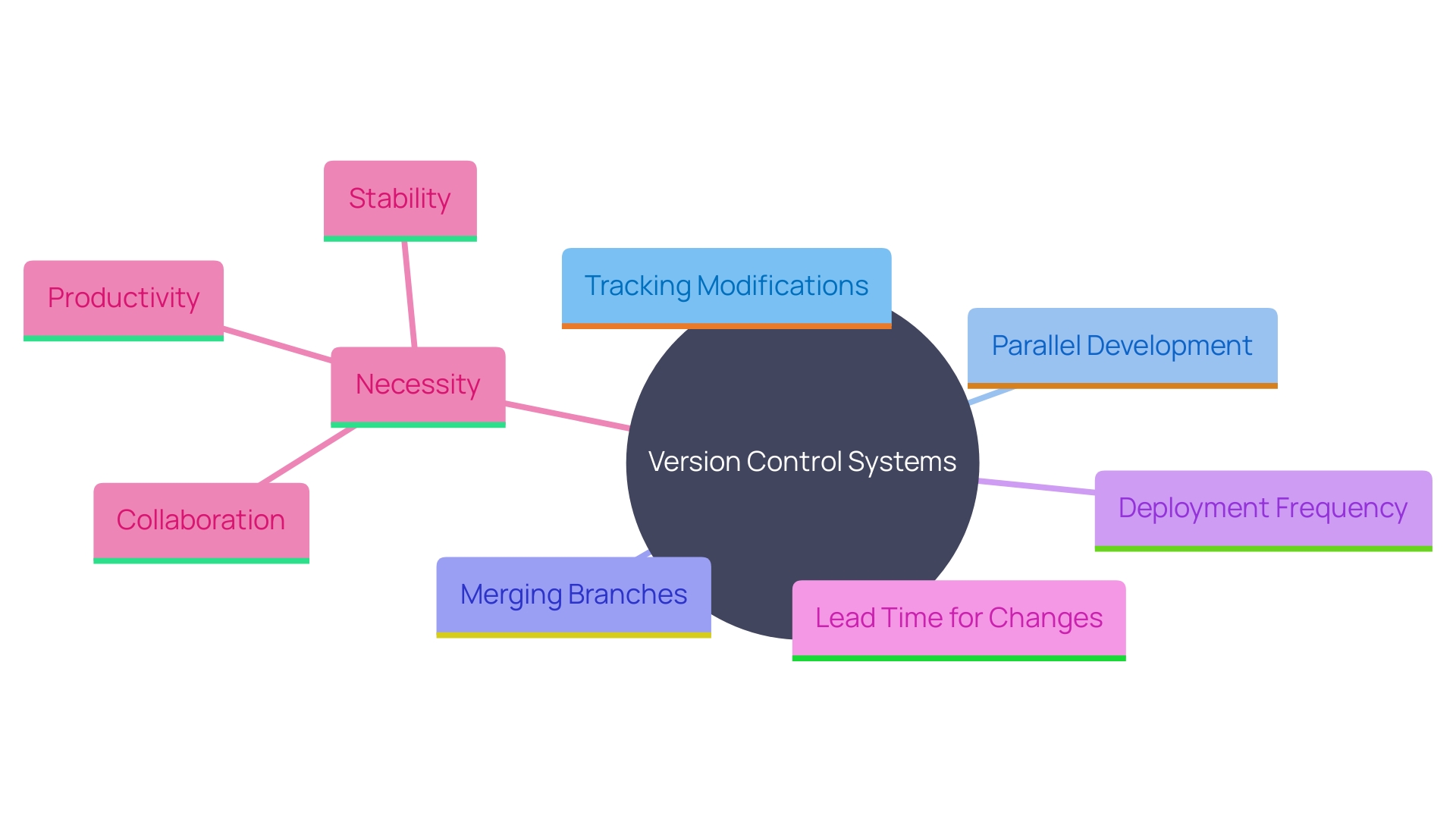

Utilizing Version Control

Version control is essential to the refactoring process. Systems such as Git, together with platforms built around version control such as GitHub, Azure DevOps, and Team Foundation Server, let developers track every modification made to the source files. This tracking capability is crucial for understanding the evolution of the code, troubleshooting issues, and reverting to stable versions when necessary.

Version control systems enable parallel development by allowing the creation of multiple branches from the main codebase. This functionality ensures that different developers can work on separate features or bug fixes simultaneously without interfering with each other’s work. As application development projects grow in complexity, the ability to merge these branches back into the main codebase seamlessly becomes essential. For instance, in a recent Ruby repository update, features, bug fixes, and documentation updates were managed through pull requests, demonstrating the efficient handling of various contributions.

Moreover, deployment frequency and lead time for changes are significantly improved with the use of version control systems. These systems help code changes reach production faster and with fewer errors, contributing to a lower mean time to restore (MTTR) in case of failures. The scalability of these systems further supports growing development initiatives, making them a vital tool in today's fast-paced technology sector.

Christopher Condo from Forrester Research emphasizes the rising need for version control systems, noting that as the demand for applications expands, so does the necessity for efficient version control. This is evident in the growing number of developers utilizing these systems to boost their profiles through regular commits and contributions to open-source projects.

Ultimately, version control systems are not just about maintaining a history of changes; they are about fostering collaboration, enhancing productivity, and ensuring the stability and scalability of software projects.

Performance Monitoring

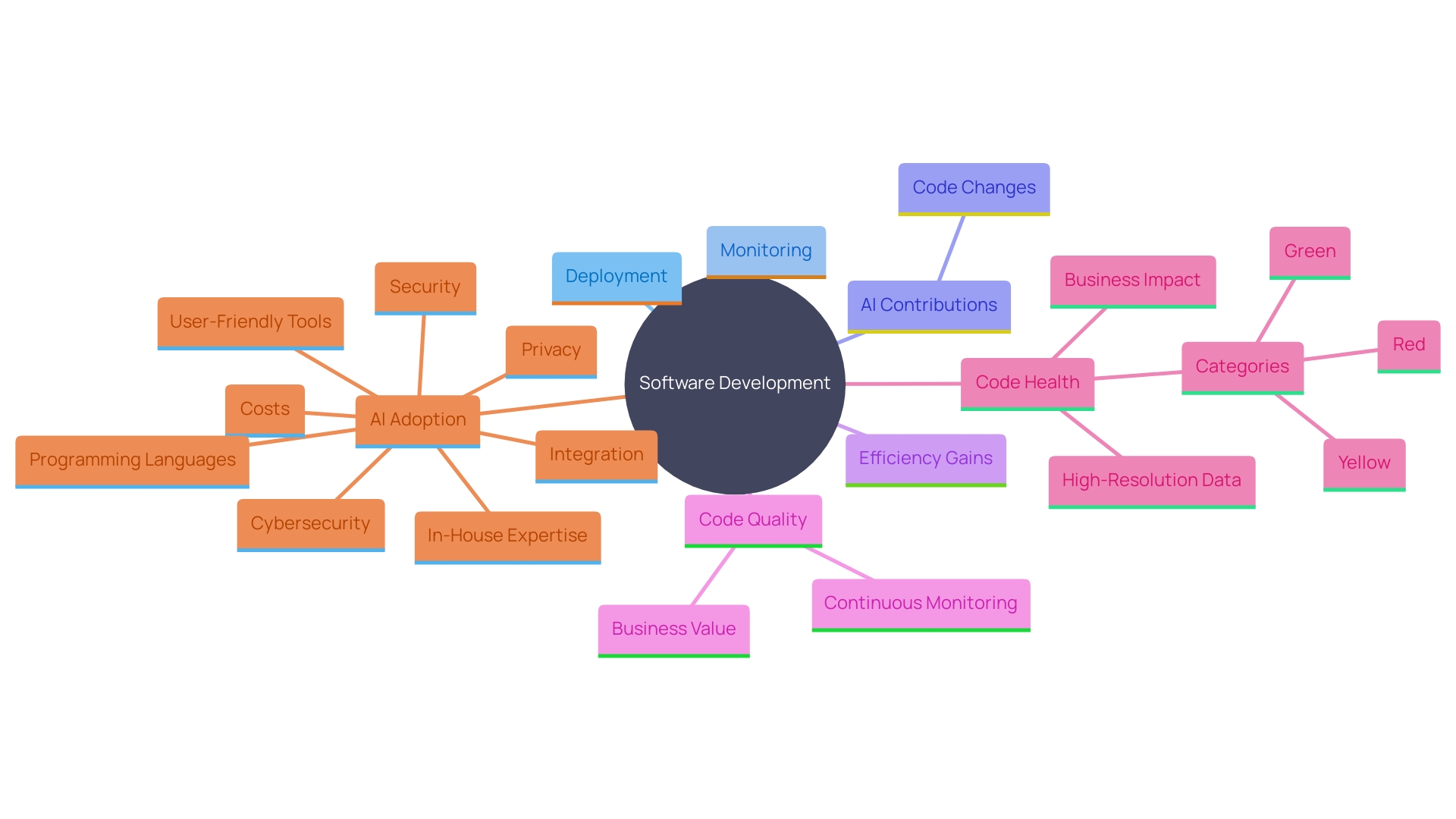

Tracking performance before and after modifications is essential for evaluating the effect of a change. Using high-resolution data from more than 50 proprietary codebases, including metrics such as time-in-development and defect counts, polynomial regression analysis has revealed a non-linear relationship between Code Health and business value. Measuring in this way helps confirm that refactoring improves code quality while maintaining or improving performance. For instance, even a codebase with a Code Health score of 9.0, categorized as Green, has not reached its full potential, which highlights the importance of continual assessment. Understanding refactoring methods and best practices helps developers keep their code clean, efficient, and easy to manage.
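At the level of a single function, a before-and-after measurement can be sketched with Python's standard `timeit` module. The two `find_duplicates` functions and the `measure` helper below are hypothetical; the sketch first checks that both versions return the same result and only then compares timings.

```python
import timeit


def find_duplicates_before(values: list[int]) -> list[int]:
    """Original version: repeated list scans make this quadratic."""
    duplicates = []
    for i, value in enumerate(values):
        if value in values[:i] and value not in duplicates:
            duplicates.append(value)
    return duplicates


def find_duplicates_after(values: list[int]) -> list[int]:
    """Refactored version: same output order, using sets for lookups."""
    seen, reported, duplicates = set(), set(), []
    for value in values:
        if value in seen and value not in reported:
            duplicates.append(value)
            reported.add(value)
        seen.add(value)
    return duplicates


def measure(func, data, repeat: int = 5) -> float:
    """Return the best wall-clock time in seconds over several runs."""
    return min(timeit.repeat(lambda: func(data), number=1, repeat=repeat))


if __name__ == "__main__":
    data = list(range(500)) * 2
    # Behavior must be identical before timings are worth comparing.
    assert find_duplicates_before(data) == find_duplicates_after(data)
    print(f"before: {measure(find_duplicates_before, data):.6f} s")
    print(f"after:  {measure(find_duplicates_after, data):.6f} s")
```

Actual timings depend on the machine and the input, so they are best treated as a relative comparison between the two versions rather than as absolute figures.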


Automated Refactoring Tools

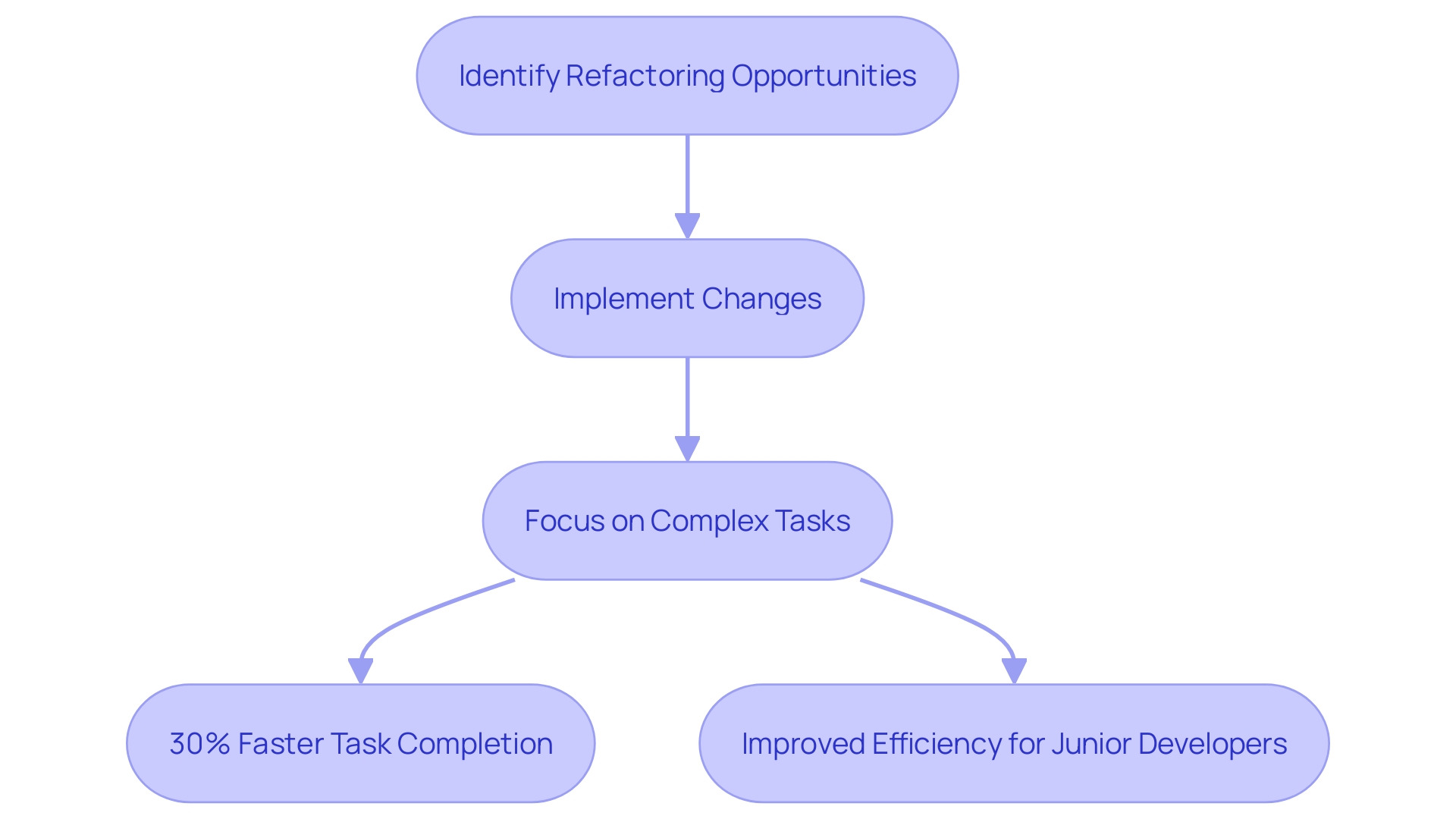

Automated tools, particularly those driven by AI, can significantly speed up the restructuring process. These tools identify common refactoring opportunities and streamline the implementation of changes, allowing developers to focus on more complex tasks. For instance, a controlled experiment with software development teams revealed that those using AI-powered tools completed tasks 30% faster than teams using traditional methods. At Google, ML-suggested modifications have significantly reduced the time spent on reviews, thereby boosting productivity. AI pair-programming tools like GitHub Copilot provide completion recommendations within integrated development environments (IDEs), significantly improving efficiency. This is particularly beneficial for junior developers, who see the most significant productivity gains. The incorporation of AI in development workflows not only minimizes repetitive programming tasks but also enhances overall quality and development speed.

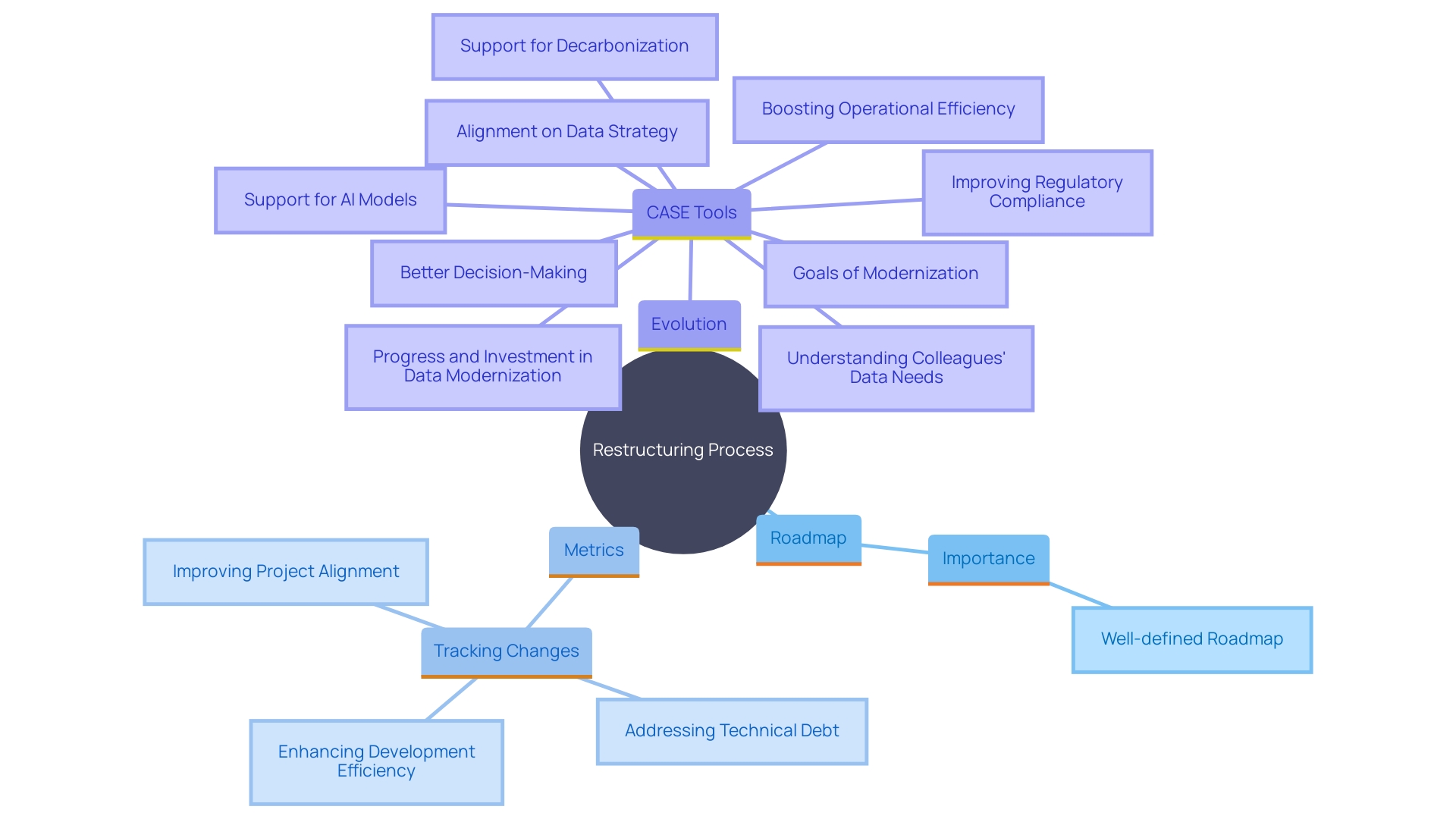

Creating a Refactoring Roadmap

A well-defined roadmap is essential in the restructuring process, ensuring alignment with overall project goals and objectives. This structured approach not only helps in identifying critical areas for improvement but also facilitates smoother transitions and future changes. For instance, a case study revealed that restructuring exposed underlying issues, making them easier to address in the future, which streamlined the process and enhanced organization.

Moreover, setting metrics and tracking changes is vital for measuring the effectiveness of refactoring efforts. This continuous improvement approach helps balance technical debt, which accumulates when development teams take shortcuts, against the evolving demands of clients. The Code Red study highlights that tasks can take significantly longer in unhealthy code, which underlines the business advantages of maintaining a healthy codebase.

The historical evolution of CASE tools also underscores the importance of systematic solutions in program development. Since their inception in the late 1960s, these tools have evolved to support a wide range of programming languages and methodologies, making complex structures more accessible and manageable. This evolution has empowered developers to transform their creative visions into functional, high-quality applications, ultimately driving efficiency and productivity in the development process.

Setting Objectives

Establishing clear objectives is crucial for pinpointing the exact areas of a codebase that need refactoring, so that efforts stay targeted and effective. In large software systems, navigating and modifying code can become increasingly complex over time. Simplifying the code not only makes it easier to read and maintain but also significantly reduces the time and effort needed for future modifications. Empirical data supports this, showing that tasks can take significantly longer in unhealthy codebases than in well-maintained ones, which puts businesses at a competitive disadvantage. By concentrating on clearly defined improvement objectives, developers can reduce risk and improve software quality, ultimately promoting a more efficient and productive development environment. Because much of a developer's time is spent reading existing code, that code needs to be optimized for ease of interpretation and maintenance.

Prioritizing Tasks

Prioritizing refactoring tasks is essential for making good use of time and resources. Focusing on high-impact areas first can yield considerable improvements in software quality and overall system performance. According to empirical data, improving code health can drastically reduce the time required to implement tasks. For instance, tasks that might take nine months in an unhealthy codebase could be completed in less than a month in a well-maintained one. Furthermore, generating code-improvement suggestions that match what developers themselves consider relevant can increase the adoption and impact of refactoring initiatives.

However, restructuring comes with its own set of challenges. Changes to a working system can be risky, as they may inadvertently affect other parts of the system and disrupt vital business operations. To mitigate these risks, developers can employ various strategies to make the process more manageable. For example, larger restructuring tasks that affect multiple subsystems should be prioritized based on their potential impact and the feasibility of implementation. On the other hand, tasks constrained within a single component should be assessed for any unforeseen consequences on the overall system.

Additionally, progress in software development methods, like Microsoft's adaptive learning-based strategies, seeks to improve coding efficiency and reduce risks linked to restructuring. By concentrating on high-impact areas and utilizing empirical data to inform decisions, organizations can significantly enhance their software quality and operational efficiency.

Establishing a Testing Environment

Establishing a dedicated testing environment is crucial for safe and effective code refactoring. Such an environment allows developers to experiment with changes without risking the stability of the production system. For instance, M&T Bank, a leading U.S.-based commercial bank, emphasizes stringent quality standards and compliance to ensure smooth operations and protect sensitive data during their digital transformation. This approach is particularly important in the banking industry, where security breaches can lead to severe financial and reputational damage.

Moreover, the importance of software testing in large-scale projects cannot be overstated. Effective testing identifies defects, ensures compliance with standards, and delivers reliable applications. Traditional methods often fall short in complex environments, but a dedicated testing environment offers a controlled space to validate changes. According to industry statistics, companies using AI-powered testing tools have seen a 40% reduction in testing time and a 60% decrease in bugs found in production. These tools enhance efficiency and ensure thorough testing, making them invaluable for maintaining high software quality.

In real-world scenarios, such as the CI infrastructure evolution from Java to Go Monorepo, having a dedicated testing environment helped address challenges and stabilize the workflow. This setup not only provides a sandbox for safe experimentation but also offers a window into the historical performance of tests, allowing for better categorization and analysis. The legacy service's limitations in visibility and extensibility underscore the need for a robust testing setup to support different strategies and accommodate various programming languages.

In summary, a dedicated testing environment is essential for validating code changes safely and efficiently. It minimizes risks, supports compliance, and enhances the overall quality of software, ensuring that organizations can meet their operational and security requirements effectively.

Deploying and Monitoring Refactored Code

Once refactoring is complete, deploying the changes and closely monitoring the system helps identify unforeseen issues. Ongoing observation confirms that the revised code operates as expected. For instance, one study found that 80% of the code modifications in certain landed change lists were AI-authored, reducing migration time by 50%. Efficiency gains of this kind make thorough monitoring and validation after deployment all the more important. Effective tooling can predict necessary edits with high accuracy, supporting smooth transitions. Moreover, the use of high-resolution data sets to measure code health has shown a non-linear relationship between code quality and business value, emphasizing the critical role of continuous monitoring in maintaining optimal code performance.

Conclusion

Refactoring stands as a cornerstone in the realm of software development, fostering enhanced code quality, maintainability, and adaptability. By systematically restructuring code without altering its external behavior, developers can significantly improve readability and reduce complexity. This practice is particularly vital in large systems, where the intricacies of navigating and modifying code can lead to inefficiencies and increased business risks, as highlighted by empirical studies such as the Code Red study.

Adopting key refactoring techniques, such as Extract Method and Adaptive Refactoring, empowers developers to streamline their workflows and address potential issues proactively. These methods not only bolster code quality but also facilitate easier modifications and testing, ultimately driving productivity. The integration of automated tools further accelerates this process, allowing teams to focus on complex tasks while ensuring that their code remains clean and efficient.

Establishing a well-defined roadmap and setting clear objectives for refactoring efforts are crucial for maintaining alignment with overall project goals. Prioritizing tasks based on their potential impact can significantly enhance code health and performance, enabling organizations to meet evolving demands effectively. As demonstrated by industry leaders like M&T Bank, maintaining stringent code quality standards is essential, particularly in sectors with rigorous security and regulatory requirements.

In summary, the continuous practice of refactoring, combined with robust testing and monitoring, equips development teams to create high-quality software that not only meets current needs but is also adaptable for future challenges. Emphasizing these best practices ensures that codebases remain manageable and efficient, ultimately driving sustained success in the fast-paced world of software development.

Frequently Asked Questions

What is refactoring in application development?

Refactoring is the process of improving the internal structure of an application without altering its external behavior. It focuses on enhancing readability, reducing complexity, and facilitating easier maintenance, which ultimately leads to higher quality software.

Why is refactoring important?

Refactoring is crucial because it simplifies complex scripts, particularly in large systems, making them easier to navigate and modify. It helps teams adapt to changing requirements, fix hidden bugs, and ensure that software evolves with user needs, reducing business risks associated with unhealthy code environments.

What are some techniques used in refactoring?

Several techniques can effectively refactor code, including: Extract Method, Preventive Refactoring, Corrective Refactoring, and Adaptive Refactoring.

How does refactoring relate to software testing?

Refactoring should be accompanied by consistent testing, especially automated tests, to ensure changes do not introduce new bugs. Automated testing enhances efficiency and quality assurance by validating that the software remains functional after modifications.

What is the red-green-refactor cycle in Test-Driven Development (TDD)?

The red-green-refactor cycle is a disciplined method in TDD that involves writing a failing test (red), writing the minimal code necessary to pass the test (green), and then refactoring the code to improve its structure without changing its behavior.

What is the significance of method decomposition in refactoring?

Method decomposition involves breaking down complex methods into smaller, focused methods, aligning with the DRY (Don't Repeat Yourself) principle. This practice enhances clarity, maintainability, and simplifies debugging and testing.

How can version control systems assist in refactoring?

Version control systems, like Git, allow developers to track changes, manage multiple branches for parallel development, and revert to stable versions if necessary. This capability is essential for maintaining stability and collaboration during refactoring efforts.

What are the risks associated with refactoring?

Refactoring can introduce new bugs, particularly in large codebases. To mitigate these risks, developers should employ incremental changes, ensuring that modifications are manageable and thoroughly tested before integration.

How can AI tools enhance the refactoring process?

AI tools can identify common refactoring opportunities and automate parts of the restructuring process, allowing developers to focus on more complex tasks. Studies have shown that teams using AI tools can complete tasks significantly faster than those using traditional methods.

Why is establishing a dedicated testing environment important?

A dedicated testing environment allows developers to experiment with changes safely without risking the stability of the production system. This is especially crucial in industries like banking, where security and compliance are paramount.

What should be done after completing a refactoring process?

After refactoring, it is essential to deploy the changes and closely monitor the system to identify any unforeseen issues. Continuous monitoring ensures that the revised program operates as intended and maintains code quality over time.