Introduction

Code efficiency is a crucial aspect of software development that goes beyond simply making code work. It involves crafting code that runs smoothly, uses minimal resources, and provides an optimal user experience. In this article, we will explore various strategies and best practices for achieving code efficiency and maximizing productivity.

From breaking down code into smaller functions or modules to optimizing code for performance, we will delve into the importance of using appropriate data structures and avoiding unnecessary computations. We will also discuss the significance of considering algorithmic complexities, implementing secure coding practices, and conducting regular vulnerability scanning and patching. Additionally, we will explore the role of network monitoring and intrusion detection, as well as the importance of backup and disaster recovery strategies.

Finally, we will highlight the significance of detecting and responding to code execution issues in a structured and efficient manner. By implementing these techniques and following best practices, developers can ensure that their code performs optimally, leading to efficient and results-driven software development.

Understanding the Importance of Code Efficiency

Efficiency in programming is not only related to making the software functional; it involves shaping it to operate seamlessly, utilize minimal resources, and deliver an optimal experience for users. It's a strategic approach that encompasses the entire lifecycle of software development, from writing maintainable, 'humane' code that prioritizes clear communication among developers, to enhancing existing processes for improved outcomes.

A tangible example of this is seen in the work with Pentaho, a suite of BI tools, where a transformation process was reevaluated for efficiency. Initially, the process involved extracting and inserting information across databases, which, while logical, was not scalable. By revisiting and optimizing this workflow, the insertion of data became more efficient, directly impacting the system's performance as data volume grew.

The idea of 'humane programming,' as introduced by Mark Seemann, emphasizes that software should be a dialogue among current and future developers. This approach not only enhances the readability and maintainability of the program but also aligns with the goal of developing applications at a sustainable pace and easing problem resolution.

Research supports the idea that more expressive, high-level programming languages can improve productivity, with one study showing that developers produce more lines of code when using these languages. The Developer Experience Lab's research further suggests that improving the quality of the coding environment, referred to as Developer Experience (DevEx), can lead to more sustainable and efficient development practices.

In the pursuit of efficiency, it is essential to consider the interplay between various qualities (process, programming, system, and product) as they collectively influence performance. AI-powered tools like GitHub Copilot have been crucial in improving developers' productivity across the board, from reducing cognitive load to enhancing code quality.

Breaking Down Code into Smaller Functions or Modules

Breaking down complex software into digestible, functional units is called modularization, a method akin to city planning in which every district serves a specific purpose. This approach not only simplifies development but also facilitates understanding and maintenance. For example, a sprawling Angular project can be likened to a city: well-planned code architecture ensures navigability and efficiency, reducing the likelihood of system failure. Embracing the principle of separation of concerns is pivotal for creating testable, high-quality software. Modular programming adheres to this principle by offering clarity and allowing separate modules to be tested independently, which is crucial for detecting flaws promptly and consistently. As one experienced developer put it, 'Modularity, clarity, and independence are essential features of testable software.'

Furthermore, Amdahl's Law serves as a reminder that the speedup gained from parallel execution is limited by the portion of the program that cannot be parallelized. This underscores the importance of optimizing each module to achieve overall efficiency. In performance engineering, the rise of multi-core processors has shifted attention from raising clock speeds to improving software structure and design.
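The limit described by Amdahl can be made concrete with a short calculation. The following is a minimal sketch, assuming a fixed fraction of the runtime can be spread evenly across a number of workers; the function name and parameters are illustrative and do not come from any cited source.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a program runs in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # The serial part always costs the same, however many workers are added.
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)
```

With 90 percent of the work parallelizable, the bound stays below 10x no matter how many workers are added, which is why the serial portion of each module deserves attention.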

Refactoring plays a significant part in keeping a program flexible. It involves reworking the internal structure of the program without altering its external behavior, aiming to improve understandability, maintainability, and performance. A common mistake in development is overlooking the necessity of refactoring, which can lead to tightly coupled and inflexible code.

In practice, architectural patterns like Hexagonal Architecture can shield the core business logic from external changes through well-defined ports, promoting interchangeability and resilience. This is particularly beneficial for less experienced developers who may struggle with complex codebases.

Performance benchmarking also plays an essential role, and it should focus on relevant use cases to avoid skewed results. Transparency matters as well: the 2024 OSSRA report highlights the importance of maintaining a Software Bill of Materials (SBOM) for open-source components, which can be as prone to security threats as proprietary software.

Ultimately, modularization is not only a technical endeavor; it is a strategic step towards developing software that is robust, comprehensible, and flexible enough to accommodate future changes or expansion.

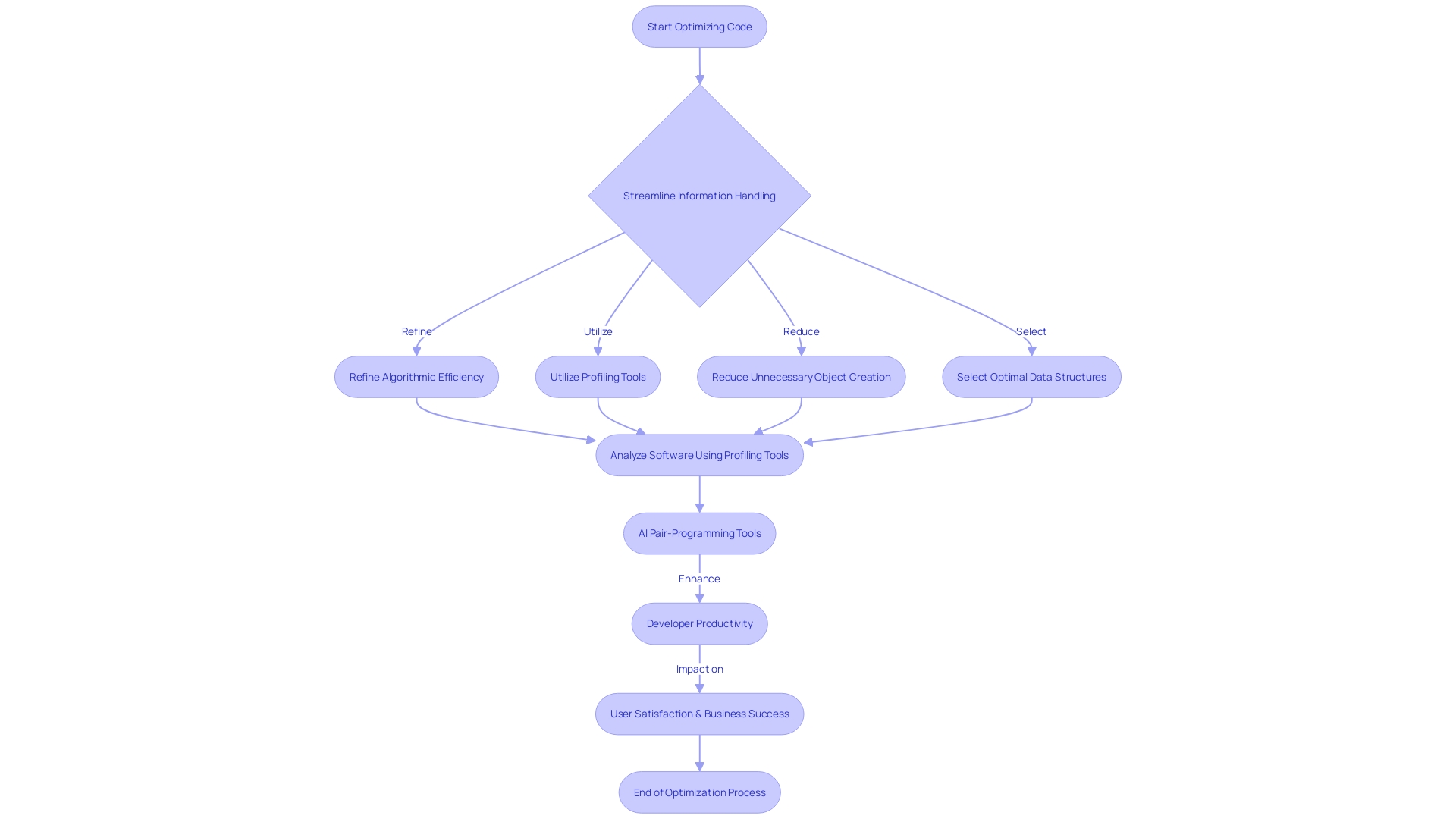

Optimizing Code for Performance

Enhancing code for performance is a complex process that involves streamlining information handling, refining algorithmic efficiency, and utilizing robust profiling tools. For instance, consider the case of Pentaho, a comprehensive suite of BI tools. An IT Analyst faced the challenge of improving a transformation process that initially seemed logical but was flawed due to inefficient insertion from multiple queries into a single database table. This case underscores the importance of scrutinizing workflows to identify and rectify such bottlenecks.

To address these issues, it's essential to adopt a proactive approach. Start by reducing the creation of unnecessary objects, which can lead to heavy memory usage and frequent garbage collection. Employ immutable objects when feasible and select the data structure best suited to your access pattern, such as an ArrayList for fast indexed access or a LinkedList for frequent insertions and deletions.
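The same trade-off can be sketched in Python, under the assumption that the built-in list stands in for an array-backed list and collections.deque stands in for a structure with cheap insertions and removals at the ends. The function names are illustrative.

```python
from collections import deque


def drain_front_list(items):
    """Consume items from the front of an array-backed list."""
    queue = list(items)
    out = []
    while queue:
        # pop(0) shifts every remaining element, so each removal is O(n).
        out.append(queue.pop(0))
    return out


def drain_front_deque(items):
    """Consume items from the front of a deque."""
    queue = deque(items)
    out = []
    while queue:
        # popleft() removes from the front in constant time.
        out.append(queue.popleft())
    return out
```

Both functions return the same result; the difference only shows up in running time as the input grows, so it is worth measuring with realistic sizes before switching structures.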

Profiling tools are invaluable in identifying bottlenecks within your application. By systematically analyzing software, these tools assist developers in identifying areas that require improvement, directing them towards efficient optimization strategies.
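As one possible illustration, Python ships with the cProfile and pstats modules. The sketch below profiles a single call and returns the report as text; slow_sum_of_squares and profile_call are hypothetical helpers written for this example.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Deliberately simple workload to give the profiler something to measure."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args):
    """Run func under cProfile and return (result, report text)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and keep only the five most expensive entries.
    stats.sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()
```

Calling profile_call(slow_sum_of_squares, 100_000) returns the computed value together with a table of call counts and timings, which is usually enough to see where the time goes.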

Furthermore, performance engineering has become increasingly vital in an era of hardware limitations, as highlighted by detailed examinations of Moore's Law. As we encounter constraints in increasing processor clock speeds, the emphasis has shifted to multi-core processors and architectural improvements. This shift underscores the necessity for developers to write optimized code, as hardware advancements alone no longer guarantee performance boosts.

In the realm of information communication, advances in technology have aimed at reducing information volume and communication time, which is crucial for services like IoT and cloud computing. This optimization matters most for systems requiring fast transmission, such as those using AI for image recognition from surveillance cameras.

Application performance optimization is not just about code. It directly influences user satisfaction, operational efficiency, and business success. Key metrics, such as response time, define the user experience, making optimization a critical component of application development.

Lastly, AI pair-programming tools like GitHub Copilot have been shown to significantly enhance developer productivity, benefiting all skill levels and positively impacting various productivity aspects from task time to learning.

Using Appropriate Data Structures

Choosing an optimal structure is like selecting the right tools for a task - it can greatly improve the efficiency of your operations. Structures are essential components in coding that organize and store information, much like a well-arranged library. The importance of an accurate information organization is clear in its capacity to enable fast access, modification, and administration of information within a program.

Consider the use of dictionaries in programming, a structure that pairs keys with values, allowing for rapid retrieval. This is particularly advantageous for tasks like spell checking, where a HashMap can swiftly suggest word corrections. Such efficiency is owed to the underlying implementation of the structure, such as a hash table or a binary search tree, which dictates the performance of insertions and lookups.

The role of data structures extends beyond simple storage; they embody a formal model for organization, which becomes crucial when applications process and manipulate vast datasets. The appropriate structure can greatly decrease the time complexity of operations. For example, a hash table can access any item in constant time on average, regardless of the dataset's size, a characteristic that benefits dynamic workloads such as a digital dictionary or a browser's information management.
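A minimal sketch of the word-correction idea, using Python's built-in dict (a hash table). The CORRECTIONS table and the suggest function are made up for illustration; a real spell checker would use a far larger dictionary and fuzzy matching.

```python
# Hypothetical lookup table mapping common misspellings to corrections.
CORRECTIONS = {
    "teh": "the",
    "recieve": "receive",
    "adress": "address",
}


def suggest(word: str) -> str:
    """Return a stored correction for word, or the word itself if none exists."""
    # dict.get is an average constant-time lookup, independent of table size.
    return CORRECTIONS.get(word.lower(), word)
```

The lookup cost stays roughly flat as the table grows, which is the property the paragraph above attributes to hash tables.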

In the realm of software development, the selection of structures is not trivial. It's a strategic decision that impacts code performance, as highlighted by experts like Micha Gorelick and Ian Ozsvald in 'High Performance Python'. They claim that a significant portion of efficient programming involves choosing a structure that can quickly answer the specific queries you need to run against your data. Whether it's a contiguous block of memory or a node-based structure like a linked list, the nature of the collection is pivotal in determining the efficiency of information access and manipulation.

To illustrate, envision a delivery company that initially utilized bicycles for transportation. As the company expanded to a larger city, the limitations of bicycles became apparent due to increased delivery distances. This scenario mirrors the importance of data structures in programming—where the wrong choice can slow down operations, just as bicycles proved inefficient over longer distances, whereas the correct choice, akin to switching to motorcycles or cars, can lead to seamless and swift deliveries.

Avoiding Unnecessary Computations

Reducing execution time by eliminating unnecessary computation is more than a technical task; it is a strategic approach to improving the efficiency of the program. Developers must delve into the complexities of software design and computer architecture to truly refine their programs. A telling example is exploiting the properties of a character encoding like ASCII to eliminate redundant calculations. For instance, leveraging the distinct bit patterns of ASCII characters can streamline processing, as demonstrated in a case where an 8x speedup was realized over a naive implementation. Such optimizations cannot be applied by recipe; they require a deep understanding of the problem at hand and a willingness to explore various solutions.
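One well-known ASCII property is that an uppercase letter and its lowercase counterpart differ only in bit 0x20. The sketch below uses that fact to lowercase ASCII bytes without a lookup table. It is an illustration of the idea only, not the implementation behind the 8x figure, and in everyday Python the built-in bytes.lower() would be the practical choice.

```python
def ascii_lower(data: bytes) -> bytes:
    """Lowercase ASCII letters by setting bit 0x20; other bytes pass through."""
    # 0x41..0x5A is the range 'A'..'Z'; OR-ing with 0x20 maps it to 'a'..'z'.
    return bytes(b | 0x20 if 0x41 <= b <= 0x5A else b for b in data)
```

In a lower-level language the same bit operation can be applied to many bytes at once, which is where gains of this kind typically come from.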

As technology evolves, new architectural methods emerge, like a novel approach to transformer models that replaces 'self-attention' with 'overlay' techniques, where matrix elements interact more selectively, showcasing how targeted optimizations can lead to substantial improvements. This strategy has been shared through platforms like GitHub, providing developers with practical examples on which to model their own optimizations.

Writing code prematurely can often lead to wasted effort and obsolete solutions. This sentiment is echoed by Guillermo Rauch, who highlights the futility of investing effort in code that might never be needed or may become outdated before it finds use. The philosophy of 'make it work, make it right, then make it fast' underscores the importance of focusing on functionality before optimization. However, this adage requires nuance; optimization is not an afterthought but an integral part of the development process that demands continuous attention.

AI pair-programming tools like GitHub Copilot are revolutionizing productivity by offering developers real-time completions. These systems utilize large language models to provide context-aware suggestions that can span multiple lines, enhancing productivity across all skill levels, especially for junior developers. The combination of human knowledge and AI support is transforming how developers handle optimization, decreasing mental burden and enhancing both task effectiveness and quality of the code.

The increasing need for energy efficiency, especially in data centers that consume more than one percent of global power usage, highlights the importance of optimized programming that can decrease server workloads. By implementing strategic optimization and being open to adopting new technologies and methodologies, developers can achieve notable progress in producing more efficient and effective software, ultimately contributing to the overarching objective of sustainable technology practices.

Considering Algorithmic Complexities

Delving into the intricacies of algorithmic complexities is more than an academic exercise; it's a practical skill that sharpens a developer's ability to choose the right tool for the job. At the core of this lies the comprehension of time and space complexities, which can determine the success or failure of code in execution. A brute-force algorithm, while straightforward, often pales in comparison to more sophisticated methods when scaling to larger datasets. For example, even though a sorting algorithm might efficiently handle a small array, it could struggle with millions of elements, emphasizing the need for a mathematical way to assess how cost grows with input size.
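To make the contrast concrete, here is a small sketch comparing a brute-force duplicate check with a hash-based one. Duplicate detection is chosen only as a compact example; both functions and their names are illustrative, and the second assumes the values are hashable.

```python
def has_duplicates_bruteforce(values):
    """Compare every pair of elements: O(n^2) comparisons in the worst case."""
    n = len(values)
    for i in range(n):
        for j in range(i + 1, n):
            if values[i] == values[j]:
                return True
    return False


def has_duplicates_hashed(values):
    """Track seen elements in a set: O(n) expected time, O(n) extra space."""
    seen = set()
    for value in values:
        if value in seen:
            return True
        seen.add(value)
    return False
```

On a handful of items the two are indistinguishable. On millions of items the quadratic version becomes impractical, while the hashed version trades extra memory for linear time.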

The recent breakthroughs in network flow algorithms, such as Kyng's work, underscore the importance of this evaluation. His algorithm, adept at finding the optimal flow in complex networks from transportation to internet traffic, showcases how efficiency leaps can be made when the right algorithm is applied - delivering solutions in real-time, a feat that had eluded researchers for nearly a century.

In both academic and industry settings, the effect of thorough evaluation is undeniable. It provides a comprehensive understanding of a system's behavior, its limitations, and potential improvements. This not only enriches the developer's intuition but also propels the creation of more efficient software. As we navigate through various algorithms, from the fundamental to the cutting-edge, our goal is a blend of correctness, simplicity, and efficiency, a balance that, when struck, leads to superior program performance.

Best Practices for Code Design and Development

Following recommended principles in program design and development is essential for guaranteeing that your application performs at its best. Such practices encompass industry-standard programming conventions and design principles that significantly enhance code efficiency. This involves giving priority to the readability and maintainability of the program, important factors that affect the quality of the design.

Mark Seemann, a software architect, introduced the concept of humane programming in his book 'Code That Fits In Your Head' to address the complexity of software and advocate for sustainable software development. Humane programming is based on the idea that software is a means of communication among present and future developers. This emphasizes the significance of readability, guaranteeing that the program's intention is clear for future reference and maintenance.

Moreover, the idea of a design system plays a pivotal role in creating a consistent user experience. A design system acts as a foundational framework of design components and rules that guide the development of digital products, ensuring cohesion across various elements like typography, color, and layout.

Industry experts emphasize the necessity of refactoring, which involves restructuring existing code to enhance readability and performance without altering its functionality. This practice is crucial for preserving code quality over time.

Eleftheria, an experienced business analyst and tech writer, stresses the significance of clean code—code that is straightforward and simple to understand. Utilizing meaningful names for variables, functions, and classes is a critical part of writing clean code, as it clarifies the code's purpose and facilitates easier comprehension and collaboration among developers.

Publications like 'Design Patterns: Elements of Reusable Object-Oriented Software' introduce a taxonomy of 23 design patterns that offer templated solutions for recurring problems. These patterns are classified into Creational, Structural, and Behavioral types, each addressing specific challenges in engineering. Selecting the appropriate design pattern requires careful problem identification to avoid over-complicating solutions.

Additionally, advancements in AI-powered pair-programming tools, such as GitHub Copilot, have demonstrated substantial improvements in developer productivity across all skill levels. These tools offer context-sensitive suggestions that enhance product quality, reduce cognitive load, and foster learning.

In the realm of application development, the discussion has shifted towards sustainable practices that prioritize the developer experience (DevEx). Research by Microsoft and GitHub's Developer Experience Lab during the pandemic has revealed that optimizing the coding environment is a more effective approach to improving developer outcomes than merely increasing expectations.

To summarize, contemporary software development is not only about writing functional code; it's about writing code that is maintainable, scalable, and understandable. By adopting these best practices, developers can ensure that their code not only meets current standards but also stands the test of time.

Compute Resources and Use Case Considerations

Maximizing code efficiency is not a one-size-fits-all endeavor; it requires a keen understanding of the compute resources at hand and the particular demands of the application in question. To achieve it, developers must embrace a results-oriented approach that leverages specific hardware capabilities and tailors optimization strategies to the application's unique characteristics. For example, the optimization of a Blazor WebAssembly application, which reduced processing times from 26 to 9 seconds, highlights the significant influence that a focused approach can have on efficiency.

Modern programs must excel in diverse environments, from websites to applications across various operating systems. Azure, for example, must maintain high performance across an eclectic mix of servers and configurations. Given the constraints of time and computational resources, coupled with the growing complexity of adding new services and hardware, finding the optimal solution is often not feasible. Instead, optimization algorithms aim for a near-optimal solution that balances resource use and time efficiency. Such algorithms have proven invaluable in establishing environments for programs and hardware testing platforms, illustrating their broad applicability across different domains and the complexity of the optimization challenges they address.

Optimization extends beyond simply fine-tuning code; it's about strategic decisions that can significantly reduce costs, such as selecting the right virtual machine types or enhancing data processing engine configurations. Innovations like NVIDIA's Inception program demonstrate the importance of providing cutting-edge technical tools and resources to startups at all stages, fostering rapid evolution through optimized technology. Furthermore, as technologies like ChatGPT reach unprecedented user milestones in record time, the need for content that targets domain experts and computational scientists becomes more pressing. Such content not only serves as a guideline but also encourages hands-on experimentation with optimization techniques tailored for specific applications or models.

In the realm of optimization, software scalability and system efficiency are deeply interconnected. Techniques like caching can dramatically improve performance, while optimizing I/O operations is crucial for efficient file handling and network communication. Performance testing and profiling are essential practices that help identify areas ripe for optimization, balancing the trade-offs between optimizing software and maintaining its readability and maintainability. As data centers' energy consumption becomes an ever-greater concern, accounting for over one percent of global power usage, energy efficiency emerges as a critical aspect of optimization that software development organizations must not overlook.
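Caching, mentioned above, can be sketched with the standard library's functools.lru_cache. The expensive_lookup function and the CALLS counter are placeholders for a genuinely slow operation, such as a database query or a remote call.

```python
from functools import lru_cache

# Counts how many times the underlying work actually runs.
CALLS = {"count": 0}


@lru_cache(maxsize=128)
def expensive_lookup(key: str) -> str:
    """Stand-in for slow work; results are cached per distinct key."""
    CALLS["count"] += 1
    return key.upper()
```

Repeated calls with the same key are answered from the cache without re-running the function body. The trade-off is memory use and the risk of stale results, so a cache like this suits functions whose output depends only on their arguments.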

Identifying and Eliminating Bottlenecks

Improving code for efficiency is a complex undertaking, often likened to the intricacy of progressing through higher levels of mathematics. As you navigate from the simplicity of coding for a single-user application to the intricacies of a distributed system with concurrent users, challenges such as maintaining data consistency become evident. This can be seen in scenarios where basic operations, like incrementing a counter, become complex due to multiple users and processes interacting with the system simultaneously.
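The counter example can be illustrated within a single process using threads. This is a minimal sketch with hypothetical names (SafeCounter, run_workers); a distributed system would need a different mechanism, such as an atomic operation in a shared data store, which is outside the scope of this example.

```python
import threading


class SafeCounter:
    """Counter whose read-modify-write step is protected by a lock."""

    def __init__(self):
        self._value = 0
        self._lock = threading.Lock()

    def increment(self):
        # Without the lock, two threads could read the same value and lose an update.
        with self._lock:
            self._value += 1

    @property
    def value(self):
        with self._lock:
            return self._value


def run_workers(counter, workers=8, increments=1000):
    """Increment the counter from several threads and return the final value."""

    def work():
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value
```

The lock guarantees a correct total at the cost of serializing increments, a small instance of the consistency versus throughput trade-off that grows more pronounced in distributed settings.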

To tackle these challenges, a strong evaluation of outcomes is essential. It provides insight into a system's behavior, uncovers its limitations, and identifies areas that require improvement. Comprehending the inner operations and policies of your system is not only about sustaining efficiency; it is about improving your intuition as a developer to construct exceptional systems moving ahead.

In the context of distributed systems, where performance bottlenecks can emerge from data consistency issues, tools like Amazon Q offer valuable assistance. By catching, diagnosing, and resolving bugs that are otherwise challenging to test and reproduce, developers can ensure smoother operation and maintain the integrity of their applications.

Performance engineering's relevance has grown in an era where Moore's Law faces physical limitations. The development of multi-core processors and architectural improvements has shifted attention from increasing clock speed to optimizing existing software. Using tools and strategies to identify and eliminate bottlenecks is essential, particularly because unoptimized software will not necessarily run faster on newer hardware.

It's important to remember that the goal is not to fix every possible issue but to prioritize and tackle small, impactful areas. This approach is key to the application's success, where a clear understanding of good versus bad API responses can greatly affect results. Performance monitoring tools and program profiling are the main techniques for identifying and resolving these critical areas, resulting in quicker, more efficient program execution.

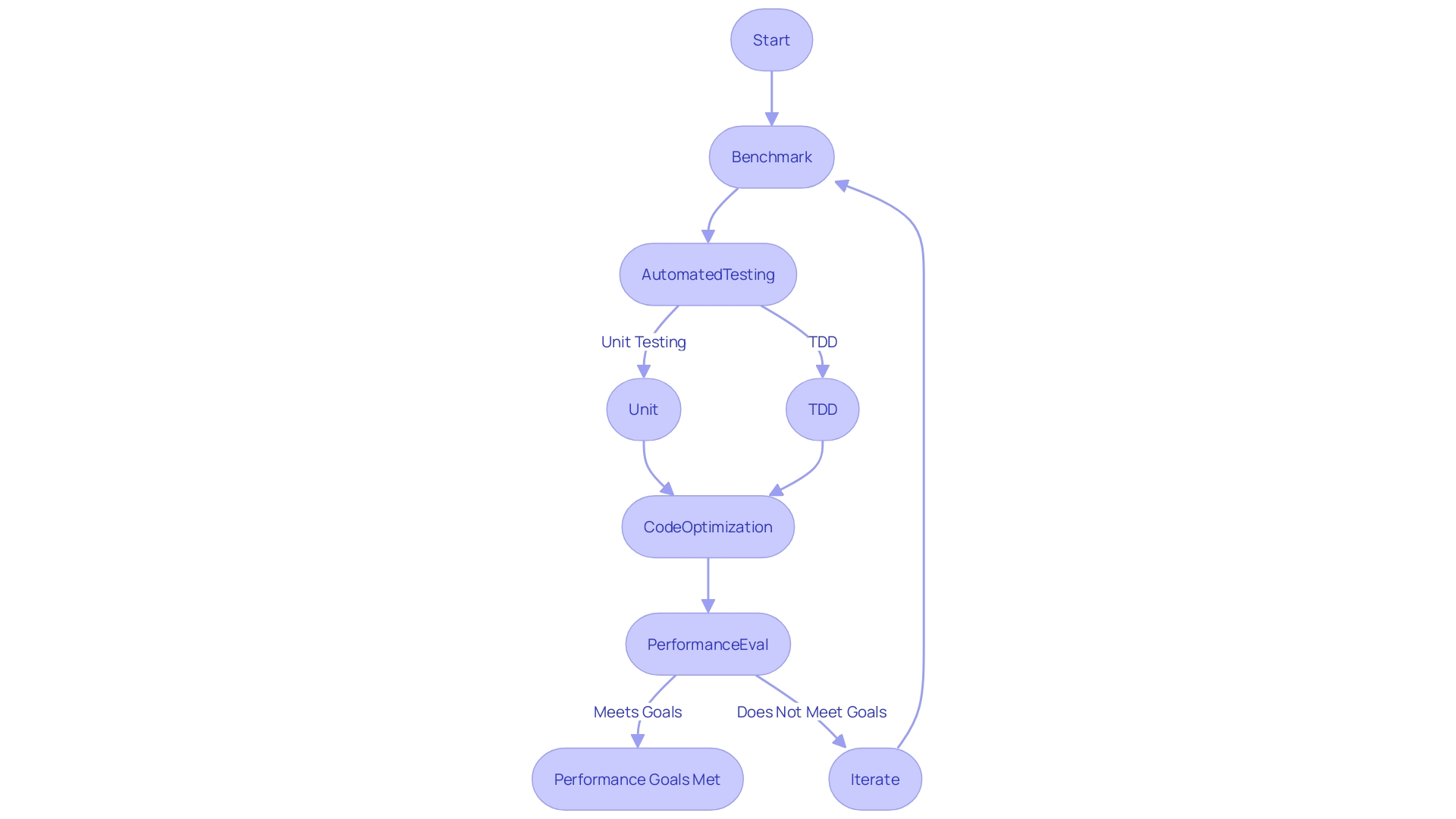

Benchmarking and Performance Testing

Benchmarking and performance testing stand at the forefront of performance engineering and are essential steps for developers who want their software to operate efficiently. These methodologies enable accurate measurement of performance, providing a tangible measure of the benefits yielded by optimization techniques. Benchmark testing, specifically, allows developers to compare their application's performance against predefined standards or competitors, guiding them toward industry-leading performance.
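A simple micro-benchmark can be sketched with Python's timeit module. The two string-building functions are arbitrary candidates picked for illustration, and benchmark is a hypothetical helper; absolute timings depend on the machine, so none are shown here.

```python
import timeit


def concat_loop(n):
    """Build a string by repeated concatenation."""
    text = ""
    for i in range(n):
        text += str(i)
    return text


def concat_join(n):
    """Build the same string with a single join."""
    return "".join(str(i) for i in range(n))


def benchmark(func, *args, repeat=5, number=100):
    """Return the best observed time per call, in seconds."""
    timings = timeit.repeat(lambda: func(*args), repeat=repeat, number=number)
    # The minimum is the run least disturbed by other activity on the machine.
    return min(timings) / number
```

Comparing benchmark(concat_loop, 10_000) with benchmark(concat_join, 10_000) on the target hardware gives a measured basis for choosing between the two, rather than an assumption.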

Automated Testing, including Unit Testing and Test Driven Development (TDD), is the bedrock upon which reliable applications are built. Automated Testing covers a range of tests that assess different aspects such as correctness, security, and load, while Unit Testing examines the smallest units of the program to ensure their proper function. TDD, on the other hand, reverses the traditional code-first approach by prioritizing test creation before coding, ensuring each development step is validated.
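A minimal sketch of the test-first idea using Python's unittest module. In a TDD workflow the tests in SlugifyTests would be written before slugify exists; the function, the test names, and the run_tests helper are all invented for this example.

```python
import unittest


def slugify(title: str) -> str:
    """Lowercase the title and join its words with hyphens."""
    return "-".join(title.lower().split())


class SlugifyTests(unittest.TestCase):
    """Unit tests that pin down the expected behavior of slugify."""

    def test_spaces_become_hyphens(self):
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_extra_whitespace_is_collapsed(self):
        self.assertEqual(slugify("  Code   Efficiency "), "code-efficiency")


def run_tests():
    """Run the test case programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test checks one small behavior, so a failure points directly at the unit that broke.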

As Moore's Law encounters the physical limitations of processor speed improvements, the emphasis has shifted to multi-core processors and architectural enhancements. This development emphasizes the significance of code optimization, as software can no longer depend on raw hardware advancements to enhance its effectiveness. In this landscape, testing and benchmarking are not mere formalities but essential practices that underpin the creation of robust, scalable, and efficient applications.

The importance of testing the functionality is echoed in industry sentiments, where maintaining high efficiency is not just a goal but a necessity for a product's lifespan. A comprehensive performance evaluation reveals intricate system behaviors, setting the stage for future improvements and innovation. The philosophy of 'make it work, make it right, then make it fast' encapsulates the iterative journey of application development, where the final step of optimization is critical for delivering exceptional applications that stand the test of time and usage.

Secure Coding Practices to Prevent Remote Code Execution (RCE) Vulnerabilities

To maintain efficient and reliable programs, it's crucial to prioritize secure coding practices. These practices are a strong defense against RCE vulnerabilities, which can be a gateway for attackers to compromise applications. Effective input validation is critical to ensuring that only sanitized data enters the system, acting as a first line of defense against SQL injection and other forms of attacks that exploit input fields. Moreover, the adoption of regular vulnerability scanning, as part of a comprehensive security strategy, plays a significant role in identifying and mitigating potential risks before they can be exploited.

Developers are encouraged to follow best practices such as scrutinizing third-party dependencies and maintaining a Software Bill of Materials (SBOM) to understand the full composition of their software. By doing so, they can trace vulnerabilities to their source, ensuring that each component is secure. Additionally, the importance of not exposing sensitive information such as API keys or passwords within the codebase cannot be overstated, as this has been the root cause of many high-profile cybersecurity incidents. These measures, combined with continuous education through regular security training, cultivate a proactive security culture and keep the development team ahead of emerging threats.
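One common way to keep secrets out of the codebase is to read them from the environment at runtime. The sketch below assumes a variable named EXAMPLE_API_KEY, which is a placeholder chosen for this example, not a real service's setting.

```python
import os


def load_api_key(name: str = "EXAMPLE_API_KEY") -> str:
    """Read a secret from the environment instead of hard-coding it."""
    value = os.environ.get(name)
    if not value:
        # Fail loudly so a missing secret is caught at startup, not mid-request.
        raise RuntimeError(f"Environment variable {name} is not set")
    return value
```

The secret then lives in the deployment environment or a secrets manager, and the repository contains only the name of the variable.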

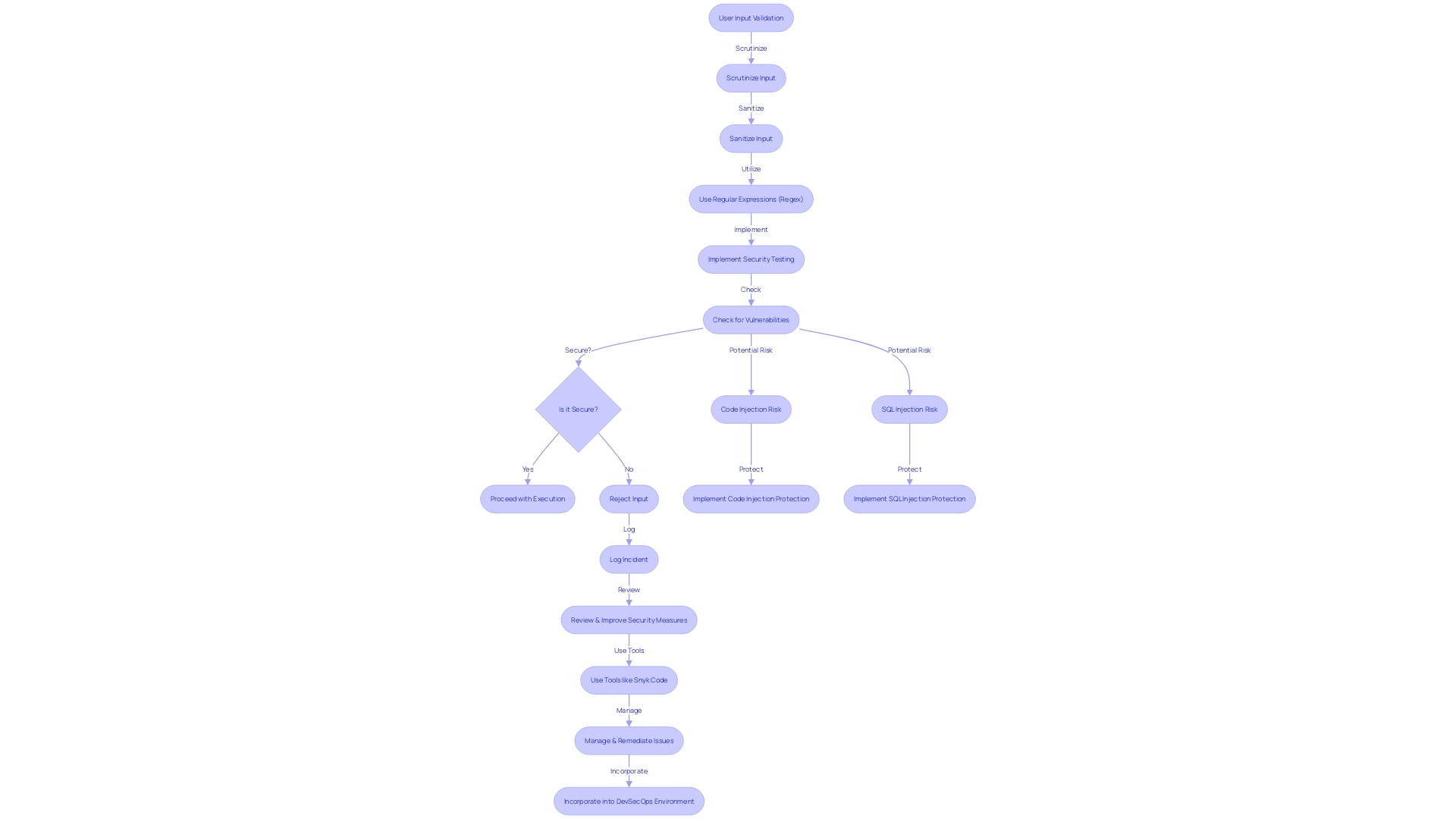

Input Validation and Sanitization

Validating user input is a cornerstone of robust program execution and security. By scrutinizing and sanitizing input, developers can thwart issues such as code injection, a prevalent threat where attackers exploit user-controlled inputs to execute malicious code. Consider a scenario where an application passes user input into a database query without any checks, allowing an attacker to submit a string like "' OR '1'='1'; DROP TABLE users;", which could lead to devastating outcomes. It's crucial to understand these vulnerabilities and to use techniques such as regular expressions (regex) to enforce the expected input format.
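A minimal sketch of allow-list validation with a regular expression. The rule used here (3 to 20 letters, digits, or underscores) is an assumed policy for a hypothetical username field; real applications should define the pattern from their own requirements.

```python
import re

# Assumed policy: usernames are 3-20 characters of letters, digits, or underscore.
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,20}")


def is_valid_username(value: str) -> bool:
    """Accept the value only if the whole string matches the allowed pattern."""
    # fullmatch rejects input that merely contains a valid substring.
    return USERNAME_PATTERN.fullmatch(value) is not None
```

Describing what is allowed, rather than listing what is forbidden, means unexpected characters such as quotes and semicolons are rejected by default. Validation of this kind complements, but does not replace, safe query construction.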

SQL injection (SQLi) is a common form of this vulnerability. It arises when a field in a SQL query that is expected to contain a specific type of value is instead supplied with an unexpected command. This can lead to unauthorized command execution, much as if someone named 'Bob' could manipulate a court document to erroneously state 'Bob is free to go,' leading to an unintended release. Vigilance in web application security and regular security testing are essential to protect against such vulnerabilities.
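A widely used defense against SQL injection is the parameterized query, in which user input is bound as data and never spliced into the SQL text. The sketch below uses Python's built-in sqlite3 module with an in-memory database; the users table and both helper functions are made up for this example.

```python
import sqlite3


def make_demo_db():
    """Create an in-memory database with a small users table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])
    conn.commit()
    return conn


def find_user(conn, username):
    """Look up a user by name; the ? placeholder binds the input as a value."""
    cursor = conn.execute("SELECT id, name FROM users WHERE name = ?", (username,))
    return cursor.fetchall()
```

Passing an injection string to find_user simply searches for a user with that literal name, so the query finds nothing and the table is left intact.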

Moreover, considering the intricate nature of data validation especially in full-stack applications fetching data from cloud APIs or user inputs, it's essential to validate external data sources rigorously. Tools like Snyk Code can assist in managing and remediating programming issues with features like issue filtering and priority scoring, which are critical in a DevSecOps environment where consolidating and correlating results from various tools can be challenging. The survey results on the use of AI completion tools indicate a broad interest across various sectors, reflecting the importance of quality and security in today's technology roles.

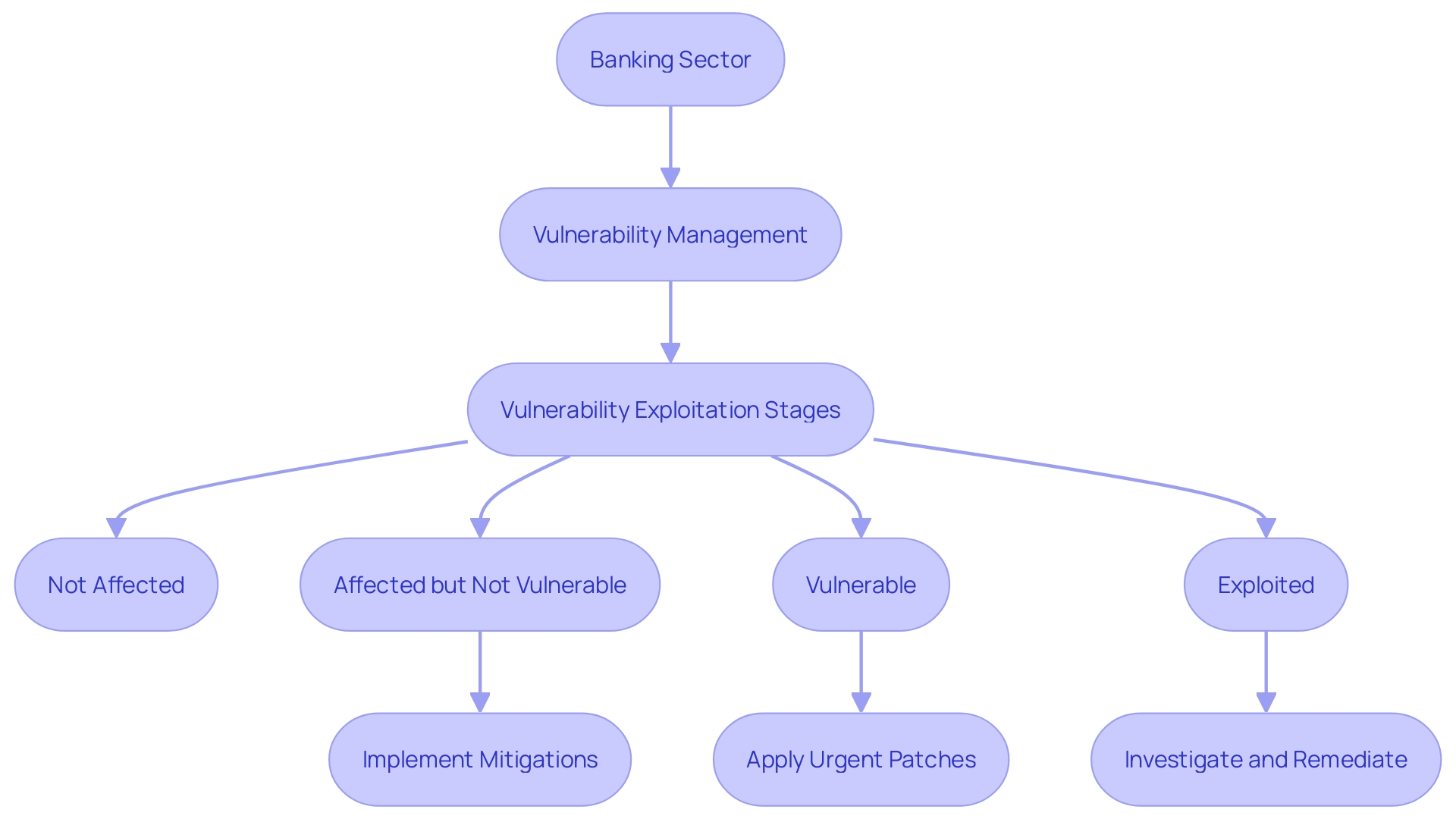

Regular Vulnerability Scanning and Patching

In the domain of program development, particularly in crucial sectors like banking, the security of code is of utmost importance. M&T Bank, with its rich legacy and dedication to innovation, recognized the significance of establishing Clean Code standards to strengthen their application against vulnerabilities. As the financial sector evolves with digital transformations, it is critical to adopt a proactive approach to vulnerability management. Regular vulnerability scanning is not merely a compliance requirement; it is a necessity to protect sensitive data from the ever-present threat of cyber-attacks. The proactive identification and patching of security flaws are crucial steps in maintaining the integrity of a codebase.

Vulnerability exploitation can be categorized into four stages: not affected, affected but not vulnerable, vulnerable, and exploited. It is crucial to comprehend that a flaw in a program may not necessarily make it susceptible if not utilized in a way that exposes it to risk. However, if the flaw is actively exploited, immediate action is required to mitigate potential damage. The banking industry's digital transformation requires strict standards, as the introduction of programs with vulnerabilities incurs not only financial loss but also reputational damage.

Real-time vulnerability scanning moves beyond the outdated practice of periodic checks. With the constant emergence of new threats, continuous monitoring has become the gold standard. This approach aligns with the latest update to the Payment Card Industry Data Security Standard (PCI DSS), which reflects current technological and threat landscape changes. Ultimately, adopting continuous vulnerability scanning ensures that digital assets are adequately safeguarded against the dynamic nature of cyber threats.

Network Monitoring and Intrusion Detection

Network monitoring and intrusion detection systems are essential for maintaining the integrity of program execution in the presence of external threats. To highlight the importance of these systems, we can look at the validation process in software like Moodle, used by institutions like RWTH Aachen University. In Moodle's code, for instance, validation checks guarantee that PHP comments do not infiltrate answer formulas, and variables are sanitized to prevent the insertion of harmful elements. This kind of meticulous validation is a first line of defense against code execution issues stemming from external threats.

Moreover, recent developments in cyber threat tactics, such as exploiting network latency to infer user activities with high accuracy, underscore the need for robust network monitoring. Attackers can now use latency measurements to detect what videos or websites a victim might be watching or visiting, making it crucial for intrusion detection systems to evolve and adapt to these sophisticated techniques.

As the Microsoft Digital Defense Report indicates, the defense against cyber threats is an ever-evolving challenge that requires constant innovation and collaboration. Leveraging large datasets has become essential to stay ahead of threats. This transformation is evident in the way observability is now seen within systems. Observability, a concept introduced by Rudolf E. Kálmán in 1960 and originally applied to control systems, is now crucial in understanding and interacting with virtual systems, allowing for the detection of issues that other methods may miss.

In the development lifecycle, security and privacy requirements are foundational. These requirements evolve, informed by known security threats, best practices, regulatory standards, and insights from past incidents. Continuous training in cybersecurity basics and secure development practices, including AI application in software testing, is essential for all involved in the creation of software.

Overall, network monitoring and intrusion detection are not just about safeguarding against current threats but also about preparing for emerging vulnerabilities. The landscape of cyber threats is constantly evolving, and our defense mechanisms must be equally dynamic and forward-thinking to protect code execution from malicious activities.

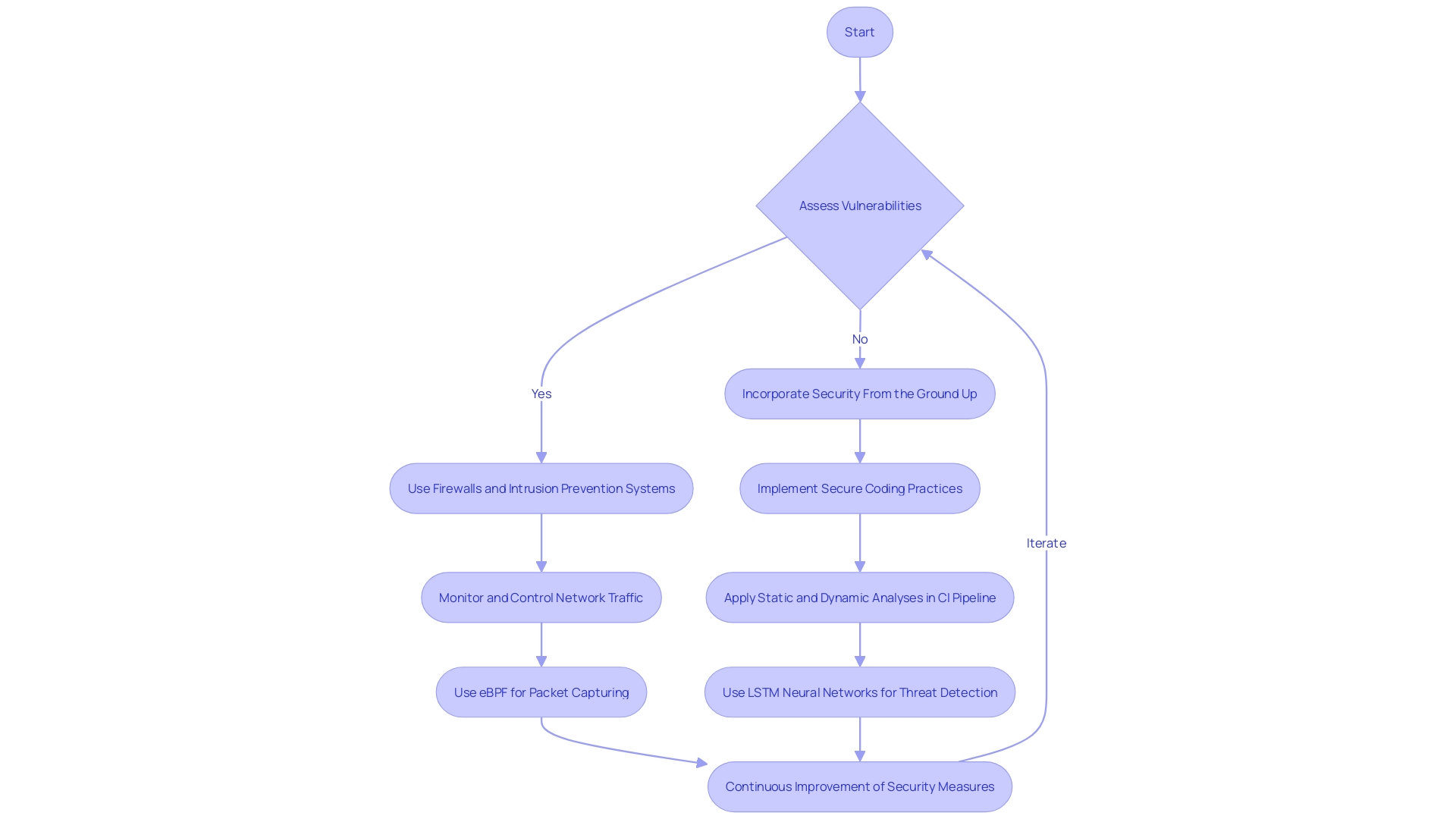

Implementing Firewalls and Intrusion Prevention Systems

Protecting the integrity of code execution is pivotal, not just to thwart unauthorized access, but also to shield against sophisticated cyber threats that can compromise enterprise operations. Firewalls and intrusion prevention systems (IPS) are the bedrock of a robust security posture. These tools serve as the first line of defense, monitoring and controlling incoming and outgoing network traffic based on an organization's security policies.
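
To make policy-based traffic control concrete, here is a minimal sketch of first-match rule evaluation with a default-deny fallback. The `Rule` type, the `POLICY` entries, and the `decide` function are hypothetical, and the addresses come from private and documentation ranges; production firewalls evaluate far richer criteria.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    action: str   # "allow" or "deny"
    source: str   # CIDR block the packet's source must fall in
    port: int     # destination port; 0 matches any port


# Hypothetical policy, evaluated top to bottom.
POLICY = [
    Rule("deny", "203.0.113.0/24", 0),   # blocked range, all ports
    Rule("allow", "10.0.0.0/8", 22),     # SSH from the internal network
    Rule("allow", "0.0.0.0/0", 443),     # HTTPS from anywhere
]


def decide(src: str, port: int, policy=POLICY) -> str:
    """Return the action of the first matching rule, else deny."""
    addr = ip_address(src)
    for rule in policy:
        if addr in ip_network(rule.source) and rule.port in (0, port):
            return rule.action
    return "deny"  # default-deny when nothing matches


print(decide("10.1.2.3", 22))      # internal SSH
print(decide("198.51.100.7", 22))  # external SSH, no matching rule
```

Rule order matters in a first-match design: the deny entry sits at the top so that the broad HTTPS rule cannot override it.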

For instance, consider the narrative of DUCKS4EVER, a company that expanded rapidly due to their successful hosting services. As their client, Ducklings2Meet, experienced exponential growth, the necessity for impeccable security measures became paramount. Their story underscores the significance of scalable and advanced security solutions that grow with the company.

In the realm of intrusion detection, innovative technologies like eBPF for packet capturing and LSTM neural networks are revolutionizing how threats are identified and classified. This method allows for the detection of threats in real-time with minimal system overhead, ensuring that security measures do not hinder network operation.

Organizations are increasingly aware that network security is not a trade-off with performance. In fact, effective security is integral to maintaining high-performance networks. This balance is crucial as enterprises continue to develop enterprise apps that drive business efficiency but also present new security challenges.

With the average cost of a data breach in the USA hitting $9.48 million in 2023, the stakes are high. Security by design is not just a concept but a critical practice that involves a multi-stage process, from assessing vulnerabilities to continuous improvement of security measures. These practices are crucial in protecting sensitive information and financial records and in ensuring privacy.

Leading voices in cybersecurity emphasize the importance of incorporating security from the ground up. As technology evolves, so do the strategies employed by threat actors, making it essential for software and infrastructure to be secure by design. This proactive stance on security is echoed by agencies like CISA, which advocates for technology providers to build products that are inherently secure.

In conclusion, the convergence of cutting-edge technologies, strategic security practices, and a collaborative approach between government and industry is shaping the future of digital defense. This is a multi-faceted effort that requires ongoing vigilance and innovation to keep pace with the dynamic landscape of cyber threats.

Backup and Disaster Recovery Strategies

Creating backups and preparing for disaster recovery are not just safety measures; they are critical components of maintaining continuous code execution and minimizing detrimental downtime. In the event of information loss, the consequences can be severe. Dependable backups act as a safety net, allowing the restoration of information and systems after a loss event, thereby providing a buffer against information corruption and unforeseen disasters.

Consider the mundane causes of downtime that are often overlooked: hardware failures, severe weather, human errors, and power outages. These high-probability events can be as disruptive as the more dramatic disasters. Adding to the complexity, organizations face increasing threats from malicious attacks, both internal and external, that can compromise IT environments. It's not just the loss of information that's at stake; financial losses and the erosion of customer trust can have long-lasting effects on an organization's viability and reputation.

For instance, Gartner predicts that by 2025, 30% of organizations will experience an incident of information loss due to careless employees. The responsibility of guardians, like Exchange Server administrators, has become more challenging in the face of such threats. They must navigate a complex environment, balancing the need for tight security measures against sophisticated cybercriminals and evolving threats.

It's essential to recognize that backups and disaster recovery, while related, entail distinct strategies and concepts. Backups are about preserving information, while disaster recovery encompasses a broader scope, preparing for the continuity of operations under adverse conditions. Implementing a robust backup and disaster recovery strategy embodies the adage 'Hope for the best, prepare for the worst' in the context of data management and protection.
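
The distinction can be sketched in a few lines: a backup step that preserves and verifies a copy, and a separate recovery step that puts it back. This is a minimal single-file illustration under assumed names (`backup`, `restore`, `demo`), not a complete strategy; real plans also cover off-site copies, retention, and regular restore drills.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256(path: Path) -> str:
    """Hash a file's contents so copies can be compared."""
    return hashlib.sha256(path.read_bytes()).hexdigest()


def backup(source: Path, backup_dir: Path) -> Path:
    """Copy source into backup_dir and verify the copy by checksum."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    target = backup_dir / source.name
    shutil.copy2(source, target)
    if sha256(source) != sha256(target):
        raise OSError(f"backup of {source} failed verification")
    return target


def restore(backup_file: Path, destination: Path) -> None:
    """Recovery step: put the backed-up copy back in place."""
    shutil.copy2(backup_file, destination)


def demo() -> str:
    """Back up a file, simulate corruption, restore, return the content."""
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "data.txt"
        source.write_text("v1")
        copy = backup(source, Path(tmp) / "backups")
        source.write_text("corrupted")  # simulate a loss event
        restore(copy, source)
        return source.read_text()


print(demo())
```

Verifying the checksum at backup time matters because an unreadable or truncated copy is usually discovered only when a restore is attempted.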

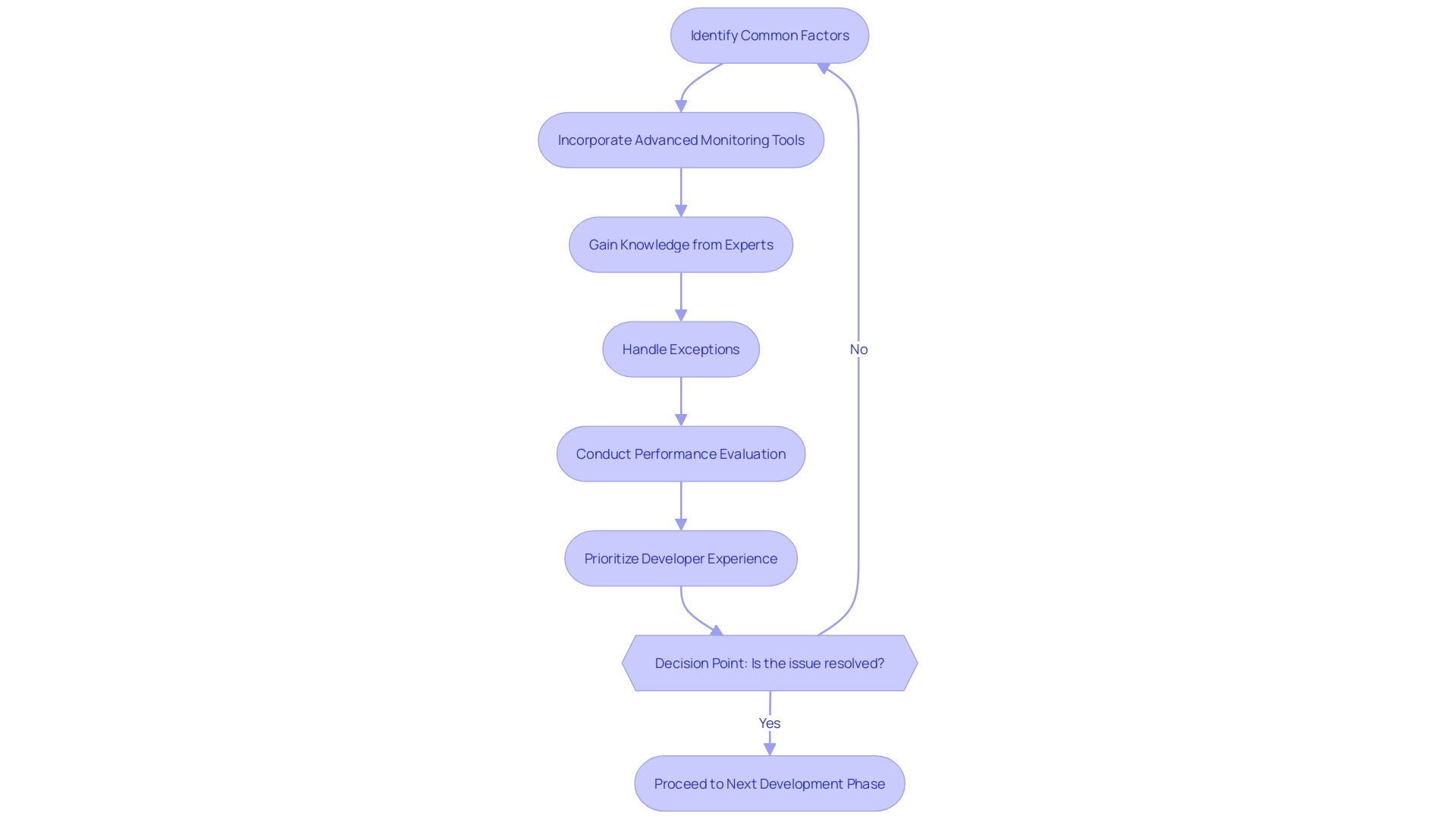

Detecting and Responding to Code Execution Issues

To address execution issues effectively, it's crucial to have a structured approach. Start by identifying common factors among affected users to pinpoint the source of the problem. For instance, internal investigations at Gusto revealed that not all employee users were impacted and customer-facing software remained unaffected, guiding the team towards the issue's root cause.
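
One simple way to look for common factors is to intersect the attributes of affected users and then discard anything that unaffected users share. The sketch below assumes each user is a flat dictionary of attributes; the records and attribute names are made up, and this is not a description of how Gusto ran its investigation.

```python
def common_factors(affected, unaffected):
    """Return attribute/value pairs shared by every affected user
    and by no unaffected user."""
    if not affected:
        return set()
    shared = set(affected[0].items())
    for user in affected[1:]:
        shared &= set(user.items())
    for user in unaffected:
        shared -= set(user.items())
    return shared


# Hypothetical user records; the attribute names are invented.
affected = [
    {"browser": "firefox", "region": "eu", "plan": "pro"},
    {"browser": "firefox", "region": "us", "plan": "pro"},
]
unaffected = [
    {"browser": "chrome", "region": "eu", "plan": "pro"},
]

print(common_factors(affected, unaffected))  # {('browser', 'firefox')}
```

Here `plan` is shared by all affected users but also by an unaffected one, so it is ruled out and only the browser remains as a lead.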

Incorporate advanced monitoring tools that offer real-time insights, as underscored by the latest security insights from Google. With these tools, anomalies can be detected swiftly, minimizing potential disruptions. In addition, learning from experts in the field, such as the account of TurboFan's complex mechanisms by Jeremy Fetiveau and Samuel Groß, can offer a deeper understanding of how to enhance program execution and resolve issues efficiently.

Exception handling plays a pivotal role in maintaining robust software. It's not just about catching errors but understanding their nature, as highlighted by the insights into C# exception handling. By filtering and prioritizing issues using tools like Snyk Code, developers can address the most critical problems first, enhancing the code's reliability and security.
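
The idea of understanding an error's nature, not just catching it, can be illustrated with a short sketch. It uses Python rather than C#, and `ConfigError` and `load_port` are hypothetical names: each failure mode is caught separately and re-raised with a message that says what went wrong, while the original exception stays attached as the cause.

```python
import json


class ConfigError(Exception):
    """Raised when configuration text cannot be used."""


def load_port(raw: str) -> int:
    """Parse a JSON config string and return its 'port' value."""
    try:
        config = json.loads(raw)
        if not isinstance(config, dict):
            raise ConfigError("config must be a JSON object")
        return int(config["port"])
    except json.JSONDecodeError as exc:
        # Caught before ValueError, which is its parent class.
        raise ConfigError("config is not valid JSON") from exc
    except KeyError as exc:
        raise ConfigError("config has no 'port' entry") from exc
    except (TypeError, ValueError) as exc:
        raise ConfigError("'port' is not an integer") from exc


print(load_port('{"port": 8080}'))  # 8080
```

A bare `except Exception` would hide the difference between malformed input and a missing key, which is exactly the information needed to prioritize a fix.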

Performance evaluation is another cornerstone. It's not just about evaluating the overall functioning but exploring the system's behavior and its internal mechanisms. A comprehensive performance evaluation, as noted in academic and industry research, reveals insights that drive future developments and system improvements.
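
A small measurement harness shows what looking at behavior, not only at results, can mean in practice. The sketch below uses Python's standard `timeit` module to compare two implementations that produce the same output; the functions and the `measure` helper are illustrative assumptions, and absolute timings will vary by machine.

```python
import timeit


def join_with_concat(n: int) -> str:
    """Build a string by repeated concatenation."""
    out = ""
    for i in range(n):
        out += str(i)
    return out


def join_with_join(n: int) -> str:
    """Build the same string with str.join."""
    return "".join(str(i) for i in range(n))


def measure(func, n: int = 10_000, repeat: int = 5) -> float:
    """Return the best wall-clock time in seconds over several runs."""
    return min(timeit.repeat(lambda: func(n), number=10, repeat=repeat))


if __name__ == "__main__":
    for func in (join_with_concat, join_with_join):
        print(f"{func.__name__}: {measure(func):.4f}s")
```

Taking the minimum over several repeats reduces noise from other processes, which makes comparisons between runs more trustworthy.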

Finally, the Developer Experience Lab's research emphasizes the importance of a positive developer experience (DevEx) over mere productivity. By creating an environment that fosters efficient coding practices, developers can produce high-quality work without the side effects of burnout or decreased retention. This holistic approach to tackling code execution issues ensures not just immediate fixes but also long-term sustainability and efficiency.

Conclusion

In conclusion, achieving code efficiency and maximizing productivity requires a strategic approach that encompasses various strategies and best practices. Breaking down code into smaller functions or modules simplifies development, enhances understandability, and facilitates maintenance. Optimizing code for performance involves streamlining data handling, refining algorithms, and utilizing profiling tools.

Selecting appropriate data structures enhances the efficiency of operations and reduces time complexity. Avoiding unnecessary computations improves code performance and reduces execution time. Considering algorithmic complexities sharpens a developer's ability to choose the right tools for the job.

Adhering to best practices in code design and development prioritizes code readability and maintainability. Maximizing code performance requires understanding compute resources and tailoring optimization strategies to the application's demands. Identifying and eliminating bottlenecks through performance evaluation and optimization techniques is crucial.

Benchmarking and performance testing quantify code performance and guide optimization efforts. Implementing secure coding practices and regular vulnerability scanning helps prevent remote code execution vulnerabilities. Network monitoring and intrusion detection systems safeguard code execution from external threats.

Firewalls and intrusion prevention systems serve as the first line of defense against unauthorized access and sophisticated cyber threats. Backup and disaster recovery strategies minimize downtime and ensure continuous code execution. Detecting and responding to code execution issues requires a structured approach, incorporating advanced monitoring tools, exception handling, and comprehensive performance evaluation.

By following these techniques and best practices, developers can ensure that their code performs optimally, leading to efficient and results-driven software development.

Frequently Asked Questions

What is code efficiency?

Code efficiency refers to the ability of software to function seamlessly, utilize minimal resources, and deliver an optimal user experience. It encompasses the entire software development lifecycle, including writing maintainable code and optimizing existing processes.

What is 'humane programming'?

'Humane programming,' introduced by Mark Seemann, emphasizes clear communication among developers. It focuses on writing readable and maintainable code, ensuring that future developers can easily understand and modify it.

Why is breaking down code into smaller functions or modules beneficial?

Modular programming simplifies development, enhances code clarity, and allows for independent testing of functions. This approach improves maintainability and reduces the likelihood of system failures.

How does refactoring contribute to code efficiency?

Refactoring involves restructuring existing code to improve understandability and performance without changing its external behavior. It helps maintain flexibility and reduces tightly coupled code, which can hinder future updates.

What role do data structures play in code efficiency?

Choosing the right data structure is crucial for organizing and accessing information efficiently. Optimal structures can significantly decrease the time complexity of operations, improving overall program performance.

How can developers avoid unnecessary computations?

Developers should analyze their code to eliminate redundant calculations and optimize algorithms. Understanding character encoding and leveraging efficient coding practices can lead to significant performance improvements.

What are algorithmic complexities, and why do they matter?

Algorithmic complexities refer to the time and space requirements of algorithms. Understanding these complexities helps developers choose the best algorithms for their specific use cases, especially when scaling up to larger datasets.

What are the best practices for code design and development?

Best practices include prioritizing readability, maintaining clean code, utilizing meaningful names, and following design patterns. Regular refactoring and using AI-powered tools can also enhance productivity and code quality.

Why is performance benchmarking important?

Performance benchmarking allows developers to measure their application's efficiency against predefined standards. It guides optimization efforts and ensures that software meets industry performance expectations.

How can vulnerability scanning and secure coding practices enhance code efficiency?

Secure coding practices, such as input validation and regular vulnerability scanning, help prevent security breaches that can disrupt software performance. A proactive approach to security fosters a more stable and efficient codebase.

What strategies exist for backup and disaster recovery?

Creating reliable backups and disaster recovery plans is essential for minimizing downtime and loss of data. This includes regular backups and preparing for various scenarios that could affect operational continuity.

How can developers address code execution issues effectively?

Developers should utilize monitoring tools to identify and analyze issues, prioritize exception handling, and perform comprehensive performance evaluations to enhance code reliability and security.