Overview

AI performance profiling measures how AI systems use resources and pinpoints where they waste them, giving developers the evidence they need to optimize code efficiently.

How can you ensure your AI models perform at their best? Utilizing methodologies and tools such as TensorFlow Profiler, along with continuous monitoring strategies, allows for enhanced application responsiveness and reduced operational costs. This not only improves productivity but also ensures that AI models maintain their effectiveness over time.

Explore the tools available on platforms like Kodezi to elevate your coding practices and achieve optimal performance.

Introduction

In the realm of software development, coding challenges are ever-present and can significantly impact efficiency. AI systems are revolutionizing industries, yet their true potential can only be unlocked through meticulous performance profiling. This critical practice not only measures resource utilization but also identifies inefficiencies that can hinder application responsiveness and inflate operational costs.

As organizations strive for optimization in an increasingly competitive landscape, how can developers effectively harness AI performance profiling techniques to enhance code efficiency and ensure their solutions remain robust and adaptable? Exploring the methodologies, tools, and best practices of AI performance profiling reveals a pathway to achieving superior system performance and sustained competitive advantage.

Kodezi offers features designed to tackle these challenges head-on. Its tools streamline the coding process, allowing for quicker iterations and more efficient debugging, which helps developers improve both productivity and code quality.

The benefits extend beyond efficiency gains to the overall development experience. As you consider your coding practices, reflect on how Kodezi could change your approach to the complexities of modern coding challenges.

Understand AI Performance Profiling

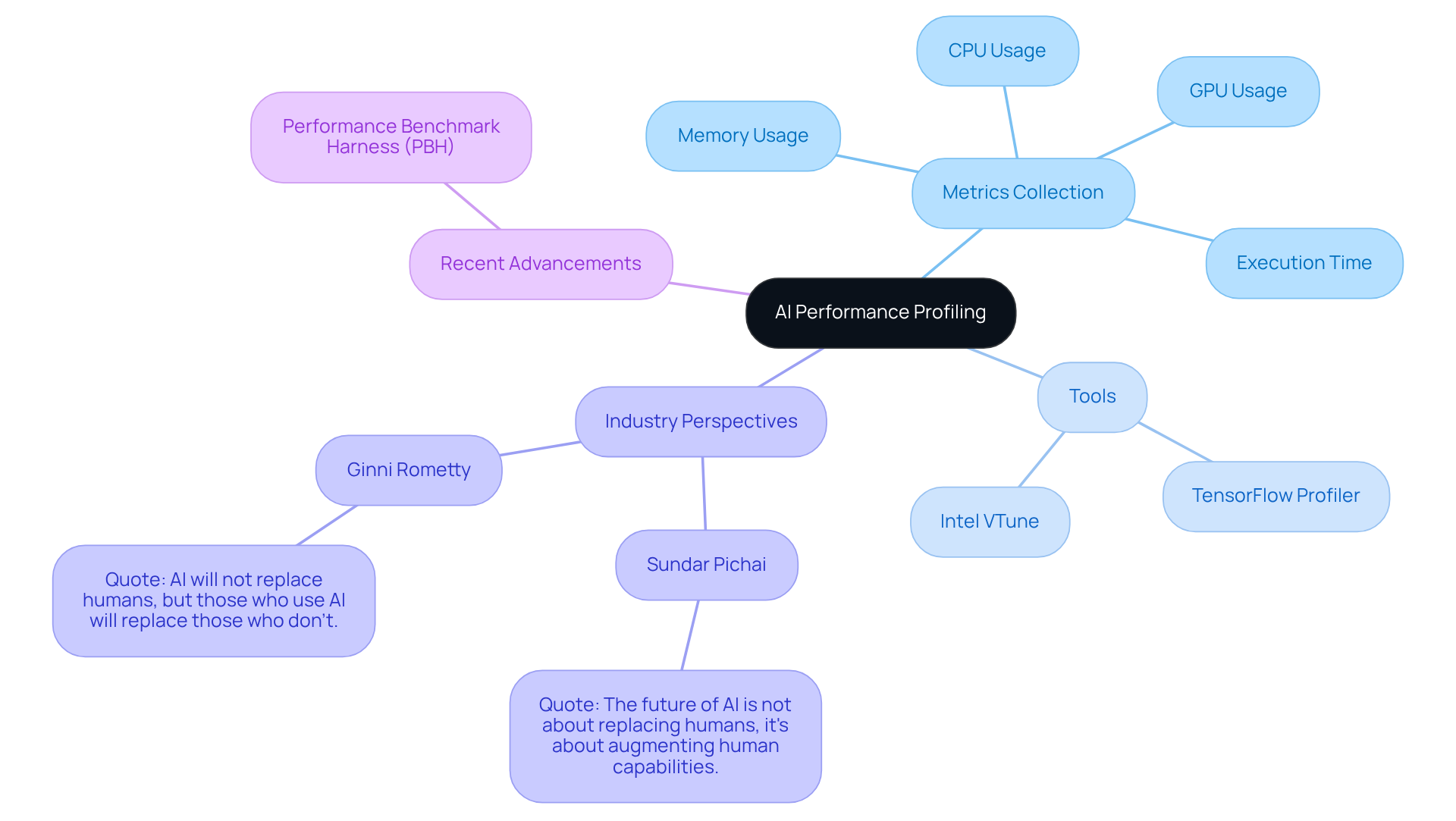

AI performance profiling measures and analyzes how AI systems use resources during execution. By collecting metrics on CPU, GPU, and memory usage, along with execution time across various tasks, developers gain insight into how those systems behave under different conditions. That insight exposes inefficiencies such as slow functions and memory leaks, which in turn lets teams improve their code, make applications more responsive, and reduce operational costs. Tools such as TensorFlow Profiler and Intel VTune are frequently used to provide detailed views of system efficiency and resource utilization.
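As a rough illustration of collecting two of these metrics, the following sketch times a single call and records its peak Python memory using only the standard library. The helper `profile_call` and the workload `toy_inference` are hypothetical names invented for this example, and `tracemalloc` only sees allocations made through Python's allocator, so GPU memory and most native-library buffers would need a framework-specific profiler instead.

```python
import time
import tracemalloc


def profile_call(func, *args, **kwargs):
    """Run func once and report wall-clock time and peak Python memory."""
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = func(*args, **kwargs)
    finally:
        elapsed = time.perf_counter() - start
        _, peak_bytes = tracemalloc.get_traced_memory()
        tracemalloc.stop()
    return result, {"seconds": elapsed, "peak_bytes": peak_bytes}


def toy_inference(n):
    # Hypothetical stand-in for a model's forward pass.
    weights = [i * 0.5 for i in range(n)]
    return sum(w * w for w in weights)


if __name__ == "__main__":
    output, stats = profile_call(toy_inference, 100_000)
    print(f"result={output:.1f} time={stats['seconds']:.4f}s "
          f"peak={stats['peak_bytes'] / 1024:.0f} KiB")
```

Running the same wrapper over several input sizes gives a first picture of how time and memory scale before reaching for a full profiler.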

Furthermore, recent advances in measuring AI systems have led to standardized benchmarking tests, which are essential for ensuring that research findings are adaptable across different platforms. The Performance Benchmark Harness (PBH) exemplifies a systematic approach to tracking efficiency, facilitating consistent comparisons of system capabilities across various frameworks and architectures. Standardized metrics are vital for optimizing models under diverse constraints and for deploying AI solutions effectively.

In addition, industry leaders underscore the significance of AI performance profiling in the development of AI. For instance, Sundar Pichai, CEO of Google, emphasizes that AI's transformative potential is fundamentally tied to its ability to enhance human capabilities, achievable only through efficient evaluation and optimization. Similarly, Ginni Rometty, former CEO of IBM, asserts that organizations that responsibly leverage AI will gain a competitive edge, highlighting the necessity for robust assessment frameworks.

As we approach 2025, the latest trends in AI performance profiling resources spotlight the importance of standardized metrics that facilitate consistent comparisons across AI systems. Real-world examples, such as the development of the PBH, illustrate how organized tracking methods can lead to significant improvements in AI model efficiency and reliability. By adopting these methodologies, teams can ensure their AI implementations are not only effective but also aligned with the evolving demands of the industry.

Explore Effective Methodologies and Tools

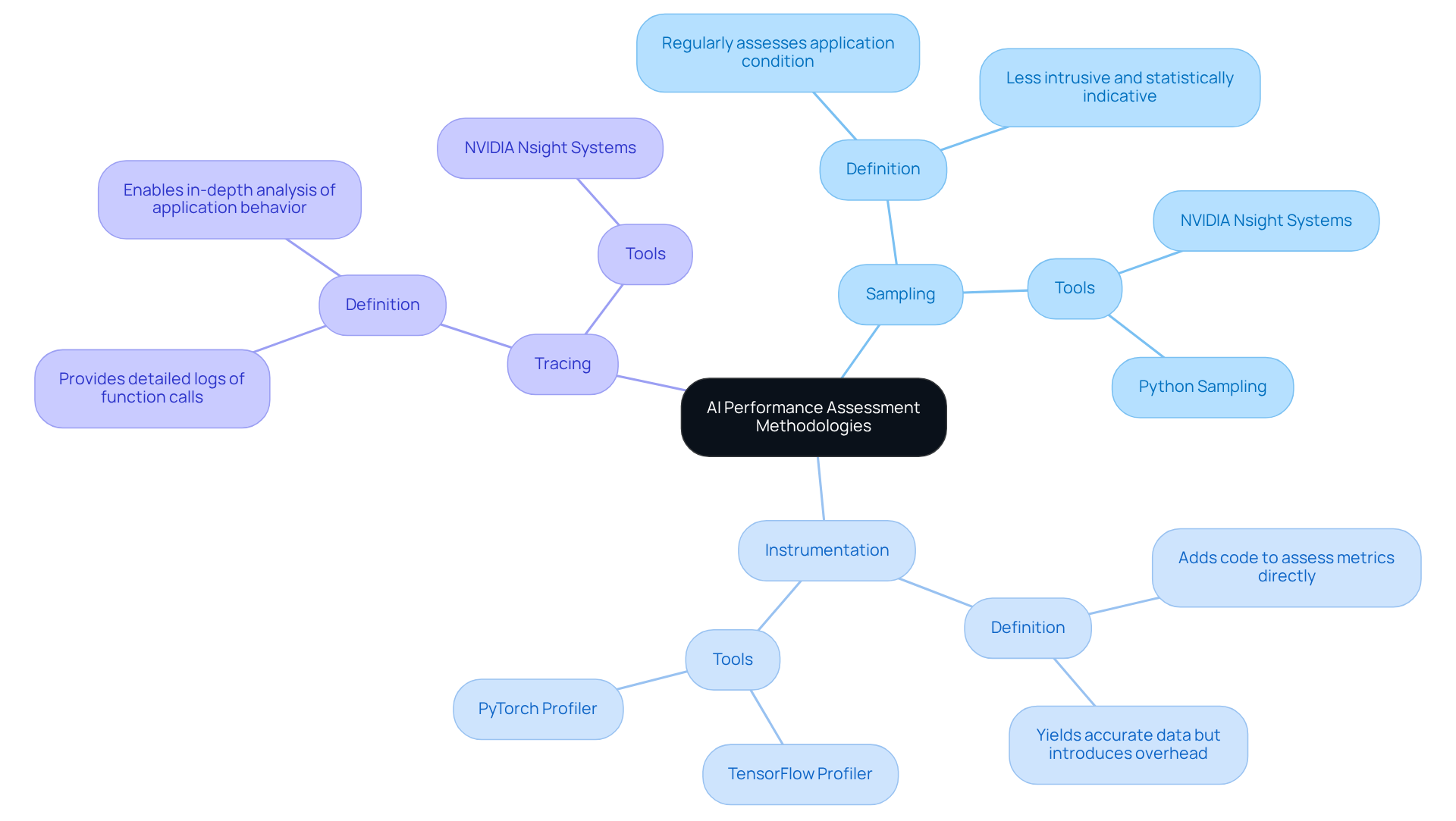

Assessing how an AI system performs presents several challenges, and choosing the right methodologies and tools can streamline the process considerably. The key methodologies are sampling, instrumentation, and tracing, each serving a distinct purpose in evaluating application performance.

Sampling periodically inspects the application's state to collect usage data, which makes it less intrusive and keeps the measurements statistically representative of production behavior. For example, did you know that the maximum supported sampling frequency for Nsight Systems is 200,000 Hz? This capability allows for high-resolution data collection, providing valuable insights.
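To make the idea of sampling concrete, here is a minimal sketch of a sampling profiler written with the Python standard library. It is an illustration only, not how Nsight Systems or any other tool is implemented: the class `SamplingProfiler` and the workload `busy_work` are hypothetical, and `sys._current_frames()` is a CPython-specific function. A background thread wakes at a fixed interval and notes which function the profiled thread is currently running.

```python
import collections
import sys
import threading
import time


class SamplingProfiler:
    """Periodically record which function a target thread is executing."""

    def __init__(self, interval=0.001):
        self.interval = interval
        self.counts = collections.Counter()
        self._stop = threading.Event()
        # Profile the thread that creates the profiler.
        self._target_id = threading.get_ident()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        while not self._stop.is_set():
            frame = sys._current_frames().get(self._target_id)
            if frame is not None:
                self.counts[frame.f_code.co_name] += 1
            time.sleep(self.interval)

    def __enter__(self):
        self._thread.start()
        return self

    def __exit__(self, *exc):
        self._stop.set()
        self._thread.join()
        return False


def busy_work(duration):
    # Hypothetical CPU-bound workload standing in for model code.
    deadline = time.perf_counter() + duration
    total = 0
    while time.perf_counter() < deadline:
        total += 1
    return total


if __name__ == "__main__":
    with SamplingProfiler(interval=0.001) as prof:
        busy_work(0.2)
    for name, hits in prof.counts.most_common(3):
        print(name, hits)
```

The share of samples attributed to a function approximates the share of time spent in it, which is why a higher sampling frequency yields finer resolution at the cost of more overhead.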

Instrumentation, on the other hand, adds measurement code to the application to record metrics directly. While this yields accurate data, it may introduce overhead. Tracing, meanwhile, produces a detailed log of function calls and execution paths, enabling in-depth analysis of application behavior. How might these methods impact your coding practices?
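Instrumentation can be sketched in a few lines with a decorator that wraps each function of interest. The names `instrumented`, `TIMINGS`, `preprocess`, and `predict` are assumptions made up for this example rather than part of any profiling library. Note that the wrapper itself costs time on every call, which is the overhead mentioned above.

```python
import functools
import time
from collections import defaultdict

# Accumulated call counts and total seconds per instrumented function.
TIMINGS = defaultdict(lambda: {"calls": 0, "seconds": 0.0})


def instrumented(func):
    """Wrap func so every call records its duration in TIMINGS."""
    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            return func(*args, **kwargs)
        finally:
            entry = TIMINGS[func.__name__]
            entry["calls"] += 1
            entry["seconds"] += time.perf_counter() - start
    return wrapper


@instrumented
def preprocess(batch):
    # Hypothetical preprocessing step: scale pixel values to [0, 1].
    return [x / 255.0 for x in batch]


@instrumented
def predict(features):
    # Hypothetical model call: here just the mean of the features.
    return sum(features) / len(features)


if __name__ == "__main__":
    for _ in range(3):
        predict(preprocess([0, 128, 255]))
    for name, entry in TIMINGS.items():
        print(f"{name}: {entry['calls']} calls, {entry['seconds']:.6f}s total")
```

For a full trace of every call rather than hand-picked functions, Python's built-in `cProfile` module is usually a better starting point than manual decorators.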

Well-known resources for AI performance analysis include:

- TensorFlow Profiler

- PyTorch Profiler

- NVIDIA Nsight Systems

Each tool presents unique characteristics that can enhance your workflow. For instance, TensorFlow Profiler excels in monitoring TensorFlow operations, while PyTorch Profiler provides insights tailored to PyTorch applications. Similarly, NVIDIA Nsight Systems stands out with its extensive performance analysis features across multiple platforms, including support for GPU metrics and network communication assessment.

Choosing the right tool depends on the specific needs of your project and programming environment. Developers must weigh the benefits of sampling versus instrumentation, considering factors like overhead, accuracy, and the level of detail required for effective optimization. Be cautious of common pitfalls: instrumentation overhead can inflate the measured time of small, frequently called functions, while sampling at too low a frequency can miss short-lived calls altogether, and either effect can lead to inaccurate conclusions. By understanding these methodologies and tools, along with their limitations, teams can improve their approach to AI performance profiling, ultimately resulting in more efficient and maintainable code.

Implement Continuous Monitoring and Optimization Strategies

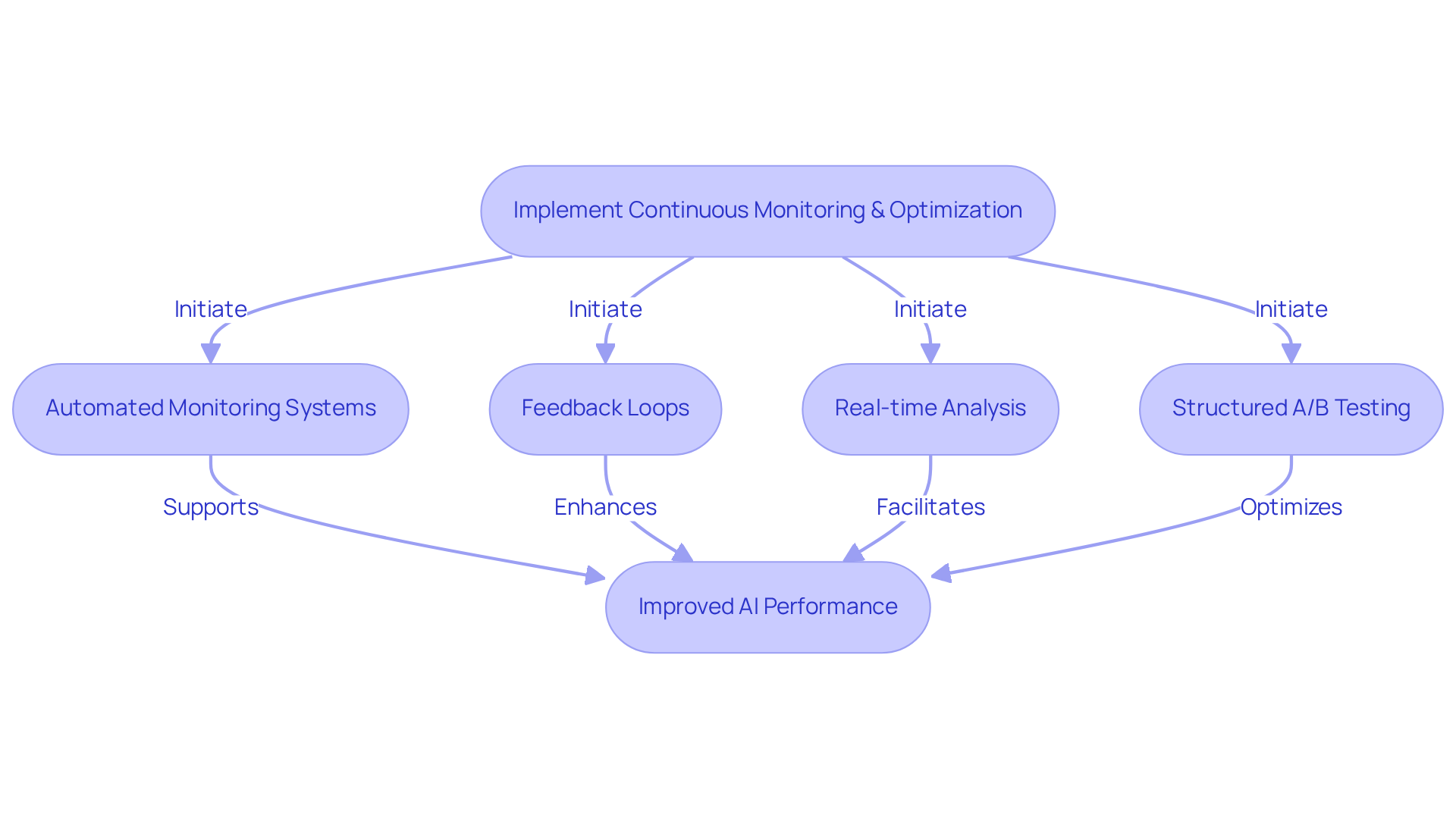

Continuous monitoring and optimization keep AI models performing efficiently over time. This involves establishing automated monitoring systems that track key performance indicators (KPIs) such as accuracy, latency, and resource utilization. Tools like Prometheus and Grafana can be combined to collect and visualize metric data in real time. Furthermore, UptimeRobot monitors essential endpoints like APIs and prediction services at designated intervals, offering additional confidence in system reliability.
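As a small sketch of what tracking one such metric might look like in application code, the class below keeps a rolling window of request latencies and reports when the 95th percentile exceeds a budget. `LatencyMonitor`, its parameters, and the 200 ms budget are all assumptions chosen for illustration. In a real deployment the values would more likely be exported to a metrics system such as Prometheus than checked in-process.

```python
from collections import deque


class LatencyMonitor:
    """Keep the most recent latencies and flag p95 budget violations."""

    def __init__(self, window=100, p95_budget_ms=200.0):
        # deque with maxlen silently drops the oldest sample when full.
        self.samples = deque(maxlen=window)
        self.p95_budget_ms = p95_budget_ms

    def record(self, latency_ms):
        self.samples.append(latency_ms)

    def p95(self):
        if not self.samples:
            return None
        ordered = sorted(self.samples)
        # Nearest-rank percentile, using integer math for ceil(0.95 * n).
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def breached(self):
        value = self.p95()
        return value is not None and value > self.p95_budget_ms


if __name__ == "__main__":
    monitor = LatencyMonitor(window=50, p95_budget_ms=200.0)
    for latency in [120, 135, 150, 180, 450, 140, 130]:
        monitor.record(latency)
    print("p95:", monitor.p95(), "breached:", monitor.breached())
```

Using a percentile rather than an average keeps a handful of slow requests visible instead of letting many fast ones hide them.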

Have you considered how feedback loops can enhance your monitoring efforts? Creating these loops enables teams to collect insights from system behavior and user interactions and to make prompt adjustments. Regularly scheduled evaluations, and adjustments to the model based on new data, help reduce problems caused by drift and keep the system effective.
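One common way to quantify drift in a numeric input feature is the Population Stability Index (PSI), which compares how a recent sample is distributed across bins derived from a baseline sample. The article does not prescribe a drift metric, so treat this as one possible sketch. The function name, the choice of ten equal-width bins, and the small floor for empty bins are assumptions made for this example.

```python
import math


def population_stability_index(baseline, recent, bins=10):
    """Compare two samples of a numeric feature; larger values suggest more drift."""
    lo, hi = min(baseline), max(baseline)
    # Fall back to a width of 1.0 if every baseline value is identical.
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = proportions(baseline)
    actual = proportions(recent)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(1000)]
    shifted = [x + 5 for x in baseline]
    print("no drift:", population_stability_index(baseline, baseline))
    print("shifted: ", population_stability_index(baseline, shifted))
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift, though the right threshold depends on the feature and the application.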

As Veronica Drake emphasizes, monitoring systems after deployment is crucial to ensure they perform as intended and adapt to changing conditions. By adopting a proactive approach to monitoring, teams can sustain high effectiveness and adjust to evolving requirements.

For instance, Nubank's proactive oversight of its machine learning systems in credit risk evaluation exemplifies how continuous tracking can uphold accuracy and reliability, safeguarding customer trust. Moreover, structured A/B testing can achieve a 20-30% test success rate, which can significantly influence overall effectiveness metrics.

This comprehensive approach not only optimizes AI models through AI performance profiling but also fosters a culture of continuous improvement, which is essential in today's fast-paced technological landscape. It is crucial to recognize that relying exclusively on snapshot methods for monitoring can result in overlooked variations in results, highlighting the necessity for real-time analysis to detect issues as they emerge.

Integrate Profiling Practices into Development Workflows

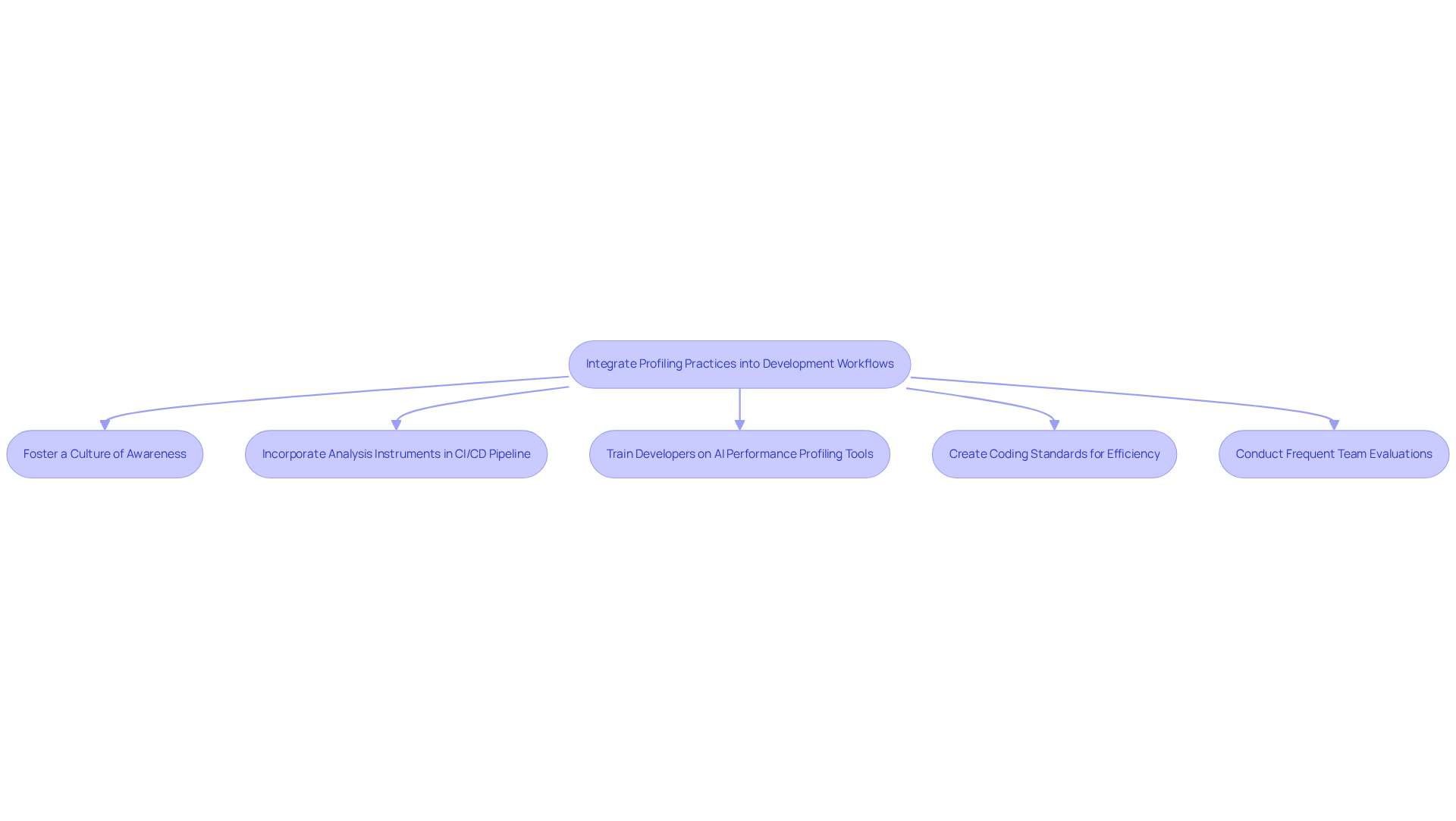

To integrate profiling into development workflows, teams must foster awareness of efficiency from the beginning. This starts with adding profiling tools to the continuous integration/continuous deployment (CI/CD) pipeline, so that automated performance checks run with each code commit. Did you know that 50% of developers now confirm regular use of CI/CD tools? This statistic emphasizes the importance of integrating AI performance profiling utilities within these pipelines.
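An automated performance check of this kind can be as simple as a script that times a critical function and fails the build when it exceeds a budget. The sketch below uses the standard `timeit` module. The function `hot_path`, the helper `check_budget`, and the 50 ms budget are hypothetical values chosen for illustration.

```python
import timeit


def hot_path(n=10_000):
    # Hypothetical function whose speed the team wants to protect.
    return sum(i * i for i in range(n))


def check_budget(func, budget_seconds, repeat=5, number=10):
    """Return (ok, best), where best is the fastest average time per call."""
    timings = timeit.repeat(func, repeat=repeat, number=number)
    # The minimum is the least affected by noise from other processes.
    best = min(timings) / number
    return best <= budget_seconds, best


if __name__ == "__main__":
    ok, best = check_budget(hot_path, budget_seconds=0.05)
    print(f"best={best * 1000:.3f} ms per call, within budget: {ok}")
    if not ok:
        # A nonzero exit code makes most CI systems mark the job as failed.
        raise SystemExit(1)
```

Because shared CI runners vary in speed, a budget with generous headroom, or a comparison against a stored baseline from the same runner, tends to produce fewer false alarms than a tight absolute limit.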

Training developers to utilize AI performance profiling tools during the development phase enables them to identify and correct efficiency issues early in the process. Furthermore, expert Antino stresses that the primary aim of a CI server is to consistently build and test applications whenever new modifications are pushed to the repository, highlighting the importance of quality checks.

Coding standards that explicitly address efficiency reinforce this focus throughout development. In addition, frequent team reviews of profiling results encourage collaboration and knowledge sharing and help keep all team members aligned on objectives and strategies.

For instance, a case study shows that CI/CD fosters collaboration and communication among development teams, leading to increased efficiency and reduced errors. This proactive approach to AI performance profiling can significantly enhance development efficiency, resulting in higher-quality software and faster delivery times.

Conclusion

AI performance profiling stands as a crucial element in ensuring the efficiency and effectiveness of AI systems. By systematically measuring resource usage and execution time, developers can pinpoint inefficiencies and optimize their code. This leads to improved application responsiveness and reduced operational costs. The integration of standardized benchmarking tests and advanced profiling tools further enhances the ability to evaluate AI systems across various platforms, underscoring the importance of continuous assessment and refinement in AI development.

Throughout this discussion, key methodologies such as sampling, instrumentation, and tracing have been examined, alongside the various tools available for AI performance analysis. The significance of continuous monitoring and optimization strategies is also highlighted, showcasing how proactive oversight can sustain system reliability and adapt to evolving conditions. Furthermore, incorporating performance profiling practices into development workflows is essential for cultivating a culture of efficiency and collaboration among development teams.

In conclusion, the insights shared underscore the vital role of AI performance profiling in the ongoing evolution of AI technologies. As organizations seek to leverage AI for competitive advantage, adopting robust profiling practices and methodologies becomes paramount. Embracing these best practices not only enhances code efficiency but also ensures that AI systems remain effective and responsive to the dynamic demands of the industry. The call to action is clear: prioritize AI performance profiling today to pave the way for tomorrow's innovations in artificial intelligence.

Frequently Asked Questions

What is AI performance profiling?

AI performance profiling is the process of measuring and analyzing how AI systems use resources during execution by collecting metrics such as CPU, GPU, and memory usage and execution time across various tasks.

Why is AI performance profiling important?

It is important because it helps identify inefficiencies in AI systems, enhances code, improves application responsiveness, and reduces operational costs. It also aids in recognizing slow functions, memory leaks, and other operational challenges.

What tools are commonly used for AI performance profiling?

Common tools for AI performance profiling include TensorFlow Profiler, PyTorch Profiler, NVIDIA Nsight Systems, and Intel VTune, which provide detailed insights into system efficiency and resource utilization.

What are standardized benchmarking tests in AI evaluation?

Standardized benchmarking tests are essential for ensuring that research findings are adaptable across different platforms. They facilitate consistent comparisons of system capabilities across various frameworks and architectures.

What is the Performance Benchmark Harness (PBH)?

The Performance Benchmark Harness (PBH) is a systematic approach to tracking efficiency in AI systems, allowing for consistent comparisons of capabilities across different frameworks and architectures.

How do industry leaders view the significance of AI performance profiling?

Industry leaders like Sundar Pichai, CEO of Google, and Ginni Rometty, former CEO of IBM, emphasize that AI's transformative potential is tied to efficient evaluation and optimization, and that organizations leveraging AI responsibly will gain a competitive edge.

What trends are emerging in AI performance profiling as we approach 2025?

Emerging trends highlight the importance of standardized metrics that facilitate consistent comparisons across AI systems, with organized tracking methods like the PBH leading to significant improvements in AI model efficiency and reliability.