Overview

Coding challenges often slow software development. This article explains how to use AI in software testing, showing how technologies such as machine learning and natural language processing can automate testing tasks. That automation can improve accuracy and speed while reducing costs, and it frees developers to concentrate on the complex issues that need their expertise. Adopting these AI solutions can improve both productivity and code quality. Are you ready to explore how AI can change your testing practices?

Introduction

The integration of artificial intelligence into software testing is revolutionizing the industry, promising unprecedented efficiency and accuracy. By automating essential tasks such as test case creation and result analysis, AI alleviates the burden of repetitive work while significantly enhancing defect identification rates.

However, as organizations rush to adopt these innovative solutions, they often encounter challenges that can hinder their success. What are the key strategies to effectively harness AI in testing and overcome these obstacles to maximize productivity and quality?

Furthermore, understanding these strategies can empower teams to not only adopt AI but also leverage it to achieve superior results.

Understand AI Testing Fundamentals

AI testing, referred to in this article as AI evaluation, applies artificial intelligence to the software assessment process, automating essential tasks such as test case creation, execution, and result analysis. It addresses common challenges developers face and improves the efficiency and accuracy of testing.

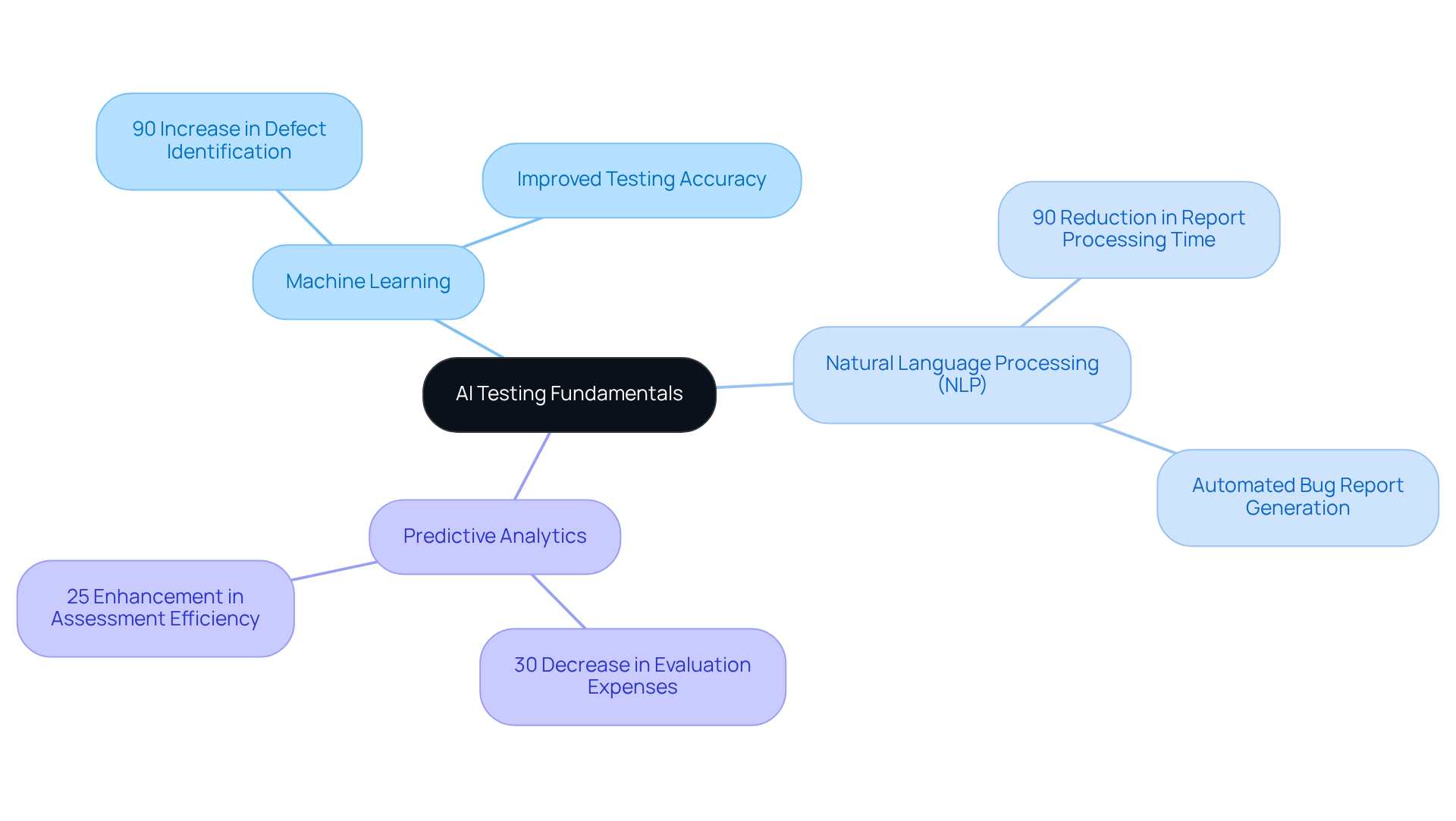

Key components of AI evaluation include:

- Machine Learning: Algorithms that learn from data to improve testing accuracy over time. AI evaluation can increase defect identification rates by as much as 90% compared to manual assessments, which greatly enhances software dependability.

- Natural Language Processing (NLP): This technology enables AI to understand and interpret human language, making it invaluable for analyzing requirements and generating test cases. Imagine automating the generation of standardized bug reports, which can improve communication clarity and reduce report processing time by 90%.

- Predictive Analytics: By utilizing historical data, predictive analytics helps recognize potential defects and prioritize evaluation efforts. This proactive approach can lead to a 30% decrease in evaluation expenses and a 25% enhancement in assessment efficiency, ensuring that critical areas are thoroughly examined before release.
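The predictive analytics idea in the list above can be sketched in a few lines. This is a minimal illustration, not a production model: the `ModuleHistory` fields, the weights in `risk_score`, and the module names in the usage note are all hypothetical, and a real system would learn its weights from your own defect history.

```python
from dataclasses import dataclass


@dataclass
class ModuleHistory:
    """Historical signals for one module (all fields are illustrative)."""
    name: str
    past_defects: int      # defects traced to this module in earlier releases
    recent_changes: int    # commits touching the module since the last release
    test_runs: int         # how many times its tests have run
    test_failures: int     # how many of those runs failed


def risk_score(m: ModuleHistory) -> float:
    """Combine history into one score; the weights are arbitrary assumptions."""
    failure_rate = m.test_failures / m.test_runs if m.test_runs else 0.0
    return 0.5 * m.past_defects + 0.3 * m.recent_changes + 20.0 * failure_rate


def prioritize(modules: list[ModuleHistory]) -> list[str]:
    """Return module names ordered from highest to lowest estimated risk."""
    return [m.name for m in sorted(modules, key=risk_score, reverse=True)]
```

Calling `prioritize` on a list of `ModuleHistory` records returns the module names in the order their tests might be run or reviewed, so the riskiest areas are examined first.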

Understanding these concepts shows how AI can simplify your testing methods, improve precision, and boost overall effectiveness in software development. Are you ready to explore the transformative potential of AI in your software assessment processes?

Identify Benefits of AI in Testing

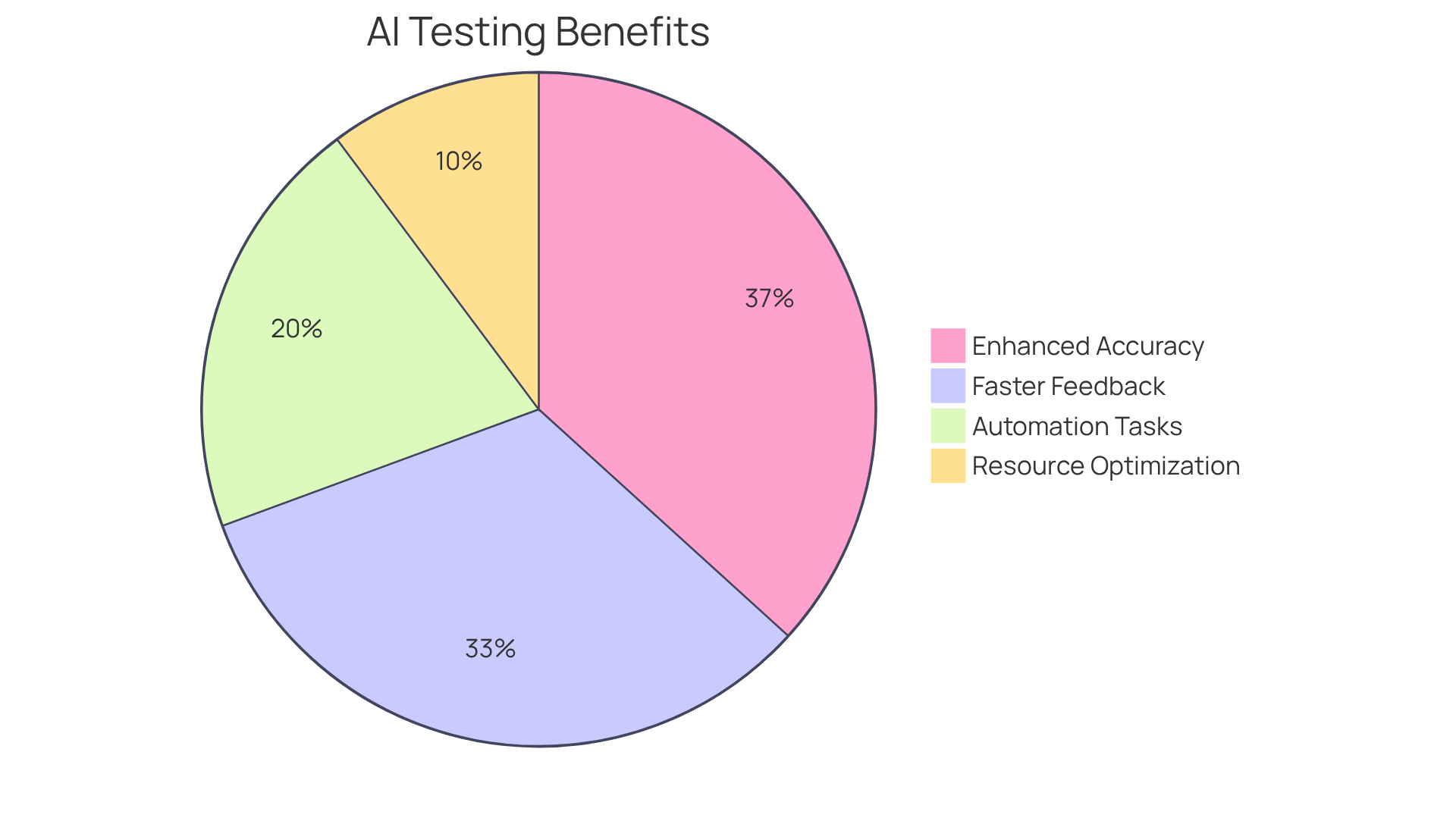

Using AI in testing offers several advantages that change how developers approach their work. Are repetitive tasks consuming valuable time? AI can automate them, allowing testers to focus on more complex issues. In fact, test automation has replaced 50% or more of manual evaluation efforts in 46% of cases, significantly enhancing productivity.

Furthermore, AI enhances accuracy. Machine learning algorithms can identify patterns and anomalies that human testers might overlook, reducing the likelihood of defects. Automation can enhance defect identification by as much as 90% compared to manual methods, ensuring higher quality outputs.
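To make the idea of spotting anomalies concrete, here is a small sketch that flags tests whose run time is far from the rest of the suite. It is a simple statistical stand-in (a modified z-score based on the median), not a trained machine learning model, and the function name, test names, and threshold are assumptions chosen for illustration.

```python
from statistics import median


def find_anomalies(durations: dict[str, float], threshold: float = 3.5) -> list[str]:
    """Return names of tests whose duration is an outlier.

    Uses the modified z-score: 0.6745 * |x - median| / MAD, where MAD is the
    median absolute deviation. The 3.5 cutoff is a common rule of thumb.
    """
    values = list(durations.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # All durations are (nearly) identical, so nothing stands out.
        return []
    return sorted(
        name
        for name, value in durations.items()
        if 0.6745 * abs(value - med) / mad > threshold
    )
```

A test that suddenly takes several times longer than its peers is often an early sign of a performance regression or a flaky dependency, which is the kind of pattern a human reviewer scanning hundreds of results can miss.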

In addition, AI in testing enables faster feedback loops. It can execute tests more quickly, providing immediate feedback to developers and speeding up the development cycle. Test automation can reduce developers' feedback response time by up to 80%, enabling quicker iterations and improvements.

Moreover, resource optimization is a key benefit. By automating evaluations, teams can allocate resources more effectively, reducing costs associated with manual assessments. About 25% of companies that invested in test automation reported immediate ROI, highlighting the financial benefits of AI adoption.

Understanding these advantages will help you see where AI fits within your testing strategy. More than 60% of firms report a return on investment from automated testing tools, further emphasizing the strategic value of incorporating AI into testing procedures.

Implement AI Tools for Testing Automation

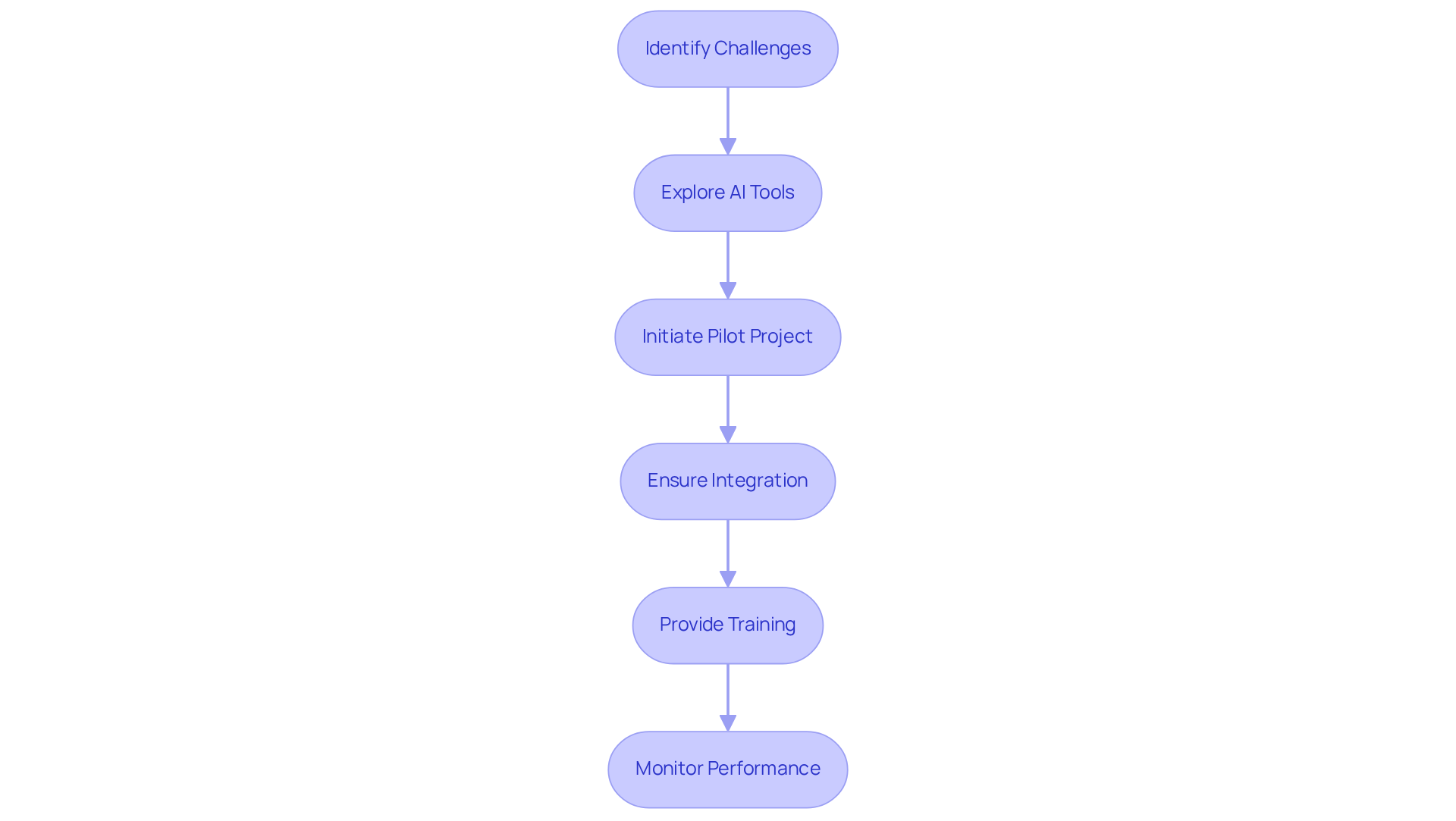

To effectively implement AI tools for testing automation, consider the following steps:

To begin, identify the specific testing challenges your team encounters. Understanding these pain points will help you determine where AI can provide targeted solutions. Notably, 70% of AI agent users report reduced time spent on specific development tasks, highlighting the potential benefits of targeted AI integration. Kodezi, for example, can automatically analyze bugs and optimize code, significantly enhancing productivity.

Next, explore the AI testing applications available on the market. Focus on features that align with your identified needs, such as automated test case generation, defect prediction, and integration capabilities. Kodezi, for example, provides an AI-driven programming tool that corrects code automatically, making it a useful addition to your toolkit.

Furthermore, run a pilot project to assess the effectiveness of the software. Select a small, manageable codebase to test its capabilities, allowing for a focused assessment of its performance. This approach matches the trend in which 46% of teams have replaced 50% or more of their manual testing with automation, showing the efficiency gains that AI in testing can deliver. Kodezi's CLI can support this step by swiftly repairing codebases, making it a good candidate for pilot evaluation.

In addition, ensure that the system integrates seamlessly with your existing Continuous Integration/Continuous Deployment (CI/CD) pipeline. This integration is crucial for enabling continuous testing and maintaining a smooth workflow, as 54% of developers are adopting DevOps for faster development cycles. The platform's capabilities can be utilized here to streamline the integration process and enhance overall efficiency.
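One common way to connect a testing tool to a pipeline is to have it write a JUnit-style XML report and let a small gate script decide whether the build may continue. The sketch below assumes your test runner can produce such a report (pytest can, through its `--junitxml` option). The function names and the `max_failed` parameter are hypothetical, and suites nested inside other suites would be double counted by this simple version.

```python
import xml.etree.ElementTree as ET


def summarize_junit(xml_text: str) -> dict[str, int]:
    """Add up test, failure, and error counts across all <testsuite> elements."""
    root = ET.fromstring(xml_text)
    totals = {"tests": 0, "failures": 0, "errors": 0}
    # iter() includes the root itself, so this works whether the report's
    # root element is <testsuites> or a single <testsuite>.
    for suite in root.iter("testsuite"):
        for key in totals:
            totals[key] += int(suite.get(key, 0))
    return totals


def gate_exit_code(totals: dict[str, int], max_failed: int = 0) -> int:
    """Return 0 (pass) or 1 (fail) for use as a CI step's exit status."""
    failed = totals["failures"] + totals["errors"]
    return 0 if failed <= max_failed else 1
```

In a pipeline, a short wrapper could read the report file, call these two functions, and pass the result to `sys.exit`, so a failing gate stops the deployment stage.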

Moreover, provide comprehensive training for your team on the platform, emphasizing best practices and troubleshooting techniques. This training will enable your team to utilize the resource effectively and maximize its potential. Expert insights indicate that efficient training can greatly improve the acceptance and functionality of AI resources in evaluations, including features for automatic bug analysis and code correction.

Finally, continuously monitor the performance of Kodezi and gather feedback from your team. Utilize this information to enhance its application, ensuring that the resource develops in line with your testing requirements. Organizations that actively monitor and adjust their AI tool usage report higher satisfaction and productivity levels. Kodezi's ongoing updates and support can further assist in this optimization effort.
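Monitoring is easier when it rests on a metric you can track from release to release. As one possible example, the sketch below computes an escaped defect rate (the share of defects found in production rather than in testing) and flags a period that is clearly worse than the earlier average. The metric choice, function names, and the 0.05 tolerance are assumptions for illustration, not something prescribed by any particular tool.

```python
from statistics import mean


def escaped_defect_rate(caught_in_testing: int, found_in_production: int) -> float:
    """Share of all known defects that slipped past testing."""
    total = caught_in_testing + found_in_production
    return found_in_production / total if total else 0.0


def is_regressing(history: list[float], tolerance: float = 0.05) -> bool:
    """True when the latest rate exceeds the mean of earlier periods by more than tolerance."""
    if len(history) < 2:
        # Not enough data to compare against.
        return False
    *earlier, latest = history
    return latest > mean(earlier) + tolerance
```

Feeding `is_regressing` one rate per release gives a simple signal for when to revisit how the tool is configured or how the team is using it.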

By following these steps, you can put AI to work in your testing processes, enhancing efficiency and accuracy while reducing the time spent on repetitive tasks.

Troubleshoot Common AI Testing Challenges

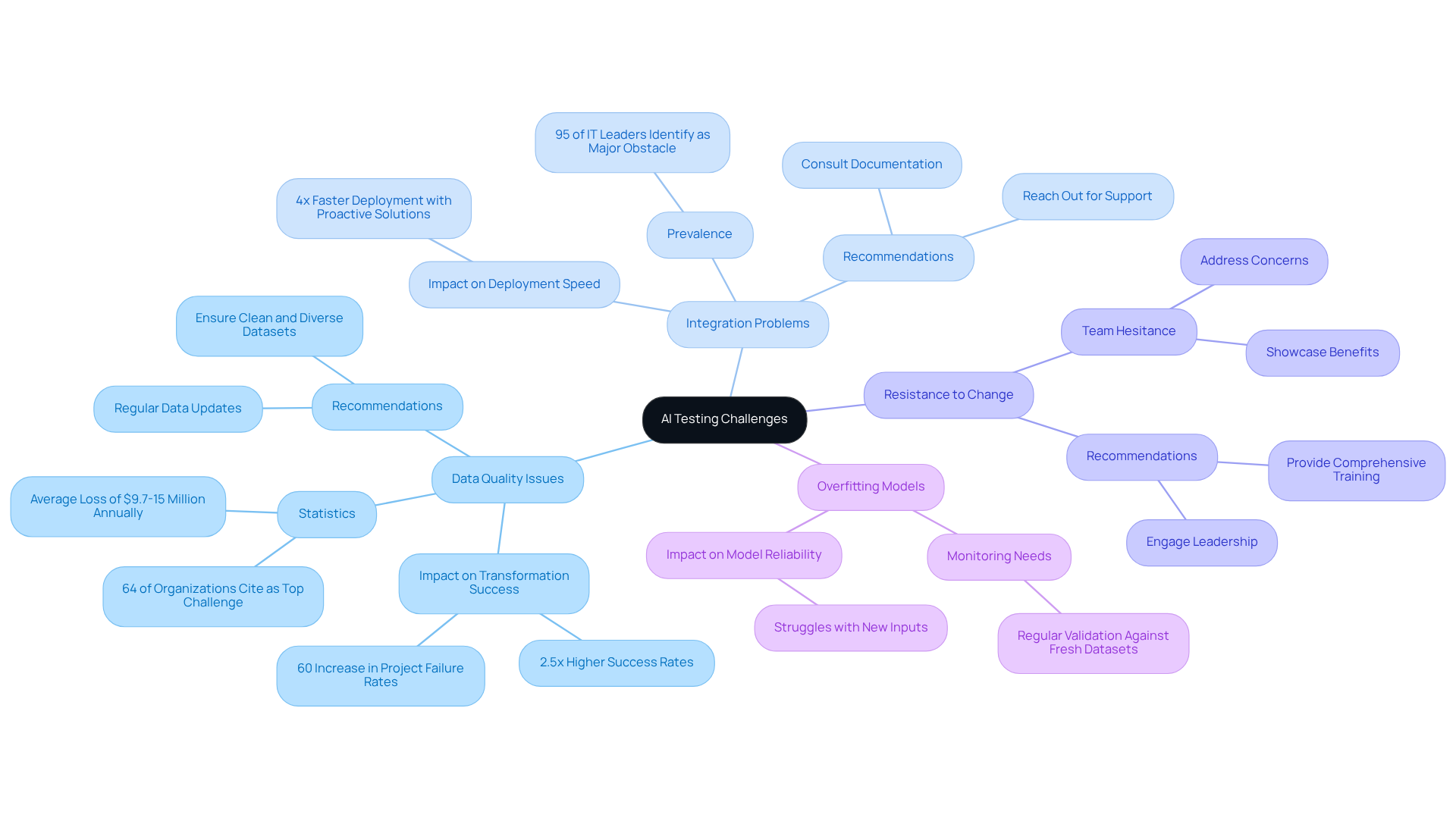

Organizations often face several challenges when they adopt AI in testing. Understanding these hurdles is essential for a successful transition to AI-enhanced testing.

- Data Quality Issues: The integrity of AI models depends heavily on the quality of the training data. It is crucial to ensure that datasets are clean, diverse, and reflective of real-world scenarios. Regular updates are vital; organizations that prioritize data quality experience 2.5 times higher transformation success rates. Conversely, poor data quality is the leading challenge for 64% of organizations, resulting in significant project failures and inefficiencies. Additionally, quality problems can increase project failure rates by 60%, highlighting the critical need for high data standards. As one often-quoted line puts it, "Good quality data is the holy grail, and that’s what you should always aim for."

- Integration Problems: If your AI application struggles to integrate with existing systems, it’s advisable to consult the documentation or reach out to the support team for configuration assistance. Integration issues are a prevalent barrier, with 95% of IT leaders identifying them as a major obstacle to effective AI implementation. Organizations that proactively address these challenges can achieve up to four times faster AI deployment.

- Resistance to Change: Team members may be hesitant to embrace AI tools. To mitigate this, it’s important to address their concerns by showcasing the benefits and providing comprehensive training. Engaging leadership in the process can significantly enhance team performance and acceptance of new technologies.

- Overfitting Models: It is essential to monitor AI models for signs of overfitting, where they perform well on training data but struggle with new inputs. Regular validation against fresh datasets is crucial for maintaining model reliability.
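The overfitting check described in the last item can be reduced to a simple comparison: measure accuracy on the training data and on a fresh holdout set, then look at the gap. The sketch below assumes a classification-style model whose predictions can be compared directly with labels. The function names and the 0.10 gap threshold are illustrative assumptions, and the right threshold depends on your data.

```python
def accuracy(predictions: list, labels: list) -> float:
    """Fraction of predictions that match their labels."""
    if not labels or len(predictions) != len(labels):
        raise ValueError("predictions and labels must be non-empty and equal in length")
    correct = sum(p == y for p, y in zip(predictions, labels))
    return correct / len(labels)


def looks_overfit(train_accuracy: float, holdout_accuracy: float, max_gap: float = 0.10) -> bool:
    """Flag a model whose training accuracy exceeds holdout accuracy by more than max_gap."""
    return (train_accuracy - holdout_accuracy) > max_gap
```

Running this comparison each time the model is retrained, with a holdout set that is refreshed regularly, turns "validate against fresh datasets" into a repeatable check.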

By proactively addressing these challenges, organizations can make a smoother transition to AI-enhanced testing, ultimately maximizing efficiency and effectiveness.
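Returning to the data quality item in the list above, a few basic checks can be automated before any training run. This is a minimal sketch that assumes training examples are stored as flat dictionaries with hashable values. The function name, the `label` key, and the report fields are hypothetical.

```python
from collections import Counter


def data_quality_report(records: list[dict], label_key: str = "label") -> dict:
    """Count rows with missing values, exact duplicate rows, and label frequencies."""
    rows_with_missing = sum(
        1 for r in records if any(v is None or v == "" for v in r.values())
    )

    seen = set()
    duplicate_rows = 0
    for r in records:
        # Sort items by key so field order does not affect duplicate detection.
        fingerprint = tuple(sorted(r.items()))
        if fingerprint in seen:
            duplicate_rows += 1
        else:
            seen.add(fingerprint)

    label_counts = Counter(r.get(label_key) for r in records)
    return {
        "total": len(records),
        "rows_with_missing": rows_with_missing,
        "duplicate_rows": duplicate_rows,
        "label_counts": dict(label_counts),
    }
```

A heavily skewed `label_counts` or a high duplicate count is a prompt to collect more varied examples, in line with the advice to keep datasets clean, diverse, and reflective of real-world scenarios.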

Conclusion

Mastering the integration of AI in testing can revolutionize software development processes, enhancing efficiency and accuracy while addressing common challenges faced by developers. By leveraging advanced technologies such as machine learning, natural language processing, and predictive analytics, organizations can streamline their testing procedures, ultimately leading to significant improvements in software quality and reliability.

Furthermore, key insights from the article highlight the transformative benefits of AI in testing, including:

- The automation of repetitive tasks

- Enhanced defect identification

- Faster feedback loops

The implementation of AI tools not only optimizes resource allocation but also promotes cost savings, as evidenced by the reported return on investment from automated evaluation resources. In addition, understanding and overcoming common challenges such as data quality issues and integration problems are crucial for successful AI adoption in testing.

Embracing AI in testing is not merely about keeping up with technological advancements; it is an essential step toward achieving a competitive edge in the software development landscape. Organizations are encouraged to explore the latest trends in AI testing tools and methodologies, actively invest in training their teams, and continuously monitor performance to maximize the potential of these innovative solutions. By doing so, they will not only enhance their testing processes but also ensure that they are well-prepared for the evolving demands of the industry in the years to come.

Frequently Asked Questions

What is AI evaluation in software testing?

AI evaluation transforms the software assessment process by using artificial intelligence technologies to automate tasks such as test case creation, execution, and result analysis, enhancing efficiency and accuracy.

What are the key components of AI evaluation?

The key components of AI evaluation include Machine Learning, Natural Language Processing (NLP), and Predictive Analytics.

How does Machine Learning improve software testing?

Machine Learning uses algorithms that learn from data to improve testing accuracy over time, potentially increasing defect identification rates by up to 90% compared to manual assessments.

What role does Natural Language Processing (NLP) play in AI evaluation?

NLP enables AI to understand and interpret human language, making it essential for analyzing requirements and generating test cases, such as automating standardized bug reports to improve communication clarity.

How does Predictive Analytics contribute to software testing?

Predictive Analytics uses historical data to identify potential defects and prioritize evaluation efforts, which can lead to a 30% reduction in evaluation costs and a 25% increase in assessment efficiency.

What are the benefits of using AI in software testing?

Using AI in software testing simplifies evaluation methods, enhances precision, boosts overall effectiveness, and ensures critical areas are thoroughly examined before release.