Introduction

Bottlenecks are common obstacles that hinder efficiency and productivity in various domains, from manufacturing to software development. Understanding and resolving these bottlenecks is crucial for achieving optimal performance. In this article, we explore the causes and identification of bottlenecks, as well as the tools and techniques used for their detection.

We also delve into strategies for resolving bottlenecks, optimizing performance, and managing production schedules. Additionally, we discuss the importance of continuous improvement and regular bottleneck analysis in maintaining high-performance systems. By adopting these approaches, organizations can streamline their operations, enhance productivity, and achieve maximum efficiency.

What is a Bottleneck?

Understanding bottlenecks is vital in any system where efficiency matters. Bottlenecks are choke points that restrict the flow of a process, causing delays and reduced productivity. They occur in many domains, from manufacturing lines to digital infrastructure. At Meta, for instance, managing a vast infrastructure at scale pushed the team to rethink conventional solutions: they moved away from planning capacity for each service individually and instead managed the entire fleet through shared capacity pools.

Amdahl's Law offers a useful perspective on bottlenecks in computing: the overall speedup of a system is limited by the fraction of the work that cannot be parallelized or otherwise improved. To illustrate, analyzing a 1TB text dataset may be straightforward, but scaling up to 1PB or more with complex computations demands new strategies to overcome potential bottlenecks. In the software industry, where 41% of developers work, the difference between being satisfied and merely complacent in one's job can hinge on the ability to work efficiently and without undue delays.
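
To make Amdahl's Law concrete, the following minimal sketch computes the theoretical speedup for a given parallelizable fraction and worker count. The function name `amdahl_speedup` and the 90% figure are illustrative assumptions, not values taken from any of the cases discussed here.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Theoretical speedup when only `parallel_fraction` of the work scales with `workers`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    # The serial part is untouched by adding workers; only the parallel part shrinks.
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Assumed example: 90% of the workload can be parallelized.
    for workers in (1, 4, 16, 1024):
        print(workers, round(amdahl_speedup(0.9, workers), 2))
```

With 90% of the work parallelizable, the speedup stays below 10x no matter how many workers are added, because the remaining serial 10% becomes the bottleneck.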

Performance evaluation matters for a further reason: practitioners observe that a good analysis improves not only the system at hand but also the developer's intuition for future projects. Moreover, AI pair-programming tools are raising developer productivity across the board, especially for beginners, who now have access to sophisticated tools and comprehensive guidance that simplify complex processes.

Causes of Bottlenecks

Bottlenecks, the constrictions that slow or halt progress within a system, are multifaceted challenges in software development. They can stem from many factors, ranging from limited resources to suboptimal system architecture. For instance, Meta's approach to software monoliths at scale required a shift from managing individual services to managing shared capacity pools, a sign that scale can demand novel solutions. Likewise, the 'regionalization problem' addressed by Meta's team highlights the importance of strategic planning and cooperation among different groups in resolving such challenges.

A significant insight from project management is Brooks' Law, which holds that adding people to a late software project makes it later, primarily because of the added communication overhead and the time needed to bring new team members up to speed. This principle emphasizes the value of a skilled team and clear communication over simply increasing headcount.
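
One common way to illustrate the communication overhead behind Brooks' Law is to count the pairwise communication paths in a team, which grows as n(n-1)/2. The sketch below is a simplification that assumes every team member may need to coordinate with every other; the function name is hypothetical.

```python
def communication_paths(team_size: int) -> int:
    """Number of distinct pairs in a team of `team_size` people."""
    if team_size < 0:
        raise ValueError("team_size must not be negative")
    # Each of the n people can pair with n - 1 others; divide by 2 to avoid double counting.
    return team_size * (team_size - 1) // 2


if __name__ == "__main__":
    for size in (3, 5, 10, 20):
        print(size, communication_paths(size))
```

Doubling a team from 5 to 10 people raises the number of possible pairings from 10 to 45, which is why coordination cost can outpace the added capacity.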

From an industry perspective, software development depends on professionals who are satisfied and engaged. However, statistics indicate that only 19% of developers are truly content with their jobs, which points to the need for better work environments and methodologies that reduce burnout and dissatisfaction, both of which can contribute to bottlenecks.

Moreover, software maintenance and enhancement are notorious for their complexity and resource consumption. Studies have shown that a significant portion of a data processing department's resources is devoted to application software maintenance, which underlines the importance of robust exception handling and error mitigation strategies.

Lastly, developers often find themselves in a paradox where being 'lazy'—in the sense of seeking the path of least resistance—can lead to efficient problem-solving. This highlights the importance of creative solutions and the ability to judiciously use available resources to overcome challenges and maintain progress.

In summary, understanding the complex nature of bottlenecks is essential for identifying and resolving them efficiently. This includes recognizing the role of scale, the importance of team dynamics and clear communication, the need for a satisfying work environment, the complexities of software maintenance, and the value of creative problem-solving.

Identifying Bottlenecks

Identifying bottlenecks is a crucial task in ensuring seamless and efficient operations within complex systems. One illustrative example is the realm of microbiome research, where machine learning algorithms are employed to navigate through immense data sets to pinpoint informative patterns and connections. Similarly, the analysis of distributed applications, such as those comprising numerous microservices, leverages detailed metrics like latency and requests to facilitate root cause analysis. This involves dissecting performance issues to their core, which, though arduous, is vital for robust system functioning.
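
As a rough illustration of using latency metrics for root cause analysis, the sketch below ranks services by their 95th-percentile latency so the slowest one surfaces first. The service names and sample values are hypothetical, and a real system would read these numbers from its monitoring stack rather than from a literal dictionary.

```python
from statistics import quantiles


def p95_latency(samples_ms):
    """95th-percentile latency in milliseconds; needs at least two samples."""
    # With n=100, quantiles() returns 99 cut points; index 94 is the 95th percentile.
    return quantiles(samples_ms, n=100, method="inclusive")[94]


def rank_services_by_p95(latencies_by_service):
    """Return (service, p95) pairs sorted from slowest to fastest."""
    ranked = [(name, p95_latency(samples)) for name, samples in latencies_by_service.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


# Hypothetical latency samples in milliseconds.
latencies = {
    "auth": [12, 15, 11, 14, 13, 16],
    "catalog": [40, 38, 45, 300, 42, 41],
    "checkout": [80, 85, 79, 90, 88, 84],
}

if __name__ == "__main__":
    for service, p95 in rank_services_by_p95(latencies):
        print(f"{service}: p95 = {p95:.1f} ms")
```

A tail percentile is used here instead of the mean because a single slow outlier, like the 300 ms sample above, is often what users experience as a bottleneck.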

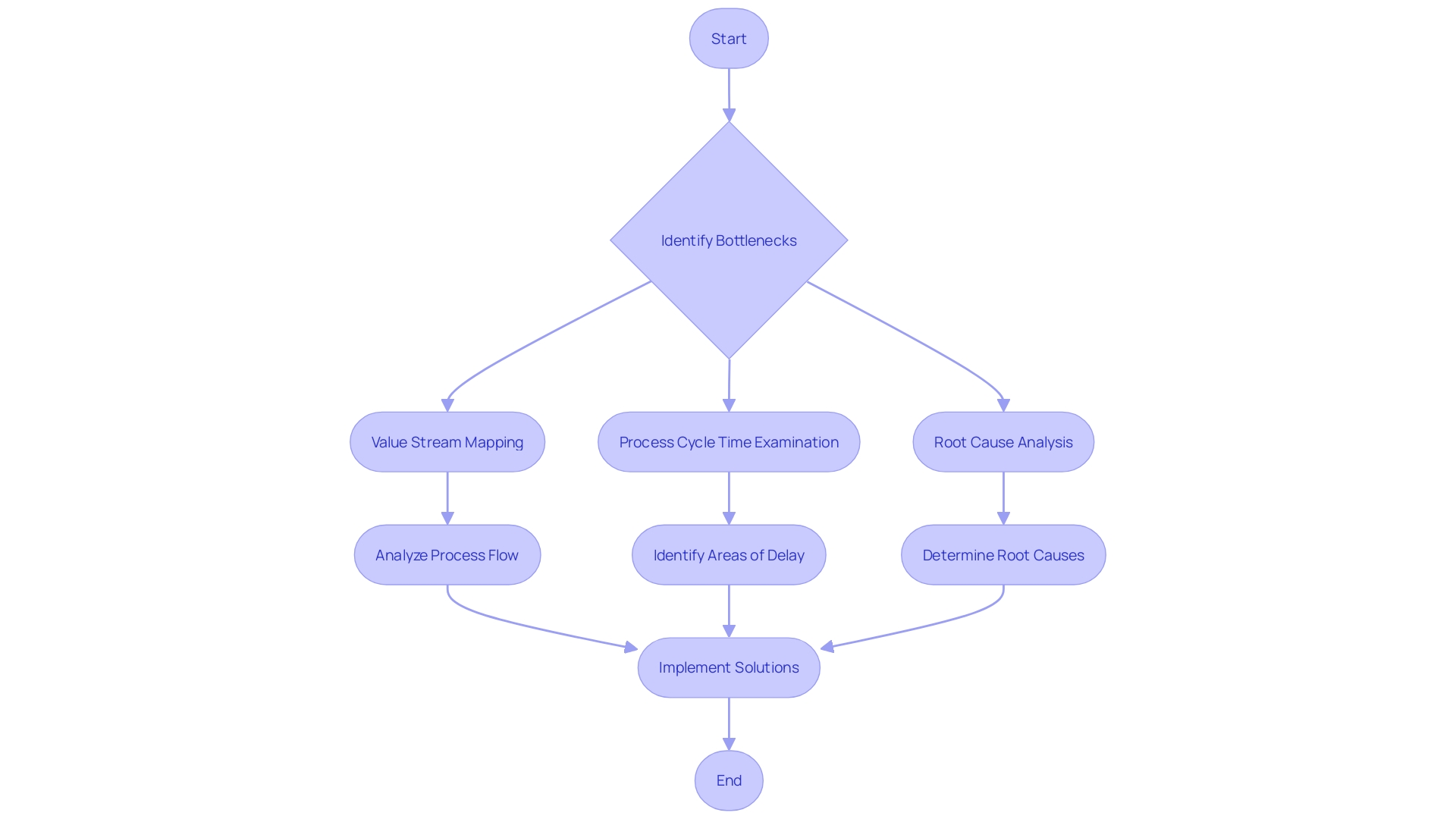

To effectively diagnose bottlenecks, several strategies can be employed. Value stream mapping offers a visual representation of process flow, highlighting areas of delay. Process cycle time analysis scrutinizes the time taken for each stage, revealing inefficiencies. Root cause analysis is pivotal, using methods like the 5 Whys and Fishbone diagrams to trace issues to their origin. These methods focus on pinpointing exactly where constraints occur, enabling targeted actions to improve performance and reliability.

Software requires continuous maintenance to fix bugs and adapt to new dependencies. Maintenance, as distinct from extension, ensures the software continues to perform as originally specified while remaining secure and operational. As companies move from exploration to exploitation in project development, they take on strategic technical debt to speed up innovation and later shift their focus to product robustness. This phased approach to software management highlights the importance of flexibility and adaptability in addressing constraints and sustaining the health of the system as a whole.
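
Process cycle time analysis can be sketched in a few lines: average the observed time per stage and flag the slowest one. The stage names and durations below are made-up sample data, and the function name is an assumption for illustration only.

```python
def find_bottleneck_stage(cycle_times):
    """Return (stage, average_time) for the stage with the longest average cycle time."""
    averages = {stage: sum(times) / len(times) for stage, times in cycle_times.items()}
    slowest = max(averages, key=averages.get)
    return slowest, averages[slowest]


# Hypothetical cycle times in hours for each stage of a delivery process.
observed = {
    "design": [2, 3, 2],
    "build": [5, 6, 7],
    "review": [10, 12, 11],
    "deploy": [1, 1, 2],
}

if __name__ == "__main__":
    stage, hours = find_bottleneck_stage(observed)
    print(f"Slowest stage: {stage} ({hours:.1f} h on average)")
```

In this sample the review stage dominates, so that is where a 5 Whys or Fishbone exercise would start.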

Tools and Techniques for Bottleneck Identification

Using the right tools and techniques to identify constraints is essential to maintaining an efficient operation. Performance monitoring software stands at the forefront, providing real-time insight into performance and pinpointing inefficiencies. Data collection and analysis tools go further, providing a detailed view of operational data to reveal patterns and anomalies that may indicate constraints. Process visualization tools, in turn, offer a visual representation of workflows, making it easier to spot areas of congestion that impede productivity. Used well, these tools turn the complex task of identifying performance constraints into a manageable and accurate process that helps keep operations productive and efficient.
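
Dedicated monitoring products do far more, but the core idea of measuring where time goes can be sketched with the standard library alone. The `timed` helper and the three pipeline stages below are hypothetical stand-ins for real workloads.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(stage_name, sink):
    """Accumulate the wall-clock time of the enclosed block into `sink[stage_name]`."""
    start = time.perf_counter()
    try:
        yield
    finally:
        sink[stage_name] = sink.get(stage_name, 0.0) + (time.perf_counter() - start)


def run_pipeline():
    """Run three placeholder stages and return the result with per-stage timings."""
    timings = {}
    with timed("load", timings):
        data = list(range(100_000))
    with timed("transform", timings):
        squared = [x * x for x in data]
    with timed("summarize", timings):
        total = sum(squared)
    return total, timings


if __name__ == "__main__":
    _, timings = run_pipeline()
    for stage, seconds in sorted(timings.items(), key=lambda item: item[1], reverse=True):
        print(f"{stage}: {seconds * 1000:.2f} ms")
```

Sorting the stages by elapsed time gives a first, coarse view of where a profiler or monitoring tool should look more closely.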

Data Collection and Analysis for Bottleneck Identification

To effectively identify bottlenecks in systems and processes, it is essential to collect and analyze data carefully, using both qualitative and quantitative metrics. For qualitative metrics in particular, teams need practical guidance on how to capture, track, and use the information. In the pursuit of developer productivity, strategic technical debt plays a role in the exploration phase of research. During this phase, a variety of solutions are explored rapidly, which is crucial for research-oriented companies aiming to innovate quickly.

When analyzing data, we often encounter unstructured and incomplete datasets. This is where researchers like Dean Knox come in, emphasizing the importance of dataset construction and the danger that bias leads to misleading conclusions. The work of Knox and his colleagues in compiling data to quantify specific elements of social problems demonstrates the value of thorough data evaluation in real-world applications.

Furthermore, software performance engineering offers two approaches to improving performance: predictive model-based and measurement-based. Both approaches are evolving, and they need to converge to cover the entire development cycle effectively. This highlights the importance of performance as a pervasive quality in software systems.

To illustrate the power of data analysis, the ORG.one project supports fair and localized sequencing of critically endangered species. By uploading data to an open-access database, the project makes it available to anyone, anywhere, to aid conservation efforts. This project exemplifies how data management and sharing can have a significant impact on real-world issues.

Ultimately, as we collect and examine data to identify constraints, it is worth remembering that any level of data management is better than none. Case studies from different areas, such as genomics and social science, emphasize the importance of sound data evaluation and management for effective and productive research and conservation work.

Common Mistakes to Avoid During Bottleneck Analysis

To effectively navigate bottleneck identification, it's crucial to recognize and avoid common missteps. For instance, the all-too-easy mistake of inadequate planning can lead to an underprepared analysis, especially during high-stakes periods such as a major product release. A robust approach involves ensuring ample resources, including inventory and workforce, are in place and confirming that all systems, akin to an e-commerce website's functionality, are optimized.

Another common mistake in data handling is allowing consumer lag to accumulate, as highlighted by the experience of HubSpot's engineering team with Apache Kafka. When messages are published faster than they can be processed, a backlog builds up and undermines the accuracy and timeliness of data analysis. To maintain the integrity and reliability of that analysis, it is crucial to adopt best practices at every stage, from defining the research question to communicating the results.
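
Consumer lag is simply the gap between the newest offset in a partition and the offset the consumer has committed. The sketch below works on plain dictionaries of offsets and deliberately uses no Kafka client API; how the offsets are fetched, and the function names themselves, are assumptions left to the reader's tooling.

```python
def consumer_lag(end_offsets, committed_offsets):
    """Per-partition lag: newest offset minus the consumer's committed offset."""
    lag = {}
    for partition, end in end_offsets.items():
        # Assumption: a partition with no committed offset is treated as starting at 0.
        committed = committed_offsets.get(partition, 0)
        lag[partition] = max(end - committed, 0)
    return lag


def is_falling_behind(lag_history, min_samples=3):
    """True when total lag grew strictly across the last `min_samples` readings."""
    recent = lag_history[-min_samples:]
    if len(recent) < min_samples:
        return False
    return all(earlier < later for earlier, later in zip(recent, recent[1:]))


if __name__ == "__main__":
    # Hypothetical offsets for a two-partition topic.
    lag = consumer_lag({0: 100, 1: 250}, {0: 90, 1: 250})
    print(lag, sum(lag.values()))
    print(is_falling_behind([5, 10, 20]))
```

Tracking the trend rather than a single reading matters: a brief spike in lag is normal, while lag that keeps growing means producers are outpacing consumers.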

The practice of 'dogfooding,' or using your own software, as the Technical Marketing Team at Cribl does, can be a valuable strategy for detecting and mitigating errors early. By using your own products internally, you gain firsthand insight into usability and can proactively address potential bottlenecks.

In research-oriented companies, strategic technical debt is often taken on during the exploration phase to test many ideas swiftly. This is balanced during the exploitation phase by hardening the best solution. Amdahl's Law further reminds us that improving some components of a system will not necessarily improve overall performance if other elements remain inefficient.

In conclusion, by integrating these insights and strategies into your bottleneck analysis process, you can enhance the accuracy of your findings and foster a more effective and efficient operational environment.

Strategies for Resolving Bottlenecks

Recognizing bottlenecks is only the initial step in enhancing performance. The real challenge lies in the strategic resolution of these pain points. To achieve this, a multifaceted approach, encompassing process optimization, judicious resource allocation, workflow redesign, and the integration of automation, is essential. These strategies are not just theoretical constructs but have been practically applied by industry leaders to drive sustainable growth and efficiency.

Rivian, an innovative electric vehicle manufacturer, exemplifies this approach. With a global presence and a dispersed U.S. team working on various aspects of automotive design and production, Rivian faced challenges in maintaining efficient, sustainable processes. Addressing such complexity, they've likely employed process optimization and workflow redesign to synchronize teams across different geographies, thereby reducing waste and speeding up production.

Similarly, AT&T faced the resistance of long-established structures and policies that hindered their operations. They embarked on a transformative journey that included restructuring their processes and systems, inspired by the candid feedback from their annual employee survey that highlighted the urgent need for change.

OnProcess Technology, now part of Accenture, showcases the significance of supply chain operations as a competitive edge. Their emphasis on domain expertise, data, and processes to revolutionize supply chains aligns with the requirement for a strong strategy that reduces obstacles and enhances operational resilience.

These cases highlight the significance of a comprehensive approach to resolution, utilizing both predictive model-based strategies and measurement-based tactics to cover the entire development cycle. As we advance, AI tools like GitHub Copilot demonstrate the tangible benefits of technology in aiding developers, enhancing productivity across various parameters such as task time, product quality, and cognitive load. By integrating such tools, organizations can facilitate error mitigation and robust exception handling, propelling them toward a more efficient and resilient future.

Optimizing Bottleneck Performance and Scheduling

To enhance productivity, it is essential to address and optimize the performance of the bottlenecks within a process. A pragmatic approach involves strategic workload balancing, effective prioritization, and precise scheduling. For instance, the trajectory of a team that set clear performance targets for its application shows the importance of fine-tuning to achieve desired results. Their effort to improve app performance by year-end provides valuable insight into how these techniques are applied. Similarly, ScyllaDB Summit presentations have highlighted the need for such optimizations, as seen in the implementation of a metastore that reduced query times significantly.
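
Workload balancing can be illustrated with a simple greedy heuristic: assign the longest remaining task to whichever worker currently has the least work. This is one well-known approach (longest processing time first), not the method used by any team mentioned above, and the task names and durations are hypothetical.

```python
import heapq


def balance_workload(task_durations, worker_count):
    """Greedily assign tasks, longest first, to the least-loaded worker.

    Returns (assignments, makespan), where assignments[i] lists the tasks
    for worker i and makespan is the load of the busiest worker.
    """
    if worker_count < 1:
        raise ValueError("worker_count must be at least 1")
    heap = [(0, worker) for worker in range(worker_count)]  # (current load, worker index)
    heapq.heapify(heap)
    assignments = [[] for _ in range(worker_count)]
    ordered = sorted(task_durations.items(), key=lambda item: item[1], reverse=True)
    for task, duration in ordered:
        load, worker = heapq.heappop(heap)  # least-loaded worker so far
        assignments[worker].append(task)
        heapq.heappush(heap, (load + duration, worker))
    makespan = max(load for load, _ in heap)
    return assignments, makespan


if __name__ == "__main__":
    # Hypothetical task durations in hours.
    tasks = {"a": 8, "b": 7, "c": 6, "d": 5, "e": 4}
    print(balance_workload(tasks, 2))
```

The heuristic is fast and usually close to optimal, but not always optimal: for the sample tasks it yields a busiest-worker load of 17 hours, while a split of 8+7 and 6+5+4 would achieve 15.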

In deep learning, specifically with Transformer-based models, bottleneck adapter tuning is an efficient fine-tuning method. Here 'bottleneck' refers to small adapter layers with a narrow hidden dimension that are inserted into the model; only these layers are trained, while the original weights stay frozen. As models grow in size and complexity, this approach makes fine-tuning large models more manageable and paves the way for better performance.

Cloud services further underline the role of optimization. The cloud is a holistic platform; without comprehensive services its functionality is compromised, much like a LEGO set with missing pieces. Optimizing these services is not just about improving individual components but about ensuring the entire setup operates seamlessly.

This continuous pursuit of performance rests on the recognition that thorough performance evaluation is critical. It not only improves current systems but also sharpens the developer's intuition, leading to better systems in the future. By integrating these methods and insights into our procedures, we can overcome bottlenecks effectively, make the best use of our resources, and minimize delays.
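
To give a sense of why adapters are cheap to train, the sketch below counts the trainable parameters of a single adapter, assuming the common design of a down-projection followed by an up-projection, each with a bias term. The sizes 768 and 64 are illustrative assumptions rather than values from any specific model discussed here.

```python
def adapter_parameter_count(hidden_size: int, bottleneck_size: int) -> int:
    """Trainable parameters in one adapter: down-projection plus up-projection, with biases."""
    down_projection = hidden_size * bottleneck_size + bottleneck_size
    up_projection = bottleneck_size * hidden_size + hidden_size
    return down_projection + up_projection


def dense_layer_parameter_count(hidden_size: int) -> int:
    """Parameters in a full hidden_size x hidden_size dense layer with bias, for comparison."""
    return hidden_size * hidden_size + hidden_size


if __name__ == "__main__":
    # Assumed sizes for illustration only.
    print(adapter_parameter_count(768, 64))
    print(dense_layer_parameter_count(768))
```

Under these assumptions the adapter has roughly a sixth of the parameters of a single full-width dense layer, and the narrower the bottleneck, the smaller that share becomes.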

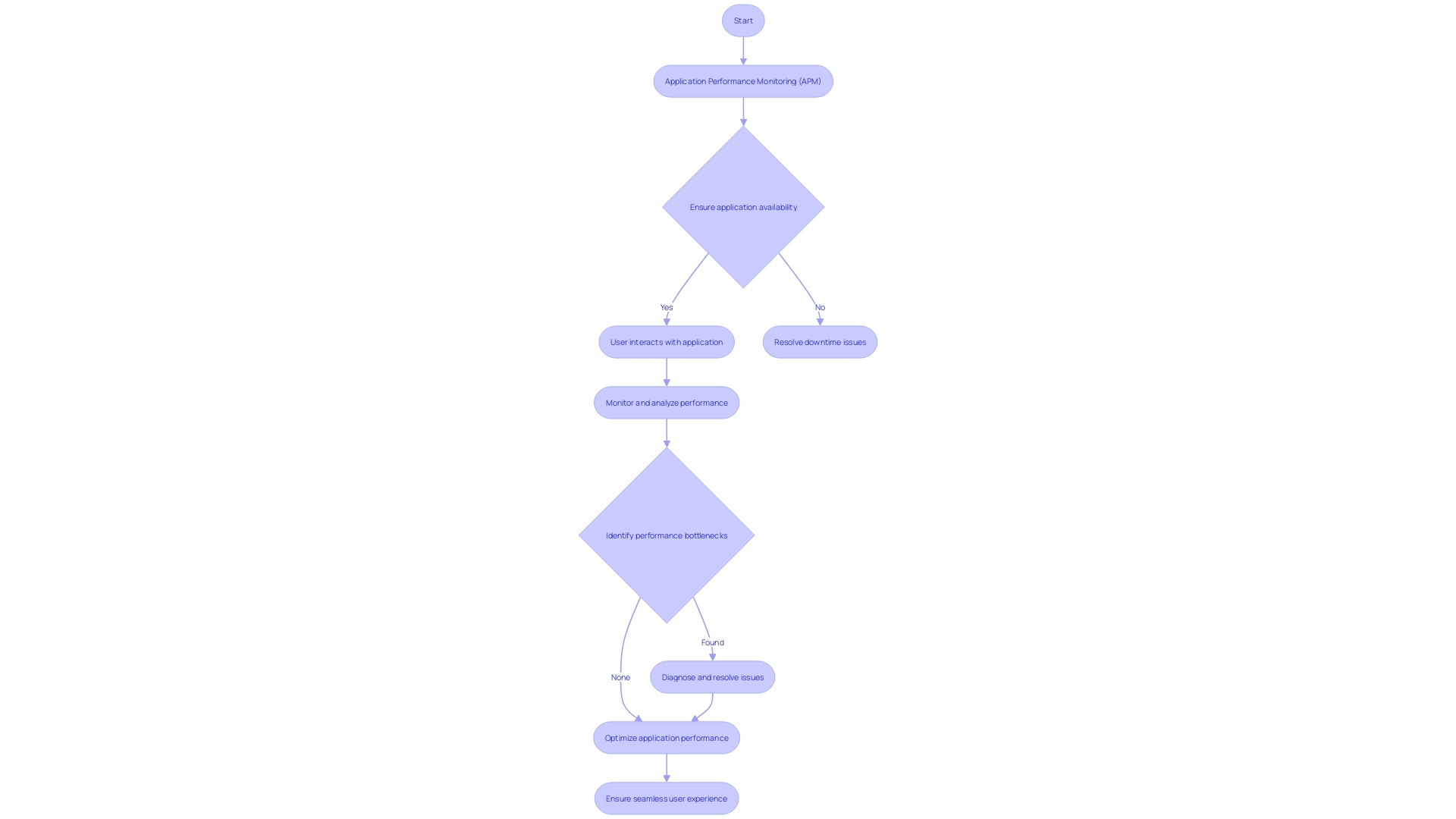

Minimizing Downtime and Managing Production Schedules

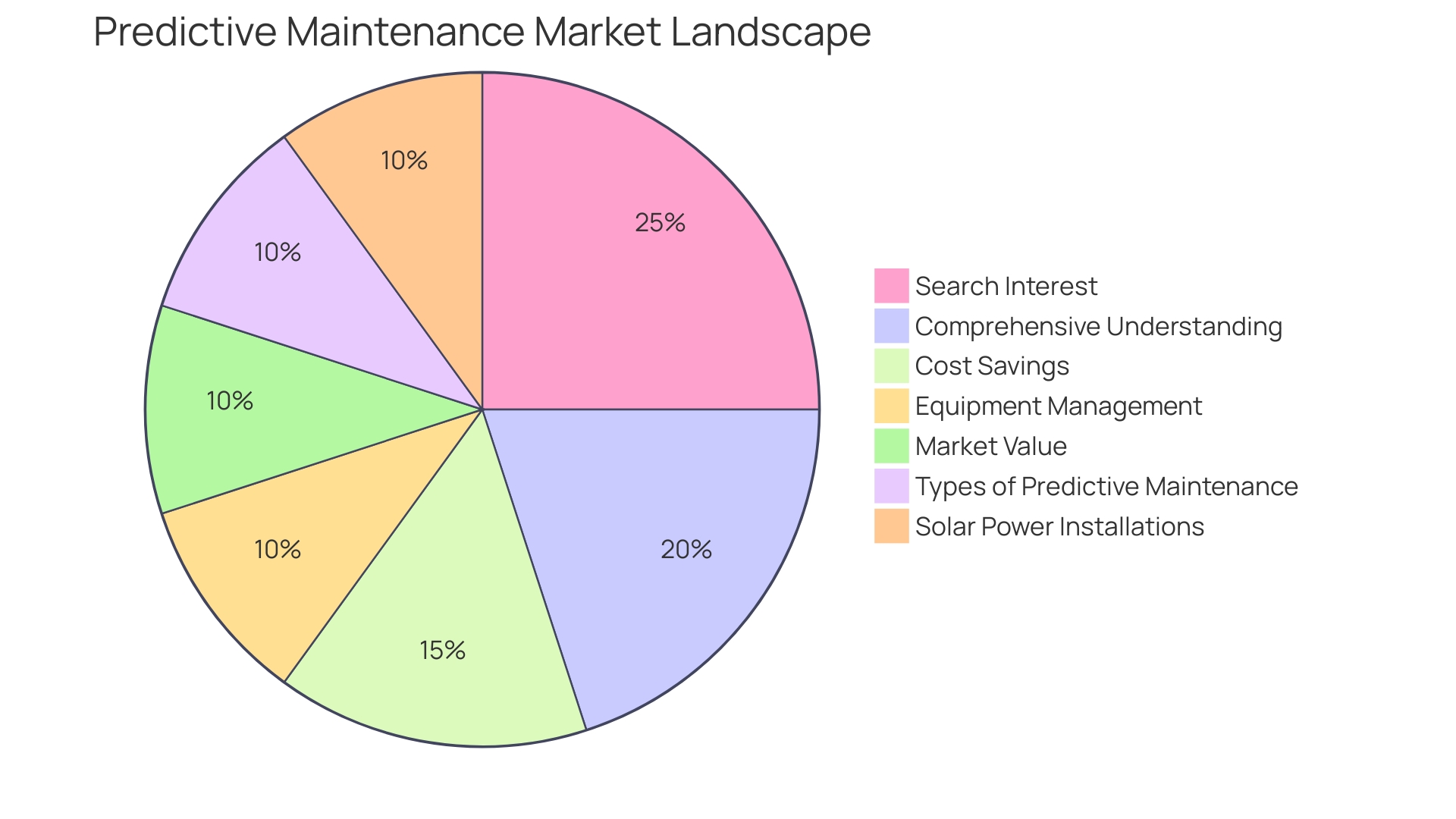

To keep production humming along and ensure deadlines are met, it's essential to have a solid plan in place for minimizing downtime. Strategies like preventative maintenance, which involves routine checks and servicing of equipment to prevent sudden failures, and predictive maintenance, which uses analysis and sensors to predict when a machine might fail, are key to avoiding unexpected disruptions. In addition, intelligent scheduling can ensure that maintenance is performed during off-peak hours, thereby reducing the impact on production schedules.
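
Scheduling maintenance during off-peak hours can be framed as finding the contiguous window with the lowest expected load. The sketch below assumes an hourly load forecast is already available and, for simplicity, does not consider windows that wrap past the end of the list; the numbers are made up.

```python
def best_maintenance_window(hourly_load, duration_hours):
    """Return (start_index, total_load) of the quietest contiguous window."""
    if not 1 <= duration_hours <= len(hourly_load):
        raise ValueError("duration_hours must fit within the forecast")
    window_total = sum(hourly_load[:duration_hours])
    best_start, best_total = 0, window_total
    for start in range(1, len(hourly_load) - duration_hours + 1):
        # Slide the window: add the hour entering it, drop the hour leaving it.
        window_total += hourly_load[start + duration_hours - 1] - hourly_load[start - 1]
        if window_total < best_total:
            best_start, best_total = start, window_total
    return best_start, best_total


if __name__ == "__main__":
    # Hypothetical load forecast for eight consecutive hours.
    forecast = [5, 3, 2, 1, 1, 2, 6, 9]
    print(best_maintenance_window(forecast, 2))
```

For the sample forecast, a two-hour job is best started at index 3, where the combined load is lowest.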

Consider the case of Lex Machina, which faced challenges managing its Postgres database, resulting in silent failures and substantial time spent on operations scripting. By exploring new database management solutions, the company aimed to minimize disruptions and maintain efficiency. Similarly, IFCO's partnership with Rackspace Technology gave it the expertise needed to migrate to the cloud effectively, drawing on Rackspace's experience to improve its operations. Furthermore, Chess.com's commitment to a stable IT infrastructure supports its mission to serve the global chess community, ensuring that millions of games can be played daily without interruption.

Recent statistics underscore the importance of such strategies. In the UK, business leaders have acknowledged a heightened dependence on connectivity, with internet downtime leading to significant productivity losses and financial impacts. A global survey of plant maintenance decision-makers revealed that 92% saw an increase in uptime due to effective maintenance, with 38% noting an improvement of at least 25%. This not only enhances a company's reputation but also helps meet contractual obligations and secure repeat business. In the US alone, a single day without internet access could result in an economic loss of approximately $11 billion, illustrating the critical need for robust maintenance and scheduling strategies to mitigate such risks.

As the adage goes, 'An ounce of prevention is worth a pound of cure.' Implementing these techniques can make all the difference in maintaining continuous production and delivering on promises to customers.

Implementing Solutions and Monitoring Effectiveness

To navigate the complexities of bottleneck detection and resolution, it's essential to take a strategic approach to monitoring and optimization. By breaking the process down sector by sector and focusing on specific performance metrics, organizations can assess how effective their implemented solutions are. For example, in public health, real-time data feeds from different sources have been crucial in monitoring community health and preventing disease outbreaks. Similarly, adopting a data-driven mindset is critical in other sectors to improve decision-making.
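
A basic way to check whether a fix worked is to compare the same metric before and after the change. The sketch below reports the relative change in the mean; the metric (response time in milliseconds) and the sample values are assumptions for illustration, and a production analysis would also account for noise and sample size.

```python
from statistics import mean


def relative_change(before, after):
    """Relative change in the mean of a metric; negative means the metric went down."""
    baseline = mean(before)
    if baseline == 0:
        raise ValueError("baseline mean must not be zero")
    return (mean(after) - baseline) / baseline


if __name__ == "__main__":
    # Hypothetical response times in milliseconds before and after a fix.
    before_fix = [100, 120, 110]
    after_fix = [80, 90, 85]
    print(f"{relative_change(before_fix, after_fix):+.1%}")
```

Tracking this number over successive releases turns a one-off fix into an ongoing measurement of whether the bottleneck has actually moved.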

Leveraging case studies, such as the ones that address drought response and preparedness in the United States, organizations can better understand the granularity required in subsectors for effective monitoring and adjustments. Furthermore, the thoughtful application of optimization algorithms, as demonstrated in Azure's diverse server environments, can help navigate the trade-offs between computational resources and performance.

Recent developments highlight the importance of effective and secure data sharing across levels of government to modernize services and support communities. This aligns with findings that a user's home wireless network is frequently the throughput bottleneck, a result that underscores the need for continuous end-to-end performance assessment. Together, these insights guide professionals in establishing strong monitoring mechanisms, tracking progress through meaningful metrics, and making informed decisions in the ongoing pursuit of better performance.

Continuous Improvement and Regular Bottleneck Analysis

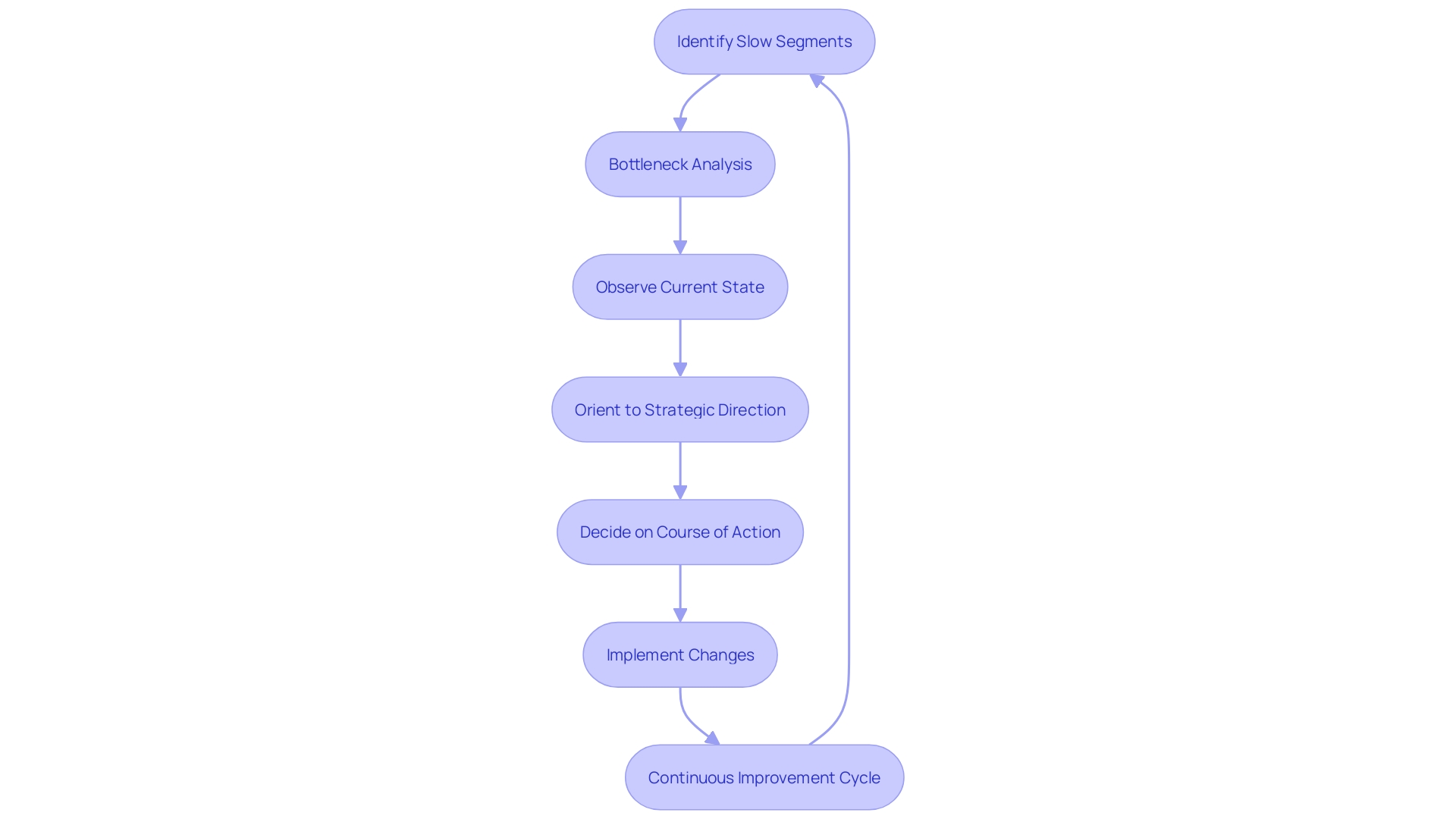

Understanding and resolving bottlenecks is vital for maintaining a high-performance system. Amdahl's Law reminds us that the overall gain from an improvement is limited by the part of the system that the improvement does not touch; much like in a relay race, speeding up one leg helps only so much while the slowest leg stays unchanged. Through continuous bottleneck analysis, teams can identify these slow segments and concentrate on improving them so that the entire operation runs more smoothly.

The OODA loop (Observe, Orient, Decide, Act), used by practitioners in security and risk management, underscores the importance of continuous improvement. It encourages teams to observe and analyze the current state, orient to the strategic direction, decide on the best course of action, and act to implement changes. This process is invaluable for evolving systems and adapting to new challenges, helping ensure that progress is both sustained and efficient.

Furthermore, performance evaluations, as industry professionals point out, offer valuable insight into system behavior and limitations. These evaluations not only shed light on internal mechanisms but also build developers' intuition, allowing them to create increasingly refined systems. They underline the need for frequent examination and the pursuit of continuous improvement.

To truly drive progress, it is essential to foster a culture that values ideation and prioritizes strategic outcomes. This means investing time in careful examination, design, and testing before fully committing to building new features. Adopting this mindset ensures that only the most valuable and impactful improvements are integrated into the product.

Statistics on developer productivity support the significance of continuous improvement. AI pair-programming tools like GitHub Copilot have demonstrated substantial productivity boosts across all developer levels. These tools reduce cognitive load and increase enjoyment, which can lead to better quality products and learning opportunities. This evidence reinforces the value of regular bottleneck analysis and the pursuit of a more efficient and productive development environment.

Conclusion

In conclusion, understanding and resolving bottlenecks is crucial for achieving maximum efficiency and productivity. By adopting a multifaceted approach, organizations can effectively identify and resolve bottlenecks, optimize performance, and manage production schedules.

To identify bottlenecks, strategies such as value stream mapping, process cycle time analysis, and root cause analysis can be employed. Effective tools, such as performance monitoring software and data collection and analysis tools, assist in bottleneck detection.

Resolving bottlenecks requires a multifaceted approach, including process optimization, resource allocation, workflow redesign, and automation integration. This ensures that resources are utilized efficiently and delays are minimized.

Optimizing bottleneck performance involves strategic workload balancing, effective prioritization, and precise scheduling. Preventative and predictive maintenance strategies help minimize downtime and disruptions.

Implementing solutions and monitoring their effectiveness is essential for continuous improvement. By tracking performance metrics and using optimization algorithms, organizations can enhance system performance.

Continuous improvement and regular bottleneck analysis are vital for maintaining high-performance systems. By constantly identifying and addressing bottlenecks, organizations can enhance efficiency and productivity.

In summary, understanding bottlenecks, adopting effective tools and techniques, and implementing strategies for resolution and optimization are key to streamlining operations, enhancing productivity, and achieving maximum efficiency.

Frequently Asked Questions

What is a bottleneck?

A bottleneck is a point in a process that restricts flow, leading to delays and reduced productivity. It can occur in various contexts, including manufacturing and digital infrastructures.

How can bottlenecks affect productivity?

Bottlenecks can significantly hinder productivity by causing slowdowns in processes. For example, in software development, inefficient workflows can lead to developer frustration and decreased job satisfaction.

What are common causes of bottlenecks?

Bottlenecks can arise from various issues, such as limited resources, poor system architecture, or complex communication challenges within teams. Specific examples include resource constraints in software development and maintenance complexities.

How can bottlenecks be identified?

Identifying bottlenecks involves analyzing performance metrics, value stream mapping, and conducting root cause analysis. Techniques such as the 5 Whys and Fishbone diagrams can help trace issues back to their origins.

What tools can help in identifying bottlenecks?

Tools such as performance monitoring software, data collection and analysis tools, and process visualization tools are essential for diagnosing bottlenecks. These tools help visualize workflows and pinpoint inefficiencies.

What are some common mistakes to avoid during bottleneck analysis?

Common mistakes include inadequate planning, allowing consumer lag to build up, and neglecting to use your software internally to identify issues. Ensuring thorough planning and adopting best practices can mitigate these pitfalls.

What strategies can be used to resolve bottlenecks?

Effective strategies for resolving bottlenecks include process optimization, resource allocation, workflow redesign, and integrating automation. Organizations can apply these strategies to drive efficiency and minimize delays.

How can organizations minimize downtime during production?

Minimizing downtime can be achieved through preventative and predictive maintenance, as well as intelligent scheduling of maintenance tasks during off-peak hours. These strategies help maintain operational efficiency.

What is the role of continuous improvement in managing bottlenecks?

Continuous improvement involves regularly analyzing and addressing bottlenecks to ensure high performance. Utilizing frameworks like OODA (Observe, Orient, Decide, Act) can help teams adapt and improve over time.

How do AI tools impact bottleneck management?

AI tools, such as GitHub Copilot, enhance productivity by reducing cognitive load and simplifying complex processes. They provide valuable support for developers, leading to more efficient workflows and improved output quality.