Introduction

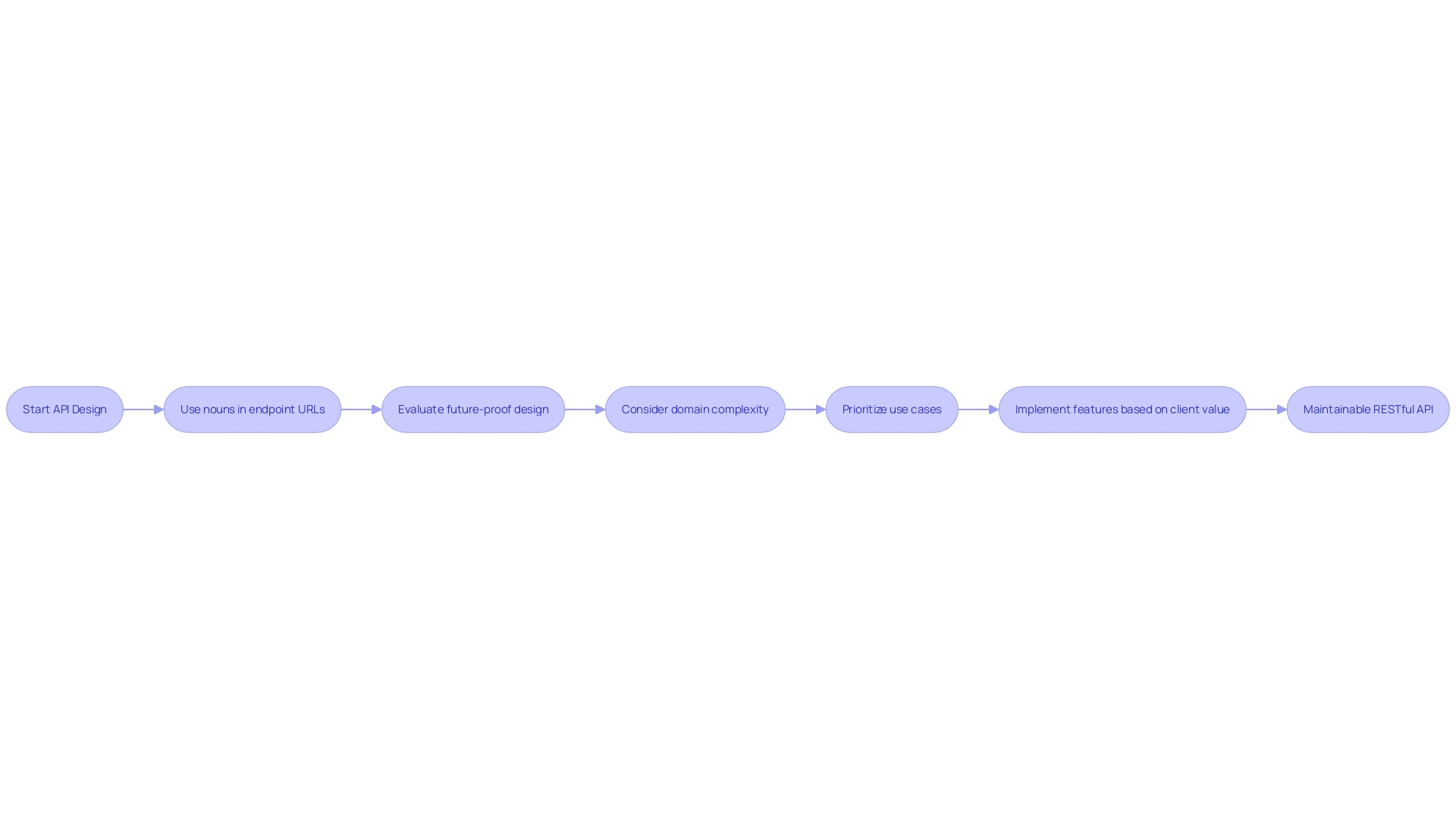

Crafting RESTful endpoints is a subtle art that demands a deep understanding of the principles of REST. Representational State Transfer, or REST, is an architectural style that ensures self-contained requests and resource-based interactions. In this article, we will explore the fundamentals of RESTful API development and the benefits of designing endpoints that adhere to REST principles.

We will delve into the use of HTTP methods as verbs and resources as nouns, making the API more user-friendly. Additionally, we will discuss the importance of understanding the domain and prioritizing specific functionalities that the API needs to support. By following these best practices, you can create robust, scalable, and user-friendly APIs that deliver outstanding user experiences.

Designing RESTful Endpoints

Representational State Transfer, or REST, is not merely a set of rules but an architectural style in which each request from a client to a server is self-contained and carries all the information needed to process it. This stateless approach, coupled with a uniform interface and resource-based interactions, forms the backbone of RESTful API development.

In the spirit of REST, your API should be designed to handle data operations clearly and intuitively. A RESTful endpoint uses HTTP methods as the verbs, such as GET, POST, PUT, and DELETE, to perform actions on resources, which are the nouns, like /users or /products. For instance, sending a GET request to /articles retrieves a list of articles, whereas a POST request to the same endpoint might add a new article.

This 'verb + object' structure is straightforward and mirrors the way we naturally communicate, making the API more user-friendly and easier to understand.
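
To make the verb-plus-noun pairing concrete, here is a minimal sketch of a route table in plain JavaScript. It assumes a hypothetical in-memory `articles` array and a hypothetical `handle` function; it is not tied to any framework, and for simplicity it answers every unknown method and path pair with 404.

```javascript
// Hypothetical in-memory data; a real API would use a database.
const articles = [{ id: 1, title: "Hello REST" }];

// Each key pairs an HTTP method (the verb) with a resource path (the noun).
const routes = {
  "GET /articles": () => ({ status: 200, body: articles }),
  "POST /articles": (payload) => {
    // The server assigns the id, so it is written after the client's fields.
    const article = { ...payload, id: articles.length + 1 };
    articles.push(article);
    return { status: 201, body: article };
  },
};

// Look up the handler for a method and path; unknown pairs yield 404.
function handle(method, path, payload) {
  const handler = routes[`${method} ${path}`];
  return handler ? handler(payload) : { status: 404, body: { error: "Not Found" } };
}
```

Calling `handle("GET", "/articles")` returns the list, while `handle("POST", "/articles", { title: "Second" })` appends a new article to the same resource.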

The journey of learning to design RESTful endpoints begins with a strong foundation in the basics of web services and a good grasp of the language in which you're working—in this case, JavaScript. With just a browser and a code editor at your disposal, you can start making your first API requests and handling the responses.

It's essential to recognize that what we once considered 'best practices' can become outdated as technology evolves. The use of 'X-' prefixes for custom parameter names, such as non-standard HTTP headers, was once common but was deprecated by RFC 6648 and is no longer recommended. It is a reminder that standards are not static and that we must be willing to adapt and refine our approaches as we gain new insights and understanding.

As we explore the domain in which our API will operate, we must identify and prioritize the specific functionalities it needs to support. This could range from facilitating communication between software components to collecting and retrieving data for analytics. Each use case should be carefully analyzed for its relevance and impact, ensuring that the API serves both the clients and developers effectively.

To sum up, RESTful APIs represent a standardized way for applications to interact and share data. As we delve into API design, we will focus on the principles of REST, the construction of RESTful endpoints, and the practical considerations that will help us create APIs that are not only easy to use but also robust, scalable, and capable of delivering outstanding user experiences.

Using Nouns Instead of Verbs in Endpoint URLs

Adhering to best practices in RESTful API design enhances the clarity and longevity of an API. Rather than using verbs in endpoint URLs, which can lead to narrow, action-specific paths, it's recommended to use nouns that represent resources. Consider the difference between /api/createUser and /api/users.

The latter is not just more intuitive but also aligns with the principle of treating an API as an interface, a contract between systems that should remain consistent and decoupled from internal logic changes.

The concept of using nouns over verbs is underlined by the need for an API to be future-proof. Software evolves, and what's seen as a best practice today might not hold tomorrow. For instance, while one might consider using POST /reservations/{id}/cancel to reflect a reservation's internal state, this approach introduces specificity that can limit the API's adaptability.

Instead, employing methods like DELETE /reservations/{id} for cancelling reservations can offer a more standardized and less ambiguous interface.
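
One way to keep an eye on this guideline is a small check that flags path segments beginning with an action word. The sketch below is a heuristic under stated assumptions: the word list and the `findVerbSegments` name are hypothetical, and a flagged segment is a prompt for review rather than proof of a design error.

```javascript
// Hypothetical, deliberately short list of action words. A segment is flagged
// when it is exactly such a word or starts with one followed by an uppercase
// letter, "_" or "-" (so "createUser" matches but "addresses" does not).
const ACTION_WORD = /^(create|get|update|delete|cancel|add|remove)(?=[A-Z_-]|$)/;

// Return the path segments that look like verbs.
function findVerbSegments(path) {
  return path.split("/").filter((segment) => ACTION_WORD.test(segment));
}
```

With this sketch, `/api/createUser` and `/reservations/{id}/cancel` are flagged, while `/api/users` and `/reservations/{id}` pass.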

Furthermore, as the domain of software development is complex and varies across different contexts, what may seem like a best practice in one scenario could be problematic in another. For instance, the 'Account' domain is multifaceted, and its representation in an API will differ based on departmental or lifecycle considerations. This complexity suggests that standards should be critically evaluated rather than blindly followed.

Ultimately, when designing RESTful APIs, it's crucial to deeply understand the domain and prioritize use cases that will most impact both clients and developers. This strategic approach ensures the API serves as a robust, clear, and maintainable interface between systems, simplifying integration and promoting long-term productivity.

Following a Hierarchical Structure for Resource Representation

When designing RESTful APIs, the organization of resources plays a pivotal role in creating a system that's intuitive and scalable. As we peel back the layers of effective API architecture, we find that a hierarchical structure is not merely a 'best practice' but a reflection of a deeper design philosophy. Drawing inspiration from Hexagonal Architecture, we aim to build APIs that are both robust and maintainable, by clearly separating the core business logic from external interfaces.

Consider an API for a blogging platform. Adopting a hierarchical representation, we could structure endpoints such as /api/blogs/{blogId}/posts to access posts within a specific blog. This isn't just about neatness—it echoes the principles of Hexagonal Architecture, where the 'ports' represent various entry and exit points for data (in this case, blog posts), and the 'adapters' handle the communication with these ports.
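
As an illustration of how a hierarchical path such as /api/blogs/{blogId}/posts can be resolved, the following sketch matches a concrete path against a template and extracts the parameters. The `matchPath` function is a hypothetical helper written for this article, not part of any library.

```javascript
// Match a concrete path against a template such as "/api/blogs/{blogId}/posts".
// Returns the extracted parameters, or null when the path does not fit.
function matchPath(template, path) {
  const templateParts = template.split("/");
  const pathParts = path.split("/");
  if (templateParts.length !== pathParts.length) return null;

  const params = {};
  for (let i = 0; i < templateParts.length; i++) {
    const part = templateParts[i];
    if (part.startsWith("{") && part.endsWith("}")) {
      if (pathParts[i] === "") return null; // a parameter must not be empty
      params[part.slice(1, -1)] = pathParts[i];
    } else if (part !== pathParts[i]) {
      return null; // a literal segment differs
    }
  }
  return params;
}

matchPath("/api/blogs/{blogId}/posts", "/api/blogs/42/posts"); // { blogId: "42" }
```

The parent identifier arrives as part of the path, so the handler for posts always knows which blog it is operating on.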

The benefits of this approach are manifold. Apart from mirroring the modular nature of our application's architecture, it allows for seamless testing and maintenance. It adapts well to changes, ensuring our API can evolve without cascading failures.

Moreover, it aligns with contemporary shifts in the industry, where enterprises are strengthening their platforms and open-source resources are being leveraged to educate on modern APIs.

Yet, we must be cautious. In the words of a skeptic of rigid standards, 'best practices' can sometimes outlive their utility. As we forge our path in the API landscape, it's vital to question and validate the relevance of these practices.

After all, the essence of REST isn't in slavishly adhering to conventions but in the thoughtful orchestration of operations that reflect the unique behaviors and structures of our software products.

By deeply exploring our domain, we ensure our API supports the functionalities vital to our use cases, prioritized by their significance. This is not just a matter of structuring data—it's about facilitating meaningful interactions between software components, embodying the declarative nature of our business logic. In doing so, we unlock the full potential of RESTful endpoints, enabling them to serve as conduits for the rich and dynamic exchange of information across the digital ecosystem.

Utilizing HTTP Methods for CRUD Operations

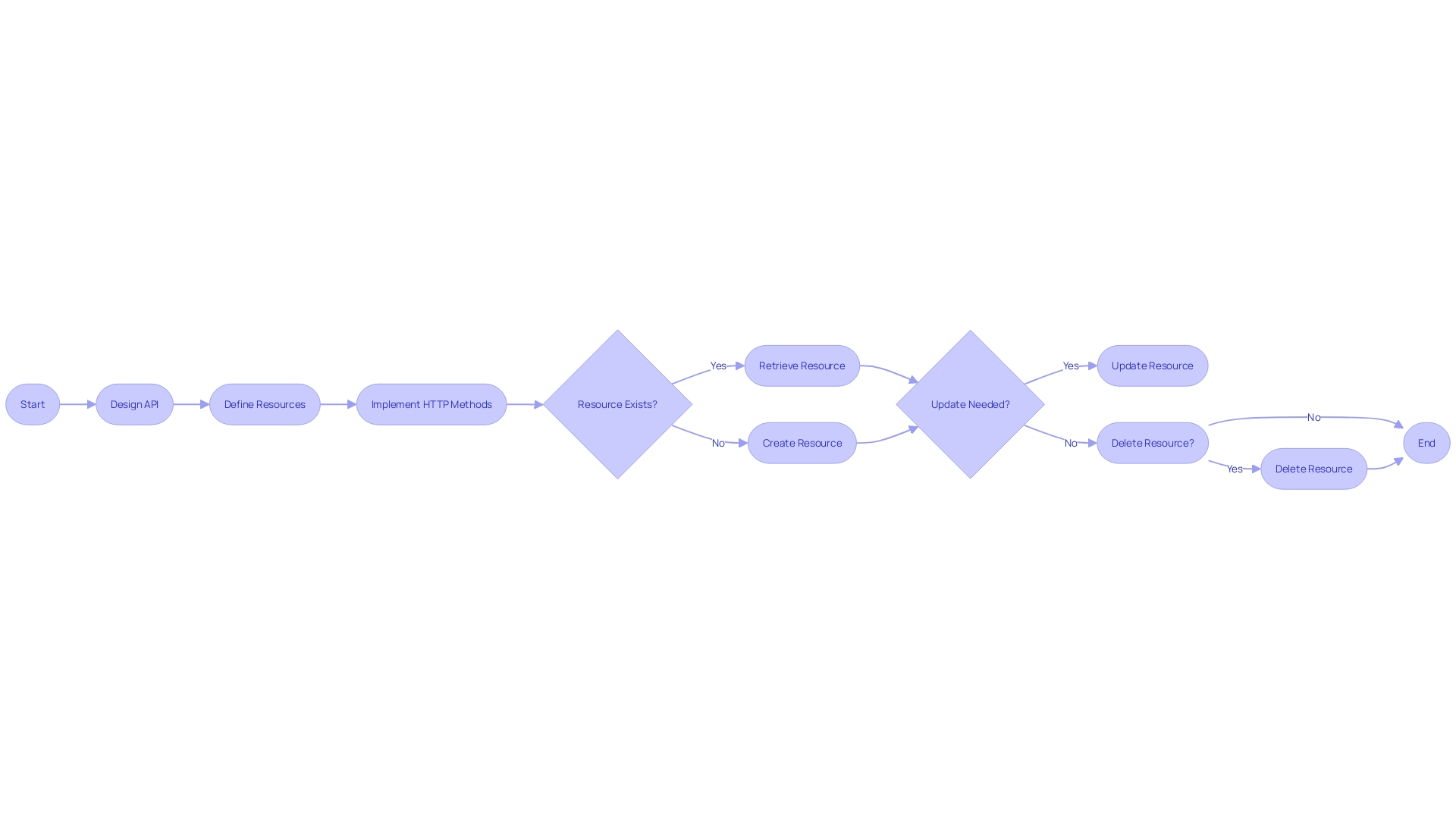

RESTful APIs, that is, application programming interfaces that follow the Representational State Transfer (REST) style, are a cornerstone of web service design, enabling standardized communication between diverse software applications. At their heart lies the use of HTTP methods to interact with web resources. The POST method is typically employed to create new resources, whereas the GET method is designated for retrieving existing data.

Furthermore, the PUT method is used to update a resource and the DELETE method to remove it.
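
The mapping between the four methods and the CRUD operations can be sketched with an in-memory store. Everything here is an assumption made for illustration: the `users` map, the `handleUsers` function, and the choice of status codes (201 for creation, 204 for a successful delete) reflect common practice rather than a requirement.

```javascript
// Hypothetical in-memory store standing in for a database table of users.
const users = new Map();
let nextId = 1;

// Map each HTTP method to the CRUD operation it conventionally performs.
function handleUsers(method, id, payload) {
  switch (method) {
    case "POST": { // Create
      const user = { ...payload, id: nextId++ };
      users.set(user.id, user);
      return { status: 201, body: user };
    }
    case "GET": // Read
      return users.has(id)
        ? { status: 200, body: users.get(id) }
        : { status: 404, body: null };
    case "PUT": // Update (full replacement of the stored representation)
      if (!users.has(id)) return { status: 404, body: null };
      users.set(id, { ...payload, id });
      return { status: 200, body: users.get(id) };
    case "DELETE": // Delete
      return users.delete(id)
        ? { status: 204, body: null }
        : { status: 404, body: null };
    default: // Any other method is not supported by this sketch
      return { status: 405, body: null };
  }
}
```

A typical sequence would be `handleUsers("POST", undefined, { name: "Ada" })` followed by `handleUsers("GET", 1)`, `handleUsers("PUT", 1, { name: "Grace" })`, and finally `handleUsers("DELETE", 1)`.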

It's crucial to understand that an API serves as an interface between two systems, acting as a contract to encapsulate logic and allow decoupling. This perspective is essential because it underpins the longevity of the software we develop, which may surpass our tenure and the initial requirements. For instance, consider the debate between using DELETE /reservations/{id} or POST /reservations/{id}/cancel for handling reservation states.

The crux of this debate centers around the permanence of the deletion and the evolution of internal resource states.

In the context of API design, it's vital to prioritize use cases based on their importance and impact on both clients and developers. This deep dive into the domain ensures that the API supports specific functionalities effectively. The distinction between REST and RESTful endpoints is subtle yet significant; while REST refers to the overarching architectural style, RESTful endpoints specifically pertain to the points of interaction in web applications that adhere to REST principles.

As we navigate the intricacies of RESTful API development, we must acknowledge that standards and best practices are not static; they evolve. Challenging the status quo is necessary, as eloquently stated by an industry expert, "Just because everyone is following along, doesn’t mean that it necessarily even makes sense anymore or should be a 'best practice'." This quote underscores the importance of critical thinking in software development.

In conclusion, RESTful APIs are not just about following a set of rules; they represent a dynamic approach to building web services that cater to the ever-changing landscape of software development, ensuring that applications communicate effectively and stand the test of time.

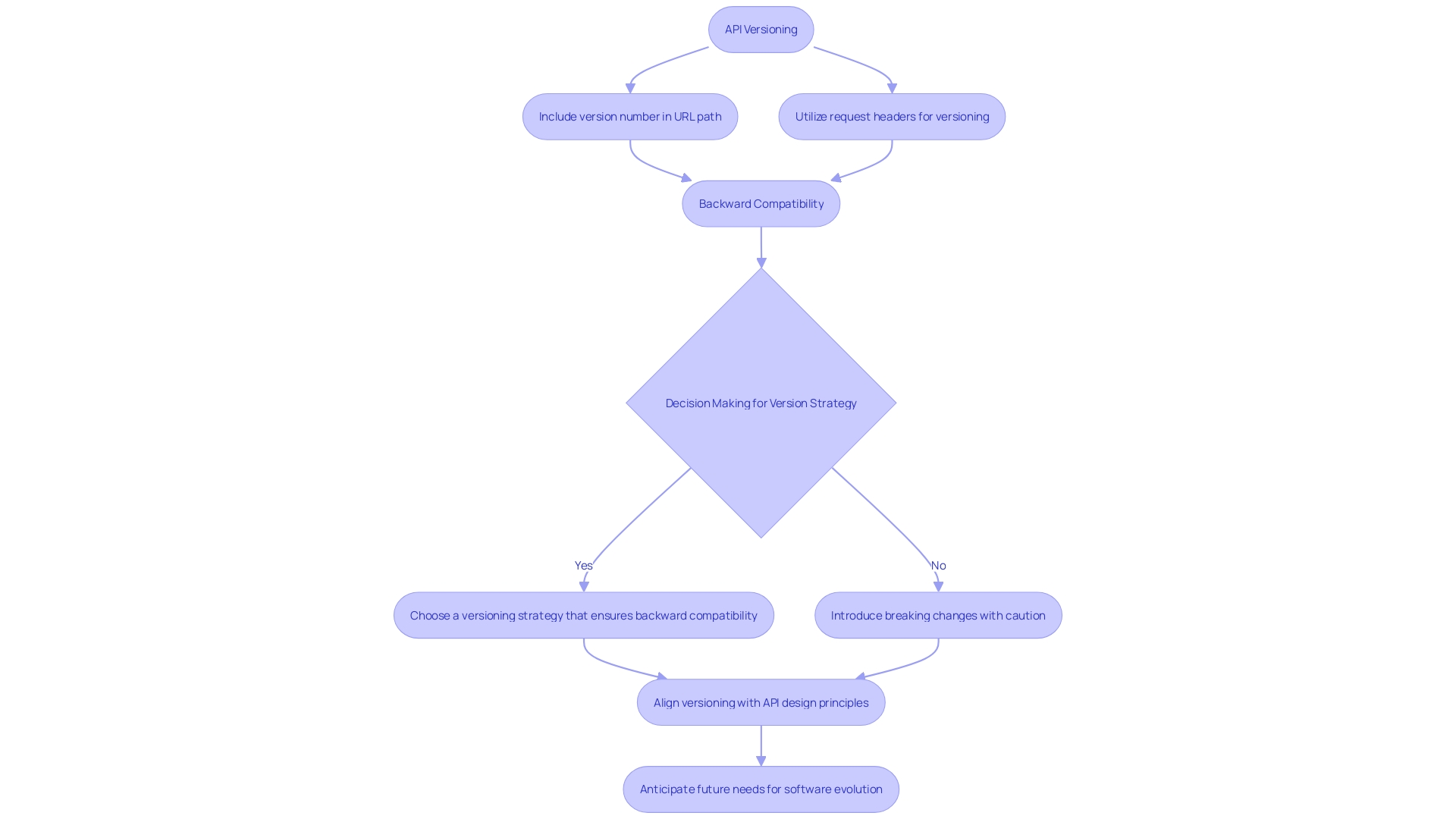

Versioning Your APIs

Understanding the evolution of software is crucial, particularly when it involves API development where changes can impact the system's interoperability. As software adapts to new business needs or regulatory changes, versioning becomes an indispensable tool for maintaining compatibility and ensuring that any updates do not disrupt the functionality of existing client applications. Choosing a flexible and forward-compatible versioning strategy is vital.

One popular method of API versioning is to include the version number in the URL path. This approach is straightforward and easily recognizable, making it a common choice among developers. Another method involves utilizing request headers to specify the API version, which can keep the URI clean and support multiple versions simultaneously without changing the URI structure.
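
Both strategies can be combined in a single lookup, as the sketch below suggests. It assumes a path prefix of the form `/v2/...`, a hypothetical `Accept-Version` header (the name is an assumption, not a standard), and header names that have already been lowercased, as Node's built-in HTTP server does.

```javascript
// Resolve the requested API version from the URL path ("/v2/users") or,
// failing that, from a header. "accept-version" is a hypothetical header name.
function resolveVersion(path, headers = {}, defaultVersion = 1) {
  const fromPath = /^\/v(\d+)(\/|$)/.exec(path);
  if (fromPath) return Number(fromPath[1]);

  const fromHeader = headers["accept-version"];
  if (typeof fromHeader === "string" && /^\d+$/.test(fromHeader)) {
    return Number(fromHeader);
  }

  // Neither source names a version: fall back to the default.
  return defaultVersion;
}
```

Here the path takes precedence over the header; whether that ordering is right depends on the API and should be documented either way.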

A compelling analogy compares the use of libraries, frameworks, and tools in software development to running a business on credit; just as borrowed capital can help launch or grow a business, borrowed code in the form of dependencies can accelerate development. However, the quality of these 'investments' can greatly affect the outcome, underscoring the importance of careful versioning.

For instance, consider how adding parameters to an API can be as simple as appending an optional property to the interface. This non-breaking change allows older clients to operate without modification while permitting newer clients to leverage the additional functionality. It's a delicate balance to ensure that both backward compatibility and future enhancements are addressed.
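
A small sketch of such a non-breaking change: the hypothetical `searchArticles` function originally accepted only `query`, and a later release adds an optional `limit` with a default. The function name, data, and default value are assumptions chosen for illustration.

```javascript
// Older clients call searchArticles({ query }); the optional "limit"
// property is a later, hypothetical addition with a default value.
function searchArticles({ query, limit = 10 }) {
  const all = ["REST basics", "REST versioning", "GraphQL intro"];
  return all
    .filter((title) => title.toLowerCase().includes(query.toLowerCase()))
    .slice(0, limit);
}

searchArticles({ query: "rest" });           // older client, unchanged call
searchArticles({ query: "rest", limit: 1 }); // newer client uses the new option
```

Because the default reproduces the previous behavior, existing callers keep working without modification.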

It's essential to recognize that not all software changes are backward-compatible. Some alterations, like removing an endpoint, constitute breaking changes that necessitate careful handling through versioning. As software and APIs are economic artifacts with inherent value, investing resources in their development demands a strategic approach to maximize return on investment, including how changes are managed through versioning.

In conclusion, the method of versioning chosen should align with the overall API design principles and anticipate future needs, allowing for seamless evolution of the software while minimizing disruption for users. This strategy is not just a technical necessity but also a business imperative to ensure the longevity and success of the API ecosystem.

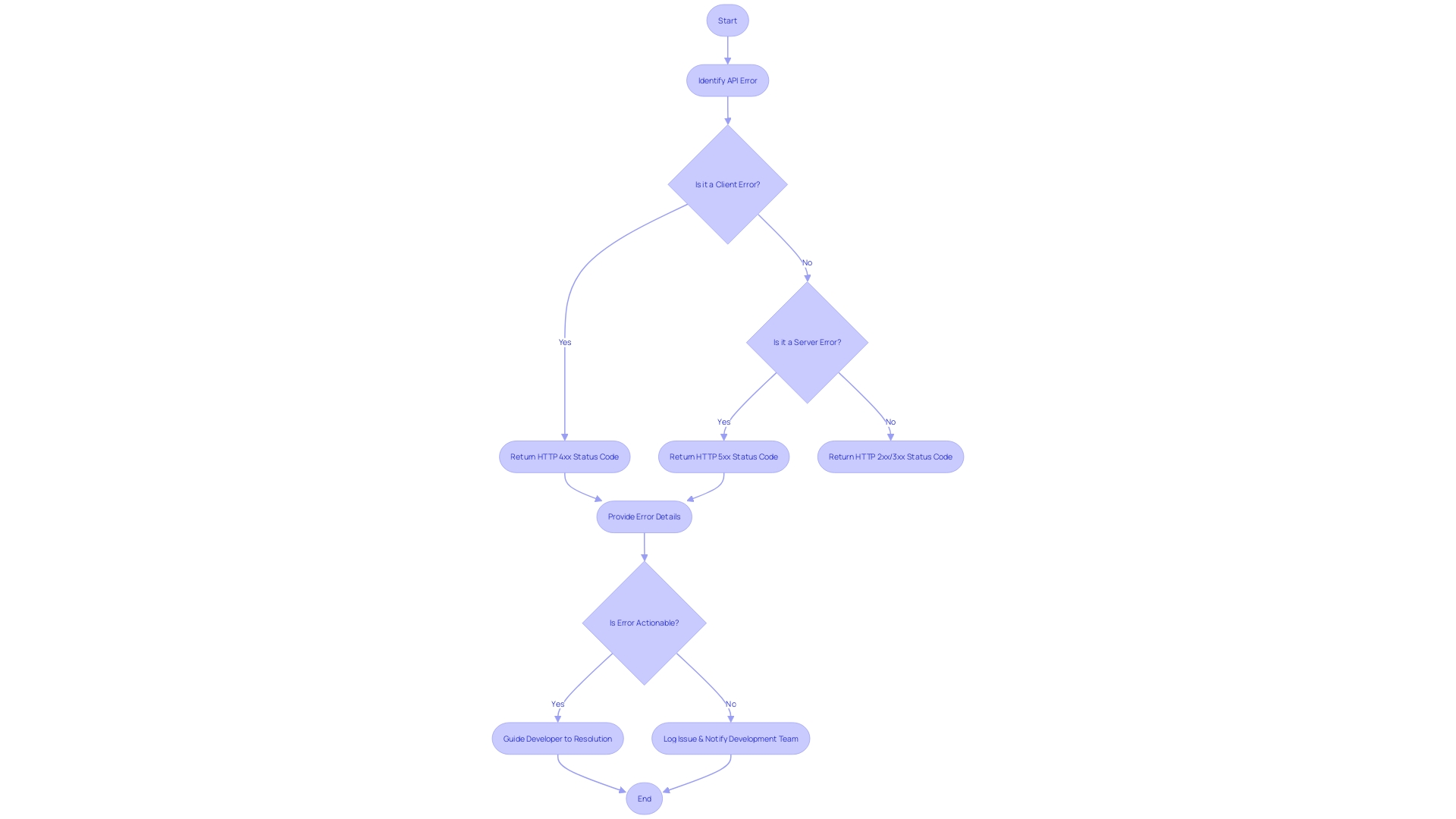

Response Formats and Status Codes

Crafting a successful API involves more than just handling requests; it also means providing meaningful feedback to users, especially when things go wrong. HTTP status codes are integral to this communication, categorizing responses into groups like informational, success, redirection, client error, and server error, with familiar codes such as 404 for 'Not Found' or 500 for 'Internal Server Error'. Each code conveys a specific message to the developer, guiding them towards understanding and resolving issues.

The structure and clarity of error messages are equally important. A well-formulated error response can turn a frustrating debugging session into a straightforward fix. As the digital landscape evolved, the role of APIs expanded from simple data retrieval to complex interactions between decoupled frontend and backend systems.

The separation highlighted the need for standardized error responses that are both informative and actionable, ensuring developers can easily navigate and remedy problems.

The modern web is inherently unpredictable, and APIs, as its building blocks, are subject to this uncertainty. An error message must, therefore, be clear and resolvable, providing enough information for a developer to attempt a fix or, at the very least, understand that no action is needed. This approach to API design not only aids in error handling but also enhances the interoperability between different systems, smoothing out the integration process in our ever-growing web of APIs.

Returning Responses in JSON Format

RESTful APIs have revolutionized the way we interact with web services, and central to their operation is the format in which they communicate: JSON. With its lightweight nature and ease of use, JSON has become the cornerstone for exchanging data across diverse platforms. As the universally preferred response format, it streamlines the process of parsing and manipulating data on the client-side.
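
As a brief illustration, the sketch below serializes a response body as JSON, labels it with the `application/json` media type, and parses it back on the client side. The `jsonResponse` helper and the response shape are assumptions, not a framework API.

```javascript
// Build a JSON response: serialize the body and declare its media type.
function jsonResponse(status, data) {
  return {
    status,
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(data),
  };
}

// A client recovers the original structure with JSON.parse.
const response = jsonResponse(200, { id: 7, tags: ["api", "rest"] });
const parsed = JSON.parse(response.body);
```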

For example, at GitHub, the transformation of their documentation to a dynamic application at docs.github.com highlighted the move towards data-driven content, heavily relying on JSON for content delivery and internal communications. This move to JSON not only enhanced developer experiences but also improved the efficiency of the platform's operations.

Moreover, because the REST architectural style relies on HTTP methods for web interactions, JSON's role becomes more pronounced. It gives developers a simple, uniform way to represent the data involved in create, retrieve, update, and delete operations, contributing to robust and scalable web applications.

Statistics underscore the ubiquity of APIs, with countless applications and services relying on them daily. APIs act as intermediaries, allowing for seamless interactions between software components. For instance, a weather app on your phone retrieves data from an external source, facilitated by APIs that define the interaction protocols.

The significance of JSON in RESTful APIs is further evidenced by the data-access patterns common among Node.js developers, for example in the Mongoose library. These patterns require features like complex document manipulation, which JSON supports well through its flexible and intuitive structure.

Ultimately, the clear responses that APIs provide, analogous to a waiter delivering an order in a restaurant, are made possible by the structured and reliable format of JSON. It ensures that the data delivered is not only accessible but also actionable, paving the way for more efficient and user-friendly applications.

Using Appropriate HTTP Status Codes

HTTP status codes are integral to the web, signaling the outcome of a client's request to a server. These codes are more than mere numbers; they are a standardized language ensuring that clients and servers communicate effectively. For instance, the '200 OK' status code is a universal thumbs-up, indicating that everything went smoothly and the request was successful.

Conversely, the '404 Not Found' speaks of a hiccup, informing that the requested resource is nowhere to be found.

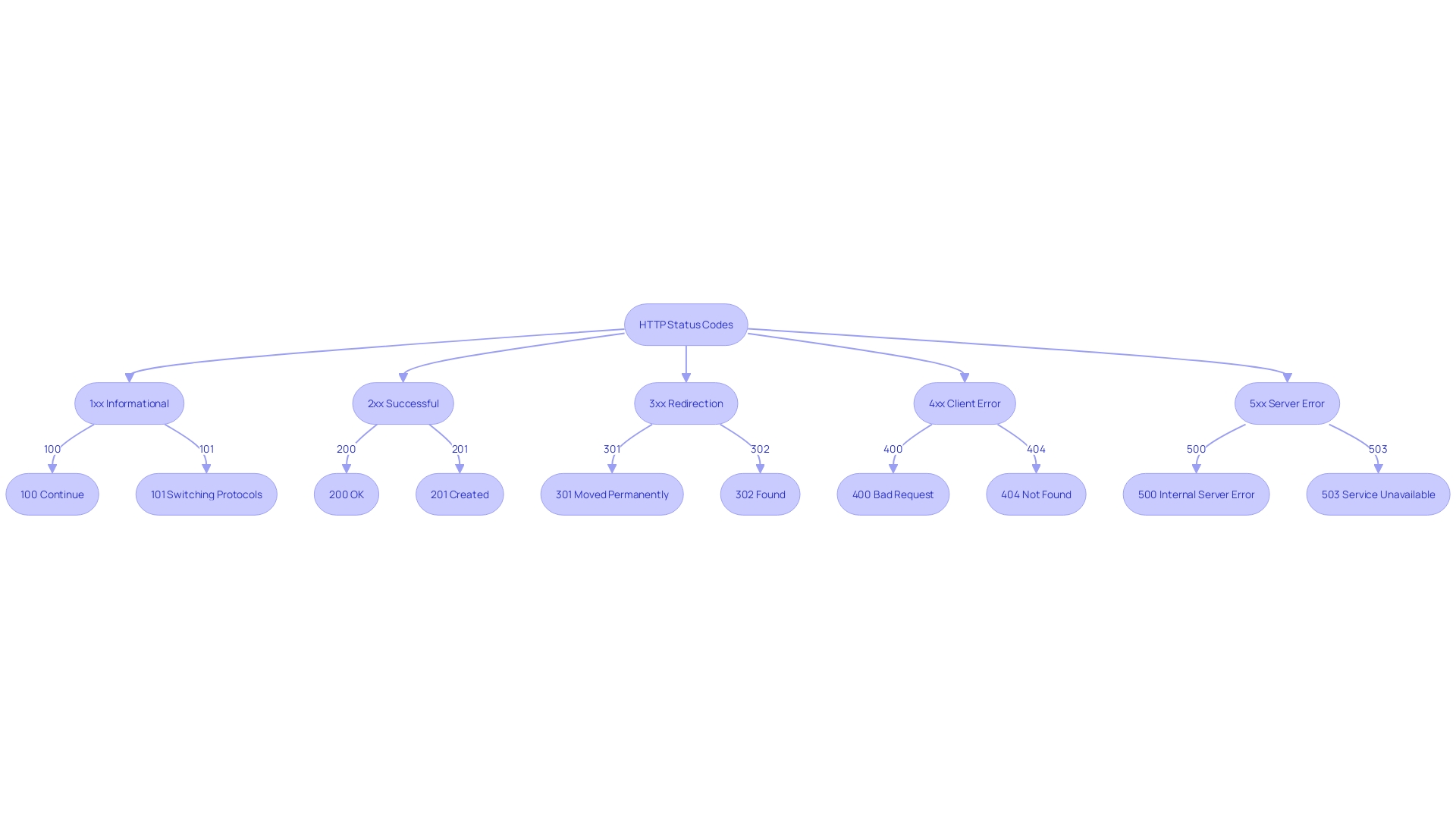

Understanding the full spectrum of HTTP status codes is key to diagnosing and resolving web issues. They fall into five distinct categories, each with a unique purpose:

- 1xx - Informational: The request was received and processing is continuing.

- 2xx - Success: The request was successfully received, understood, and accepted.

- 3xx - Redirection: Further action needs to be taken to complete the request.

- 4xx - Client Error: The request contains bad syntax or cannot be fulfilled.

- 5xx - Server Error: The server failed to fulfill a valid request.
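
The five categories above follow directly from the first digit of the code, which a client can exploit when it meets a code it does not know. A minimal sketch, using a hypothetical `statusCategory` helper:

```javascript
// Classify an HTTP status code by its first digit.
function statusCategory(code) {
  if (!Number.isInteger(code) || code < 100 || code > 599) return "Unknown";
  const categories = [
    "Informational", // 1xx
    "Success",       // 2xx
    "Redirection",   // 3xx
    "Client Error",  // 4xx
    "Server Error",  // 5xx
  ];
  return categories[Math.floor(code / 100) - 1];
}

statusCategory(200); // "Success"
statusCategory(404); // "Client Error"
```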

While status codes have been part of HTTP since its early specifications in the 1990s, the significance of effectively delivering the 'bad news' through error codes (4xx and 5xx) has become increasingly recognized. A well-structured, informative, and actionable error message not only streamlines troubleshooting for developers but also enhances the interoperability between diverse systems amidst the expanding web of APIs.

By leveraging status codes wisely, developers can ensure a more coherent and reliable web experience, where communication between client and server is clear-cut and leaves no room for ambiguity.

Providing Meaningful Error Messages

APIs are the backbone of modern web services, acting as a bridge between different software applications. As with any complex system, errors are inevitable. When they occur, providing clear, understandable, and actionable error messages is crucial.

Good error handling is akin to having a well-written user manual for a laptop; it guides users through resolving issues, enhancing their overall experience.

Clear error messages empower developers to pinpoint problems quickly, reducing the time spent troubleshooting. This is particularly important considering the sheer volume of APIs available, each serving its unique function and information. A well-crafted error message should serve as a beacon, helping users navigate through the potential complexities of API integration and use.

In the landscape of API development, it is essential to not only anticipate errors but also to deliver the bad news in a structured and informative manner. Historically, inconsistency in error formats has led to a 'Wild West' scenario, creating a nightmare for developers working with multiple systems. Therefore, error messages should not only indicate what went wrong but also provide sufficient context to attempt a resolution or, when no action is needed, convey that assurance.
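
One possible shape for such a structured error is sketched below. The `status`, `title`, and `detail` fields are loosely modeled on the "problem details" format (RFC 9457); the `hint` field and the `buildError` helper are hypothetical additions for this article.

```javascript
// Build a structured error body that says what went wrong and, where
// possible, what the caller can do about it. "hint" is a hypothetical field.
function buildError(status, title, detail, hint) {
  const error = { status, title, detail };
  if (hint) error.hint = hint;
  return error;
}

const error = buildError(
  422,
  "Validation failed",
  "Field 'email' is not a valid address.",
  "Send an address such as user@example.com."
);
```

Keeping the same fields across every endpoint matters more than the exact names chosen, because clients can then handle all errors with one code path.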

For developers, the quality of an API is often judged by their interaction during error handling. As such, error messages are an integral part of API documentation, serving as the first line of support when issues arise. They not only reflect the API's reliability but also its commitment to providing a seamless developer experience.

In conclusion, as we navigate the ever-expanding universe of APIs, the clarity and usefulness of error messages cannot be overstated, making them a pivotal aspect of API documentation.

Authentication and Authorization

Ensuring the security of API endpoints is paramount in a digital environment where sensitive data is frequently exchanged. Authentication, the process of verifying user or system identity, is a core feature of robust API gateways. Particularly in Kubernetes environments, traditional authentication methods fall short in safeguarding against sophisticated cyber threats.

This necessitates adopting advanced authentication techniques that can withstand the intricacies of such complex systems.

In the enterprise realm, with growing demands and frequent staff turnover, the need for secure and flexible resource management is more crucial than ever. API gateways that support LDAP Single Sign-On (SSO) address this need by providing a singular access point for both internal and external services. The gateway not only simplifies access and management for developers but also enhances security and monitoring capabilities, thereby bolstering the system's overall integrity.

Microservices architectures present their unique challenges, with varied strategies for managing access. One approach is decentralized, where each service independently verifies access, akin to individual security checks at multiple club entrances. Alternatively, a centralized model employs an API Gateway to handle all access management, streamlining the security protocols.

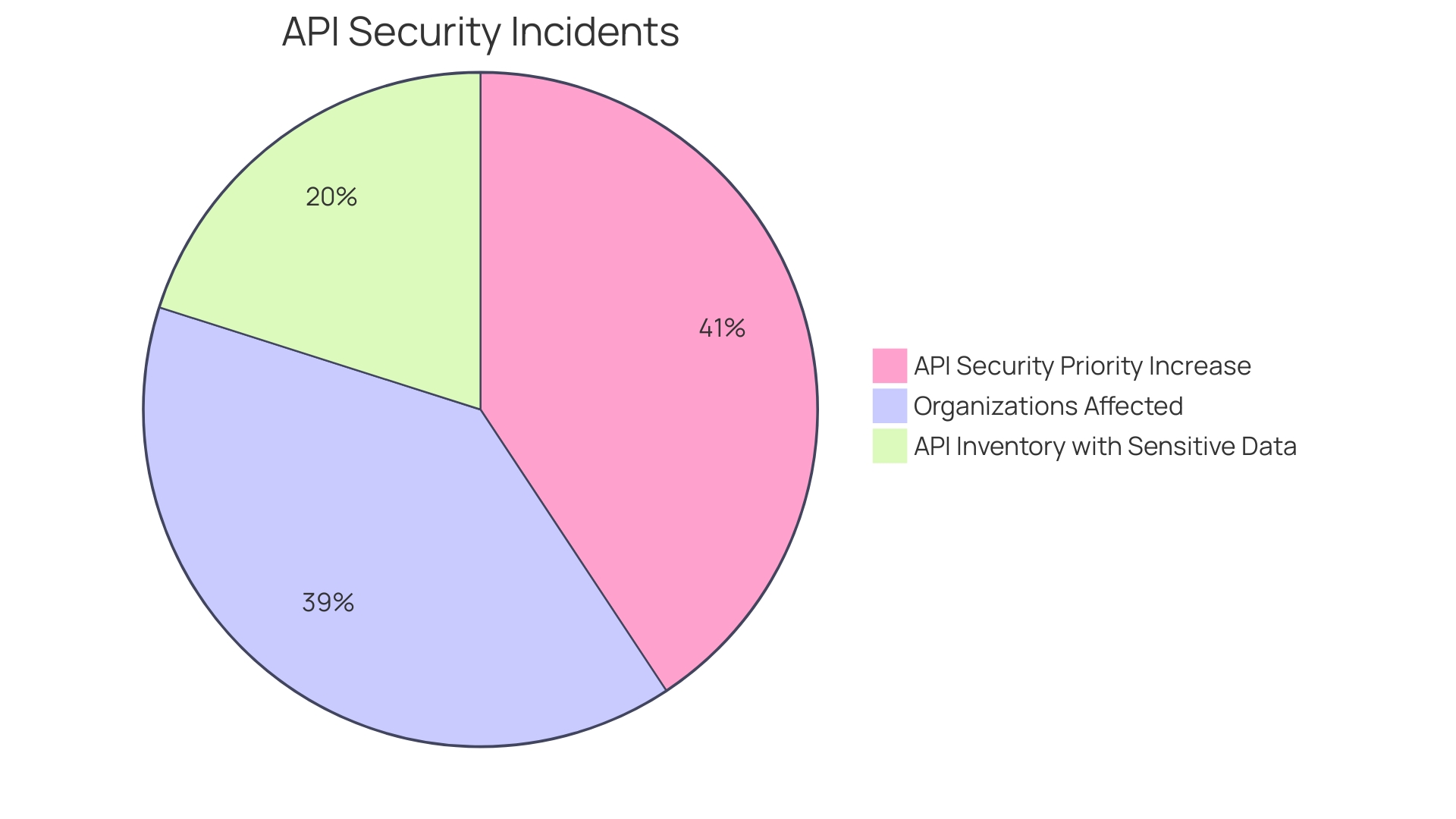

The rise in API utilization has unfortunately correlated with an uptick in API breaches, emphasizing the criticality of API security. Reports indicate that a staggering 78% of cybersecurity teams have encountered an API-related security incident in the past year. Despite many organizations having a full inventory of APIs, only 40% have clear visibility over which APIs return sensitive data, highlighting the urgency for increased API security measures.

Securing data both in transit and at rest is a foundational element of API protection. Utilizing HTTPS ensures encryption of data exchanges, safeguarding against interception by malicious actors. In this high-stakes digital landscape, the implementation of comprehensive and vigilant API security protocols is not just beneficial—it's indispensable for safeguarding sensitive information from exploitation.

Implementing Secure Authentication Mechanisms

In the realm of web applications and digital platforms, where APIs are the linchpins allowing software components to interact seamlessly, the pressing issue of API security has come to the forefront. With organizations like TotalEnergies Digital Factory (TDF) depending heavily on APIs for their operations across diverse locations, the implementation of robust authentication strategies is not just beneficial but necessary. Techniques such as OAuth 2.0 and JSON Web Tokens (JWT) act as gatekeepers, ensuring that only verified users can access your API's valuable resources and execute permitted actions.
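
With OAuth 2.0, access tokens are commonly sent in the `Authorization` header using the Bearer scheme. The sketch below covers only the first step, extracting the token from the header; the `extractBearerToken` name is an assumption, and the token would still need to be verified (signature, expiry, audience) with a vetted library before being trusted.

```javascript
// Extract the token from an "Authorization: Bearer <token>" header value.
// Returns null when the header is missing, malformed, or uses another scheme.
// This does NOT validate the token; verification must happen separately.
function extractBearerToken(authorizationHeader) {
  if (typeof authorizationHeader !== "string") return null;
  const match = /^Bearer +([A-Za-z0-9\-._~+\/]+=*)$/i.exec(authorizationHeader.trim());
  return match ? match[1] : null;
}
```

A request without a usable token would typically be answered with 401 Unauthorized before any business logic runs.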

These secure authentication methods are critical in contexts like TDF's expansive operations, with 80+ digital solutions and 215+ deployments worldwide, where they counteract the vulnerabilities that come with high personnel turnover and the need for flexible resource management. Moreover, in light of recent cybersecurity reports indicating that 78% of teams have faced API-related security incidents within a year, and with only 40% of those with a complete API inventory having visibility into which APIs return sensitive data, it's clear that traditional authentication mechanisms are inadequate.

The significance of better authentication is underscored by the fact that an API gateway, often deployed on Kubernetes, is the main access point to services and must be shielded from unauthorized entities. In the enterprise information systems of today, API gateways, augmented with protocols like LDAP Single Sign-On, provide a fortified and unified entry point, contributing to heightened security and system reliability. They are the cornerstone of a digital ecosystem, where security incidents not only breach data but also shake the very foundation of trust and integrity that enterprises like TotalEnergies endeavor to maintain.

Enforcing Proper Authorization Checks

To fortify the security of web applications, it is crucial to incorporate not only authentication mechanisms but also robust authorization checks. Authorization is pivotal in determining the permissions of users and ensuring that they can only carry out the functions for which they have clearance. Function Level Authorization (FLA) is a method of control that restricts access to certain operations within a system or application based on a user's permissions.

Rather than providing unrestricted access, FLA limits users to specific tasks they're authorized to perform, akin to assigning a unique 'secret code' that grants access to certain functionalities within an application.

Consider the scenario of an API gateway integrated with LDAP Single Sign-On, which acts as a centralized entry point for both internal and external services. It not only simplifies access management for developers but also enhances the system's security and reliability by providing security, monitoring, and traffic control features. The rise of microservices architecture has introduced various access management strategies.

One approach delegates the responsibility of access control to each individual service, much like having separate 'bouncers' for different rooms in a club, each responsible for checking the credentials of anyone trying to gain entry.

However, with the growth in the utilization of APIs, there has been a correspondingly significant increase in API security breaches, underscoring the need for stringent security measures. A breach can lead to the exposure of sensitive data, such as personal or financial information, which could be exploited for fraudulent activities or identity theft. To spot issues with broken FLA, a thorough analysis of the authorization mechanism is necessary, taking into account user roles and permissions within the application.

Questions such as whether a regular user can access administrative endpoints or perform sensitive actions they're not authorized for by changing the HTTP method (e.g., from GET to DELETE) are essential in identifying vulnerabilities.

Adopting practices like Role-Based Access Control (RBAC) or Attribute-Based Access Control (ABAC) can substantially enhance authorization checks by defining roles and attributes that restrict access to sensitive resources. In an era where APIs are the backbone of digital infrastructure, ensuring robust security protocols and monitoring practices is imperative to protect sensitive data from unauthorized access.
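
A role-based check at the function level might look like the sketch below. The role names, the permission table, and the `isAllowed` helper are all hypothetical; the point is that the HTTP method is part of the permission, so switching from GET to DELETE does not slip past the check.

```javascript
// Hypothetical role table: each role lists the "METHOD /path" pairs it may use.
const PERMISSIONS = {
  user: new Set(["GET /orders"]),
  admin: new Set(["GET /orders", "DELETE /orders", "GET /admin/reports"]),
};

// Deny by default: unknown roles and unlisted operations are rejected.
function isAllowed(role, method, path) {
  const allowed = PERMISSIONS[role];
  return allowed instanceof Set && allowed.has(`${method.toUpperCase()} ${path}`);
}

isAllowed("user", "GET", "/orders");    // true
isAllowed("user", "DELETE", "/orders"); // false
```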

Using HTTPS for Data Encryption

The advent of digitalization and the increasing reliance on web applications have profoundly underscored the importance of robust API security. As a cornerstone of modern software development, APIs act as vital conduits for data exchange, often containing or granting access to sensitive information such as personal or financial details. Without stringent security measures, the data transmitted via APIs can be compromised, leading to significant threats such as unauthorized interceptions, data breaches, and identity theft.

In light of this, HTTPS has become an indispensable protocol, fortifying data integrity and confidentiality by encrypting the information exchanged between clients and servers. This encryption is critical in mitigating risks associated with cyberattacks, including man-in-the-middle attacks and eavesdropping, which seek to exploit unsecured API communications.
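
On the client side, one simple safeguard is to refuse any API base URL that is not HTTPS. The sketch below uses the standard `URL` class; the `requireHttps` name and the example host are assumptions.

```javascript
// Reject any API URL that does not use the https: scheme.
function requireHttps(rawUrl) {
  const url = new URL(rawUrl); // throws a TypeError on malformed input
  if (url.protocol !== "https:") {
    throw new Error(`Refusing insecure URL: ${url.href}`);
  }
  return url;
}

requireHttps("https://api.example.com/users"); // returns the parsed URL
```

Servers usually complement this by redirecting or rejecting plain HTTP requests, so that credentials and payloads never travel unencrypted.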

Real-world applications of such security measures can be seen in various industries. For instance, Breadwinner, a Software as a Service company, integrates Salesforce with various accounting and payment solutions, necessitating the secure handling of financial data. Similarly, Applied Systems safeguards vast amounts of financial records and personal information for its insurance clients.

In the iGaming sphere, SOFTSWISS confronts the challenge of defending against DDoS attacks and malicious bots targeting sensitive data.

The imperative for secure API protocols is echoed by cybersecurity experts, who advocate for encryption as a legal and compliance mandate across industries. It not only preserves consumer trust in digital services but is also crucial for adhering to privacy standards. With 78% of cybersecurity teams having encountered an API-related security incident in the past year, and only 40% of organizations with full API inventories having visibility into which APIs return sensitive data, the priority of API security has never been more evident.

Adopting such security protocols, however, is not solely a technical endeavor. Comprehensive cyber security training for employees is pivotal in reinforcing API defenses. As digital infrastructures become increasingly reliant on APIs, the collective effort in securing them remains paramount to protect critical data and maintain the integrity of digital services.

Request and Response Payloads

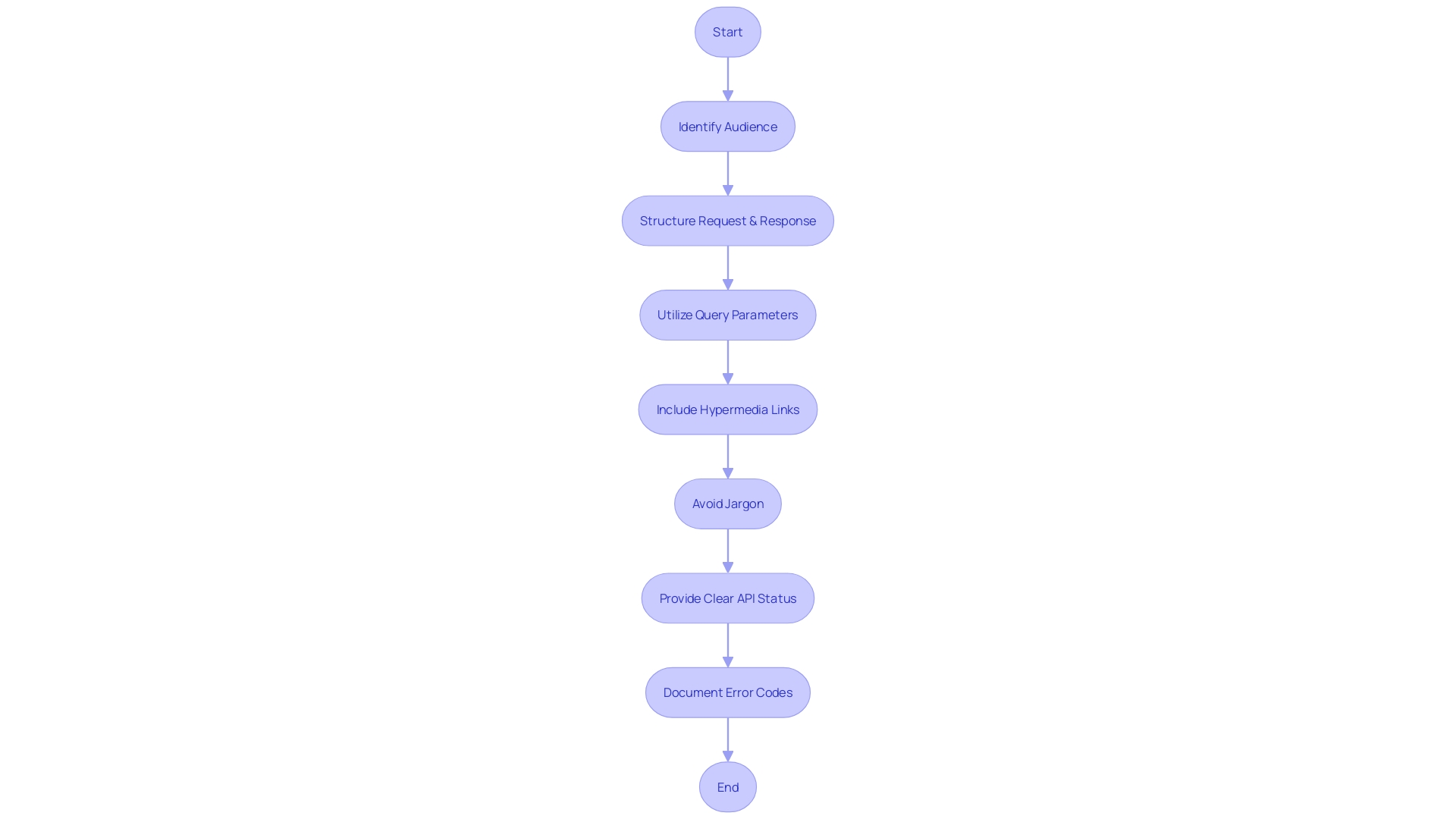

Creating effective API documentation is akin to providing a detailed user manual for software interaction, ensuring users can fully leverage the API's capabilities. To achieve this, it's important to craft request and response structures that are both informative and user-friendly. Best practices suggest keeping request payloads streamlined to avoid unnecessary data exchange, which not only optimizes performance but also simplifies integration for developers.

Utilizing query parameters effectively can enhance API usability by enabling fine-tuned data filtering, efficient sorting, and seamless pagination, thus providing a tailored experience for each use case.

In parallel, including hypermedia links within API responses can significantly improve navigation and discoverability of related resources, promoting a more intuitive and interconnected API ecosystem. With the user's perspective in mind, especially that of newcomers to the field, it is advisable to avoid jargon and to link complex terms to glossaries or tooltips, fostering a more inclusive and educational environment. Clear, up-to-date documentation of API status and error codes also helps users integrate correctly and reduces the kind of misuse that can lead to security problems.

Keeping Request Payloads Concise

Streamlining API request payloads is not just about reducing the data size; it's about enhancing the communication efficiency between software components. At the digital heart of TotalEnergies, the TotalEnergies Digital Factory (TDF) knows this all too well. With a global presence and a mission to foster sustainable energy, they've leveraged APIs to revamp legacy systems, developing over 80 digital solutions across 25 countries.

TDF's experience underscores that concise payloads are crucial for rapid and reliable API interactions. This approach aligns with the fundamental role of APIs as bridges between software components—whether server-side where they are commonly located, or client-side where front-end developers frequently interact with them.

Likewise, in the realm of software development, the adage 'less is more' holds true. As revealed by the collective insight of TotalEnergies' developers, efficient collaboration tools like Postman are vital. They echo the sentiment that focusing on essential data within API requests not only minimizes network load but also eliminates the 'overwhelming' complexity that can arise from bloated features.

By sending only necessary information through the APIs, developers ensure that responses are not just successful, but swift—paving the way for functionalities such as user authentication to operate smoothly.
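
A minimal sketch of trimming a payload before sending it: the hypothetical `pickFields` helper copies only the fields an endpoint needs, here for an assumed login request built from a larger client-side form state.

```javascript
// Keep only the listed fields so the request carries nothing superfluous.
function pickFields(source, fields) {
  return Object.fromEntries(
    fields
      .filter((field) => Object.prototype.hasOwnProperty.call(source, field))
      .map((field) => [field, source[field]])
  );
}

// Hypothetical form state with fields the login endpoint never needs.
const formState = {
  email: "a@example.com",
  password: "s3cret",
  theme: "dark",
  lastVisited: "/home",
};
const loginPayload = pickFields(formState, ["email", "password"]);
```

The resulting `loginPayload` contains only `email` and `password`, which keeps the request small and avoids leaking unrelated client state.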

The message is clear: in API communication, precision is paramount. By trimming the excess and prioritizing essential data, developers can achieve a more streamlined, performant, and ultimately successful API integration, much like drawing a clear, direct line on a digital map instead of a convoluted path of circles. The result is an enhanced user experience where reliability, accessibility, and efficiency are not just goals, but realities.

Using Query Parameters for Filtering, Sorting, and Pagination

APIs, or Application Programming Interfaces, are the lifeblood of modern software, offering a scalable way for different systems to interact. The true workhorses within these APIs are the endpoints, which are akin to the various doors within a building, each leading to distinct functionalities or data. These endpoints come alive through HTTP methods, allowing operations like creating, retrieving, updating, and deleting information across diverse platforms.

In the realm of RESTful APIs, query parameters enhance this communication by providing a means for clients to tailor requests, thus receiving exactly what they need. This granular control allows for filtering results, organizing data via sorting, and even managing the volume of data through pagination, all without overcomplicating the endpoint structures. By designing thoughtful query parameters, developers empower clients with the ability to refine their interactions with the API, ensuring a more efficient and streamlined experience.
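A minimal sketch of how filtering, sorting, and pagination might be driven by query parameters on a single `/articles` endpoint follows. The parameter names (`status`, `sort`, `page`, `limit`), the leading `-` convention for descending order, and the in-memory data are all assumptions chosen for illustration.

```javascript
// Hypothetical in-memory data standing in for a database table.
const articles = [
  { id: 1, title: "Caching basics", status: "published", views: 120 },
  { id: 2, title: "Draft on auth", status: "draft", views: 0 },
  { id: 3, title: "Pagination tips", status: "published", views: 340 },
  { id: 4, title: "Error formats", status: "published", views: 75 },
];

// Only these fields may be used for sorting.
const SORTABLE = new Set(["id", "title", "views"]);

function toInt(raw, fallback) {
  const n = Number.parseInt(raw, 10);
  return Number.isNaN(n) ? fallback : n;
}

// Applies ?status=, ?sort= (prefix "-" for descending), ?page= and ?limit=.
function listArticles(queryString) {
  const params = new URLSearchParams(queryString);
  let result = articles.slice();

  const status = params.get("status");
  if (status) {
    result = result.filter((article) => article.status === status);
  }

  const sort = params.get("sort");
  if (sort) {
    const descending = sort.startsWith("-");
    const key = descending ? sort.slice(1) : sort;
    if (SORTABLE.has(key)) {
      result.sort((a, b) => {
        if (a[key] < b[key]) return descending ? 1 : -1;
        if (a[key] > b[key]) return descending ? -1 : 1;
        return 0;
      });
    }
  }

  // Clamp paging values so a client cannot request an unbounded page.
  const limit = Math.min(Math.max(toInt(params.get("limit"), 10), 1), 100);
  const page = Math.max(toInt(params.get("page"), 1), 1);
  const start = (page - 1) * limit;

  return { total: result.length, page, limit, items: result.slice(start, start + limit) };
}
```

A request such as `GET /articles?status=published&sort=-views&limit=2` would then map to `listArticles("status=published&sort=-views&limit=2")`, keeping the endpoint path itself unchanged.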

Including Relevant Hypermedia Links in Responses

Hypermedia links are akin to the connective tissue within the architecture of an API, providing a roadmap that guides clients through the maze of resources. When responses are embedded with hypermedia links, users can effortlessly traverse the web of related resources, akin to following a well-designed map. The addition of these links transforms the user experience, offering a seamless and intuitive journey through the API's capabilities.

This strategic enhancement not only elevates the API's usability but also its adaptability, enabling clients to make informed decisions and execute actions with the context provided by these navigational aids.
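One common way to embed such links is a `_links` object in the style of the HAL convention. The sketch below assumes an article resource with an `authorId` field and a hypothetical base URL; the related routes are illustrative only.

```javascript
// Builds a response body for one article with HAL-style "_links".
// The /users and /comments routes are assumptions for this example.
function toArticleResource(article, baseUrl) {
  const self = `${baseUrl}/articles/${article.id}`;
  return {
    ...article,
    _links: {
      self: { href: self },
      author: { href: `${baseUrl}/users/${article.authorId}` },
      comments: { href: `${self}/comments` },
    },
  };
}

const resource = toArticleResource(
  { id: 7, title: "Hello", authorId: 42 },
  "https://api.example.com"
);
```

A client that receives this body can follow `resource._links.comments.href` instead of constructing the comments URL itself, so the server remains free to change its URL layout.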

To illustrate the importance of this feature, consider the analogy of delivering bad news, such as API errors. The effectiveness of such communication is rooted in its structure and actionability. In the past, the diverse error formats across APIs created a chaotic landscape, complicating error resolution and stifling system interoperability.

Introducing hypermedia links as part of the error messages can significantly alleviate these challenges by providing structured, informative, and actionable guidance.

In the realm of API documentation, clarity and structure are paramount. Breaking down the documentation into distinct sections with clear subheadings and descriptions aids in demystifying the API for the user. For instance, employing lists to outline key concepts or steps can greatly enhance the discoverability of information, allowing users to bypass the need to sift through extensive text.

Furthermore, the concept of 'content negotiation' is pivotal in the interaction between a client and a server, with the 'Accept' request header playing a crucial role. By specifying the MIME types that the client can handle, this header informs the server of the preferred formats for the response, ensuring compatibility and understanding.
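To make the role of the `Accept` header concrete, here is a simplified sketch of server-side negotiation. It orders the client's media types by their `q` weight and picks the first one the server supports. It deliberately ignores finer points of the HTTP specification, such as preferring more specific types over wildcards at equal weight; the function names are assumptions.

```javascript
// Parses an Accept header into media types ordered by preference (q-value).
function parseAccept(header) {
  return header
    .split(",")
    .map((part) => {
      const [type, ...params] = part.trim().split(";");
      let q = 1;
      for (const param of params) {
        const [name, value] = param.trim().split("=");
        if (name === "q") q = parseFloat(value);
      }
      return { type: type.trim().toLowerCase(), q: Number.isNaN(q) ? 0 : q };
    })
    .filter((entry) => entry.type !== "" && entry.q > 0)
    .sort((a, b) => b.q - a.q);
}

// Returns the first supported type the client accepts, or null
// (in which case the caller would typically answer 406 Not Acceptable).
function negotiate(header, supported) {
  for (const { type } of parseAccept(header || "*/*")) {
    if (type === "*/*") return supported[0];
    const match = supported.find(
      (candidate) =>
        candidate === type ||
        (type.endsWith("/*") && candidate.startsWith(type.slice(0, -1)))
    );
    if (match) return match;
  }
  return null;
}
```

For example, a client sending `Accept: application/xml;q=0.9, application/json;q=0.5` to a server that supports both formats would be answered in XML.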

In essence, embedding hypermedia links within an API is not just about providing a navigation tool; it's about creating a dialogue with the user, one that is as informative and useful as possible right from the outset. By doing so, an API becomes more than a mere interface; it becomes a user-centric platform that empowers developers to build, integrate, and troubleshoot with greater ease and efficiency.

Error Handling and Logging

Understanding and resolving API issues is a critical aspect of maintaining a seamless digital experience. Errors are a common occurrence given the unpredictable nature of the internet, so error messages need to be transparent and actionable. When an error arises, the message should provide enough detail to enable a fix, and when no fix is needed on the client's side, it should say so clearly.

Logging API interactions plays a pivotal role in debugging and understanding software. It's not just about recording information but also about being judicious regarding what to log. One must always consider whether the logged data is sensitive and if it includes potentially identifiable user information.
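One way to be judicious about what gets logged is to mask sensitive fields before a request body reaches the logger. The sketch below assumes a fixed list of sensitive key names; a real list would depend on the data your API handles.

```javascript
// Hypothetical list of keys that should never reach the logs in clear text.
const SENSITIVE_KEYS = new Set(["password", "token", "authorization", "email", "ssn"]);

// Returns a deep copy of `value` with sensitive fields masked.
function redact(value) {
  if (Array.isArray(value)) return value.map(redact);
  if (value !== null && typeof value === "object") {
    const copy = {};
    for (const [key, inner] of Object.entries(value)) {
      copy[key] = SENSITIVE_KEYS.has(key.toLowerCase()) ? "[REDACTED]" : redact(inner);
    }
    return copy;
  }
  return value;
}

const logEntry = redact({
  method: "POST",
  path: "/login",
  body: { email: "ada@example.com", password: "secret", rememberMe: true },
});
```

Because `redact` returns a copy, the original request object is left untouched for the handler that still needs the real values.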

API performance metrics offer invaluable insights into user behavior and system reliability. With 83% of web traffic involving API calls, monitoring APIs isn't just a technical necessity; it's a business imperative. Real-time logs can alert teams to response time issues, errors, and anomalies quickly, allowing for prompt resolution and maintaining system reliability.

From the operational perspective of TotalEnergies Digital Factory, APIs are the linchpin in modernizing legacy systems. By developing digital solutions and deploying them across various branches and countries, they showcase the significance of API resilience in a global operational context.

As we learn from the challenges faced by Spotify's Backstage platform, it's evident that technical limitations in API development, such as fixed data models and manual data ingestion, can lead to inefficiencies. This highlights the importance of adopting flexible and efficient approaches to API architecture.

Moreover, a case study involving backend microservices illustrates the peril of not anticipating API limitations. For instance, encountering a '429 Too Many Requests' error due to surpassing the request threshold underscores the need for proactive measures, like performance testing and adherence to API design best practices.
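On the client side, one proactive measure is to decide in advance how long to wait after a `429` before retrying. The sketch below honors a `Retry-After` header when the server sends one in its seconds form and otherwise falls back to exponential backoff. The base delay and cap are assumed values, and the HTTP-date form of `Retry-After` is not handled here.

```javascript
// Decides how long to wait before retrying after a 429 response.
// `retryAfter` is the raw Retry-After header value in seconds, if the server sent one.
function retryDelayMs(attempt, retryAfter, baseMs = 500, maxMs = 30000) {
  const seconds = Number(retryAfter);
  if (retryAfter != null && retryAfter !== "" && Number.isFinite(seconds) && seconds >= 0) {
    return Math.min(seconds * 1000, maxMs);
  }
  // Exponential backoff: 500 ms, 1 s, 2 s, ... capped at maxMs.
  return Math.min(baseMs * 2 ** attempt, maxMs);
}
```

A retry loop would call `retryDelayMs(attempt, response.headers.get("retry-after"))` after each `429` and give up once a maximum number of attempts is reached.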

In summary, effective error handling, strategic logging, and diligent monitoring of API performance metrics are essential for modern businesses to thrive in the digital world. They not only ensure system integrity but also contribute to a better understanding of user needs and the creation of more user-centric products.

Handling Errors Effortlessly

To foster a smooth API experience, it is paramount to implement a robust error-handling strategy. Consistency in error responses is key, as they should not only enlighten the consumer on what went wrong but also offer clear steps to rectify the problem. Equally crucial is error logging, which serves as a diagnostic tool to pinpoint and address recurring issues, thereby bolstering the API's reliability.

Errors are an inevitable part of any API, given the inherent unpredictability of the internet. By crafting error messages that are structured and actionable, developers can navigate these uncertainties. A well-designed error response should be self-explanatory, empowering developers to resolve issues without additional support.
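A structured, actionable error body could follow the "problem details" format standardized for HTTP APIs (media type `application/problem+json`, with `type`, `title`, `status`, and `detail` members). The sketch below is one possible shape; the documentation base URL, the `problemResponse` helper, and the `errors` extension member are assumptions for illustration.

```javascript
// Base URL for problem-type documentation; hypothetical.
const PROBLEM_DOCS = "https://api.example.com/problems";

// Builds a framework-agnostic error response in problem-details form.
function problemResponse(status, slug, title, detail, extras = {}) {
  return {
    status,
    headers: { "Content-Type": "application/problem+json" },
    body: JSON.stringify({
      type: `${PROBLEM_DOCS}/${slug}`, // link to further information
      title,
      status,
      detail,
      ...extras,
    }),
  };
}

const response = problemResponse(
  422,
  "validation-error",
  "Request body failed validation",
  "The 'email' field must be a valid email address.",
  { errors: [{ field: "email", message: "must be a valid email address" }] }
);
```

Using the same builder for every error keeps responses consistent, and the `type` URL gives developers a place to look up what went wrong and how to fix it.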

It's beneficial to integrate links to further information or tooltips for terms that may be unfamiliar, particularly to those new to the field. Transparent and educational error messaging can significantly improve a developer's experience.

Maintaining error messages is as critical as maintaining your API. Stale or outdated error documentation can lead to confusion and inefficiency, undermining the trust in your API. As the digital landscape evolves, so should the way APIs communicate errors.

This continuous improvement is essential to ensure compatibility and ease of use across various systems, ultimately enhancing the developer's workflow and the end-user experience.

Logging API Interactions

Maintaining a comprehensive log of API interactions is more than just a diagnostic measure; it's a window into the performance and usage of your applications. With detailed records of requests, including parameters, response times, and errors, you're equipped with actionable insights. These logs elucidate how your API functions under various conditions, revealing inefficiencies and potential points of failure.

For instance, consider the scenario of a critical microservice reliant on a third-party API. When an unexpected error, like a '429 Too Many Requests' response, occurs, it can disrupt operations significantly. Logging provides a real-time narrative of such events, allowing for prompt identification and resolution.

Furthermore, in a dynamic environment where 83% of web traffic involves APIs, understanding user behavior through API logs is not just technical—it's strategic. Businesses can leverage this data to refine their offerings, ensuring they align with user expectations and preferences. The visibility offered by logging is indispensable in a landscape dominated by microservices and cloud-based systems, where APIs are the scaffolding supporting complex inter-service communications.

This is evidenced by companies like Mixpanel, which harness events, users, and properties to track interactions, embodying the essence of modern data analysis powered by API logs. In essence, logging is not merely about keeping a record; it's about empowering developers and businesses to enhance their systems for peak performance and user satisfaction.

Monitoring API Performance Metrics

APIs (Application Programming Interfaces) are the bridge that facilitates communication between software components, and understanding them is paramount for client-side developers who interact with these interfaces regularly. A user's request is processed via the API, which returns a response to the client side. Monitoring API performance metrics is therefore of utmost significance.

These metrics include response times, throughput, and error rates, and they shed light on the API's efficiency and point out opportunities for optimization.

The financial implications of API downtime highlight the importance of efficient API performance. With downtime costs estimated at roughly $5,600 per minute, encompassing lost productivity and revenue, API uptime becomes crucial for any business's bottom line. Real-time API monitoring is necessary due to the dynamic nature of APIs, which are subject to continuous changes due to usage patterns, updates, and integrations.

Metrics such as error rates and latency are essential indicators of an API's health. A spike in errors signals availability issues, while latency measures the responsiveness of the API. These metrics are so pivotal that companies like Microsoft and Adobe, operating in a technology-driven environment, emphasize maintaining API observability.

This concept encompasses metrics monitoring, log analysis, and tracing analysis to ensure APIs function stably, perform optimally, and are troubleshootable.

For developers and organizations aiming to boost user productivity, the performance of APIs is a key contributor. User satisfaction and loyalty depend greatly on the efficiency with which applications respond and interact with users. To set up effective API monitoring, one should first define key metrics and thresholds that will trigger alerts when surpassed, and then configure monitoring tools to keep a vigilant eye on these metrics, ensuring the API delivers a seamless user experience.
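The sketch below shows how the metrics named above might be derived from raw request samples and compared against alert thresholds. The sample shape (`durationMs`, `status`), the choice to count only 5xx responses as errors, and the threshold values are all assumptions; real values depend on the service's objectives.

```javascript
// Summarizes a window of request samples shaped like { durationMs, status }.
function summarize(samples, windowSeconds) {
  const durations = samples.map((s) => s.durationMs).sort((a, b) => a - b);
  const errors = samples.filter((s) => s.status >= 500).length;
  // Nearest-rank 95th percentile.
  const rank = Math.ceil(0.95 * durations.length);
  return {
    throughputPerSecond: samples.length / windowSeconds,
    errorRate: samples.length === 0 ? 0 : errors / samples.length,
    p95Ms: durations.length === 0 ? 0 : durations[rank - 1],
  };
}

// Hypothetical thresholds that would trigger an alert when exceeded.
const THRESHOLDS = { errorRate: 0.05, p95Ms: 800 };

// Returns the names of the metrics that exceed their thresholds.
function breachedThresholds(summary, thresholds = THRESHOLDS) {
  return Object.keys(thresholds).filter((metric) => summary[metric] > thresholds[metric]);
}
```

In practice a monitoring tool computes these figures for you; the point of the sketch is that each alert boils down to a metric, a window, and a threshold that you choose up front.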

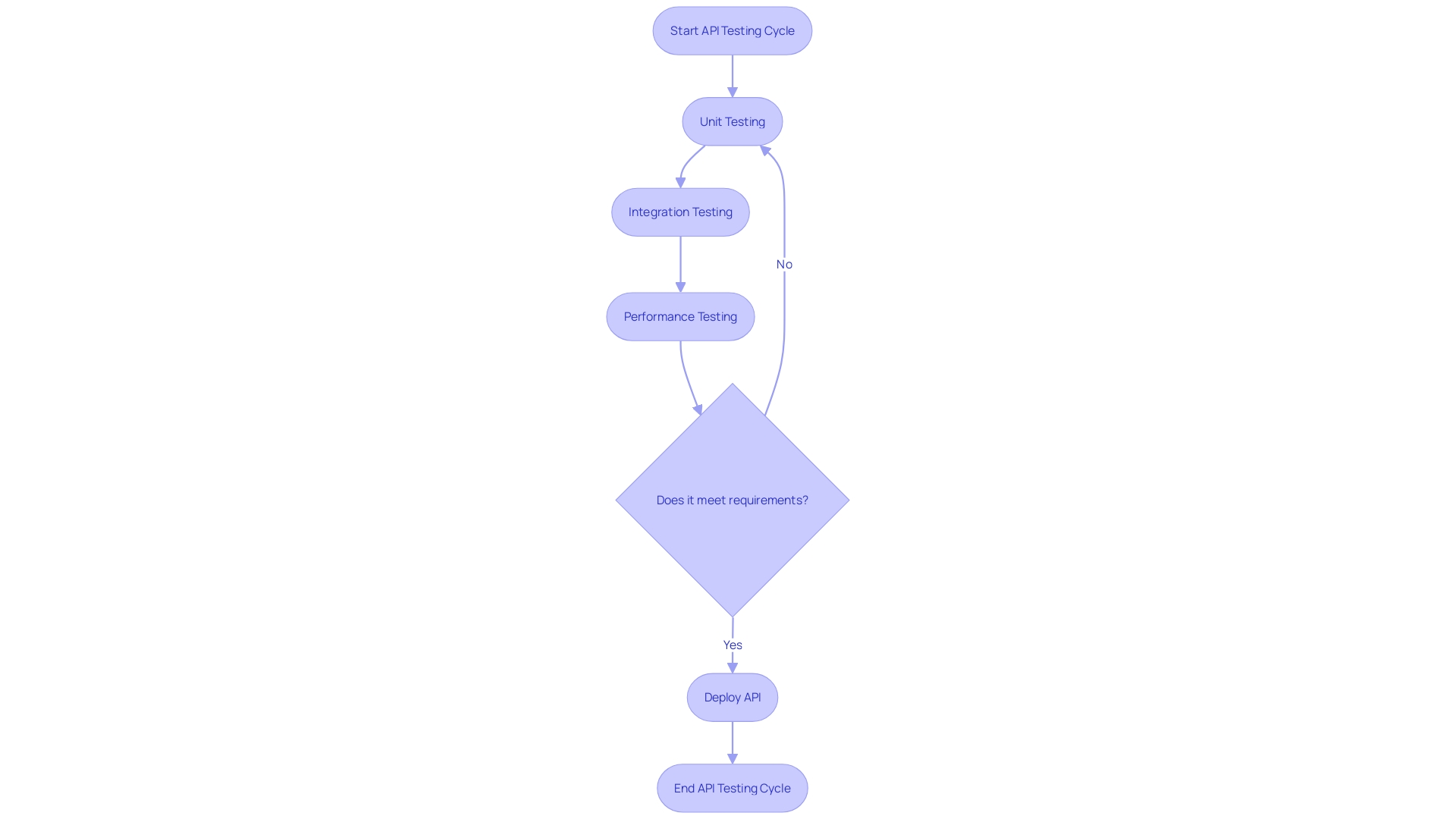

Testing and Documentation

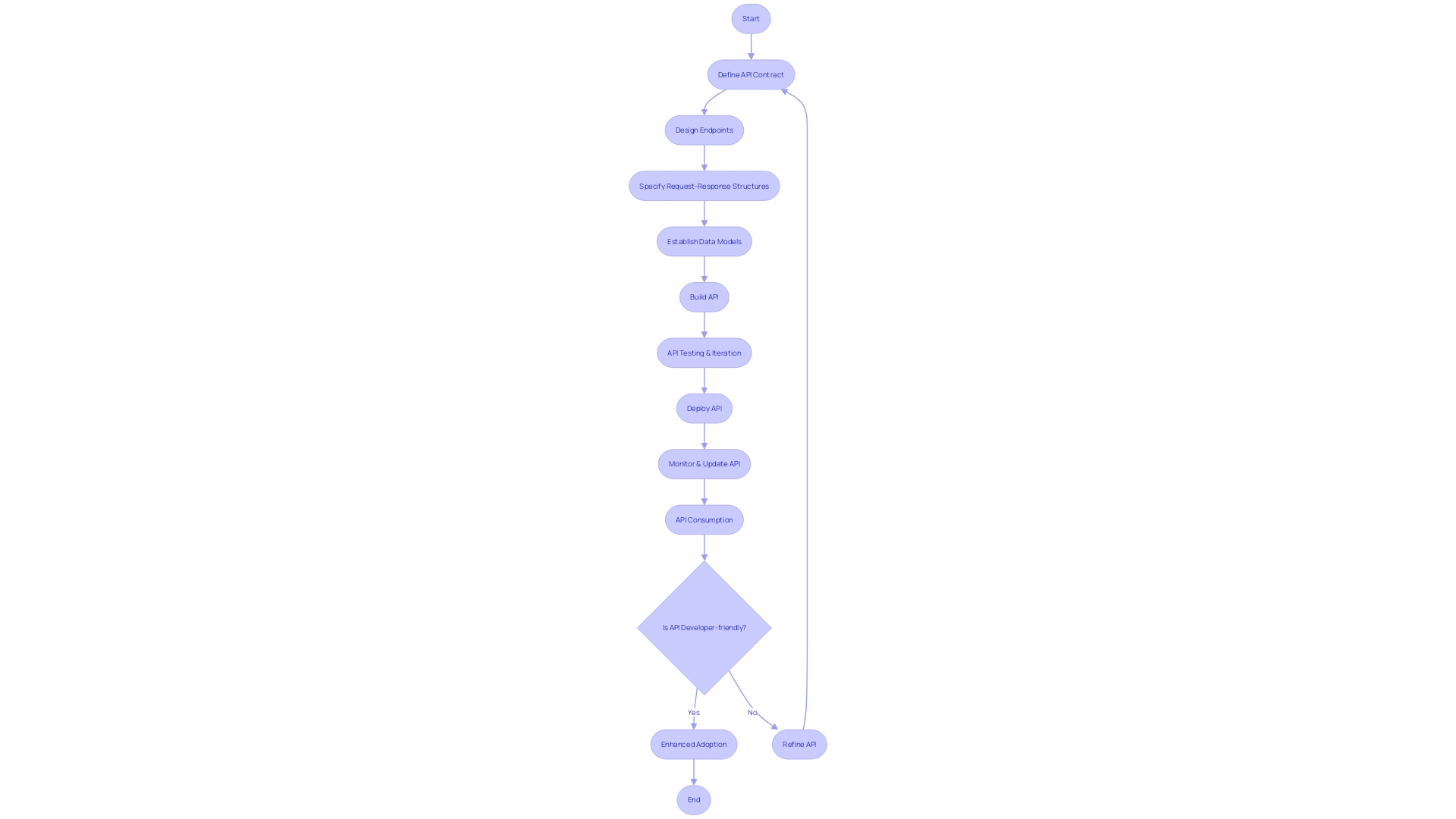

APIs serve as the backbone of modern digital solutions, enabling seamless interactions between different software systems. When it comes to ensuring the reliability and security of these APIs, testing plays a pivotal role. By implementing a regimented testing cycle, developers can verify API functionality and address any issues early on.

This not only guarantees the smooth operation of the APIs but also enhances the overall digital experience for users.

Unit testing, a critical aspect of this process, involves examining individual units of code—such as functions, methods, or classes—separately to confirm they produce expected results. A cornerstone of software development, unit testing facilitates early bug detection and mitigation, thereby streamlining the development process and contributing to a more robust final product.

Moreover, clarity, modularity, and independence are key attributes of testable code. These characteristics allow for more straightforward and effective defect identification, which is essential for high-quality, reliable software.

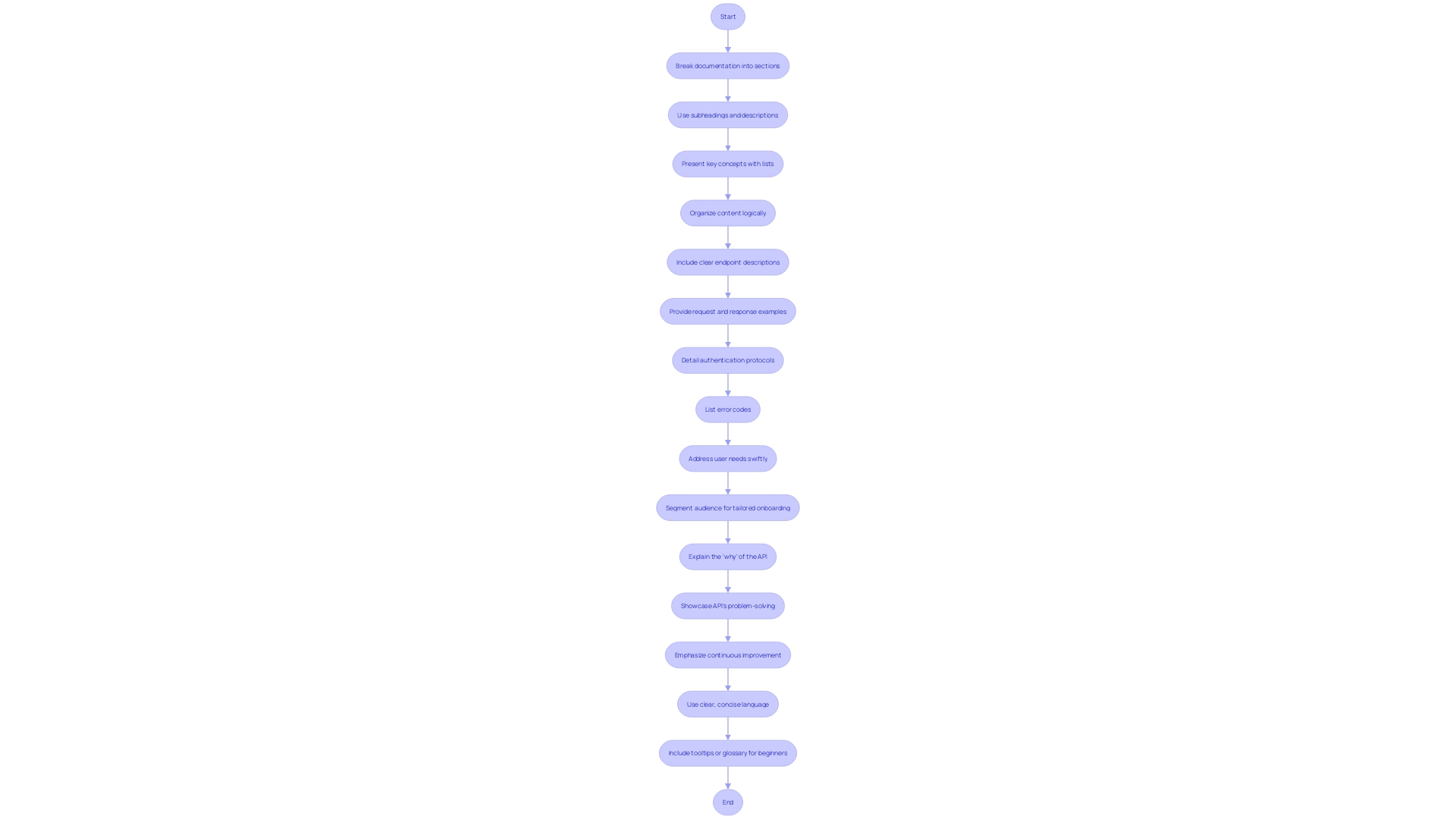

To convey the importance of an API and its capabilities, comprehensive documentation is a must. Good API documentation should introduce potential users to the API, guiding them on how they can quickly start using it. This involves outlining the benefits, providing step-by-step tutorials with clear objectives and prerequisites, and ensuring that the documentation is current and relevant to the latest version of the API.

In summary, the integration of systematic testing and thorough documentation into the development workflow is not merely a technical requirement but a mindset shift towards greater quality and efficiency in software engineering.

Writing Comprehensive Unit Tests

Unit testing is a cornerstone in software development, designed to validate the functionality of the smallest, independent code segments. These segments, known as units, are often functions or methods that are crucial for the stability of your API. Crafting unit tests is not just about ensuring that each unit behaves as expected under normal circumstances, but also about anticipating and checking how they react to unexpected or edge cases.
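As a small illustration, the sketch below tests a single unit, a hypothetical `parsePage` function that converts a raw `?page=` value into a safe page number, against both normal input and edge cases. It uses Node's built-in `assert` module rather than a full test framework to stay self-contained.

```javascript
const { strictEqual, throws } = require("node:assert/strict");

// Unit under test: turns a raw ?page= value into a safe page number.
function parsePage(raw) {
  if (raw === undefined || raw === "") return 1; // default to the first page
  const page = Number(raw);
  if (!Number.isInteger(page) || page < 1) {
    throw new RangeError(`Invalid page: ${raw}`);
  }
  return page;
}

// Normal cases.
strictEqual(parsePage("3"), 3);
strictEqual(parsePage(undefined), 1);

// Edge cases: zero, negatives, fractions, and non-numeric input are rejected.
for (const bad of ["0", "-1", "2.5", "abc"]) {
  throws(() => parsePage(bad), RangeError);
}
```

The edge-case loop is where most of the value lies: it pins down behavior that a quick manual check of the happy path would never exercise.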

By focusing on these minute elements of code, developers can pinpoint defects early. This proactive approach is particularly valuable in the software testing life cycle (STLC), where identifying issues in later stages can become more complex and costly. According to a survey, 80% of software development professionals acknowledge the critical role of testing in their projects, with 58% actively developing automated tests.

Unit testing, a subset of automated testing, is not only about finding bugs; it also serves as documentation for your API's functionality, which is especially beneficial for onboarding new developers or for reference during maintenance. The practice is so integral to quality assurance that 59% of professionals who utilize unit tests also employ test coverage metrics to ensure thoroughness.

Adopting a test-first methodology such as Test Driven Development (TDD) can further enhance code quality. TDD encourages the creation of tests before the code itself, enforcing a clear understanding of the code’s purpose and making sure it meets all requirements from the onset.

In the context of Blazor unit testing, where developers work within the .NET framework to build web applications, the same principles apply. Testing the individual components in isolation ensures the application functions as intended, safeguarding against potential bugs that might otherwise reach production.

The complexity of software systems often leads to tightly coupled code, where changes in one part can unexpectedly affect others. This is where the importance of testable code comes to the fore, as highlighted by industry experts who emphasize modularity, clarity, and independence as key traits of highly testable systems. By adhering to these principles, developers can create more maintainable and reliable software.

Understanding the landscape of API testing is crucial to harnessing its benefits. Tools like Postman have become ubiquitous in the industry due to their user-friendly interfaces and comprehensive feature sets, making API testing more accessible and effective.

In summary, unit testing is an indispensable tool for developers aiming to deliver robust and reliable APIs. By isolating and rigorously testing each unit, software teams can ensure their APIs perform optimally, thus providing a seamless experience for the end user.

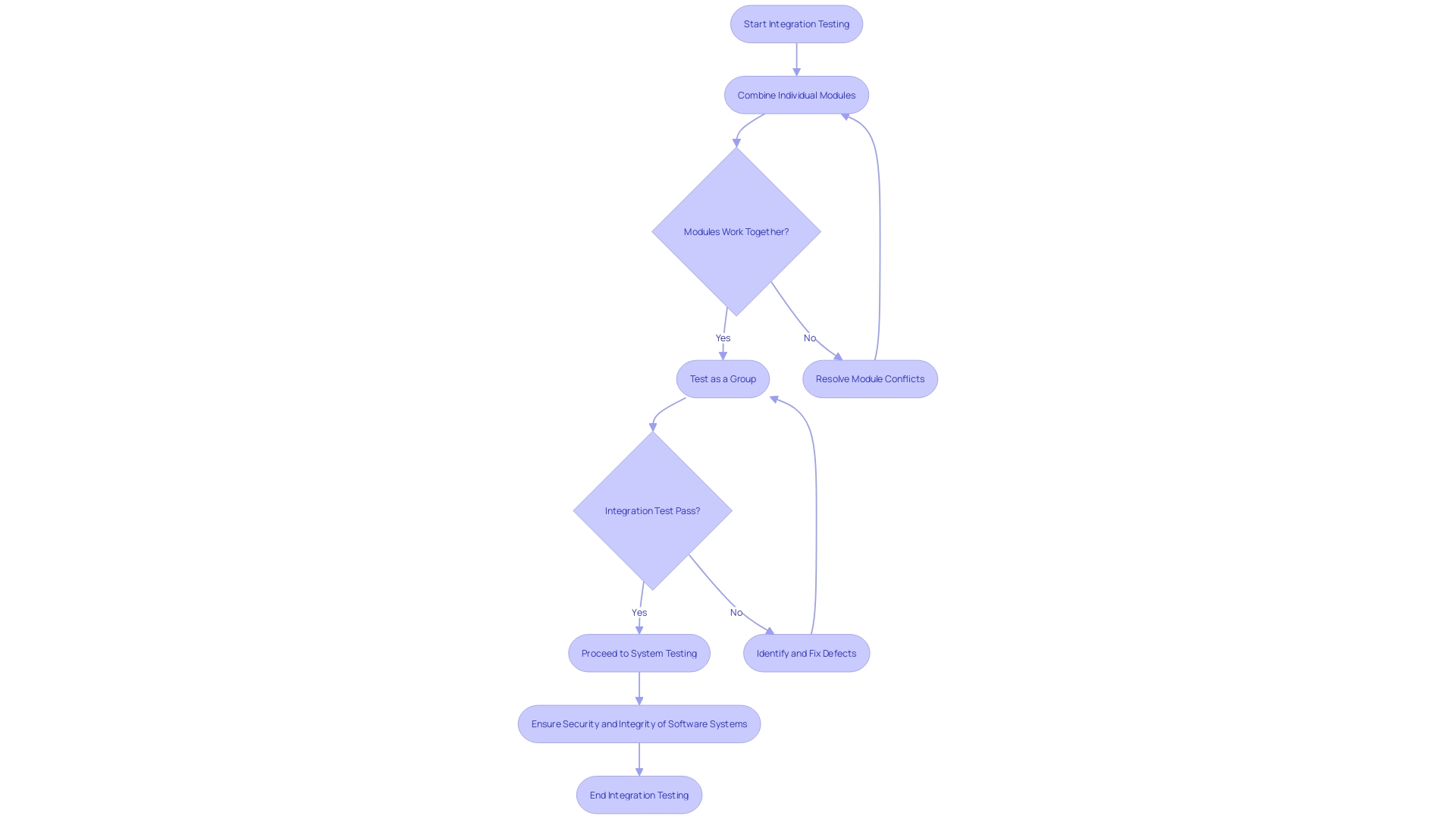

Conducting Integration Tests

Integration testing plays a pivotal role in the development and maintenance of APIs, which are the linchpins of modern digital experiences. It ensures that different software components communicate effectively, acting as a critical check to maintain the integrity of data flow and system interactions. This testing process is not just about finding bugs; it's about verifying that the collective behavior of integrated units meets the expected outcomes.

Take, for example, the Catalogue API used by a Content Management System (CMS) team. This API is central to delivering diverse content like movies, series, and sports events. By implementing integration tests, developers can simulate how the API interacts with various modules—ensuring that users have a seamless experience when accessing content.

In practice, integration tests can be quite intuitive. Consider a scenario where we're testing the /by_id endpoint of an API that filters content based on licensing for different countries. Using fixtures like a ready-to-use Android client, an asset, and indexed main territories documents, developers can create realistic test conditions.

These fixtures, often with sensible defaults like a movie with valid licenses for all countries, help pinpoint integration issues swiftly.
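The fixture idea can be sketched as small factory functions with sensible defaults that each test overrides only where it matters. Everything below is a hypothetical stand-in: the asset and client shapes, the `"*"` marker for "licensed everywhere", and the `byIdHandler` logic are assumptions, since the behavior of the real `/by_id` endpoint is not specified here.

```javascript
// Fixture factories with sensible defaults; tests override only what matters.
function makeAsset(overrides = {}) {
  return { id: "movie-1", type: "movie", licensedCountries: ["*"], ...overrides };
}
function makeClient(overrides = {}) {
  return { platform: "android", country: "DE", ...overrides };
}

// Two components wired together: an in-memory index and the handler that queries it.
function createIndex(assets) {
  const byId = new Map(assets.map((asset) => [asset.id, asset]));
  return { find: (id) => byId.get(id) };
}
function byIdHandler(index, client, id) {
  const asset = index.find(id);
  if (!asset) return { status: 404 };
  const licensed =
    asset.licensedCountries.includes("*") ||
    asset.licensedCountries.includes(client.country);
  return licensed ? { status: 200, body: asset } : { status: 404 };
}

// The default fixtures describe the happy path; one override creates the failing case.
const index = createIndex([makeAsset(), makeAsset({ id: "movie-2", licensedCountries: ["FR"] })]);
const allowed = byIdHandler(index, makeClient(), "movie-1");
const blocked = byIdHandler(index, makeClient(), "movie-2");
```

Because the defaults already describe a valid movie and client, each test states only the single detail that makes it interesting, which keeps failures easy to read.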

As APIs continue to underpin the collaborative efforts of developers and stakeholders, their significance is ever-growing. Google has even dubbed APIs as the "crown jewel of software development," highlighting their role in facilitating powerful, interconnected applications. With the rapid emergence of new technologies and the revelations of big data, integration testing becomes not just a best practice but a necessity for innovation and enhancement of digital platforms.

Documenting Your API Thoroughly

Creating top-notch API documentation is not just about laying out instructions; it's about crafting a thorough manual that empowers developers to seamlessly interact with your API. Solid documentation must encompass clear descriptions of endpoints, request and response examples, authentication protocols, and error codes, guiding users through the integration process with precision.

A well-documented API is akin to a user-friendly manual for a complex gadget. It breaks down the intricacies of the software interface into understandable segments, enabling developers to harness its full potential. Consider the user's first encounter with your API documentation.

They seek immediate clarity on its utility and how to begin engagement. Addressing this swiftly is crucial. By segmenting your audience, you can tailor the onboarding process to meet their unique needs, ensuring a smooth introduction to your API's capabilities.

The importance of API documentation cannot be overstated. In the words of a seasoned developer, 'Documentation not only increases readability but guides users and developers to a clear understanding of the project, allowing for efficient and effective collaboration.' This is especially vital considering the pivotal role APIs play in contemporary software development.

Google has even referred to APIs as the 'crown jewels' of the industry, underscoring their value in fostering innovation and collaboration.

To ensure your API documentation stands out, focus on the 'why' as much as the 'what.' This means not only detailing the functionalities but also elucidating the problems your API solves, which is pivotal for startups aiming to address market needs. Furthermore, your documentation should be a living document, kept current with clear, concise language, and supplemented with helpful tooltips or glossary links for beginners.

This approach is exemplified by the Infobip API reference, which integrates concept explanations to assist novice developers.

In summary, effective API documentation is a gateway to developer productivity and application success, serving as a critical tool in the modern developer's arsenal. By ensuring clarity, simplicity, and completeness, your documentation can become a beacon of guidance in the technological landscape.

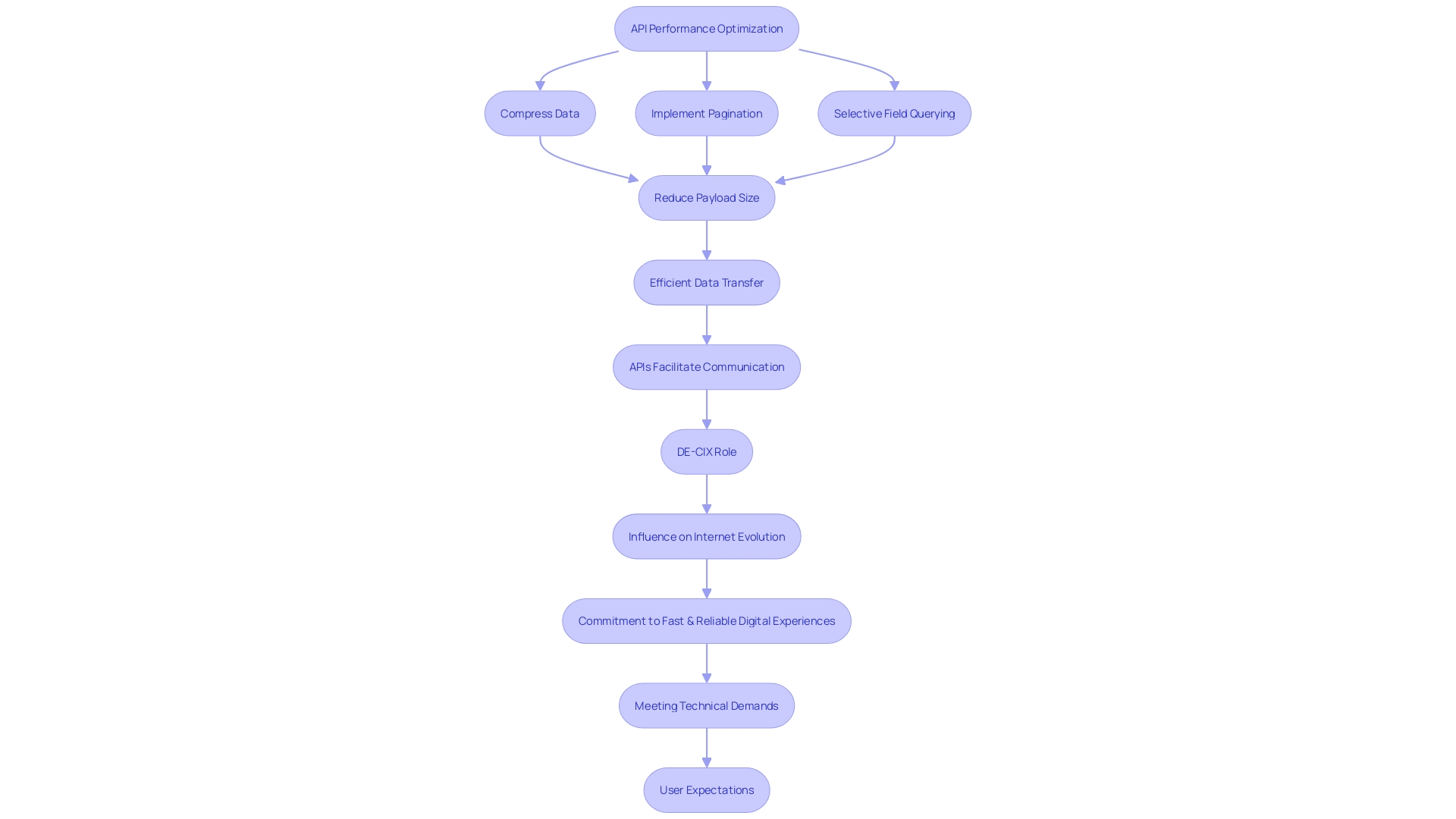

Performance Optimization Techniques

To ensure a swift and effective API experience, it's vital to focus on performance optimization strategies. One pivotal approach is leveraging caching mechanisms. Caching can significantly reduce the time needed to process repeated requests by storing responses temporarily, thus enhancing the overall speed of the API.

Another aspect to consider is the optimization of data transfer. Minimizing payload size by using techniques such as data compression and structuring efficient JSON objects can lead to faster response times and less bandwidth consumption.
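To get a feel for what compression can save, the sketch below gzips a repetitive JSON list with Node's built-in `zlib` module and compares sizes. The payload is invented for the example, and in a real service compression is usually handled by the web server or framework rather than by hand.

```javascript
const zlib = require("node:zlib");

// A repetitive JSON payload, typical of list responses.
const items = Array.from({ length: 200 }, (_, i) => ({
  id: i,
  status: "published",
  tags: ["api", "rest"],
}));
const json = JSON.stringify(items);

// Compress, then decompress to confirm nothing is lost.
const compressed = zlib.gzipSync(json);
const restored = zlib.gunzipSync(compressed).toString("utf8");

console.log(`raw: ${Buffer.byteLength(json)} bytes, gzip: ${compressed.length} bytes`);
```

Repeated keys and values are exactly what gzip compresses well, which is why list responses tend to benefit the most.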

Client-side developers frequently interact with APIs, relying on them for seamless communication between different software components. Therefore, optimizing these interfaces is not just about server capability but also about creating a user-centric experience that values speed and efficiency. For example, in the login and signup processes, where users expect immediate feedback, an optimized API can make the difference between a smooth onboarding and a frustrating start.

The philosophy behind API performance is well-captured by the saying, 'make it work, make it right, then make it fast.' Initially, ensuring that an API is functional and correct is paramount, but subsequently, optimizing for speed is what elevates the user experience. In the world of API development, where milliseconds can impact user satisfaction, focusing on performance optimization techniques becomes a game-changer for any frontend application relying on server-side interactions.

Using Caching Mechanisms

Harnessing the power of caching is a strategic move to accelerate the response time of APIs, particularly when dealing with frequently requested data. By implementing an efficient caching strategy, data is stored within a reachable memory cache, allowing for swift retrieval. This not only expedites the delivery of responses but also alleviates the burden on the underlying API resources, promoting a more scalable system.

"Understanding the Web Application Caching Problem" highlights the delicate balance between maintaining a fast-loading website and ensuring the freshness of content through cache invalidation. The complexity increases with dynamic content systems, where generating content requires database calls and access validations, making caching a critical aspect in improving response times.

A case study within the backend architecture involving microservices and the GCP PubSub event system illustrates the consequences of API rate limits. When a critical service exceeded the third-party API's request limit, it resulted in a '429 Too Many Requests' error, impacting operational flow. This underscores the importance of caching as a preventative measure against such issues.

In practice, when a service requires data, it first checks the cache. If the data is present (a cache hit), the service can serve it immediately, bypassing database queries and reducing latency. If it is not (a cache miss), the service falls back on the primary database.

This process is essential, especially when large amounts of data are at play, as it significantly mitigates the load on the primary database and enhances the user experience.
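The hit-or-miss flow described above can be sketched as a small in-memory cache with a time-to-live, plus a read function that falls back to a loader on a miss. The `createCache` and `getWithCache` names and the injectable clock are assumptions for this example; production systems typically use a dedicated cache store instead.

```javascript
// Minimal in-memory cache with a time-to-live; `now` is injectable for testing.
function createCache(ttlMs, now = () => Date.now()) {
  const entries = new Map();
  return {
    get(key) {
      const entry = entries.get(key);
      if (!entry) return undefined;
      if (now() >= entry.expiresAt) {
        entries.delete(key); // expired entries count as a miss
        return undefined;
      }
      return entry.value;
    },
    set(key, value) {
      entries.set(key, { value, expiresAt: now() + ttlMs });
    },
  };
}

// Serve from the cache on a hit; on a miss, load from the database and store the result.
async function getWithCache(cache, key, loadFromDatabase) {
  const cached = cache.get(key);
  if (cached !== undefined) return cached;
  const fresh = await loadFromDatabase(key);
  cache.set(key, fresh);
  return fresh;
}
```

The time-to-live is the simplest answer to the freshness problem: a shorter value means fresher data at the cost of more database reads.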

The adoption of APIs is on the rise, with "The State of APIs, Integration, and Microservices" research by Vanson Bourne revealing that 93% of organizations consider APIs crucial to their operations. Efficient caching contributes to this by improving the visibility of key data assets and supporting the seamless functioning of APIs, which are integral to avoiding operational silos and fostering integration between microservices.

Moreover, clear problem details in HTTP APIs make error handling easier, and caching complements them by managing load so that fewer load-related errors occur in the first place. In the context of distributed systems and microservices, as demonstrated by Contentsquare's use of Apache Kafka for notification delivery, caching is instrumental in maintaining performance and reliability.

Optimizing Data Transfer

The art of refining API performance lies in the strategic reduction of data transfer. Consider the approach of TotalEnergies Digital Factory, which focuses on crafting digital solutions to enhance operational efficiency. They emphasize APIs as the backbone of modernizing systems, a testament to the importance of efficient data handling.

Similarly, the unified endpoint philosophy adopted by some tech teams consolidates data access, streamlining the response process for clients. By implementing methods like compression, pagination, and selective field querying, the payload size is significantly diminished, leading to a more agile and responsive API experience.

Recognizing the role of APIs as the facilitators of communication between software components, it becomes evident that optimizing these interactions is paramount. APIs form the server-side bridge that client-side developers rely upon. The request cycle, in which the API processes a client's request and returns a response to the frontend, underscores the need for efficient data transfer.

With APIs forming the critical infrastructure that supports a vast array of applications, from weather forecasts to complex data analysis platforms, their optimization is not just a technicality but a cornerstone for seamless operation in our digitally connected ecosystem.

DE-CIX, a leader in IT infrastructure, understands the gravity of this responsibility, driving forward principles that shape the internet's evolution. Their commitment to seamless and secure data exchange globally mirrors the importance of optimizing API data transfer. By adopting performance enhancement techniques, the goal is to achieve the same level of reliability and speed that operators like DE-CIX strive for in their services.

This pursuit of efficiency not only meets the technical demands of modern software development but also aligns with the user's expectations for fast and reliable digital experiences.

Security Best Practices

The backbone of any digital platform, APIs (Application Programming Interfaces) have become indispensable for efficient communication between software components. Their critical role in web and mobile applications, however, exposes them to potential security threats. As enterprises embrace cloud-based infrastructures for their flexibility and speed, the reliance on APIs grows, bringing with it the increased risk of security breaches.

These breaches can lead to exposure of sensitive data, such as personal and financial information, which can have dire consequences if exploited for fraudulent activities or identity theft.

Securing APIs is thus a paramount concern, underscored by the alarming statistic that 78% of cybersecurity teams have encountered an API-related security incident in the last year. Despite the majority of organizations maintaining a full inventory of APIs, a mere 40% have visibility into which of those transmit sensitive data. This gap in security awareness has made API security a more significant priority now than ever before.

To address these security challenges, it's essential to adopt a comprehensive approach to API security that encompasses visibility and control over all APIs, adherence to best practices in development, and a proactive stance on vulnerability management. This means ensuring all APIs, whether part of digital transformation initiatives or new products, are included under the protective umbrella of the organization's security program.

Visibility is a particularly crucial aspect. The rapid deployment of APIs often results in blind spots like zombie, shadow, and rogue APIs that can elude traditional security measures. Organizations must implement cyber controls that enable the detection and management of all APIs, no matter how or where they are used.

Moreover, understanding the structure, architecture, and security attributes of APIs, such as authentication types and rate-limiting, is foundational. Organizations can then define and document corporate API standards and policies that align with best practices and regulatory requirements, becoming a source of truth that influences all stakeholders in the API lifecycle.

The Open Worldwide Application Security Project (OWASP) continuously highlights the top security risks to APIs, emphasizing the need for vigilant monitoring and the implementation of robust security protocols. By focusing on these essentials, businesses can fortify their digital infrastructures against unauthorized access and the consequent risks, thereby preserving the integrity of their operations and the trust of their users.

Maintaining Good Security Practices

To uphold robust security in API management, it's essential to adopt practices like diligent input validation, employing secure communication channels, and conducting periodic security assessments. These actions are pivotal in safeguarding your API against prevalent security threats while maintaining the integrity of data transfers. According to a study by Vanson Bourne, a staggering 93% of organizations recognize APIs as critical to their operations, underscoring the importance of solid security measures.
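Input validation, the first practice listed above, can be sketched as a function that checks a request body against explicit rules and returns a list of problems. The "create user" fields and limits here are assumptions for illustration, and the email check is intentionally a simple shape test rather than a complete validator.

```javascript
// Validates a hypothetical "create user" request body; returns a list of problems.
function validateNewUser(body) {
  if (body === null || typeof body !== "object" || Array.isArray(body)) {
    return ["body must be a JSON object"];
  }
  const errors = [];
  // Reject fields the endpoint does not expect instead of silently accepting them.
  const allowed = ["name", "email", "age"];
  for (const key of Object.keys(body)) {
    if (!allowed.includes(key)) errors.push(`unexpected field: ${key}`);
  }
  if (typeof body.name !== "string" || body.name.trim().length === 0 || body.name.length > 100) {
    errors.push("name must be a non-empty string of at most 100 characters");
  }
  // Deliberately simple shape check, not a full address validator.
  if (typeof body.email !== "string" || !/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(body.email)) {
    errors.push("email must look like an email address");
  }
  if (body.age !== undefined && (!Number.isInteger(body.age) || body.age < 0 || body.age > 150)) {
    errors.push("age must be an integer between 0 and 150");
  }
  return errors;
}
```

An empty array means the body passed; anything else can be returned to the client with a `400` or `422` status so the caller knows exactly what to correct.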

As APIs have become integral in various sectors, such as healthcare's integration of electronic health records and retail's personalized shopping experiences, their security is more pertinent than ever. The digital transformation driven by APIs demands vigilant protection strategies due to the sensitive data they often handle, including personal and financial information.

Recent breaches reported by Traceable and the Ponemon Institute, with 60% of surveyed organizations experiencing at least one breach in the past two years, highlight the urgency of addressing API security. Establishing API governance models and documenting corporate API standards are recommended practices for maintaining control over the API ecosystem. By understanding the data flow, structure, and security attributes of APIs, organizations can define and implement best practices and regulatory compliance measures tailored to their operational needs.

For example, TotalEnergies Digital Factory (TDF), a subsidiary of TotalEnergies active in nearly 130 countries, relies on APIs to modernize its information systems, emphasizing the role of APIs in facilitating digital transitions across global operations. Such examples demonstrate that securing APIs is not only a technical necessity but also a business imperative that affects the overall well-being of organizations and their customers.

Enforcing the Principle of Least Privilege

The Principle of Least Privilege (PoLP) is a security cornerstone, advocating that individuals and processes should have only the necessary privileges to execute their tasks—no more, no less. This concept is crucial in API security, where too much access can invite misuse or security lapses. When APIs are designed with minimal privilege, the risk of sensitive data exposure is significantly reduced.
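In an API, least privilege often takes the form of narrowly scoped permissions checked per route. The sketch below assumes a hypothetical scope naming scheme (`articles:read` and so on) and denies by default, so a route that is missing from the table is rejected rather than silently allowed.

```javascript
// Hypothetical mapping from route to the single scope it requires.
const REQUIRED_SCOPE = new Map([
  ["GET /articles", "articles:read"],
  ["POST /articles", "articles:write"],
  ["DELETE /articles/:id", "articles:delete"],
]);

// Deny by default: unknown routes and missing scopes are both rejected.
function isAllowed(grantedScopes, route) {
  const required = REQUIRED_SCOPE.get(route);
  return required !== undefined && grantedScopes.includes(required);
}

// A reporting client that only needs to read articles gets exactly one scope.
const reportingClientScopes = ["articles:read"];
```

With this setup, `isAllowed(reportingClientScopes, "GET /articles")` passes while a `DELETE` from the same client is refused, even if its credentials are otherwise valid.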

As Eric Brandwine, VP and Distinguished Engineer at Amazon Security, aptly puts it, "Least privilege equals maximum effort." This highlights the substantial effort involved in curating the precise set of privileges for dynamic customer needs and changing features, but it's a balancing act. The effort in reducing privileges must be weighed against the risk reduction and compliance with business goals and regulatory demands.

Real-world scenarios underscore the practicality of PoLP. For instance, in Amazon Web Services (AWS) environments, utilizing Service Control Policies (SCPs) can provide guardrails for permissions, offering a pragmatic approach to limiting access without overexerting resources. This method aligns well with the necessity to evaluate the likelihood and impact of security incidents against the cost of prevention.

Recent studies reveal that within the past two years, 60% of organizations experienced at least one breach, highlighting the urgency of sound API design. The stakes are high, as APIs often contain or provide access to personal or financial data, which can be exploited for fraud or identity theft if compromised. Moreover, the integration of generative AI (GenAI) technology in API development introduces new challenges, demanding vigilant monitoring and response strategies to safeguard against automated threats like credential stuffing or DoS attacks.

In essence, by implementing PoLP in API design, organizations can craft a secure environment that balances the necessary access with protective measures, thereby minimizing potential unauthorized access and the adverse impacts of security breaches.

Conclusion

In conclusion, designing RESTful endpoints with adherence to best practices is crucial for creating robust, scalable, and user-friendly APIs. Using HTTP methods as verbs and resources as nouns enhances clarity and intuitiveness. Understanding the domain and prioritizing functionalities ensures effective API delivery.

Adhering to RESTful API design best practices, such as using nouns in endpoint URLs, promotes longevity. Hierarchical resource representation improves maintainability and adaptability. Evaluating standards and challenging conventions is necessary for continuous improvement.

Versioning APIs ensures compatibility and smooth updates. Meaningful error messages and appropriate HTTP status codes enhance communication. Returning responses in JSON format streamlines data exchange.

Strong authentication and authorization mechanisms are vital for API security. HTTPS encryption safeguards data. Concise request payloads and query parameters optimize performance, and hypermedia links improve navigation.

Thorough documentation, rigorous testing, and performance monitoring ensure reliability. Implementing security best practices, such as input validation and secure communication, protects against threats.

Taken together, following these best practices and implementing comprehensive security measures produces efficient, secure, and user-friendly APIs.

Frequently Asked Questions

What is REST, and why is it important for API development?

REST, or Representational State Transfer, is an architectural style that ensures each client-server request is self-contained with all necessary information to process it. It's important because it promotes a stateless, uniform interface for resource-based interactions, forming the backbone of RESTful API development.

How does a RESTful endpoint function?

A RESTful endpoint functions by using HTTP methods as verbs (GET, POST, PUT, DELETE) to perform actions on resources (the nouns, like /users or /products). For example, a GET request to /articles retrieves articles, while a POST to the same endpoint may add a new article.

Why should we use nouns instead of verbs in endpoint URLs?

Using nouns instead of verbs in endpoint URLs follows the REST principle of treating an API as a consistent interface that is decoupled from changes to internal logic. Because the HTTP method already supplies the verb, noun-based URLs are more intuitive, future-proof, and adaptable.

What is the benefit of following a hierarchical structure in API design?

Following a hierarchical structure, inspired by Hexagonal Architecture, separates core business logic from external interfaces, making APIs more robust, maintainable, and adaptable to changes.

How are HTTP methods used for CRUD operations in RESTful APIs?

HTTP methods are used to map CRUD operations as follows: POST creates new resources, GET retrieves data, PUT updates a resource, and DELETE removes it, providing a standardized way for applications to interact.
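
The mapping can be sketched with a small in-memory dispatcher. The `handleArticles` function, the `articles` store, and the specific status codes chosen here are illustrative assumptions, not a prescribed implementation; a real service would sit behind an HTTP framework and a database.

```javascript
// In-memory store standing in for a database (illustrative only).
const articles = new Map();
let nextId = 1;

// Dispatches to the CRUD operation implied by the HTTP method.
// `id` is undefined for collection-level requests such as GET /articles.
function handleArticles(method, id, body) {
  switch (method) {
    case "POST": { // Create
      const article = { ...body, id: nextId++ };
      articles.set(article.id, article);
      return { status: 201, body: article };
    }
    case "GET": { // Read (whole collection or a single item)
      if (id === undefined) return { status: 200, body: [...articles.values()] };
      const found = articles.get(id);
      return found ? { status: 200, body: found } : { status: 404, body: null };
    }
    case "PUT": { // Update by replacing the stored representation
      if (!articles.has(id)) return { status: 404, body: null };
      const updated = { ...body, id };
      articles.set(id, updated);
      return { status: 200, body: updated };
    }
    case "DELETE": // Delete
      return articles.delete(id)
        ? { status: 204, body: null }
        : { status: 404, body: null };
    default:
      return { status: 405, body: null }; // method not supported here
  }
}

const created = handleArticles("POST", undefined, { title: "Hello REST" });
console.log(created.status);                                     // 201
console.log(handleArticles("GET", created.body.id).status);      // 200
console.log(handleArticles("DELETE", created.body.id).status);   // 204
console.log(handleArticles("GET", created.body.id).status);      // 404
```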

What is API versioning, and why is it necessary?

API versioning manages changes to an API without disrupting existing client applications. It's necessary to maintain compatibility as software evolves due to new business needs or regulatory changes.
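
One common approach, sketched here under assumptions, is to put the version in the URL path so that old and new clients can be served side by side. The `/v1/users` and `/v2/users` paths, the renamed field, and the `routeVersioned` helper are hypothetical.

```javascript
// Hypothetical handlers: v2 renames `name` to `fullName`, a breaking change
// that would disrupt v1 clients if it were applied in place.
const handlersByVersion = {
  v1: (user) => ({ id: user.id, name: user.fullName }),
  v2: (user) => ({ id: user.id, fullName: user.fullName }),
};

// Picks a handler based on the version prefix in the request path.
function routeVersioned(path, user) {
  const match = /^\/(v\d+)\/users$/.exec(path);
  if (!match) return { status: 404, body: { error: "Not found" } };
  const handler = handlersByVersion[match[1]];
  if (!handler) {
    return { status: 404, body: { error: `Unsupported API version: ${match[1]}` } };
  }
  return { status: 200, body: handler(user) };
}

const user = { id: 7, fullName: "Ada Lovelace" };
console.log(routeVersioned("/v1/users", user).body); // { id: 7, name: 'Ada Lovelace' }
console.log(routeVersioned("/v2/users", user).body); // { id: 7, fullName: 'Ada Lovelace' }
console.log(routeVersioned("/v3/users", user).status); // 404
```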

How should APIs handle response formats and status codes?

APIs should provide meaningful feedback through HTTP status codes, which categorize each response (success, client error, server error, and so on), and through well-structured error messages that make problems easy to understand and troubleshoot.
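
A rough sketch of both ideas follows. The error body shape (`code`, `message`, `details`) and the helper names are assumptions chosen for illustration, not a standard the article prescribes.

```javascript
// Maps a status code to the broad category defined by its first digit.
function statusClass(status) {
  if (status >= 100 && status < 200) return "informational";
  if (status >= 200 && status < 300) return "success";
  if (status >= 300 && status < 400) return "redirection";
  if (status >= 400 && status < 500) return "client error";
  if (status >= 500 && status < 600) return "server error";
  return "unknown";
}

// Builds a consistent error payload (hypothetical shape).
function errorResponse(status, code, message, details = []) {
  return { status, body: { error: { code, message, details } } };
}

// Validates input for a new article and reports every problem at once.
function validateNewArticle(input) {
  const problems = [];
  if (typeof input.title !== "string" || input.title.trim() === "") {
    problems.push({ field: "title", issue: "must be a non-empty string" });
  }
  if (problems.length > 0) {
    return errorResponse(400, "VALIDATION_FAILED", "The request body is invalid.", problems);
  }
  return { status: 201, body: { title: input.title.trim() } };
}

const rejected = validateNewArticle({ title: "" });
console.log(rejected.status, statusClass(rejected.status)); // 400 client error
console.log(rejected.body.error.details[0].field);          // title
```

Naming the offending field in `details` lets a client fix the request without guessing what the server rejected.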

Why is JSON preferred as a response format in RESTful APIs?

JSON is lightweight and easy to parse and manipulate, which makes it the preferred format for exchanging data across platforms and helps build robust and scalable web applications.

What are the best practices for API security?

Best practices for API security include enforcing authentication and authorization, using HTTPS for data encryption, and maintaining good security practices like input validation and periodic security assessments.

How can API performance be optimized?

API performance can be optimized by using caching mechanisms to store frequent responses, minimizing payload sizes through data compression, and streamlining response structures for efficiency.
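
To illustrate the caching part, here is a small time-to-live cache that reuses a stored response until it expires. The `createTtlCache` helper and its injectable clock are assumptions for the sake of a self-contained example; production systems often rely on HTTP cache headers or a dedicated cache store instead.

```javascript
// Creates a cache whose entries expire `ttlMs` milliseconds after being stored.
// `now` is injectable so expiry can be exercised without real waiting.
function createTtlCache(ttlMs, now = () => Date.now()) {
  const entries = new Map(); // key -> { value, expiresAt }
  return {
    // Returns the cached value for `key`, or computes and stores a fresh one.
    get(key, compute) {
      const hit = entries.get(key);
      if (hit && hit.expiresAt > now()) return hit.value;
      const value = compute();
      entries.set(key, { value, expiresAt: now() + ttlMs });
      return value;
    },
  };
}

let lookups = 0;
const expensiveLookup = () => {
  lookups += 1; // stands in for a slow database or upstream call
  return { articles: ["first", "second"] };
};

const cache = createTtlCache(60000);
cache.get("GET /articles", expensiveLookup);
cache.get("GET /articles", expensiveLookup);
console.log(lookups); // 1 (the second call was served from the cache)
```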

What is the Principle of Least Privilege, and how does it apply to APIs?

The Principle of Least Privilege means granting only the necessary access for tasks. In APIs, it reduces the risk of sensitive data exposure by limiting privileges to what's necessary for the API's functionality.