Introduction

The integration of OpenAI's API with Node.js opens up a world of possibilities for developers looking to enhance efficiency and productivity. In this article, we will explore the prerequisites for integrating the API, setting up the development environment, installing the OpenAI NPM package, generating an API key, initializing the OpenAI SDK, sending API requests, and leveraging the API for text generation, summarization, translation, and fine-tuning. We will also address common errors and troubleshooting techniques.

By the end of this article, you will have a comprehensive understanding of how to harness the power of OpenAI's API in a Node.js environment and develop innovative, real-world applications. So let's dive in and unlock the full potential of OpenAI with Node.js!

Prerequisites

Before you begin, make sure you have a few key components in place. First, obtain your OpenAI API key, which authenticates your requests and gives you access to the models. Store it securely, as privacy best practices recommend, to prevent unauthorized use.

Next, set up the environment your code will run in. This involves creating a .env file that stores your API key and a Node.js file, for example mathTeacher.js, that holds your application code.
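Below is a minimal sketch of loading that .env file without extra dependencies. It assumes simple `KEY=value` lines and a variable named `OPENAI_API_KEY`, which is the name the official SDK reads by default. The `parseEnv` and `loadEnv` helpers are hypothetical. In practice, the dotenv package or Node's `--env-file` flag (Node.js 20.6 and later) does the same job.

```javascript
const fs = require("node:fs");

// Parse simple KEY=value lines; blank lines and lines starting with # are ignored.
function parseEnv(text) {
  const vars = {};
  for (const rawLine of text.split(/\r?\n/)) {
    const line = rawLine.trim();
    if (line === "" || line.startsWith("#")) continue;
    const eq = line.indexOf("=");
    if (eq === -1) continue; // skip malformed lines
    const key = line.slice(0, eq).trim();
    let value = line.slice(eq + 1).trim();
    // Strip one pair of matching surrounding quotes, if present.
    if (
      value.length >= 2 &&
      (value[0] === '"' || value[0] === "'") &&
      value[value.length - 1] === value[0]
    ) {
      value = value.slice(1, -1);
    }
    vars[key] = value;
  }
  return vars;
}

// Copy variables from a .env file into process.env without overwriting existing ones.
function loadEnv(path = ".env") {
  if (!fs.existsSync(path)) return;
  const vars = parseEnv(fs.readFileSync(path, "utf8"));
  for (const [key, value] of Object.entries(vars)) {
    if (process.env[key] === undefined) process.env[key] = value;
  }
}
```

Call `loadEnv()` at the top of mathTeacher.js, before the client is created, and the key becomes available through `process.env`. Existing environment variables take precedence, so a key set by your hosting platform is not overwritten.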

In your Node.js file, define the key parameters: the AI model you intend to use, the conversational context (the messages exchanged so far), and an array of functions your application can execute. With these in place, the assistant can interpret the intent of user messages, keep track of the conversation, and request specific tasks when they are needed.
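These three parameters correspond to fields in the body of a Chat Completions request: `model`, `messages`, and `tools`. `tools` is the current name for function definitions. The sketch below assumes a math-teacher assistant. The model name `gpt-4o-mini`, the prompts, and the `calculate` function are illustrative choices, not requirements.

```javascript
// Hypothetical model name; substitute any chat model your account can access.
const MODEL = "gpt-4o-mini";

// Conversational context: a system message that frames the assistant, then user turns.
const messages = [
  { role: "system", content: "You are a patient math teacher. Use the calculate tool for arithmetic." },
  { role: "user", content: "A train travels 120 km in 1.5 hours. What is its average speed?" },
];

// Function definitions in the Chat Completions `tools` format: a name, a description,
// and a JSON Schema describing the arguments the model should produce.
const functionDefinitions = [
  {
    type: "function",
    function: {
      name: "calculate", // hypothetical function implemented by your app
      description: "Evaluate an arithmetic expression such as '120 / 1.5'.",
      parameters: {
        type: "object",
        properties: {
          expression: { type: "string", description: "The arithmetic expression to evaluate." },
        },
        required: ["expression"],
      },
    },
  },
];

// Bundle the three pieces into the body for client.chat.completions.create().
function buildRequest(model, history, tools) {
  return { model, messages: history, tools };
}

const request = buildRequest(MODEL, messages, functionDefinitions);
console.log(JSON.stringify(request, null, 2));
```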

The assistant also needs to handle inputs that require function execution. One example is a mathematical word problem that the model converts into a function call. The model's language understanding lets it interpret natural-language input and decide which function to call. Your code then carries out that call.

Finally, set up a conversation thread that holds the exchange between user and assistant. Run your sessions against that thread so the application keeps context from one turn to the next.

Setting Up Your Development Environment

Setting up your environment for the OpenAI API and Node.js involves more than installing tools. You are creating the channel through which your application and the API interact. Start by choosing an Integrated Development Environment (IDE) to write and test your code, such as the popular and versatile Visual Studio Code (VS Code). Then initialize a new npm project to manage your project's dependencies and metadata: running npm init (or npm init -y to accept the defaults) creates a package.json file. Your own modules can live in a directory such as lib. The node_modules directory is created automatically by npm and holds your project's installed dependencies.

As you proceed, you'll store identifiers such as the assistant ID in a variable for later reference. You'll also use a session to hold the conversation history, which gives the model the context it needs. The following sections cover how to handle function calls, messages, and model selection. They keep external libraries to a minimum so the core of the process stays visible.
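A session can be as simple as an object that accumulates messages and sends them with every request. This sketch assumes an in-memory store and a hypothetical `Session` class that caps the number of stored turns. A real application might persist history in a database or use server-side threads instead.

```javascript
// A minimal in-memory session that keeps the conversation history sent to the model.
// `Session` and its `maxMessages` cap are hypothetical illustrations.
class Session {
  constructor(systemPrompt, maxMessages = 20) {
    this.system = { role: "system", content: systemPrompt };
    this.history = [];
    this.maxMessages = maxMessages;
  }

  // Record a turn (user, assistant, or tool message).
  add(message) {
    this.history.push(message);
    // Drop the oldest turns once the cap is exceeded so requests stay small.
    if (this.history.length > this.maxMessages) {
      this.history.splice(0, this.history.length - this.maxMessages);
    }
  }

  // Messages to send with the next request; the system prompt always comes first.
  messages() {
    return [this.system, ...this.history];
  }
}

const session = new Session("You are a helpful math teacher.", 4);
session.add({ role: "user", content: "What is 2 + 2?" });
session.add({ role: "assistant", content: "2 + 2 = 4." });
console.log(session.messages().length); // 3
```

Trimming by message count is deliberately naive. It can drop an assistant message that requested a function call while keeping the matching `tool` reply. The API rejects a request in that state, so a production version should remove whole exchanges at a time.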

Industry experience also shows that integration inefficiencies are more than a technical hurdle. Teams overcome them with tailored strategies and a solid understanding of Node.js, npm, and their IDE. Taking each setup step methodically gives you a system that is both robust and intuitive. It also keeps you current with the tools you depend on and prepares you to set up a simple Node.js environment whenever you need one.

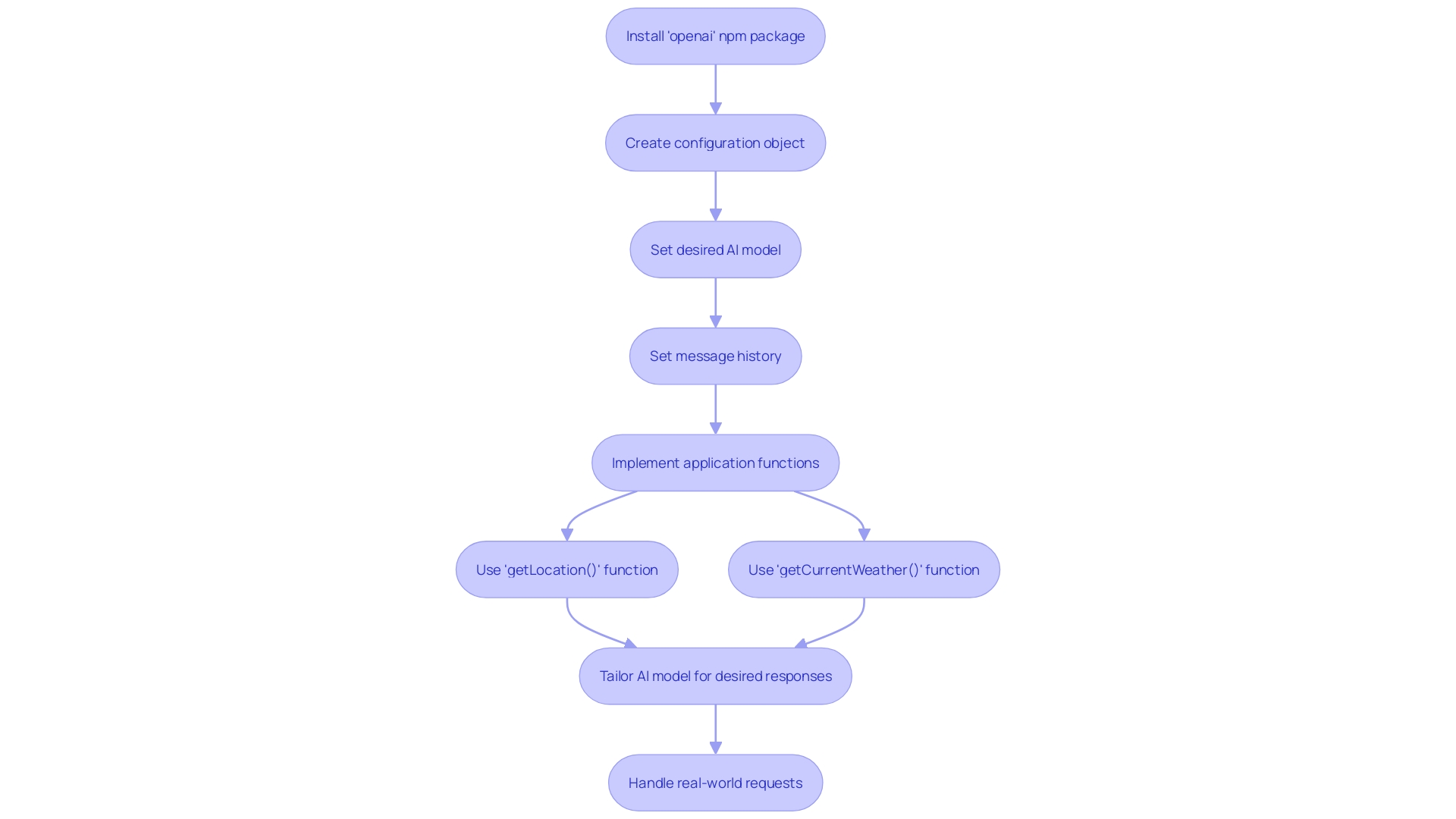

Installing the OpenAI NPM Package

Setting up the OpenAI API within a Node.js environment uses an npm package built for this purpose. To get started, run npm install openai in your project directory. This installs openai, the official OpenAI client library for JavaScript and TypeScript, which lets Node.js applications communicate with the OpenAI API.

Once the package is installed, each request you send is built from three pieces of information. The first is the AI model you want to use, which determines how the API's response fits your specific requirements.

The second is the history of messages exchanged between the user and your application, which maintains context in the conversation. The third is the set of functions available in your app, such as getLocation() and getCurrentWeather(). These let your application offer practical suggestions based on the user's location and current weather conditions.

It is important to note that while OpenAI provides guidance on which functions to invoke, it does not execute any code. The responsibility to run these functions lies with your application. For example, when prompted for activities nearby, your app would call 'getLocation()' to find the user's area and potentially 'getCurrentWeather()' to suggest an appropriate activity based on the weather conditions.
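The application side of that contract might look like the sketch below. It assumes the Chat Completions tool-calling format. The bodies of `getLocation()` and `getCurrentWeather()` are placeholder stubs, and `executeToolCalls` is a hypothetical helper name.

```javascript
// Hypothetical stubs; a real app would call a geolocation or weather service here.
async function getLocation() {
  return { city: "Lisbon", country: "PT" };
}

async function getCurrentWeather({ city }) {
  return { city, condition: "sunny", temperatureC: 24 };
}

const handlers = { getLocation, getCurrentWeather };

// Run each tool call the model requested and build the `tool` messages to send back.
// `toolCalls` follows the Chat Completions shape { id, type, function: { name, arguments } },
// where `arguments` is a JSON string generated by the model.
async function executeToolCalls(toolCalls, handlers) {
  const results = [];
  for (const call of toolCalls) {
    const handler = handlers[call.function.name];
    let content;
    if (!handler) {
      content = JSON.stringify({ error: `Unknown function: ${call.function.name}` });
    } else {
      try {
        const args = JSON.parse(call.function.arguments || "{}");
        content = JSON.stringify(await handler(args));
      } catch (err) {
        // Report bad arguments or handler failures back to the model instead of crashing.
        content = JSON.stringify({ error: err.message });
      }
    }
    results.push({ role: "tool", tool_call_id: call.id, content });
  }
  return results;
}
```

Errors are returned to the model as JSON rather than thrown. The model can then explain the problem or ask for missing details, and the whole request does not fail.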

The npm ecosystem grew enormously in 2023 and remains an indispensable tool for developers worldwide. In a single month, npm packages were downloaded over 184 billion times, which shows how central the platform is to software development.

By incorporating the OpenAI npm package into your project, you can harness the power of AI to redefine user interactions and elevate your application, ensuring it remains responsive and capable of handling real-world requests efficiently.

Generating an API Key

Obtaining an API key is the first step in using any API. Think of it as your personal pass to the service. For the OpenAI API, you generate the key yourself: sign in to your account on the OpenAI platform, create a key on the API keys page, and copy it into your .env file. This step requires no code.

A Node.js project also needs no extra libraries to handle the JSON the API sends and receives, because JSON.parse and JSON.stringify are built in. Rust is different: there you would add the serde and serde_json crates for serialization and deserialization. What matters more is following a detailed guide, ideally one with step-by-step code annotations, so you understand each part of the process. Your goal is to replace the generic 'Hello World' with working API functionality.

Failing to secure your API key correctly exposes you to cybersecurity risks. In the past year, a significant number of organizations reported API-related security incidents, and a majority acknowledged that API security is necessary. Attackers target APIs because security is often implemented poorly, and they find and exploit the resulting vulnerabilities.

This means your API key, which grants access to these services, must be kept strictly confidential and protected from tampering.

Treat your generated key like a secret password: anyone who has it can make requests, and run up usage, on your behalf. Some API keys are free while others are tied to paid usage. Many do not expire on their own, so protecting the key is an ongoing task rather than a one-time step.
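In Node.js, one way to act on this is to keep the key out of the code entirely and never print it in full. The helper names below are hypothetical. `OPENAI_API_KEY` is the environment variable the official SDK reads by default.

```javascript
// Read the API key from the environment and fail fast if it is missing,
// so the key never needs to appear in source code.
function requireApiKey(env = process.env) {
  const key = env.OPENAI_API_KEY;
  if (!key || key.trim() === "") {
    throw new Error("OPENAI_API_KEY is not set; add it to your .env file or environment.");
  }
  return key.trim();
}

// Mask all but the last four characters so a key can be identified in logs
// without revealing it; short values are fully masked.
function redactKey(key) {
  return key.length > 8 ? `****${key.slice(-4)}` : "****";
}
```

Pair this with a .gitignore entry for .env so the file holding the key never reaches version control.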

In summary, generating an API key is simple, but keeping it secure is an essential part of your API security measures. Follow credible guides and keep the key out of your source code. Protect it as if it were the linchpin of your application's integrity, because it is.

Initializing the OpenAI SDK

To start using the OpenAI Node.js package in your project, first initialize the SDK by creating a client. The client constructor takes your API key, along with optional settings such as timeouts and retries. If you don't pass a key, it reads the OPENAI_API_KEY environment variable. The parameters that shape your application's behavior are passed with each request instead: the AI model to use, the full history of user interactions, and a description of the functions available in your application. These functions are typically kept in a 'functionDefinitions' array.
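The sketch below keeps the two kinds of configuration apart. Client options cover authentication and transport and are built once. Request parameters are passed with each call. `buildClientOptions` is a hypothetical helper, and its retry and timeout values are assumptions, not recommended settings. `apiKey`, `maxRetries`, and `timeout` are options the official client constructor accepts.

```javascript
// Options for the client constructor: authentication and transport settings only.
// Model, messages, and tools are passed per request, not here.
function buildClientOptions(env = process.env) {
  const apiKey = env.OPENAI_API_KEY;
  if (!apiKey) throw new Error("OPENAI_API_KEY is missing");
  return {
    apiKey,
    maxRetries: 2, // assumed value; the SDK retries certain failed requests automatically
    timeout: 30_000, // milliseconds; assumed value
  };
}

// Usage with the official package in an ES module (requires `npm install openai`):
//   import OpenAI from "openai";
//   const client = new OpenAI(buildClientOptions());
```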

Take an AI-powered tool that solves complex word problems. An OpenAI GPT model interprets each problem and produces the corresponding mathematical expression. This works because large language models (LLMs) have a strong grasp of natural-language meaning. Once the model has produced the expression, your application can pass it to a dedicated JavaScript library that computes the result. The final answer then comes from deterministic computation rather than from the model.
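This sketch covers the application side of the word-problem flow. The model turns the problem into an expression string, for example through a hypothetical `calculate` function call, and the app evaluates it. Instead of a third-party math library, it uses a small hand-written recursive-descent parser (a swapped-in technique). That keeps the example dependency-free and ensures model output is never passed to `eval()`.

```javascript
// Evaluate an arithmetic expression produced by the model, without using eval().
// Supports numbers, + - * /, parentheses, and unary minus.
function evaluateExpression(input) {
  const tokens = input.match(/\d+(?:\.\d+)?|\.\d+|[()+\-*\/]|\S/g) || [];
  let pos = 0;
  const peek = () => tokens[pos];
  const next = () => tokens[pos++];

  // expression := term (("+" | "-") term)*
  function expression() {
    let value = term();
    while (peek() === "+" || peek() === "-") {
      const op = next();
      const rhs = term();
      value = op === "+" ? value + rhs : value - rhs;
    }
    return value;
  }

  // term := factor (("*" | "/") factor)*
  function term() {
    let value = factor();
    while (peek() === "*" || peek() === "/") {
      const op = next();
      const rhs = factor();
      if (op === "/" && rhs === 0) throw new Error("Division by zero");
      value = op === "*" ? value * rhs : value / rhs;
    }
    return value;
  }

  // factor := number | "(" expression ")" | "-" factor
  function factor() {
    const token = next();
    if (token === "-") return -factor();
    if (token === "(") {
      const value = expression();
      if (next() !== ")") throw new Error("Expected ')'");
      return value;
    }
    if (token !== undefined && /^(\d+(\.\d+)?|\.\d+)$/.test(token)) return Number(token);
    throw new Error(`Unexpected token: ${token}`);
  }

  const result = expression();
  // Any leftover tokens mean the input was not a single valid expression.
  if (pos !== tokens.length) throw new Error(`Unexpected token: ${peek()}`);
  return result;
}

console.log(evaluateExpression("120 / 1.5")); // 80
console.log(evaluateExpression("(3 + 4) * -2")); // -14
```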

All of this shows how versatile the OpenAI npm package can be. It is also part of a broader npm ecosystem that, by the end of 2023, had passed 2.5 million active packages and an unprecedented 184 billion monthly downloads. That growth shows the steady innovation in the developer community, where new tools and libraries keep making coding and software development more efficient.

Embracing this initiative, you can conceptualize and engineer an application that not only leverages the capabilities of existing models but also contributes to the expansive repository of tools being utilized across diverse domains, from coding assistance to workflow automation.

Sending Your First API Request

With your development tools configured and the OpenAI SDK initialized, you're ready to send your first request. Start by choosing the right API. Many services offer diverse data sets, such as social networks, weather data, or financial information. RESTful APIs are common and well suited to straightforward tasks. For text generation with OpenAI, a typical starting point is the Chat Completions endpoint.

Make sure you understand the rules and protocols for data exchange, because an API is the intermediary that lets distinct software systems communicate. Structure your requests clearly as you code: choose the model deliberately, keep messages concise, and decide how you will manage conversation state and tools. OpenAI, an influential organization in AI, provides comprehensive APIs suited to diverse applications, and it also promotes ethical use and expands access for nonprofits.

As you delve into your code, remember that each request you craft is more than a mere function call; it's a bridge to a world of data waiting to be harnessed.
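Here is a minimal first request with the official SDK's Chat Completions method, written as a function that receives the client as a parameter. The model name is an assumption, and `sendFirstRequest` is a hypothetical name.

```javascript
// Send a single user prompt and return the model's text reply.
// `client` is expected to be an instance of the official SDK (`new OpenAI()`), whose
// chat.completions.create() resolves to an object shaped like { choices: [{ message }] }.
async function sendFirstRequest(client, prompt, model = "gpt-4o-mini") {
  const completion = await client.chat.completions.create({
    model, // assumed model name; use any chat model available to your account
    messages: [{ role: "user", content: prompt }],
  });
  return completion.choices[0].message.content;
}

// Example (ES module, with OPENAI_API_KEY set):
//   import OpenAI from "openai";
//   const client = new OpenAI();
//   console.log(await sendFirstRequest(client, "Say hello in one short sentence."));
```

The function receives the client instead of creating it. That design choice lets you test it with a stub object instead of a live, billable API call.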

Using the OpenAI API for Text Generation

Developers have used the OpenAI API's text generation to streamline processes and improve data management. One example is a script that calls the API to summarize technology articles. The script stores its results in a SQLite3 database and checks whether an article has already been summarized before calling the API. This works like a smart cache, saving money and avoiding redundant work.
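The check-before-summarizing idea fits in a small wrapper. In this sketch, a `Map` stands in for the SQLite3 table the script used. `summarizeWithModel` is a hypothetical callback that would wrap the actual API call. Both are assumptions that keep the example self-contained.

```javascript
// Summarize each article once and reuse the stored result on later requests.
// A Map stands in for the SQLite3 table; swap in a database lookup for persistence.
function createCachedSummarizer(summarizeWithModel, cache = new Map()) {
  return async function summarize(articleId, text) {
    if (cache.has(articleId)) {
      return { summary: cache.get(articleId), cached: true }; // no API call, no cost
    }
    const summary = await summarizeWithModel(text);
    cache.set(articleId, summary);
    return { summary, cached: false };
  };
}
```

If two requests for the same uncached article arrive at once, both reach the model. Caching the pending promise instead of the finished summary closes that gap, but failed promises then need handling.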

Companies like Holiday Extras are leveraging such capabilities to overcome industry challenges. They have empowered their less technical staff by automating translation and data analysis in multiple languages for their wide-reaching European market, thereby enhancing productivity.

Taking inspiration from the new Heroku Reference Applications, developers can build impressive projects like 'Menu Maker', which concocts dishes based on ingredients users have on hand, providing recipes and fine dining descriptions. This showcases the use of the OpenAI API in creating practical and user-friendly applications.

To give you a glimpse into constructing an API-enabled application, imagine a text generator that could work as a coding assistant or a mathematical problem solver. Such an AI-powered tool would not only understand natural language questions but also perform the required functions to deliver solutions, illustrating the versatile use of the OpenAI API in a Node.js environment for a variety of real-world applications.

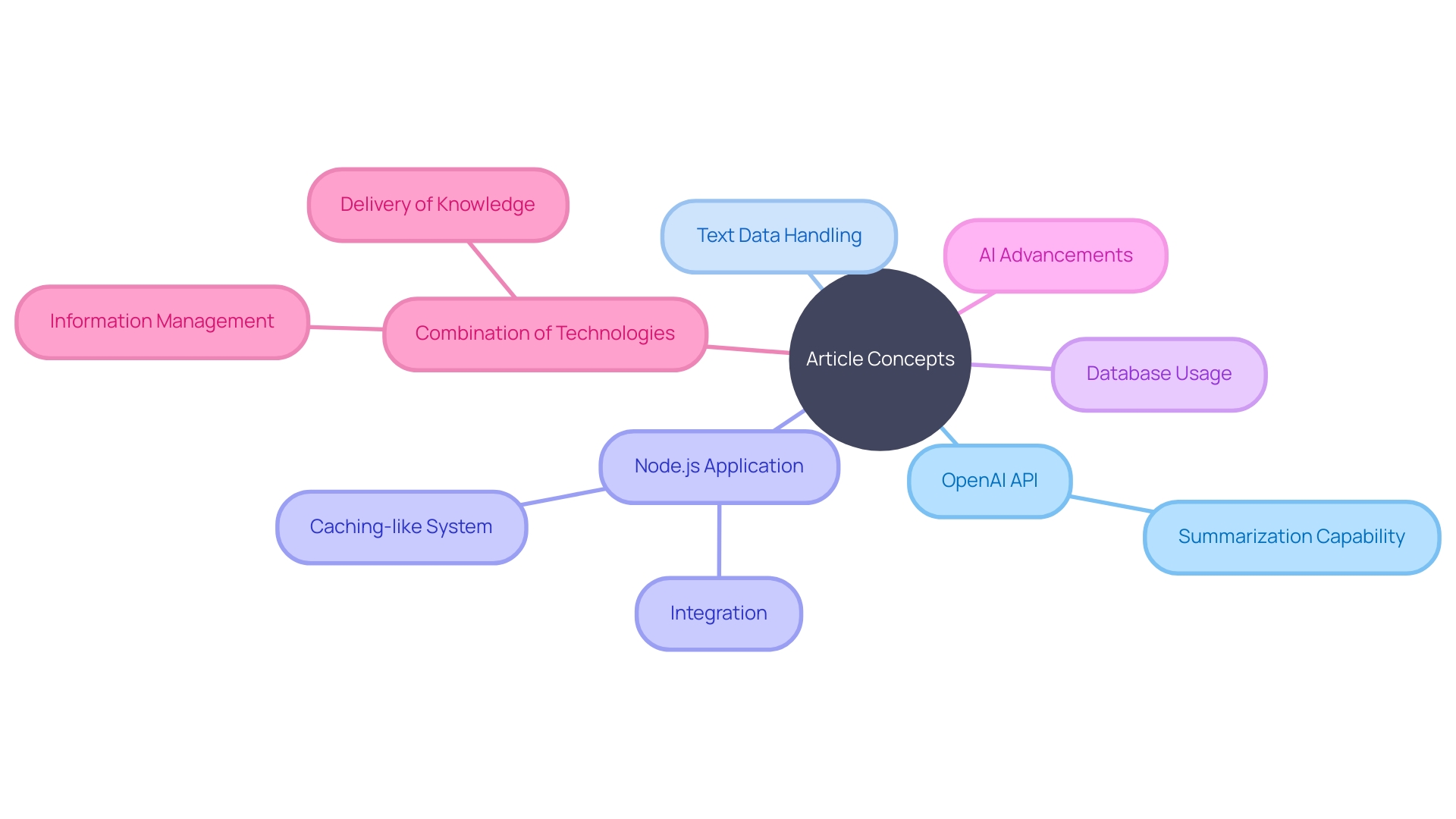

Using the OpenAI API for Summarization

The OpenAI API presents a powerful summarization capability, an essential tool for handling text data efficiently within your Node.js project. Leveraging this feature, you could streamline the way you digest content, providing concise versions of tech articles or extensive documents that demand a rapid understanding.

Imagine the ease of delivering compact summaries for articles, replacing the overwhelming details with clear, abridged content. Such functionality not only expedites the process of comprehension but also addresses the pain points of repetitive manual summarization. To effectively implement this, your Node.js application could incorporate a caching-like system, where you first check if an article's summary already exists before proceeding to distill a fresh one.

This conserves resources and avoids redundant API requests, making data management both cheaper and more efficient.

Following the same systematic approach as the developers in the examples above, you could store summaries in a database such as SQLite (through the sqlite3 package). Each article would then get its own thread, in line with OpenAI's guidelines, so articles are processed independently and without blocking one another. Google's AI Overviews in Search show how prominent this kind of AI-generated summary has become. Bringing similar technology into a Node.js application puts it at the forefront of efficiency.

Node.js adds its own strengths here. It has a built-in Fetch API (fetch() is available globally since Node.js 18) for making HTTP requests, along with native support for streams. Together, these form a compelling toolkit for building scalable, responsive applications.
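Streaming suits Node.js well. When you pass `stream: true`, the official SDK returns an async iterable, so you can forward partial text as it arrives. This sketch assumes the Chat Completions chunk shape. `collectStream` is a hypothetical helper.

```javascript
// Collect a streamed chat completion into one string while reporting each piece as it arrives.
// Each chunk is shaped like { choices: [{ delta: { content } }] }; some chunks carry no text.
async function collectStream(stream, onText = () => {}) {
  let text = "";
  for await (const chunk of stream) {
    const piece = chunk.choices[0]?.delta?.content ?? "";
    if (piece) {
      onText(piece); // e.g. write to an HTTP response or process.stdout
      text += piece;
    }
  }
  return text;
}

// Example (ES module):
//   const stream = await client.chat.completions.create({ model, messages, stream: true });
//   const full = await collectStream(stream, (t) => process.stdout.write(t));
```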

As you apply these insights to your project, remember that a requested summary length, such as 'about 100 words' in your prompt, strongly influences the output. It is still a guideline the model tries to follow, not a strict boundary. That flexibility lets the model preserve the essence of the content for your target audience. A token limit works differently. Whether it is max_tokens or, on newer models, max_completion_tokens, it is a hard cap, and output that reaches it is cut off.
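The sketch below shows a summarization request that uses both kinds of length control: a soft word target in the prompt and a hard token cap. The prompt wording, defaults, model name, and helper names are assumptions. When output hits the token limit, the Chat Completions API signals it with a `finish_reason` of `length`.

```javascript
// Build a summarization request: the word target lives in the prompt (a soft guideline),
// while max_tokens is a hard ceiling on generated tokens.
// Some newer models expect max_completion_tokens instead of max_tokens.
function buildSummaryRequest(text, { words = 100, maxTokens = 300, model = "gpt-4o-mini" } = {}) {
  return {
    model, // assumed model name
    max_tokens: maxTokens,
    messages: [
      { role: "system", content: `Summarize the user's text in about ${words} words. Keep the key facts.` },
      { role: "user", content: text },
    ],
  };
}

// A finish_reason of "length" means the reply hit the token cap and was cut off.
function readSummary(completion) {
  const choice = completion.choices[0];
  return { summary: choice.message.content, truncated: choice.finish_reason === "length" };
}
```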

In conclusion, adding OpenAI's summarization capabilities to your Node.js project improves how you manage information. Content is condensed significantly and its core message comes to the surface, giving your audience knowledge that is impactful and easy to digest.

Using the OpenAI API for Translation

Machine translation is more than a technical feat; it empowers teams facing language barriers. Consider Holiday Extras, a company serving an international clientele, where a single marketing team had to produce content in many languages. Teams in that position easily get bogged down in menial tasks. Translation capabilities free employees to refocus on strategic initiatives that drive growth and increase their impact.

Integrating OpenAI's translation feature into a Node.js application can be particularly transformative. For example, Summer Health has revolutionized pediatric care by enabling swift communication through text, but creating medical visit notes remains a manual and time-consuming task. By automating this task using OpenAI, practitioners can reduce administrative time and allocate more hours to patient care, thus preventing burnout.

Moreover, the emergent platform that simplifies deployment of open-source services, including an auto-subtitles API, exemplifies how easy it can be to experiment with and utilize translation services. Upon providing an OpenAI API key, developers can rapidly integrate and deploy translation capabilities, with costs transparently based on usage and a nominal community fee.

As the Language and internationalization newsletter highlights, machine translation (MinT) has been deployed for Wikipedias in 202 languages, which underscores the importance of accessible translation tools. The initiative improves translation quality and fosters inclusivity by serving languages that digital tools have previously underserved. In addition, the revamped Content Translation tools on Wikipedia speed up the translation process further, reflecting a broad push toward efficient language services.

OpenAI's models offer a lightweight yet robust way to add translation to software. You describe the task in a prompt, and a chat model returns the translated text. Developers can use this to extend the reach and accessibility of their work. Integration still requires managing aspects such as conversation state and tool usage. Even so, the likely gains in personal and organizational effectiveness are a compelling reason to adopt it.
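Because translation works by prompting a chat model, most of the work lies in writing the prompt and validating the reply. This sketch batches several strings into one request and asks for a JSON array back. The prompt, model name, and helper names are assumptions. Review model output before publishing it.

```javascript
// Translate several UI strings in one request by asking for a JSON array back.
function buildTranslationRequest(strings, targetLanguage, model = "gpt-4o-mini") {
  return {
    model, // assumed model name
    temperature: 0, // reduce randomness so repeated runs give more consistent translations
    messages: [
      {
        role: "system",
        content:
          `Translate each string in the user's JSON array into ${targetLanguage}. ` +
          "Reply with only a JSON array of the same length, in the same order.",
      },
      { role: "user", content: JSON.stringify(strings) },
    ],
  };
}

// Parse and validate the model's reply so a malformed answer fails loudly.
function parseTranslations(replyText, expectedCount) {
  const parsed = JSON.parse(replyText);
  if (!Array.isArray(parsed) || parsed.length !== expectedCount) {
    throw new Error("Translation reply did not match the input length");
  }
  return parsed;
}
```

Models sometimes wrap JSON in extra text, so the strict parse-and-check step is deliberate. A malformed reply raises an error instead of shipping mismatched translations.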

Fine-Tuning the OpenAI API for Specific Use Cases

To see how fine-tuning improves your results, first be clear on what an API is. It is an interface that lets you interact with a remote application in a structured way, much like ordering a specific dish from a restaurant while sitting at home. Through the OpenAI API, you can fine-tune a model for specialized tasks so that the results come closer to the gourmet dish you crave.

Start with the basics: fine-tuning a model requires a solid foundation in using Large Language Models (LLMs). Prompt engineering and Retrieval Augmented Generation (RAG) help you set a benchmark for the model's initial performance. Feeding improper data during fine-tuning can degrade the base model's performance. That is why a baseline is essential for spotting regressions after fine-tuning.

Generative AI keeps advancing as these models are refined further. Microsoft's partnership event highlighted recent progress in fine-tuning, such as epoch-based checkpoint creation and a new Playground UI for comparing model outputs. These enhancements help the model retain what it has learned while reducing the risk of overtraining. They also bring human insight into the development cycle by making it easier to evaluate model outputs.

A successful fine-tuning strategy depends on careful data preparation. The required format is JSONL (JSON Lines), in which each line of the file is a separate JSON object. For chat models, each line holds one example exchange: a system message, a user input, and the response you want from the chatbot. Together, these capture the kind of interaction you want to cultivate.
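Here is a sketch of producing that file in Node.js, following the chat format OpenAI documents for fine-tuning: one `messages` array per line. The sample questions and the `toJsonl` helper are hypothetical. `JSON.stringify` escapes newlines inside strings, so each example is guaranteed to stay on a single line.

```javascript
// Convert question/answer pairs into chat-format fine-tuning examples, one JSON object per line.
function toJsonl(examples, systemPrompt) {
  const lines = examples.map(({ question, answer }) =>
    JSON.stringify({
      messages: [
        { role: "system", content: systemPrompt },
        { role: "user", content: question },
        { role: "assistant", content: answer },
      ],
    })
  );
  return lines.join("\n") + "\n"; // trailing newline ends the last record
}

// Hypothetical training data; two examples keep the sketch short,
// but OpenAI requires at least 10 for a real fine-tuning job.
const examples = [
  { question: "What is 15% of 80?", answer: "15% of 80 is 0.15 * 80 = 12." },
  { question: "Simplify 6/8.", answer: "6/8 simplifies to 3/4." },
];

const jsonl = toJsonl(examples, "You are a concise math tutor.");
console.log(jsonl);

// With the official SDK (ES module), the file could then be written, uploaded, and used;
// the base model name is an assumption, so check which models currently support fine-tuning:
//   fs.writeFileSync("training.jsonl", jsonl);
//   const file = await client.files.create({ file: fs.createReadStream("training.jsonl"), purpose: "fine-tune" });
//   const job = await client.fineTuning.jobs.create({ training_file: file.id, model: "gpt-4o-mini-2024-07-18" });
```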

Developers and businesses are adopting fine-tuning rapidly, as the interest in GPT-3.5 Turbo shows: its fine-tuned versions demonstrate stronger task-specific capabilities. Custom models like these help keep sensitive data secure and proprietary while providing unique user experiences.

In the existing landscape defined by deterministic computing, it becomes crucial to understand the scope of probabilistic computing, or AI. Current AI models, while not perfect, serve as a pivotal foundation for future enhancements, where the emphasis won't solely be on power but also on efficiency and task-specific finesse. As we steer towards this future, the integration of third-party tools and extensive validation metrics signify a leap forward in fine-tuning technology.

Remember, while fine-tuning, it's not just about deploying sophisticated models but also about ensuring your fine-tuned model can demonstrate improved performance over the original base model by quantitatively assessing output quality.
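One simple way to quantify that comparison is to run both models on the same held-out examples and score them with one metric. This sketch assumes you have already collected each model's answers. The model labels `base` and `fineTuned` are hypothetical, and exact match is a stand-in metric. Tasks with free-form output usually need a more forgiving one.

```javascript
// Fraction of answers that match the expected output after trimming and lowercasing.
function exactMatchAccuracy(answers, expected) {
  const normalize = (s) => s.trim().toLowerCase();
  const hits = answers.filter((a, i) => normalize(a) === normalize(expected[i])).length;
  return hits / expected.length;
}

// Score every model on the same held-out set; `predictions` maps a model label to its answers.
function compareModels(predictions, expected) {
  const scores = {};
  for (const [model, answers] of Object.entries(predictions)) {
    if (answers.length !== expected.length) throw new Error(`${model}: wrong number of answers`);
    scores[model] = exactMatchAccuracy(answers, expected);
  }
  return scores;
}

const expected = ["12", "3/4", "7"];
const scores = compareModels(
  { base: ["12", "0.75", "7"], fineTuned: ["12", "3/4", "7"] },
  expected
);
console.log(scores);
```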

Common Errors and Troubleshooting

Integrating the OpenAI API in a Node.js environment has its challenges, notably around saving an assistant ID. Whether you obtain the ID through a graphical interface or programmatically, make sure you identify it correctly and assign it to the right variable in your code. Also maintain the thread for each session so the full dialogue between user and assistant, and with it the message context, is preserved. This matters most when the model processes the entire conversation history.

When errors arise, consistent and well-structured response formats make them much easier to diagnose. HTTP status codes are your primary indicator:

- 2xx means the request succeeded.
- 4xx points to a problem with the request itself, for example 401 for an invalid API key or 429 when you hit a rate limit or quota.
- 5xx indicates a server-side error that is often worth retrying.

The software architecture field, where external APIs have greatly increased productivity, offers methods you can borrow for swift troubleshooting and efficient error resolution.
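Status codes also tell you whether to retry. This sketch applies exponential backoff and retries only 429 and 5xx responses. It assumes errors carry an HTTP `status` property, as the official SDK's API errors do. `withRetries` and its defaults are hypothetical.

```javascript
// Retry on transient failures (429 rate limits and 5xx server errors) with exponential backoff;
// other errors, such as 401 for a bad key, are rethrown immediately.
const isRetryable = (status) => status === 429 || (status >= 500 && status < 600);

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

async function withRetries(fn, { retries = 3, baseDelayMs = 500 } = {}) {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      if (attempt >= retries || !isRetryable(err.status)) throw err;
      // With the default base: about 500 ms, 1 s, 2 s, plus random jitter
      // so many clients do not retry in lockstep.
      const delay = baseDelayMs * 2 ** attempt + Math.random() * baseDelayMs;
      await sleep(delay);
    }
  }
}
```

The official SDK already retries some of these failures on its own, and you can configure that through `maxRetries`. A wrapper like this matters most for raw `fetch` calls or when you need a custom policy.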

Software engineers who work across many different integrations reinforce this point: clear documentation and clear responses are what make problems quick to resolve.

The developer community keeps pushing for improvements, so a diligent approach to integration and troubleshooting is vital. One practical check is to run a thread with predefined models, messages, and function calls using your functionDefinitions array. This confirms that conversations stay coherent and responses are tailored as expected, moving you toward a more seamless integration and interaction flow.
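That check can run as a loop that keeps calling the model until it answers without requesting a function. This sketch assumes the official SDK's Chat Completions shapes. `runConversation`, the `handlers` map, and the turn limit are hypothetical. The limit keeps a model that repeatedly calls functions from looping forever.

```javascript
// Keep calling the model until it answers without requesting a function call.
// `client` follows the official SDK shape (client.chat.completions.create), and
// `handlers` maps each function name in the tools array to a local implementation.
async function runConversation(client, { model, messages, tools }, handlers, maxTurns = 5) {
  const history = [...messages];
  for (let turn = 0; turn < maxTurns; turn++) {
    const completion = await client.chat.completions.create({ model, messages: history, tools });
    const message = completion.choices[0].message;
    history.push(message); // keep the assistant turn, including any tool_calls, in context

    if (!message.tool_calls || message.tool_calls.length === 0) {
      return { answer: message.content, history };
    }

    // Run each requested function and append its result as a `tool` message.
    for (const call of message.tool_calls) {
      const handler = handlers[call.function.name];
      const result = handler
        ? await handler(JSON.parse(call.function.arguments || "{}"))
        : { error: `Unknown function: ${call.function.name}` };
      history.push({ role: "tool", tool_call_id: call.id, content: JSON.stringify(result) });
    }
  }
  throw new Error(`No final answer after ${maxTurns} turns`);
}
```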

Conclusion

In summary, integrating OpenAI's API with Node.js empowers developers to enhance efficiency and productivity in their applications. By following the prerequisites and setting up the development environment, developers can seamlessly integrate the OpenAI API. Installing the OpenAI NPM package enables the utilization of AI models and functions tailored to specific requirements.

Generating an API key is a crucial step in gaining access to the OpenAI API, and keeping that key secure must be a priority. Initializing the OpenAI SDK gives your code an authenticated client, and each request then specifies the model, messages, and functions your application relies on.

Once the OpenAI SDK is set up, developers can start sending API requests, unlocking a world of data. The OpenAI API offers capabilities such as text generation, summarization, translation, and fine-tuning for specific use cases. These capabilities have been utilized across various industries to create innovative solutions and boost productivity.

Effectively addressing common errors and troubleshooting is essential in the integration process. Consistent response formats and well-structured techniques help developers navigate challenges and resolve issues efficiently.

Ultimately, integrating OpenAI's API with Node.js lets developers harness the power of AI to build real-world applications. Text generation, summarization, translation, and fine-tuning help developers maximize efficiency and productivity. The integration also contributes to the growth and innovation of the developer community and keeps developers at the forefront of technological advancements.

Frequently Asked Questions

What is required to integrate OpenAI's API with Node.js?

To integrate OpenAI's API with Node.js, you need to obtain your OpenAI API key, set up a development environment, create a .env file to store the API key, and develop a Node.js file to contain your operational code.

How do I obtain my OpenAI API key?

Sign in to the OpenAI platform and create a key on the API keys page of your account; this step needs no code or libraries. Store the key securely, for example in a .env file that is never committed, to prevent unauthorized access.

What should I include in my Node.js file?

In your Node.js file, you should define parameters such as the AI model, conversational context, and available functions your application can execute, ensuring smooth interaction with the OpenAI API.

What is the purpose of creating a .env file?

The .env file keeps sensitive information, such as your API key, out of your source code. Add .env to your .gitignore file so it is never committed to version control. This helps prevent unauthorized access and keeps your application configuration private.

How do I install the OpenAI npm package?

You can install the OpenAI npm package by running the command npm install openai in your Node.js project to allow seamless communication between your application and the OpenAI API.

What does the configuration object in the OpenAI SDK include?

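Strictly speaking, the client is configured with your API key and optional settings such as timeouts and retries. Each request carries the parameters that shape the response: the AI model you intend to use, the message history that maintains context, and the list of functions (tools) available in your application.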

How can I send my first API request?

To send your first API request, configure your development tools and API settings, select the appropriate API service, and construct a clear and concise request that adheres to the data exchange protocols.

What are some practical applications of the OpenAI API?

The OpenAI API can be used for various applications, including text summarization, translation, and task automation, enhancing productivity and user experience in different sectors.

How can I implement text summarization using OpenAI's API?

You can implement text summarization by creating a script that calls the OpenAI API to condense lengthy articles into concise summaries, effectively managing and digesting information.

What are the benefits of using the OpenAI API for translation?

Using the OpenAI API for translation helps teams overcome language barriers, allowing for efficient content production and communication, ultimately enhancing productivity and operational effectiveness.

How does fine-tuning the OpenAI API work?

Fine-tuning further trains a base model on your own examples so its responses better fit specific tasks. It requires a well-prepared training dataset in JSONL format and a baseline to compare against. The baseline lets you confirm that the fine-tuned model actually performs better for your use cases.

What common errors should I be aware of when integrating the API?

Common issues include saving the assistant ID correctly, maintaining session threads so context is preserved, and interpreting HTTP status codes such as 401, 429, and 5xx errors when something goes wrong. Clear documentation and structured response formats make error resolution much faster.

How can I troubleshoot issues with the OpenAI API integration?

To troubleshoot issues, maintain a consistent response format, check HTTP status codes for successful requests, and utilize pre-defined models and messages to ensure coherent interactions.

What resources are available for further learning on this topic?

For further learning, explore the OpenAI documentation, community forums, and various coding tutorials that focus on integrating APIs with Node.js and practical examples of using the OpenAI API.