Introduction

Code optimization is a crucial aspect of software development, enabling developers to achieve maximum efficiency and productivity. By carefully analyzing the performance of their code through profiling, developers can identify areas of inefficiency and strategically target them for improvement. This iterative process of optimization is not a linear checklist but a creative journey that requires a tailored approach for each unique problem.

From choosing efficient algorithms and data structures to streamlining code for speed, optimizing memory usage, and reducing unnecessary computations, every aspect of code development plays a role in achieving optimal performance. Furthermore, utilizing built-in functions and libraries, caching frequently used data, minimizing function calls, understanding and optimizing cache efficiency, and using the right data types all contribute to the overall efficiency of the code. Database query optimization and knowing when to consider a complete code rewrite are also important considerations in achieving optimal performance.

By following best practices for maintaining optimized code and staying up to date with advances in programming languages and frameworks, developers can keep their code efficient and adaptable as technology changes. Code optimization is best treated as an ongoing process of continuous improvement rather than a one-time task.

The Importance of Profiling

Profiling serves as the compass in the journey of program optimization. It's a systematic procedure that examines the execution of your program, identifying inefficiencies that could be slowing down its speed and consuming resources. The insights gleaned from profiling aren't just numbers; they're a map that guides you to the specific areas in need of refinement. With this knowledge, you're empowered to strategically target and enhance the parts of your code that will yield the most significant performance gains.
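As a minimal sketch of what profiling looks like in practice, the example below uses Python's standard `cProfile` and `pstats` modules. The functions `slow_sum_of_squares` and `workload` are hypothetical stand-ins for real application code, not anything taken from a specific project.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Deliberately naive: builds a full list before summing it."""
    squares = [i * i for i in range(n)]
    return sum(squares)


def workload():
    """Hypothetical workload standing in for real application code."""
    return [slow_sum_of_squares(20_000) for _ in range(20)]


def profile_workload():
    """Run workload() under cProfile and return (result, report text)."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = workload()
    profiler.disable()

    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time so the most expensive call paths come first.
    stats.sort_stats("cumulative").print_stats(10)
    return result, buffer.getvalue()


result, report = profile_workload()
print(report)
```

The report lists each function with its call count and time, which is usually enough to decide where to look first. The exact numbers depend on the machine, so they are best compared between runs rather than read in isolation.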

For example, consider a case in which initial optimization efforts produced an 8x speed increase, with further enhancements expected to raise this to as much as 100x in certain situations. This shows that improving performance is far from a linear checklist; it is a creative, iterative process that combines software design with knowledge of computer architecture. Each problem is distinct and requires its own set of improvement methods.

Emphasizing the iterative nature of optimization, Nikhil Benesch, CTO at Materialize, highlighted the importance of tools like Polar Signals Cloud in the software development lifecycle. These tools have revolutionized profiling by leveraging technologies like eBPF, offering an unobtrusive, no-code-change solution that's compatible with a broad array of programming languages. The result? A swift and secure way to ascertain where server resources are allocated, all the way down to the line of code, transforming a process that traditionally spanned days into a matter of seconds.

Additionally, comprehending fundamental processes such as Garbage Collection is crucial in guaranteeing memory is effectively reclaimed, and unused memory does not persist, potentially destabilizing system efficiency. As we dissect the intricacies of memory management, we grasp how pivotal this silent process is to the seamless operation of our applications.

Performance evaluation, as highlighted by industry insights, is not just about maintaining a product's performance; it's about deepening our understanding of system behavior, limitations, and potential areas for improvement. It's a tool that not only enhances the system at hand but also enriches the developer's intuition, leading to the creation of superior systems in the future.

Essentially, the art of code improvement is not attained through a one-size-fits-all formula; it necessitates a watchful approach of 'measure, measure, measure.' This mantra ensures that changes lead to genuine advancements and that the journey of optimization, although potentially endless, is one of continuous enhancement and learning.

Choosing Efficient Algorithms and Data Structures

Selecting the appropriate algorithms and data structures is a cornerstone of high-performance coding, with profound impacts on execution speed and memory efficiency. An astute selection, guided by a clear comprehension of the program's requirements, can result in significant enhancements. For instance, embracing Single Instruction Multiple Data (SIMD) techniques can markedly expedite operations, as demonstrated in projects like OpenRCT2, where performance is paramount. Achieving optimal performance is a subtle skill, necessitating ongoing evaluation and profiling to guarantee authentic improvement of the code's efficiency.

Time and space complexity considerations are crucial for anticipating how an algorithm will scale with data size. A quintessential example is Rasmus Kyng's superfast network flow algorithm, which revolutionized computation speed for network improvement, showcasing that precise algorithmic innovations can leapfrog decades of established practices. Similarly, in coding challenges like determining the distinct ways to climb a staircase, both simplistic brute-force approaches and intricate algorithms reveal the undeniable importance of methodical evaluation. The wisdom 'make it work, make it right, then make it fast' encapsulates the iterative nature of optimization, underscoring the need for a balance between functionality, correctness, and performance.
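The staircase problem mentioned above can be sketched in two ways. The version below assumes the common formulation in which each move climbs either one or two steps; the source does not state the rules, so treat that as an assumption. The function names are illustrative.

```python
def climb_brute_force(n):
    """Count the ways to climb n steps by trying every sequence of moves.

    Exponential time: the same subproblems are recomputed many times.
    """
    if n <= 1:
        return 1
    return climb_brute_force(n - 1) + climb_brute_force(n - 2)


def climb_iterative(n):
    """Same count in linear time and constant space.

    Only the counts for the previous two stair heights are kept.
    """
    prev, curr = 1, 1  # ways to reach step 0 and step 1
    for _ in range(n - 1):
        prev, curr = curr, prev + curr
    return curr


print(climb_brute_force(10), climb_iterative(10))
```

Both functions return the same answers, but the brute-force version becomes impractical for larger inputs, while the iterative one scales linearly with `n`. This is the kind of difference that complexity analysis predicts before any profiling is done.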

Furthermore, AI pair-programming tools such as GitHub Copilot are changing how code is written and completed. These tools can improve developer efficiency, but choosing a data structure still depends on understanding the problem. As Micha Gorelick and Ian Ozsvald emphasize in 'High Performance Python', aligning data structures with the particular queries made on the data can greatly improve results. The process of improving is ongoing, and with each step developers sharpen their judgment, enabling them to build increasingly sophisticated and efficient systems.

Optimizing Loops and Minimizing Redundant Operations

Loop optimization is a powerful technique for enhancing code performance. With techniques such as loop unrolling, developers process several elements within a single loop iteration, reducing the total number of iterations and the overhead of the loop itself. Combined with other tailored optimizations, such changes have been reported to speed up execution by 8x, or even up to 100x in some scenarios. The key to successful tuning is adapting the strategy to the distinct challenges each problem presents rather than relying on a universal approach. Measuring and profiling are crucial for determining whether a change leads to actual improvement, as not every attempt will yield positive results. The process often involves experimentation and learning from mistakes: some improvements offer diminishing returns, while others require a complete change in strategy to achieve significant gains. In CPU optimization, choosing effective algorithms plays a vital role, including in languages like C#, where it can improve application efficiency and reduce energy usage, a significant concern for mobile and web platforms.
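One common form of redundant work is a value that is recomputed on every iteration even though it never changes. The sketch below, with hypothetical function names, moves such a loop-invariant computation out of the loop. It illustrates the general idea and is not taken from any project named above.

```python
import math


def normalize_naive(values):
    """Divide each value by the vector norm, recomputing the norm each time."""
    result = []
    for v in values:
        # The norm does not depend on v, yet it is recomputed on every
        # iteration, which makes the whole function quadratic.
        norm = math.sqrt(sum(x * x for x in values))
        result.append(v / norm)
    return result


def normalize_hoisted(values):
    """Same result, with the invariant computation done once."""
    norm = math.sqrt(sum(x * x for x in values))
    return [v / norm for v in values]


print(normalize_hoisted([3.0, 4.0]))
```

Both versions return identical results, so the change is safe to verify with a simple equality check before measuring the difference in running time.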

Efficiency enthusiasts understand that refactoring is a vital part of maintaining and enhancing performance of the software. Programming issues such as duplicated code, overly complex methods, and bloated classes indicate the requirement for refactoring. By identifying these indicators and taking the required measures to refactor the software, developers can guarantee that their software remains optimized, maintainable, and efficient. Performance evaluation is a continuous process that benefits not only the system in question but also the developer's expertise, leading to the creation of better systems in the future. It's important to remember that the journey of optimization is never complete, as there's always room for improvement, whether it's in the fine-tuning of existing solutions or the exploration of new and innovative techniques.

Streamlining Code for Speed

Enhancing the efficiency of the program is not only about improving its speed; it is a meticulous procedure of enhancing how the program operates under the hood. This often involves pruning redundant elements and clarifying complex logic. For instance, as engineers at Check Technologies have illustrated, data-informed decision-making is key. They depend on their Data Platform for various analytics tasks, which requires efficient programming to handle large amounts of information rapidly. Similarly, in the realm of SIMD (Single Instruction Multiple Data), developers have successfully accelerated tasks by processing multiple data items simultaneously, showcasing the importance of adopting such approaches for performance gains.

Furthermore, improvements in technology consistently elevate the standard for program efficiency. A recent breakthrough by scientists, including Swinburne University of Technology's Professor David Moss, has led to the development of an ultra-high-speed signal processor capable of handling 400,000 real-time video images concurrently, operating at 17 Terabits/s. This innovation highlights the potential impact of optimized programming on fields such as AI, machine learning, and robotic vision, where speed and efficiency are crucial.

Refactoring software is integral to maintaining its health, as highlighted by Eleftheria, a seasoned Business Analyst. It involves reworking the internal structure without altering the external functionality, aiming to make the programming more maintainable and performance-friendly. This aligns with the software adage to 'make it work, make it right, then optimize it,' which emphasizes the progressive stages of development focused on functionality, correctness, and finally, efficiency.

When optimizing the efficiency of a program, it is crucial to acknowledge the constraints outlined by Amdahl's Law, which governs that the acceleration of a program is limited by its non-parallelizable sections. This emphasizes the need to focus on and optimize these crucial sections for overall improvement. AI pair-programming tools like GitHub Copilot have demonstrated significant productivity improvements across developer skill levels, by offering AI-driven suggestions that reduce cognitive load and task time while enhancing the quality of the code.
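Amdahl's Law can be written as speedup = 1 / ((1 - p) + p / n), where p is the fraction of the run time that can be parallelized and n is the number of parallel workers. The small helper below evaluates that formula; the function names are illustrative.

```python
def amdahl_speedup(parallel_fraction, workers):
    """Theoretical speedup for a program under Amdahl's Law.

    parallel_fraction: share of run time that can be parallelized (0 to 1).
    workers: number of parallel processing units (at least 1).
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


def amdahl_limit(parallel_fraction):
    """Upper bound on speedup as the number of workers grows without limit."""
    return float("inf") if parallel_fraction == 1.0 else 1.0 / (1.0 - parallel_fraction)


# With 90% of the work parallelizable, 8 workers give well under 8x.
print(round(amdahl_speedup(0.9, 8), 2), round(amdahl_limit(0.9), 2))
```

With 90% of the work parallelizable, eight workers yield a speedup of roughly 4.7x, and no number of workers can exceed 10x. The remaining serial 10% sets the ceiling, which is why it deserves attention.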

In essence, to achieve the best execution times, one must diligently review and refine their code, prioritizing areas where streamlining will most effectively enhance the overall effectiveness of the code. This not only results in faster execution but also contributes to the creation of robust, maintainable software that can adapt to the evolving technological landscape.

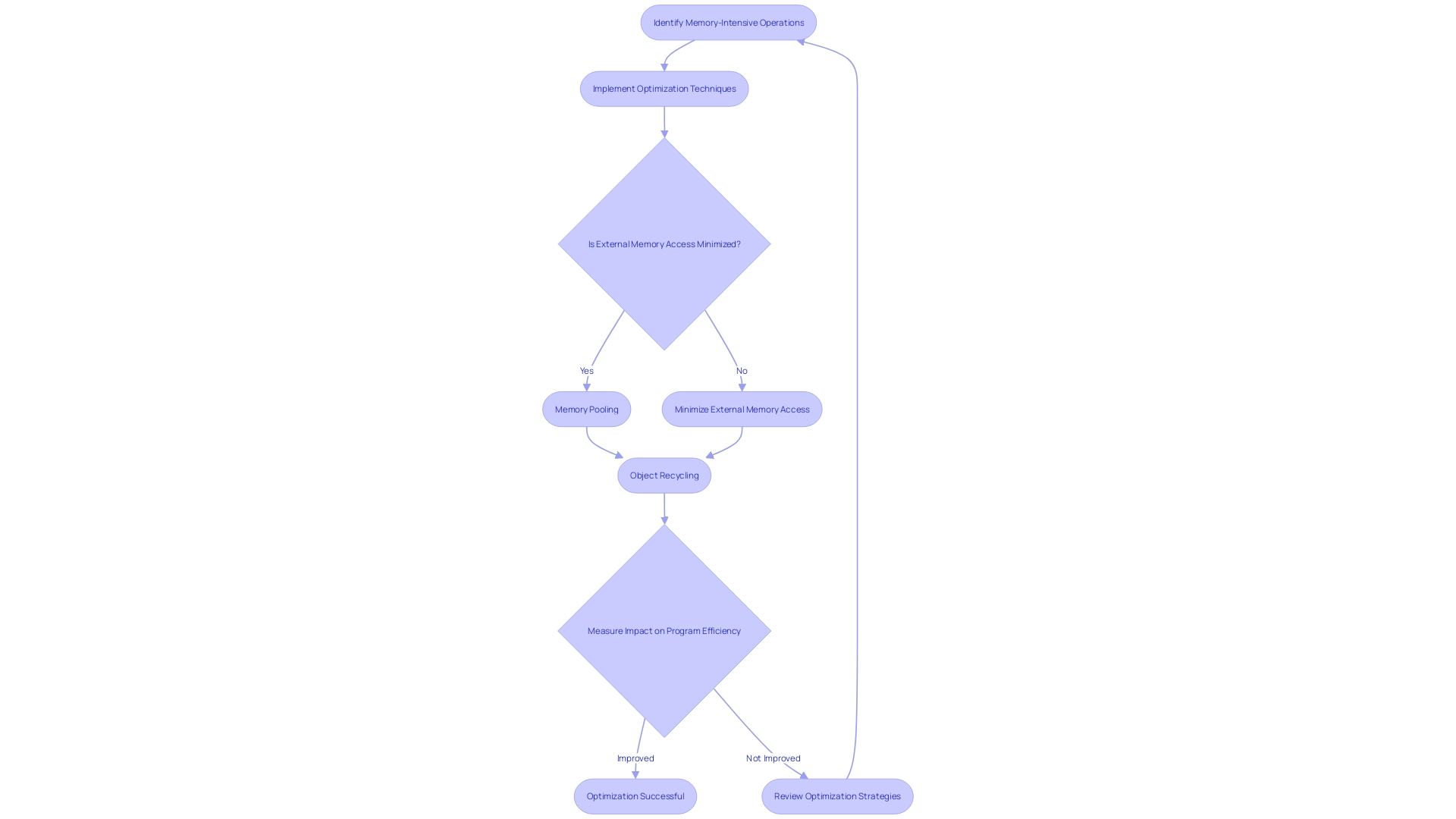

Optimizing Memory Usage and Reducing External Memory Access

Optimizing memory usage isn't just about trimming the memory footprint; it's a vital strategy for accelerating the overall performance of your applications. With the rise of memory-intensive operations in modern data centers, such as memcpy(), memmove(), hashing, and compression, efficient memory usage matters more than ever. These operations are not limited to database, image processing, or video transport applications; they extend to the core of a data center's infrastructure, where they consume a substantial share of CPU cycles, a phenomenon often referred to as the "data center tax." Techniques such as memory pooling, object recycling, and minimizing external memory access address this problem and can make a significant difference to your program's efficiency.
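To illustrate memory pooling and object recycling, here is a minimal sketch of a buffer pool. The class name `BufferPool` and its methods are hypothetical. A production pool would also need thread safety and a policy for buffers that callers resize, both of which are omitted here.

```python
class BufferPool:
    """Hand out reusable bytearray buffers instead of allocating new ones."""

    def __init__(self, buffer_size, max_buffers):
        self._buffer_size = buffer_size
        self._max_buffers = max_buffers
        self._free = []          # buffers waiting to be reused
        self.allocations = 0     # how many buffers were actually created

    def acquire(self):
        """Return a recycled buffer if one is available, else allocate."""
        if self._free:
            return self._free.pop()
        self.allocations += 1
        return bytearray(self._buffer_size)

    def release(self, buf):
        """Return a buffer to the pool, clearing its contents first."""
        if len(self._free) < self._max_buffers:
            buf[:] = bytes(self._buffer_size)  # reset to zeros
            self._free.append(buf)
        # Otherwise the buffer is simply dropped and garbage collected.


pool = BufferPool(buffer_size=1024, max_buffers=4)
first = pool.acquire()
first[0] = 255
pool.release(first)
second = pool.acquire()   # the same object, recycled and zeroed
print(second is first, second[0], pool.allocations)
```

Whether a pool helps depends on how expensive allocation is relative to the work done with each buffer, so it is worth profiling before and after introducing one.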

For instance, scalar replacement is a sophisticated technique employed in OpenJDK's Just-In-Time compiler, C2, which breaks down intricate objects into simpler scalar variables, resulting in more efficient code execution. This approach, along with other specific enhancements detailed in a three-part blog series, showcases the tangible improvements made to Java application performance.

However, it's not just about high-level optimizations; issues such as memory fragmentation from frequent system allocator calls and cache misses due to pointer dereferencing can slow down long-running systems, making them less responsive. Tackling these challenges requires creative solutions like modifying the structures used, such as transitioning from a vector of pointers to more efficient alternatives. By doing so, we can significantly reduce the impact of inefficient memory usage, from slowing down data manipulation to causing sluggish processing of large datasets.

As we continue to push the boundaries of what's possible with memory optimization, comments and community insights remain invaluable. Developers are encouraged to engage and share their experiences, contributing to the collective knowledge and driving forward the pursuit of optimal performance in our systems.

Utilizing Built-In Functions and Libraries

Utilizing the inherent functionalities of programming languages is a strategic method for optimizing the execution of software. Features like closures, which encapsulate a function along with its lexical environment, present a powerful pattern for maintaining state across function invocations. Function composition, another cornerstone of functional programming, enables developers to create complex operations by combining simpler ones, leading to more maintainable software. Similarly, event delegation leverages event bubbling to handle inputs at a higher level in the DOM hierarchy, thus minimizing the number of event listeners and enhancing efficiency.
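Closures and function composition are available in many languages. The sketch below shows both in Python with illustrative helper names: `make_counter` keeps state in its enclosing scope, and `compose` builds a new function from simpler ones.

```python
def make_counter():
    """Return a function that remembers how many times it was called."""
    count = 0

    def increment():
        nonlocal count  # refers to the variable in the enclosing scope
        count += 1
        return count

    return increment


def compose(*funcs):
    """Combine functions right to left: compose(f, g)(x) == f(g(x))."""

    def composed(value):
        for func in reversed(funcs):
            value = func(value)
        return value

    return composed


counter = make_counter()
counter()
print(counter())            # second call returns 2

absolute_as_text = compose(str, abs)
print(absolute_as_text(-3))  # abs first, then str
```

Each counter created by `make_counter` carries its own private state, so no global variable or class is needed to track the count.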

The abundance of scripting languages like JavaScript has introduced approaches like the spread syntax for combining arrays or objects, which simplifies the process and can decrease execution time. Modern web development also benefits from asynchronous features such as web workers, allowing resource-intensive tasks to run in the background without impacting the user experience. These are just a few examples of how modern languages offer built-in functions that, when effectively employed, can significantly enhance program performance and application efficiency.

Energy efficiency in programming has gained prominence, with studies showing that even a 1% savings in data center energy use can be substantial. This is where the utilization of built-in libraries and functions becomes not just a matter of program efficiency, but also of environmental responsibility. As new languages emerge with a focus on optimization, it's clear that the trade-offs between convenience and control are pivotal in the decision-making process for software development teams.

To illustrate, consider the impact of AI pair-programming tools which leverage large language models to provide context-relevant code completions, demonstrating considerable productivity gains across the board. When developers utilize these advanced tools and built-in language features, they not only streamline their workflow but also contribute to more energy-efficient computing, aligning with the broader goals of sustainable technology practices.

Caching Frequently Used Data

Caching is a crucial technique for enhancing an application's efficiency, and it appears everywhere, from front-end and back-end systems to CPUs and everyday devices. It involves temporarily storing frequently accessed data in a cache so that the full request process does not need to be repeated for the same information. This can dramatically reduce expensive computations and slow external memory accesses, leading to shorter execution times.

In web applications, caching takes many forms, from function-level to full-page caching, each serving a different purpose. By examining the code to identify where caching is most beneficial, developers can achieve tangible improvements in system efficiency. Caching also needs care: cache invalidation is frequently joked about as one of the hardest problems in software development, yet it is crucial for preserving the integrity and efficiency of a caching system. As experts point out, attention to such 'little things' in development, along with consistent formatting and clear naming conventions, can lead to notable improvements in the overall system.

AI pair-programming tools like GitHub Copilot are also changing developer productivity by offering context-aware completions and reducing cognitive load. By combining these tools with sound caching practices, developers can build faster and more reliable applications and deliver a smooth user experience.

Minimizing Function Calls and Optimizing Function Structure

Optimizing function calls is a crucial method for improving program performance. When a function is invoked many times, each call adds a small overhead, and these overheads accumulate over the run. To reduce this cost, consider techniques like inlining, which embeds a function's implementation within its caller and eliminates the call overhead. Function pointers offer a more flexible call mechanism, although the indirection they add can prevent inlining, so their effect should be measured rather than assumed. It's also worth re-evaluating the logic that makes multiple calls necessary; sometimes an algorithmic overhaul significantly reduces the number of calls needed. A case in point is an optimization that yielded an 8-fold speed increase over a basic implementation, demonstrating the power of thoughtful software design and an intimate understanding of computer architecture.
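The effect of call overhead is easiest to see in an interpreted language. In the sketch below, with hypothetical function names, the first version calls a tiny helper once per element, while the second performs the same arithmetic inline. It shows the general idea and is not the specific optimization mentioned above.

```python
def scale(value, factor):
    """Tiny helper: the call itself costs more than the multiplication."""
    return value * factor


def scale_each(values, factor):
    """One function call per element."""
    return [scale(v, factor) for v in values]


def scale_inlined(values, factor):
    """Same arithmetic with the helper's body written inline."""
    return [v * factor for v in values]


data = list(range(10))
print(scale_each(data, 3) == scale_inlined(data, 3))
```

Compiled languages often perform this inlining automatically, so the manual version mainly pays off in hot loops where a profiler shows the call overhead to be significant.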

Code refactoring plays a pivotal role in this process. It involves restructuring the programming instructions to make them cleaner and more efficient without altering their external behavior. Identifying 'smells' such as duplicated code or unnecessarily complex methods is the first step towards refactoring. For instance, if the same code exists in multiple locations, abstracting it into a single function can reduce redundancy and facilitate future maintenance. As the software evolves, continuous measurement and profiling are essential to validate the effectiveness of any changes made. Keep in mind, the process of maximizing efficiency is not a one-size-fits-all recipe; it requires a tailored approach for each unique challenge.

Recent research highlighted at the IEEE/ACM International Symposium on Microarchitecture explored advanced techniques like leveraging tensor sparsity in machine learning models. By pruning unneeded elements and adhering to certain sparsity patterns, researchers achieved more efficient computation, underscoring the innovative directions in which code enhancement is heading. While improving your own programming, it's crucial to stay updated about such advancements that could provide new optimization opportunities.

Ultimately, optimizing function calls is a continuous journey of exploration and refinement. It requires a blend of strategic changes, regular evaluation, and an openness to adopting new methodologies as they emerge in the field.

Understanding and Optimizing Cache Efficiency

Optimizing code for cache efficiency is a critical aspect of software performance. When a program stores its objects behind an array of pointers, as in polymorphic object collections, each object typically requires its own call to the system allocator, which increases memory fragmentation. This fragmentation can degrade the responsiveness of long-running systems. More critically, dereferencing the pointers often triggers cache misses because the objects sit at unpredictable locations in memory, as highlighted by experts at Johnny's Software Lab LLC.

To address these concerns, structures should be designed to ensure that elements accessed together are stored close together in memory. This practice aligns with the principle that 'everything that is accessed together should be stored together,' minimizing the workload on the memory subsystem. Methods such as cache blocking and loop reordering are used to restructure information and computations in a manner that corresponds with the cache's line organization, thereby enhancing the hit rate. Alignment also plays a crucial part in enhancing cache efficiency, as it ensures that the structure's layout is conducive to the cache's architecture.
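The idea of storing together what is accessed together can be sketched by comparing a list of objects with a column-oriented layout. The example uses Python's standard `array` module, which keeps values as contiguous machine-level doubles. The class names are hypothetical, and interpreter overhead hides much of the cache effect in Python, so read this as an illustration of the layout rather than a benchmark.

```python
from array import array


class Particle:
    """Array-of-objects layout: each particle is a separate heap object."""
    __slots__ = ("x", "y", "mass")

    def __init__(self, x, y, mass):
        self.x = x
        self.y = y
        self.mass = mass


def total_mass_objects(particles):
    """Follows one reference per particle to reach its mass."""
    return sum(p.mass for p in particles)


class ParticleColumns:
    """Structure-of-arrays layout: one contiguous array per field."""

    def __init__(self):
        self.x = array("d")
        self.y = array("d")
        self.mass = array("d")

    def add(self, x, y, mass):
        self.x.append(x)
        self.y.append(y)
        self.mass.append(mass)

    def total_mass(self):
        """Reads one contiguous block of doubles and nothing else."""
        return sum(self.mass)


objects = [Particle(float(i), float(i), 2.0) for i in range(100)]
columns = ParticleColumns()
for p in objects:
    columns.add(p.x, p.y, p.mass)

print(total_mass_objects(objects), columns.total_mass())
```

A loop that only needs the masses touches a single dense array in the column layout, whereas the object layout pulls in every particle's other fields along the way.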

Research has indicated that a simple change to the container that holds the data can have a significant impact on cache efficiency. By avoiding excessive pointer dereferencing and organizing data to match the cache line layout, software can achieve significant speed-ups. This is especially important in systems where efficiency is paramount. Looking at the layers of CPU caches, from the fast L1 cache to the larger levels behind it, it becomes apparent that their role in modern computing is indispensable. They are the quiet workhorses that let CPUs access data at high speed, supporting both software and systems.

In the domain of optimization, the saying 'make it work, make it right, then make it fast' serves as a guiding principle. It emphasizes the significance of initially guaranteeing functionality and correctness before concentrating on enhancements. Thorough evaluations, as advocated in both academia and industry, offer insights into a system's behavior and limitations, resulting in enhanced design and superior outcomes in future iterations.

In conclusion, understanding and leveraging cache design and optimizations is fundamental for developers seeking to refine the execution speed and efficiency of their software. It's not only about composing software; it's about designing it with a keen understanding of the underlying machinery to extract the maximum efficiency.

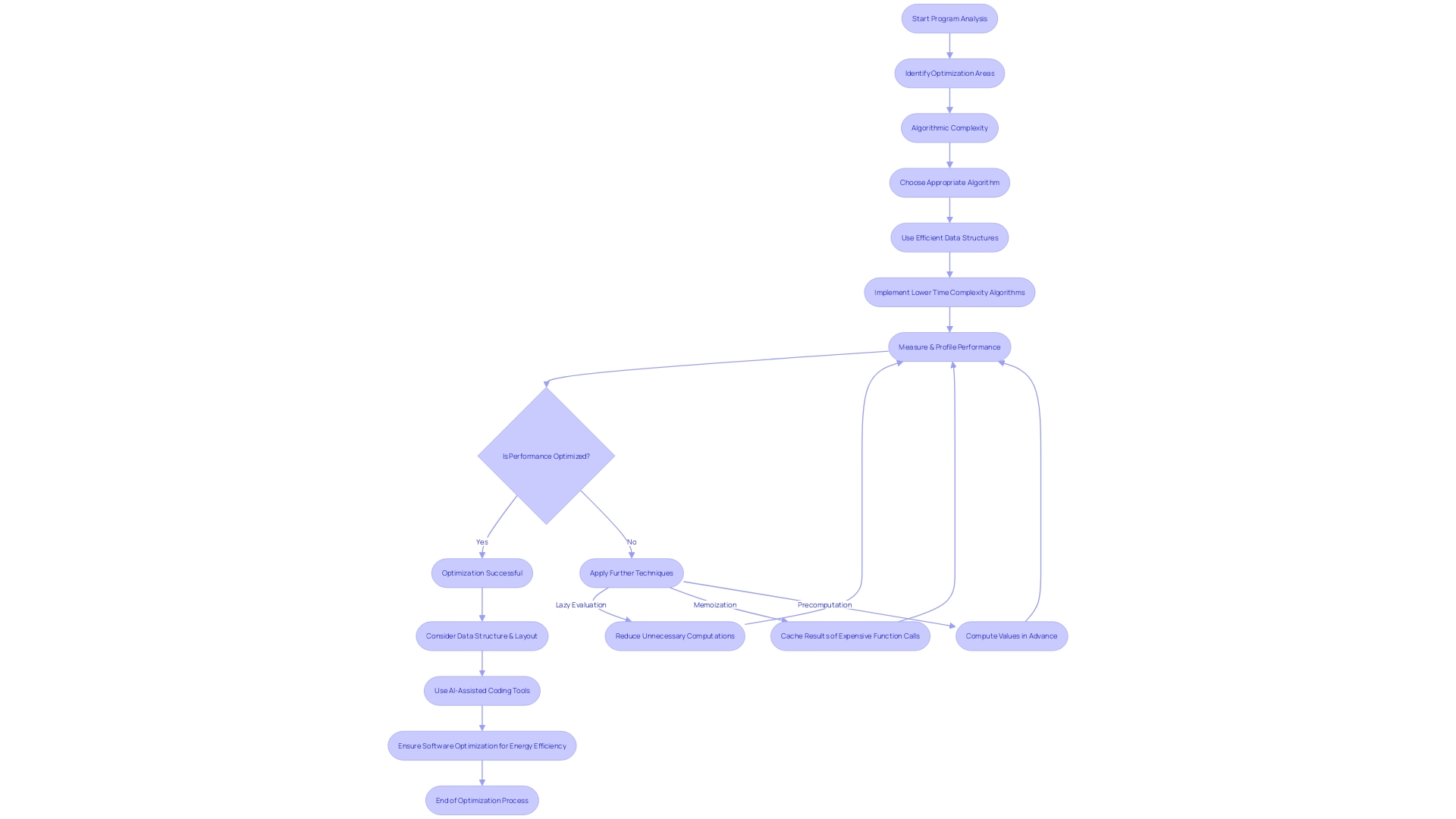

Avoiding Unnecessary Computations

Getting rid of unnecessary computations is crucial for enhancing performance in programming. By analyzing your program to identify where computations can be optimized or omitted, you pave the way for faster execution times. Employing smart techniques like lazy evaluation, memoization, and precomputation can be transformative, purging surplus calculations and boosting efficiency. It's a nuanced art form, where understanding your program's demands and judiciously applying these methods is key to avoiding superfluous computational work.
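Two of the techniques named above, lazy evaluation and precomputation, can be sketched briefly. The generator `squares` produces values only when asked, and `BIT_COUNTS` is a lookup table built once and then reused. All names are illustrative.

```python
from itertools import islice


def squares():
    """Lazy evaluation: yields square numbers one at a time, on demand."""
    n = 0
    while True:
        yield n * n
        n += 1


# Only the five requested values are ever computed.
first_five = list(islice(squares(), 5))

# Precomputation: the number of set bits for every possible byte value,
# computed once at import time.
BIT_COUNTS = [bin(i).count("1") for i in range(256)]


def popcount(data):
    """Count set bits in a bytes object using the precomputed table."""
    return sum(BIT_COUNTS[byte] for byte in data)


print(first_five, popcount(b"\xff\x01"))
```

The lookup table trades a small amount of memory for avoiding the same bit-counting work on every call, which is the usual bargain behind precomputation.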

For example, programmers utilizing SIMD (Single Instruction Multiple Data) have witnessed its transformation of tasks by simultaneously processing multiple elements. Achieving such efficiency necessitates a deep comprehension of data layouts and access patterns, as demonstrated by the OpenRCT2 project's experience with performance-focused code improvements.

When optimizing, remember that it is a continuous journey rather than a finite task. It requires continuous measuring and profiling to confirm that changes truly improve results, as there is no universal solution. At times, what looks like a promising speed-up turns out to be a dead end; success in optimization usually comes from skill, practice, and sometimes serendipity.

Furthermore, the structure and layout of data are also essential, as they directly affect performance. The principle that 'what is accessed together should be stored together' reduces the work needed to fetch data from memory, promoting a smoother, more responsive system. As developers at Johnny's Software Lab LLC affirm, improving the data structure can significantly speed up data access and modification.

In the realm of AI-assisted coding, tools like GitHub Copilot have demonstrated substantial productivity boosts for developers of all expertise levels. AI suggestions during programming can positively impact various productivity facets, from reducing task times to enhancing script quality and lowering cognitive load.

In the end, optimizing software execution is about energy efficiency as well as speed. With servers in data centers contributing to over one percent of global power usage, efficient programming can lead to less hardware strain and a lower energy footprint, aligning with the broader goals of sustainability.

Using the Right Data Types

Enhancing your code for efficiency goes beyond mere tweaks; it means selecting the right data types and structures and understanding their implications for memory and processing speed. For instance, if fractional precision isn't needed, favoring integers over floating-point numbers can speed up execution on some workloads. This is not simply a matter of selecting the fastest type; it's about aligning your types with the operations they'll undergo, much as SIMD (Single Instruction Multiple Data) gains its speed by performing identical operations across arrays of uniform elements. This principle was applied in the OpenRCT2 project, where a switch to SIMD led to notable improvements in speed.
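One way to match a type to its operations is to store money as integer cents rather than as floating-point amounts. The sketch below is a hypothetical illustration of that choice. It focuses on exactness rather than raw speed, and `format_cents` assumes non-negative amounts.

```python
def total_cents(prices_in_cents):
    """Sum prices stored as whole cents; integer addition is exact."""
    return sum(prices_in_cents)


def format_cents(cents):
    """Render a non-negative cent amount as a decimal string."""
    return f"{cents // 100}.{cents % 100:02d}"


# Binary floating point cannot represent 0.10 or 0.20 exactly,
# so their sum is not exactly 0.30.
float_total = 0.10 + 0.20
int_total = total_cents([10, 20])

print(float_total == 0.30, int_total == 30, format_cents(int_total))
```

The integer version gives the same answer on every platform and needs no rounding step, which also makes equality comparisons and aggregation simpler.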

Data types fundamentally represent various forms of information from simple numerics to complex multimedia, and each has its role. Take, for example, tracking numbers used by shipping companies: long integers are employed to handle the sheer volume of unique identifiers efficiently. Similarly, when you're dealing with data interchange, especially in web development, JSON's role is pivotal. However, as crucial as it is, JSON must be managed wisely to avoid performance pitfalls.

Improving performance is rarely achieved with a single solution; it requires an ongoing process of measurement and refinement. It also requires a deep understanding of how different data structures behave in terms of time and space complexity, and of how mutable or immutable structures affect the efficiency of your program. The skill lies in carefully analyzing your code, evaluating the effect of every modification, and identifying the areas where effort will produce significant gains. Remember, each problem may require a unique approach to optimization, as there's no universal recipe for success.

Database Query Optimization

Optimizing database queries is a powerful lever for enhancing code performance. Effective techniques, such as indexing, can drastically cut down on execution times, transforming the responsiveness of your application. It's like streamlining a busy intersection with traffic lights, where proper indexing acts as a green light, swiftly guiding information flow. Query optimization is not just an exercise in technical refinement but a strategic endeavor that demands comprehensive understanding of your information architecture. This approach mirrors the meticulous design of a rideshare app's database, where the relationship between drivers, trips, and riders is crafted for peak efficiency.
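The effect of an index can be observed with Python's built-in `sqlite3` module and SQLite's `EXPLAIN QUERY PLAN`. The `trips` table below is a hypothetical, simplified version of the rideshare example. The exact wording of the plan differs between SQLite versions, so the sketch only checks whether the index name appears.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE trips (id INTEGER PRIMARY KEY, driver_id INTEGER, fare REAL)"
)
conn.executemany(
    "INSERT INTO trips (driver_id, fare) VALUES (?, ?)",
    [(i % 100, 10.0 + i % 7) for i in range(10_000)],
)


def query_plan(connection, sql, params=()):
    """Return SQLite's description of how it would execute the query."""
    rows = connection.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The human-readable detail is the last column of each row.
    return " | ".join(row[-1] for row in rows)


QUERY = "SELECT id, fare FROM trips WHERE driver_id = ?"

plan_before = query_plan(conn, QUERY, (42,))   # full table scan
conn.execute("CREATE INDEX idx_trips_driver ON trips (driver_id)")
plan_after = query_plan(conn, QUERY, (42,))    # search using the index

print(plan_before)
print(plan_after)
```

Before the index exists, SQLite has to scan every row to find one driver's trips. Afterwards it can jump directly to the matching entries, at the cost of extra storage and slightly slower writes.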

Furthermore, take into account a PDF search application utilized by insurance firms to handle information. The intricate dance of parsing through reams of text to find a specific piece of information is a testament to well-tuned queries and schemas. This level of precision in retrieving information is crucial for making informed decisions quickly.

Exploring further into database configurations provides insights into how databases like PostgreSQL manage information internally. Understanding these mechanics is essential, as a superficial increase in resources only delays inevitable issues, leading to technical debt. Instead, adopting smart optimization techniques can significantly reduce resource requirements while maintaining, or even improving, database performance.

For example, pre-staging aggregated information from multiple large tables into a single table can save significant processing time. It's a proactive move that benefits the entire organization by eliminating redundant work. When feasible, leveraging in-memory tables can further accelerate data access, making the process as swift as retrieving a toy from a well-organized lego set, rather than rummaging through a mixed pile for that one elusive piece.
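Pre-staging aggregates can be sketched as a summary table that is built once from the larger source tables. The schema below (`drivers`, `trips`, `driver_totals`) is hypothetical and uses SQLite through Python's standard `sqlite3` module. How and when the summary is refreshed is left out.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE drivers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE trips (id INTEGER PRIMARY KEY, driver_id INTEGER, fare REAL);

    INSERT INTO drivers VALUES (1, 'Ada'), (2, 'Lin');
    INSERT INTO trips (driver_id, fare) VALUES (1, 10.0), (1, 15.0), (2, 7.5);

    -- Pre-staged aggregate: the join and GROUP BY run once, here,
    -- instead of in every report that needs these totals.
    CREATE TABLE driver_totals AS
        SELECT d.id AS driver_id,
               d.name AS name,
               COUNT(t.id) AS trip_count,
               SUM(t.fare) AS total_fare
        FROM drivers d
        JOIN trips t ON t.driver_id = d.id
        GROUP BY d.id, d.name;
    """
)

# Readers query the small summary table directly.
rows = conn.execute(
    "SELECT name, trip_count, total_fare FROM driver_totals ORDER BY driver_id"
).fetchall()
print(rows)
```

The trade-off is freshness: the summary reflects the data as of the last rebuild, so it suits reports that tolerate some delay better than queries that need up-to-the-second figures.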

The journey towards database efficiency is ongoing, punctuated by continuous monitoring and iterative improvements. It's a combination of art and science, necessitating a harmony between thorough understanding of your database's distinctive attributes and a sharp eye for the broader context of system function. Such a holistic approach ensures a robust, scalable, and efficient database environment that stands the test of time and scale.

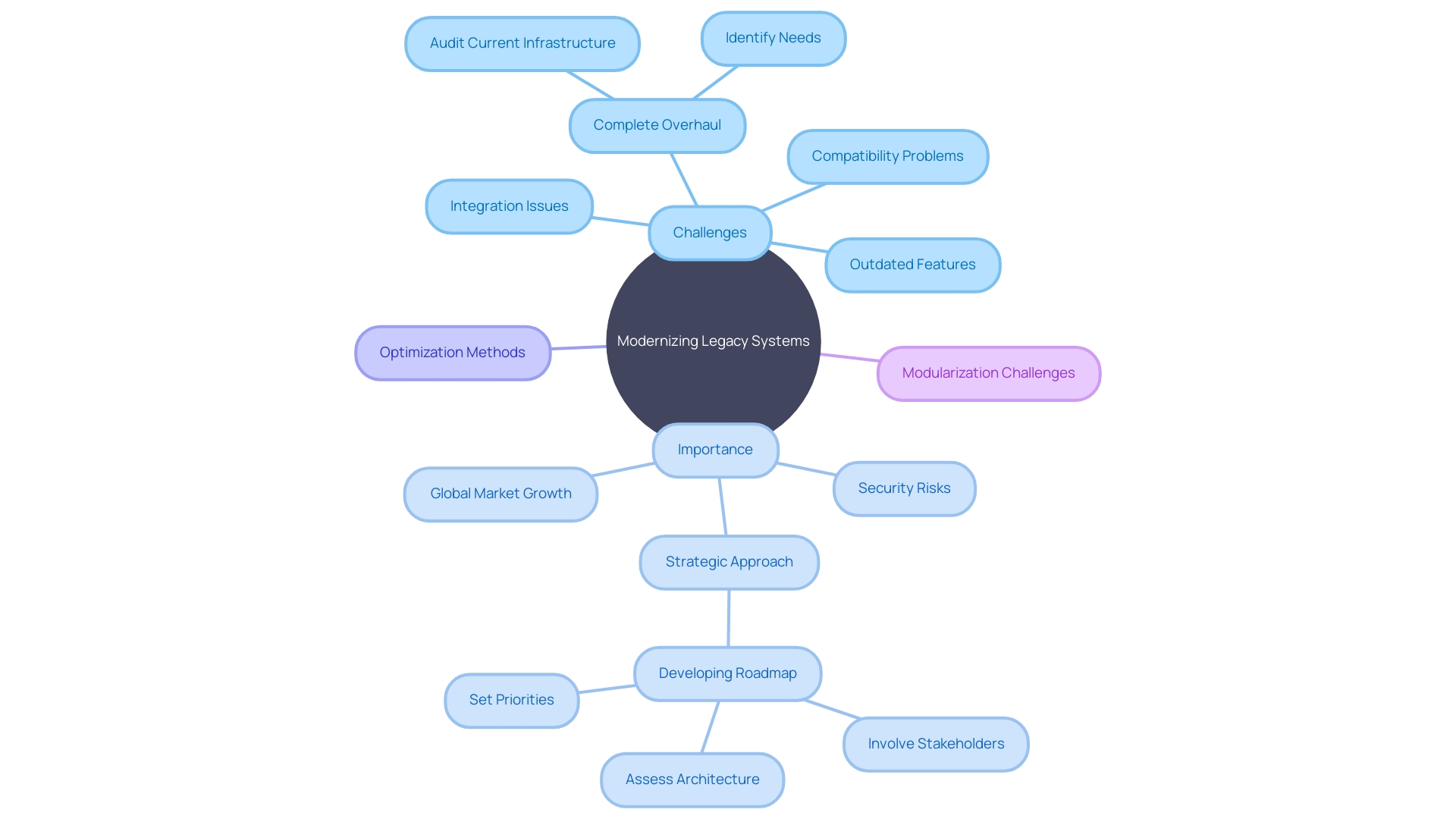

When to Consider a Complete Code Rewrite

When improving the efficiency of the program, programmers may come across situations where enhancement alone isn't sufficient, especially with outdated systems or fundamentally defective designs. These situations require a complete overhaul. By doing so, it's possible to incorporate cutting-edge optimization methods, harness the latest features of programming languages, and rectify any underlying architectural impediments to performance. Embarking on such an overhaul requires a meticulous assessment to weigh its breadth and repercussions, ensuring that decisions are tailored to the project's unique demands.

Legacy systems, which may have been integral for years, often present a formidable challenge due to their size and complexity. When transitioning these systems, particularly large-scale enterprise applications, to modern platforms, developers may opt for modularization. However, this process can be prolonged and filled with unexpected complications, such as undocumented 'easter eggs': features or behaviors within the software that are not formally defined yet need to be replicated in the new application.

Furthermore, the need for application modernization is emphasized by a concerning reality: a substantial portion of software, some of which supports crucial economic infrastructure, is constructed using outdated programming that can be susceptible to security risks. This underscores the importance of modernizing applications not only to enhance performance but also to fortify security and maintain economic stability.

The evolution of software development is a continuous cycle, from understanding user requirements to ongoing maintenance. This lifecycle necessitates a strategic approach to modernization, where the choice to refactor, rearchitect, or replace is driven by the specific needs of an organization. In this context, refactoring often becomes a balancing act between making non-functional improvements and meeting product stakeholder expectations.

With the emergence of AI and the widespread use of open-source components, the structure of software is becoming more intricate. The 2024 OSSRA report highlights the critical role of automated security testing in managing software supply chain risks, given the impracticality of manually testing the vast number of components in modern applications. Comprehensive visibility into an application's codebase is therefore essential for securing software and mitigating potential vulnerabilities.

Best Practices for Maintaining Optimized Code

Keeping your code at peak performance requires a blend of art and science. After the initial improvement, it's crucial to maintain this state through a series of best practices. Documenting the optimization process meticulously is the first step. Regularly reviewing your software is just as crucial, ensuring that each enhancement does not unintentionally introduce new issues. Moreover, regression testing is a non-negotiable practice. It's a protective measure against the regression of code quality, verifying that recent code changes haven't adversely affected existing functionalities.
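A simple way to guard an optimization against regressions is to keep the original, obviously correct implementation and compare the optimized one against it on many inputs. The functions below are hypothetical examples of that pattern, using only the standard library.

```python
import random


def reference_unique_sorted(items):
    """Straightforward baseline, kept as the source of truth."""
    result = []
    for item in sorted(items):
        if item not in result:
            result.append(item)
    return result


def optimized_unique_sorted(items):
    """Faster version that must behave exactly like the baseline."""
    return sorted(set(items))


def check_against_reference(trials=200, seed=0):
    """Compare both implementations on reproducible random inputs."""
    rng = random.Random(seed)  # fixed seed keeps failures reproducible
    for _ in range(trials):
        data = [rng.randint(0, 50) for _ in range(rng.randint(0, 40))]
        expected = reference_unique_sorted(data)
        actual = optimized_unique_sorted(data)
        assert actual == expected, f"mismatch for input {data}"
    return trials


print(check_against_reference())
```

Running a check like this in the regular test suite means a later change to the optimized path is caught as soon as its behavior drifts from the baseline.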

To stay ahead of the curve, keep abreast of the latest updates in programming languages, libraries, and frameworks. These often include new features designed to improve performance. For example, SIMD (Single Instruction Multiple Data) techniques can significantly accelerate computation by processing multiple data points simultaneously, provided the data layout and access patterns are amenable to such an approach. Such strategies were put to effective use in projects like OpenRCT2, where performance is paramount.

Keep in mind that optimization isn't a one-time job but a continuous journey. It requires ongoing measurement and profiling to validate improvements and identify areas needing attention. Because the field is extensive and constantly evolving, what works for one project may not be suitable for another. Customized methods frequently produce the most remarkable advancements, as shown by the speed enhancements (up to 100 times in certain cases) attained through such tailor-made improvements.
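One way to make that measurement repeatable is a small timing harness. The sketch below uses Python's standard timeit module to time two hypothetical implementations of the same task; the functions and input size are assumptions chosen for illustration, and the numbers will differ between machines and interpreter versions.

```python
import timeit


def build_with_concat(n):
    """Build a string by repeated concatenation."""
    text = ""
    for i in range(n):
        text += str(i)
    return text


def build_with_join(n):
    """Build the same string with a single join."""
    return "".join(str(i) for i in range(n))


def measure(func, n=2_000, repeat=5, number=20):
    """Return the best observed time per call, in seconds."""
    times = timeit.repeat(lambda: func(n), repeat=repeat, number=number)
    return min(times) / number  # the minimum is the run least disturbed by noise


if __name__ == "__main__":
    for func in (build_with_concat, build_with_join):
        print(f"{func.__name__}: {measure(func) * 1e6:.1f} microseconds per call")
```

Rerunning a harness like this after each change shows whether an optimization actually helped, rather than relying on intuition.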

As we embrace the future of technology, with developments in blockchain and AI as discussed at forums like WEF Davos, the significance of maintaining efficient and adaptable programming becomes increasingly clear. It supports innovation and sustainable growth in the dynamic landscape of Web3 and beyond. In the spirit of never-ending improvement, let's commit to code optimization as an essential part of our development practices.

Conclusion

In conclusion, code optimization is a continuous journey of improving efficiency and productivity in software development. By analyzing and profiling code, developers can identify areas of inefficiency and strategically improve them. This iterative process requires a tailored approach for each unique problem.

Choosing efficient algorithms and data structures, utilizing built-in functions and libraries, caching frequently used data, and minimizing function calls are important techniques for streamlined code and improved performance. Additionally, optimizing memory usage, reducing unnecessary computations, and understanding cache efficiency play a crucial role in code performance optimization.

Maintaining optimized code involves documenting the optimization process, regularly reviewing code, and staying up-to-date with the latest advancements in programming languages and frameworks. By following best practices, developers can ensure that their code remains efficient and adaptable in the ever-evolving technological landscape.

Ultimately, code optimization empowers developers to create superior systems and drive innovation. By applying the right techniques and staying proactive, developers can build high-performance software that delivers strong results.

Frequently Asked Questions

What is profiling in programming?

Profiling is a systematic process that examines the execution of a program to identify inefficiencies that may slow down its performance or consume excessive resources. It provides insights that help developers target specific areas in need of improvement.
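As a rough illustration, Python's standard library includes the cProfile and pstats modules. The sketch below profiles a deliberately slow, hypothetical function and returns a short text report; the helper name profile_call is an assumption, not part of any library.

```python
import cProfile
import io
import pstats


def slow_unique(items):
    """Deliberately quadratic: each membership test scans the whole list."""
    seen = []
    for item in items:
        if item not in seen:
            seen.append(item)
    return seen


def profile_call(func, *args):
    """Run func under cProfile and return (result, text report)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    # Sort by cumulative time and show only the five most expensive entries.
    stats.sort_stats("cumulative").print_stats(5)
    return result, buffer.getvalue()


if __name__ == "__main__":
    _, report = profile_call(slow_unique, list(range(2000)) * 2)
    print(report)
```

The report lists call counts and time spent per function, which points to where optimization effort is likely to pay off.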

Why is profiling important for program optimization?

Profiling serves as a guide for optimizing code, directing developers to the parts that will yield the most significant performance gains. It transforms a traditionally lengthy optimization process into a faster and more strategic one.

How can profiling improve program performance?

Profiling helps identify slow code paths and resource allocation issues. In certain cases, changes guided by profiling have produced speedups of 8x or more, which shows that optimization is often a creative and iterative process.

What tools can assist with profiling?

Tools like Polar Signals Cloud leverage technologies such as eBPF to provide unobtrusive profiling solutions that work across multiple programming languages without requiring code changes.

What role does memory management play in optimization?

Understanding and optimizing memory management processes, such as Garbage Collection, is crucial for reclaiming memory effectively and ensuring that unused memory does not hinder system performance.

How do algorithms and data structures affect performance?

Choosing the appropriate algorithms and data structures is essential for high-performance coding. Efficient selections can lead to significant improvements in execution speed and memory usage.
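A small sketch of the idea, assuming the items are hashable: finding the elements two collections share can use list membership, which scans the list on every check, or a set, which offers constant-time lookups on average. Both function names are hypothetical.

```python
def common_items_list(a, b):
    """List membership: roughly len(a) * len(b) comparisons in the worst case."""
    return [x for x in a if x in b]


def common_items_set(a, b):
    """Set membership: build the set once, then each lookup is O(1) on average."""
    b_lookup = set(b)
    return [x for x in a if x in b_lookup]


if __name__ == "__main__":
    a = list(range(0, 3000, 3))
    b = list(range(0, 3000, 5))
    # Both versions return the same items in the same order.
    assert common_items_list(a, b) == common_items_set(a, b)
    print(len(common_items_set(a, b)), "shared items")
```

The set version trades a little extra memory for far fewer comparisons as the inputs grow.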

What techniques can enhance loop performance?

Techniques like loop unrolling can process multiple iterations in a single loop execution, significantly speeding up performance. However, measuring and profiling are essential to ensure that these changes provide actual improvements.
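The sketch below shows the shape of a manually unrolled loop: four elements are handled per iteration, and a short cleanup loop covers the remainder. It is an illustration of the technique only. Compilers for languages such as C often apply this transformation automatically, and in an interpreted language like Python the benefit is not guaranteed, so any change of this kind should be timed before it is kept.

```python
def total_simple(values):
    """One addition per loop iteration."""
    total = 0
    for v in values:
        total += v
    return total


def total_unrolled(values):
    """Unrolled by a factor of four, with a cleanup loop for leftovers."""
    total = 0
    n = len(values)
    limit = n - (n % 4)  # largest multiple of 4 that fits in n
    i = 0
    while i < limit:
        total += values[i] + values[i + 1] + values[i + 2] + values[i + 3]
        i += 4
    for j in range(limit, n):  # handle the remaining 0 to 3 elements
        total += values[j]
    return total


if __name__ == "__main__":
    data = list(range(10))
    print(total_simple(data), total_unrolled(data))
```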

Why is code refactoring important?

Refactoring helps maintain and enhance software performance by eliminating issues such as duplicated code and complex methods. It ensures that the code remains optimized and maintainable.

How can built-in functions and libraries optimize performance?

Utilizing the built-in functionality of a programming language simplifies coding tasks and can lead to significant efficiency gains. Language features such as closures, and patterns such as event delegation, can also improve program performance when used effectively.

What is the significance of caching?

Caching temporarily stores frequently accessed data to reduce the need for expensive computations or slow memory access, leading to improved application efficiency.
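For instance, Python's functools.lru_cache decorator stores the results of earlier calls so that repeated calls with the same argument are served from memory. The Fibonacci function below is a stand-in, chosen for illustration, for any expensive and repeatable computation.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # unbounded cache; set maxsize to cap memory use
def fib(n):
    """Naive recursion made fast because each value is computed only once."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


if __name__ == "__main__":
    print(fib(30))
    print(fib.cache_info())  # reports hits, misses, and current cache size
```

Caching suits functions whose results depend only on their arguments; data that can change underneath the cache needs an invalidation strategy.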

How can I optimize database queries?

Techniques like indexing and pre-staging aggregated data can drastically reduce execution times, significantly enhancing the responsiveness of applications.
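As a hedged illustration of indexing, the sketch below uses Python's built-in sqlite3 module with an in-memory database. The table, column, and index names are hypothetical. It asks SQLite for its query plan before and after creating an index on the filtered column; the exact wording of the plan varies between SQLite versions.

```python
import sqlite3


def query_plan(conn, sql, params=()):
    """Return SQLite's query plan as one string (the detail is the last column)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

sql = "SELECT amount FROM orders WHERE customer_id = ?"

# Without an index, SQLite has to scan the table to find matching rows.
plan_before = query_plan(conn, sql, (42,))

# With an index on customer_id, it can jump straight to the matching rows.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, sql, (42,))

if __name__ == "__main__":
    print("before:", plan_before)
    print("after: ", plan_after)
```

Checking the plan, rather than assuming an index is used, mirrors the measure-first approach that applies to code optimization in general.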

When should a complete code rewrite be considered?

A complete code rewrite may be necessary when dealing with outdated systems or flawed designs. This approach allows incorporation of modern optimization methods and rectifies underlying architectural issues.

What are the best practices for maintaining optimized code?

Best practices include documenting the optimization process, regular code reviews, regression testing, and staying updated on the latest programming languages and features to ensure ongoing efficiency.

Why is continuous evaluation important in optimization?

Continuous evaluation and profiling help validate improvements and identify new areas for enhancement, emphasizing that optimization is an ongoing journey rather than a one-time task.