Introduction

Effective error handling is a crucial element of software development, ensuring the stability and user experience of applications. From unexpected holiday declarations to software failures causing global outages, the consequences of inadequate error management can be severe. To address these challenges, developers have a range of error handling mechanisms at their disposal, such as exceptions and result types, each suited to different scenarios.

By understanding and implementing best practices for error handling, developers can create more resilient and maintainable codebases. Rigorous testing of error handling scenarios is essential to ensure software performance and user satisfaction. Logging and error monitoring play a vital role in identifying and resolving errors in real time, enhancing the overall stability of systems.

Controlled termination and fallback behaviors are key strategies to handle critical errors and maintain service continuity. Furthermore, error handling is not just about addressing exceptions; it also plays a crucial role in ensuring the security of software systems. By embedding security practices within error handling protocols, developers can fortify applications against potential threats.

Various tools and libraries, such as Pydantic, can enhance error handling capabilities. Real-world examples and case studies highlight the importance of robust error handling practices and the evolution of error management techniques. By embracing robust error handling, developers can create reliable software that stands the test of time, empowering them to tackle future challenges with confidence and efficiency.

Why Robust Error Handling is Crucial

At the core of program durability lies strong error handling, an essential for high-performing applications. Sound mitigation strategies are vital, not only for preserving the stability of a program but also for guaranteeing an uninterrupted user experience. For instance, consider a scenario in which a critical report generation process was missed because of an unexpected holiday declaration following President Reagan's death, leading to a cascade of overlooked issues. Similarly, the notorious 'Cupertino Effect,' in which a spell-checker whose dictionary lacked the word 'cooperation' replaced it with 'Cupertino' and left numerous official documents mentioning a city in California, reveals the amusing yet troublesome side of technology mishaps.

Such examples highlight the relevance of proactive error handling. Another case in point is the extensive outage that affected critical Windows systems worldwide, causing a 'Blue Screen of Death' owing to a software failure. This incident illustrates how a single error can escalate into a global crisis, disrupting essential services and operations. It's a stark reminder of why developers like Greg Foster advocate for transparency and a commitment to robustness, even when outages are as brief as two minutes.

Cybersecurity experts make a related point about the contemporary approach to application development: excessive code for simple tasks, over-reliance on external libraries, and a dire state of program security. The emphasis is often on doing more work rather than on making sure the work is robust, as numerous professionals have learned the hard way. They suggest a shift in focus, from quantity to the enduring quality of code.

In the pursuit of efficiency, we must remember that robust error management isn't just about avoiding crashes; it's about building resilient systems that stand the test of time and usage, preserving not only function but also the user's trust. By looking more closely at methods that protect against unforeseen events, developers can build programs that are not only operational but also long-lasting and dependable.

Types of Error Handling Mechanisms

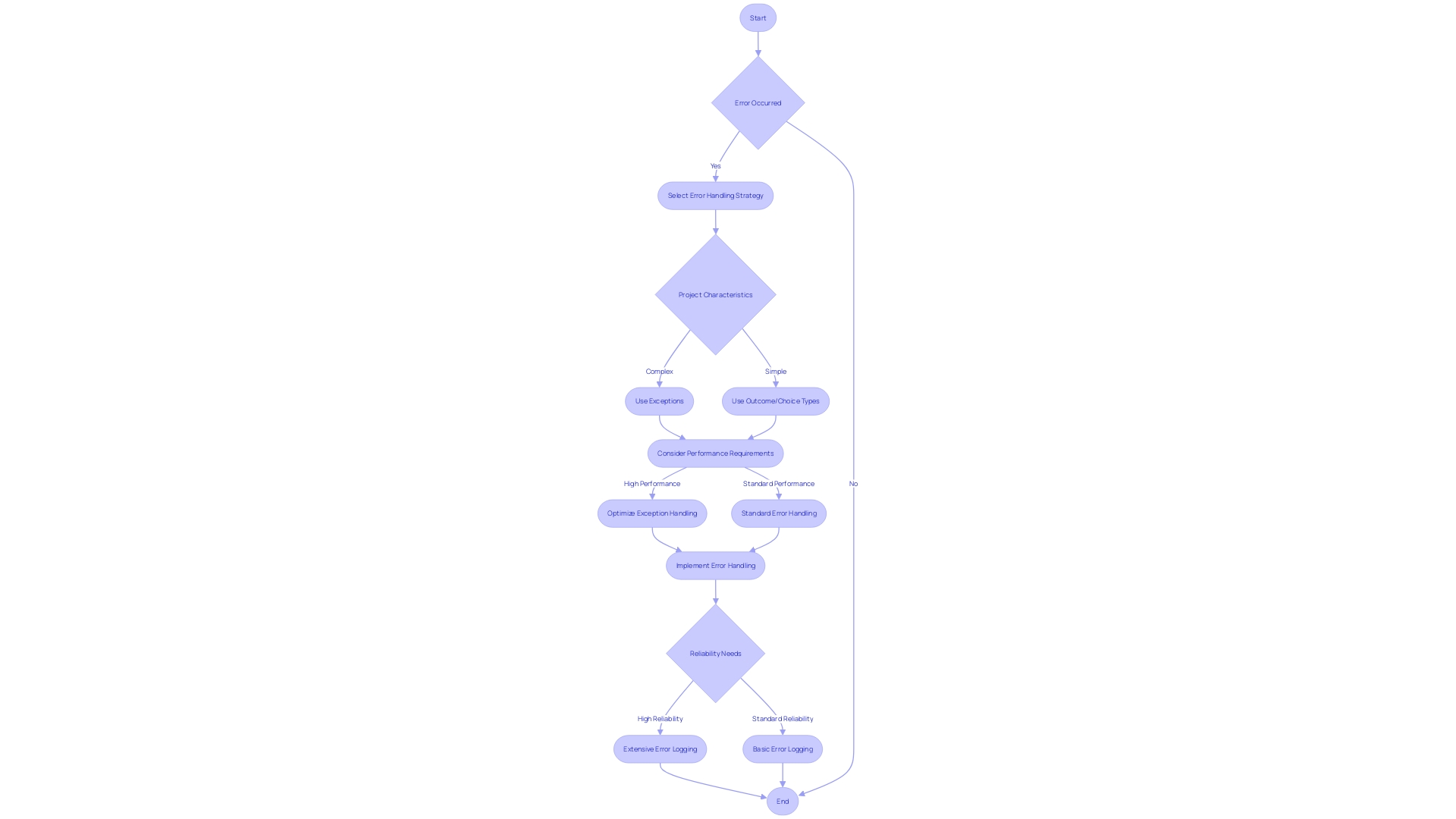

Error handling mechanisms are crucial for building robust software, and developers can choose from several approaches, each designed for specific scenarios. Exceptions, for instance, are a dynamic way to handle problems that can't be foreseen at compile time, allowing developers to separate error processing from regular code. This preserves the readability and maintainability of a codebase by encapsulating error management in dedicated handlers.

In contrast, result and option types provide a structure in which errors are treated as ordinary values that a function can return. This static approach forces errors to be handled at compile time, so that each potential failure is accounted for before the program runs. It's particularly useful when you need to make all the possible outcomes of a function explicit, leading to a more predictable and verifiable codebase.
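The idea of errors as ordinary return values can be sketched in Python, with the caveat that Python does not enforce handling at compile time; a static type checker such as mypy can only approximate that guarantee. The `Ok`, `Err`, and `Result` names and the `parse_port` function below are hypothetical, chosen for illustration rather than taken from any library.

```python
from dataclasses import dataclass
from typing import Generic, TypeVar, Union

T = TypeVar("T")
E = TypeVar("E")


@dataclass(frozen=True)
class Ok(Generic[T]):
    """Successful outcome carrying a value (hypothetical helper type)."""
    value: T


@dataclass(frozen=True)
class Err(Generic[E]):
    """Failed outcome carrying an error description (hypothetical helper type)."""
    error: E


Result = Union[Ok[T], Err[E]]


def parse_port(text: str) -> Result[int, str]:
    """Return Ok(port) or Err(message) instead of raising."""
    try:
        port = int(text)
    except ValueError:
        return Err(f"not a number: {text!r}")
    if not 1 <= port <= 65535:
        return Err(f"port out of range: {port}")
    return Ok(port)


def describe(text: str) -> str:
    """The caller has to look at both outcomes to get at the value."""
    result = parse_port(text)
    if isinstance(result, Ok):
        return f"listening on {result.value}"
    return f"config error: {result.error}"
```

Because the failure is part of the return type, a caller cannot reach the port number without first deciding what to do with the `Err` case.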

The impact of these error handling strategies extends beyond mere code structure. They influence performance, as thrown exceptions can incur a significant runtime cost, and they shape the development experience by affecting code readability and system design. Moreover, these approaches apply in various contexts, from handling errors within a single class to managing failures across multiple components.

Developers must take into account the characteristics of the project and the kinds of errors they expect when selecting an error handling strategy. For instance, in a system where performance is crucial and errors are expected to be infrequent, exceptions might be preferable. In contrast, for applications that require high reliability and predictable behavior, result types could provide a more structured approach.

Understanding the advantages and disadvantages of each error handling mechanism enables better design decisions, so that code is not only functionally correct but also resilient and easy to maintain. This understanding is especially relevant in view of emerging programming paradigms, like the Practical Type System (PTS), which proposes new directions in error handling that may soon influence how programmers approach this essential aspect of software development.

Best Practices for Error Handling

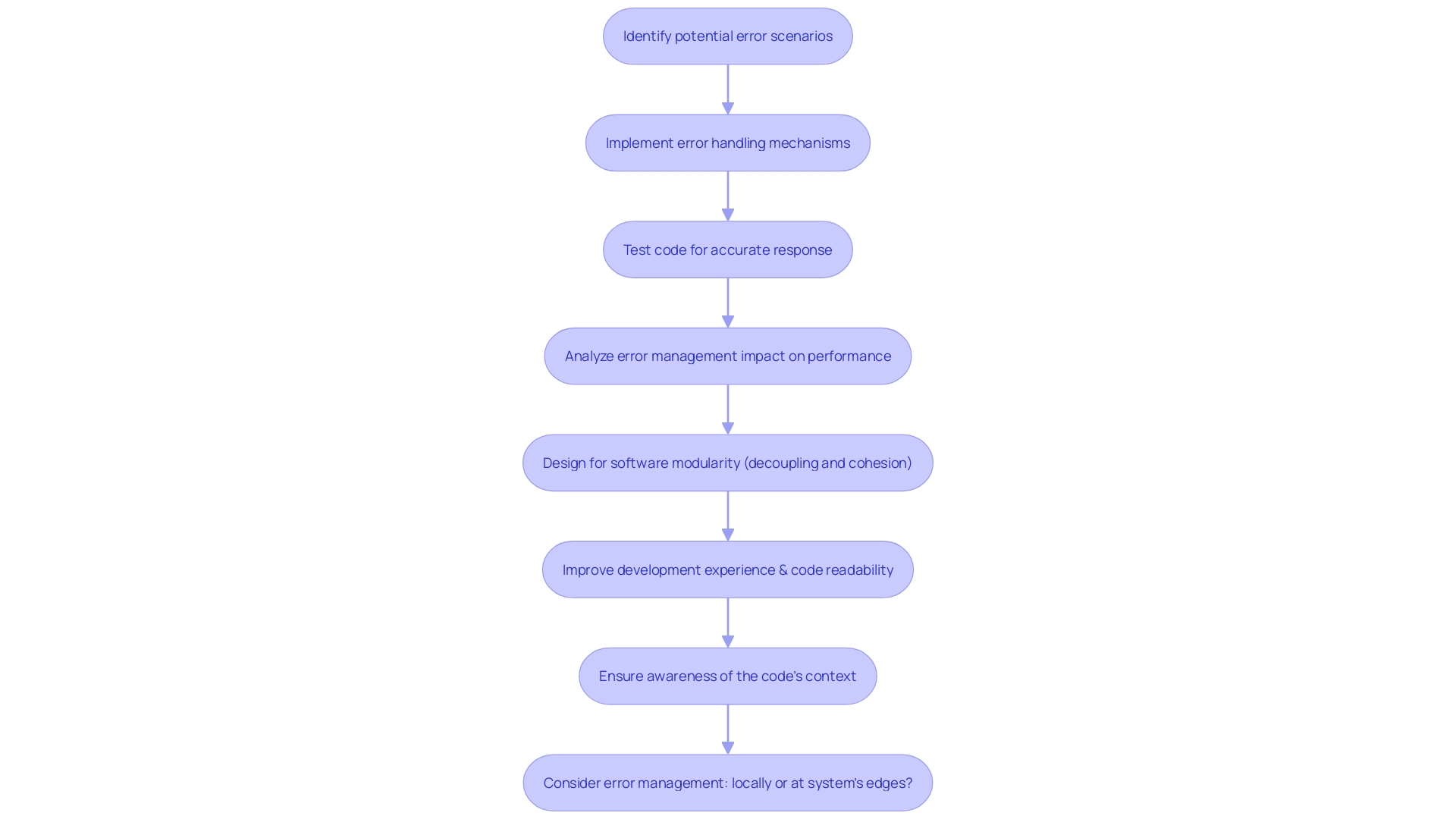

To navigate the complexities of error handling effectively, developers must grasp the nuances of the available strategies. One critical aspect is the transition from return-code-based error handling, which often led to convoluted control flow, to the more structured exception handling approach. This paradigm change simplifies error management by handing responsibility to a dedicated exception handler, thereby enhancing code readability and maintainability.
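The contrast between the two styles can be sketched side by side. The function names, the `ConfigError` class, and the numeric status codes below are all hypothetical, invented only to illustrate the difference.

```python
from typing import Tuple


class ConfigError(Exception):
    """Raised when a configuration entry is missing or invalid (hypothetical)."""


# Return-code style: every caller must remember to inspect the status.
def read_timeout_rc(config: dict) -> Tuple[int, float]:
    if "timeout" not in config:
        return 1, 0.0  # 1 = missing key
    try:
        return 0, float(config["timeout"])
    except (TypeError, ValueError):
        return 2, 0.0  # 2 = not a number


# Exception style: the normal path returns a value, failures raise.
def read_timeout(config: dict) -> float:
    try:
        return float(config["timeout"])
    except KeyError:
        raise ConfigError("missing 'timeout' setting") from None
    except (TypeError, ValueError) as exc:
        raise ConfigError(f"invalid timeout: {config['timeout']!r}") from exc


def start(config: dict) -> str:
    """One dedicated handler covers every configuration failure."""
    try:
        timeout = read_timeout(config)
    except ConfigError as exc:
        return f"startup aborted: {exc}"
    return f"started with timeout={timeout}s"
```

In the exception version, `start` contains a single handler, whereas callers of `read_timeout_rc` would need a status check after every call.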

It is also crucial to recognize that error handling looks different depending on whether it is examined locally, within a class, or at a system-wide level. Deciding when to use exceptions is a vital part of design, affecting modularity, performance, and the overall development experience. Developers must balance these considerations to ensure code robustness and system stability.

Errors are an unavoidable part of software development, arising from causes such as misunderstood specifications or unfamiliarity with specific tools. Error handling has evolved from simple return codes to the sophisticated exception mechanisms of modern programming languages. As the field keeps progressing, our error handling strategies must adapt as well, always with a focus on efficiency and on keeping our codebases comprehensible.

Testing Error Handling Scenarios

To ensure that software behaves correctly even in unforeseen circumstances, programmers must thoroughly test its error handling scenarios. This verifies how the code behaves under exceptional conditions and pinpoints where the system does not respond as intended. With unit testing, developers can check that individual components respond correctly to errors, while integration testing assesses the interaction between modules to ensure a consistent approach to handling failures.
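A unit test for an error path might look like the following minimal sketch, which uses Python's standard `unittest` module. The `safe_divide` function and the `run_tests` helper are hypothetical examples, not part of any particular codebase.

```python
import unittest


def safe_divide(numerator: float, denominator: float) -> float:
    """Hypothetical function under test: rejects a zero denominator."""
    if denominator == 0:
        raise ValueError("denominator must not be zero")
    return numerator / denominator


class SafeDivideTests(unittest.TestCase):
    def test_happy_path(self):
        self.assertEqual(safe_divide(6, 3), 2)

    def test_zero_denominator_raises(self):
        # The error path gets its own test, including the message text.
        with self.assertRaises(ValueError) as ctx:
            safe_divide(1, 0)
        self.assertIn("zero", str(ctx.exception))


def run_tests() -> unittest.TestResult:
    """Run the suite programmatically and return the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SafeDivideTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Checking the message as well as the exception type helps catch regressions where the right exception is raised for the wrong reason.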

The truth is that while programming the 'happy path' might be more enjoyable, anticipating and handling errors is a crucial aspect of resilient software design. The Practical Type System (PTS) paradigm, though still evolving, stresses the need to account for failure scenarios during development. PTS is difficult to experiment with at this nascent stage, but the concept encourages a proactive approach to error handling.

Flaky tests are a pain point in software development that should not be overlooked. As a recent article on The New Stack put it, unreliable tests can greatly impede velocity and, consequently, the overall health of the development process. Remedies for these unpredictable tests range from refining testing processes to rethinking test design principles.

Google's experience indicates that focusing on the developer ecosystem can significantly reduce the rate of common defects. This involves safe coding practices that prevent predictable bugs, and recognizing that relying solely on post-development defect detection is insufficient. Common defects are treated as a systemic problem rooted in the design of the ecosystem, which calls for a comprehensive strategy covering all stages of software design, implementation, and deployment.

Testing allows us to detect and fix issues that could lead to user frustration or to more severe consequences such as security breaches or legal liabilities. By testing at different stages, developers can ensure that applications not only fulfill stakeholder requirements but also provide a dependable and user-friendly experience. The significance of testing, especially of error handling, cannot be overstated: it is as crucial as writing the initial code.

Logging and Error Monitoring

Proficiency in error handling is a hallmark of experienced programmers. Logging and error monitoring stand as the twin pillars supporting this crucial aspect of application development. The importance of logging cannot be overstated; it is the window through which developers can examine the past actions of their programs and understand their behaviors and quirks. Effective logging is akin to keeping a detailed diary, meticulously noting what the software did or attempted to do, and what went sideways. These records are priceless, for they are the trail that leads to the origin of errors.

As highlighted by the research conducted in the Developer Experience Lab, enhancing the environment of software engineers is instrumental in fostering quality and productivity. This optimization extends to implementing robust logging mechanisms that can capture detailed information on failures without compromising sensitive data. Logs should be comprehensive, yet secure, avoiding the inclusion of any confidential information that could lead to security breaches.
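One way to keep logs detailed yet free of confidential data is to redact secrets before a record is written. The sketch below uses Python's standard `logging` module; the `RedactSecrets` filter, the `build_logger` helper, and the `password=`/`token=` pattern are assumptions made for illustration, and a real system would need a pattern matched to its own data.

```python
import logging
import re

# Hypothetical pattern: anything that looks like "password=..." or "token=...".
_SECRET = re.compile(r"(password|token)=\S+", re.IGNORECASE)


class RedactSecrets(logging.Filter):
    """Replace secret values in the formatted message before it is emitted."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format the message first, then redact, then drop the raw arguments.
        record.msg = _SECRET.sub(r"\1=[REDACTED]", record.getMessage())
        record.args = ()
        return True  # keep the record, only its text has changed


def build_logger(name: str, handler: logging.Handler) -> logging.Logger:
    """Attach the redacting filter and a simple format to the given handler."""
    handler.addFilter(RedactSecrets())
    handler.setFormatter(logging.Formatter("%(levelname)s %(name)s: %(message)s"))
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.addHandler(handler)
    return logger
```

With this in place, a call such as `logger.error("login failed user=%s token=%s", user, token)` still records which user was affected, while the token value never reaches the log output.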

Furthermore, monitoring tools are the vigilant guardians that notify developers of problems in real time. These tools offer a comprehensive view of the errors a system produces, helping to identify how often and in what context problems occur. The Developer Experience Lab underscores the importance of such tools in contributing to an optimized coding environment, which is essential for maintaining software quality and developer well-being.

Considering the downtime encountered by Graphite, the openness and comprehensive analysis provided by their CTO, Greg Foster, illustrate the advantages of comprehensive monitoring. Even a brief downtime can spur a learning opportunity and lead to system enhancements. This proactive approach to identifying and resolving issues can greatly enhance system performance and improve the end-user experience.

Essentially, thorough logging and careful error monitoring are not just boxes to be ticked; they are practices that, when carried out thoughtfully, can transform the way systems function and evolve. They empower developers to preemptively address issues, ensuring that the software backbone of companies remains strong and resilient against the ever-changing demands of the digital world.

Controlled Termination and Fallback Behavior

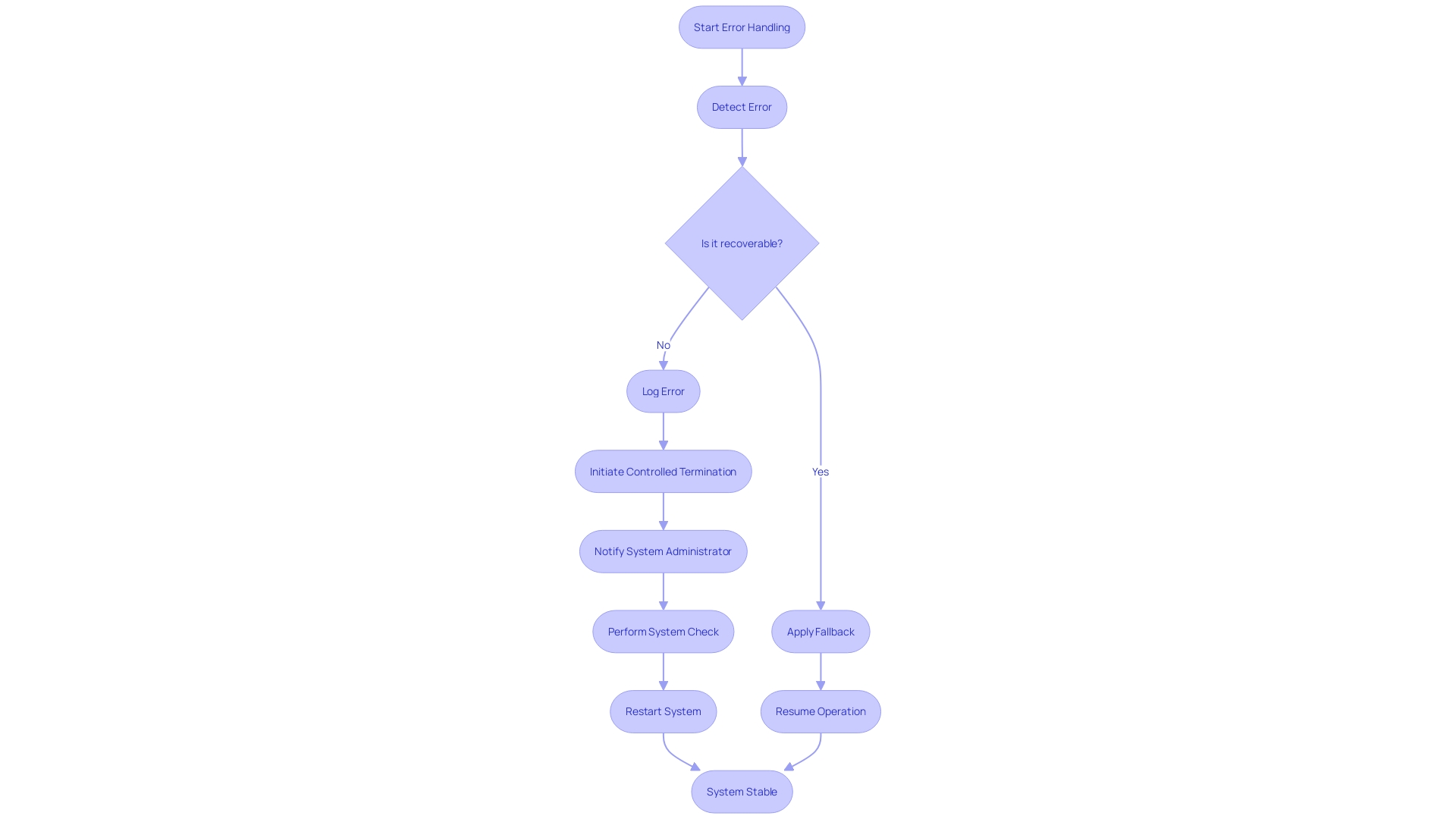

When building resilient applications, it's crucial to consider how the system handles critical failures. If an error occurs that cannot be corrected on the fly, a strategy for controlled termination is key, ensuring that such events don't lead to system-wide instability. Moreover, fallback behaviors are essential, serving as a safety net that allows the application to keep running, albeit with reduced functionality, rather than coming to a complete halt.
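Both strategies can be sketched in a few lines. In this hypothetical example, `load_headlines` falls back to cached data when a live source is unreachable, and `run` performs a controlled shutdown by always executing cleanup and reporting failure through an exit code. All names are illustrative assumptions.

```python
import logging
from typing import Callable, List

logger = logging.getLogger("app")


def load_headlines(fetch_live: Callable[[], List[str]], cached: List[str]) -> List[str]:
    """Fallback: serve cached data when the live source is unavailable."""
    try:
        return fetch_live()
    except (ConnectionError, TimeoutError) as exc:
        logger.warning("live source unavailable, serving cache: %s", exc)
        return cached


def run(job: Callable[[], None], cleanup: Callable[[], None]) -> int:
    """Controlled termination: always clean up, report failure via exit code."""
    try:
        job()
        return 0
    except Exception:
        logger.exception("unrecoverable error, shutting down")
        return 1
    finally:
        cleanup()  # runs on success and on failure alike
```

A program entry point could then end with `sys.exit(run(main_job, release_resources))`, where both arguments are placeholders for application code, so that the process exits with a meaningful status after resources have been released.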

To understand the significance of these strategies, let's examine the realm of engineering at a video streaming service for a major sports league. The high stakes of broadcasting live events to millions underscore the need for a system that can gracefully handle issues without interrupting the service. Similarly, consider a delivery company that expanded from bicycles to motorized vehicles to handle increased demand. This evolution parallels the need for systems that can adapt under stress, switching to 'fallback' modes when necessary to maintain service continuity.

In the ever-changing realm of software development, it's not rare for developers to concentrate on the 'happy path,' where everything functions seamlessly. However, the reality of complex systems requires attention to the less glamorous but equally critical work of error management. It's a balancing act between maintaining performance and ensuring the codebase remains readable and manageable.

AI pair-programming tools exemplify this balance by offering suggestions that can lead to productivity gains across the board, with particular benefits for less experienced individuals. These tools enhance the capacity of a programmer to write error-resistant code while managing cognitive load and improving learning. It's a testament to the industry's recognition that developers are not just code creators but problem solvers who must navigate constraints and resources to deliver efficient solutions.

In line with these observations, controlled termination and fallback mechanisms are not just 'nice to have'; they're necessities in a world where downtime can equate to significant revenue loss and user dissatisfaction. They are the unsung heroes of system stability, ensuring that when errors do occur, the impact is contained and managed, allowing the application to persist and perform under adversity.

Security Considerations in Error Handling

Dealing with errors goes beyond simply addressing exceptions and failures; it is also a crucial component for preserving the security of software systems. Developers need to be vigilant about potential information leaks, guard against malicious attacks, and ensure that sensitive data is managed with utmost care. By embedding security-focused practices within error-handling protocols, developers can fortify their applications against a myriad of security threats.
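A common way to avoid information leaks is to log the full details of a failure internally while returning only a generic message and a reference ID to the caller. The sketch below assumes a hypothetical `handle_request` wrapper and a dictionary-shaped response; it is not tied to any web framework.

```python
import logging
import uuid
from typing import Any, Callable

logger = logging.getLogger("api")


def handle_request(handler: Callable[[Any], str], request: Any) -> dict:
    """Run a handler and translate failures into safe, generic responses."""
    try:
        return {"status": 200, "body": handler(request)}
    except PermissionError:
        # Expected failure: say what happened, not why.
        return {"status": 403, "body": "Forbidden"}
    except Exception:
        # Unexpected failure: full details go to the log only.
        incident_id = uuid.uuid4().hex
        logger.exception("unhandled error, incident=%s", incident_id)
        return {"status": 500, "body": f"Internal error (reference {incident_id})"}
```

The reference ID lets support staff find the matching log entry without exposing stack traces, file paths, or query text to a potential attacker.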

For instance, a remarkable situation unfolded following the death of President Ronald Reagan, where a delay in declaring a federal holiday meant that a mainframe's Friday report run, which would typically produce about 30 to 40 pages highlighting issues, went unattended. This incident underscores the significance of designing systems that can adapt to unexpected changes and maintain security protocols, even when standard operational procedures are disrupted.

Furthermore, considering the rising complexity of cyberattacks, as demonstrated by the growing number of security breaches and email attacks, it's crucial that error handling mechanisms are strong and able to adapt to evolving threats. This approach aligns with the insights from Google's security engineering team, which advocates for proactive bug prevention through safe coding practices to reduce common security vulnerabilities.

In addition, real-world practice shows an upward trend in organizations prioritizing security measures. Nevertheless, these measures are frequently not fully incorporated into standardized procedures, which leaves room for further improvement and for adopting security best practices within error handling.

Understanding the interaction between error handling, performance, and security is essential. Strategic use of exception handling is crucial, not only for protecting the system at different levels, but also for capturing and addressing issues. As the field of cybersecurity evolves, our strategies for mitigating errors and managing exceptions must also evolve, ensuring that they not only efficiently address errors but also contribute to the overall security and resilience of computer systems.

Tools and Libraries for Robust Error Handling

Exploring a range of tools and libraries can greatly improve how a project deals with errors. For instance, Pydantic has become a go-to library for Python developers seeking robust data validation. The Pydantic 2.5 release introduced the JsonValue type among other new features, making it a more powerful tool for ensuring data accuracy. Meanwhile, the idea of a Practical Type System (PTS) is developing to offer a structured approach to managing errors. Although PTS is still under development and its code examples cannot yet be tested, its underlying philosophy is to anticipate and handle errors preemptively.

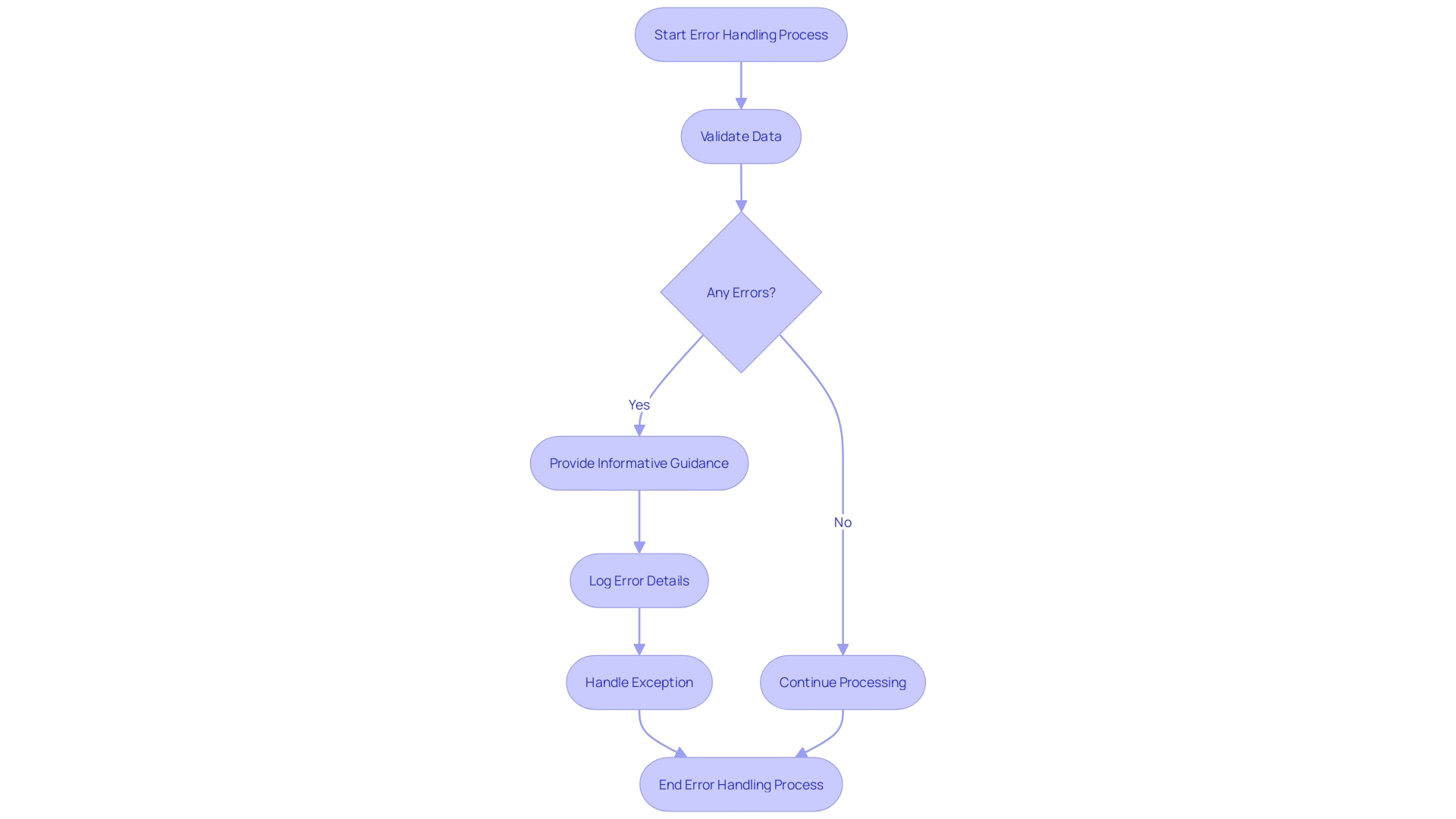

One of the most crucial elements of error handling is offering explicit, informative messages that direct users towards a resolution. This aligns with the insight offered by experienced programmers who stress robustness over merely producing more code. They point out that a key to productivity is ensuring that existing work remains reliable, so that it does not detract from future progress. As an example, consider a scenario where a program needs to calculate the square root of a user input. Ensuring robustness would entail verifying that the input is a valid non-negative number, and returning a clear, comprehensible message when it is not.
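The square root scenario can be sketched as follows. The `sqrt_message` function and the wording of its messages are illustrative assumptions; the sketch accepts zero as valid input, since its square root is well defined.

```python
import math


def sqrt_message(raw: str) -> str:
    """Validate user input and return either the result or a clear explanation."""
    try:
        value = float(raw)
    except ValueError:
        return f"'{raw}' is not a number. Please enter a value such as 16 or 2.5."
    if math.isnan(value) or math.isinf(value):
        return "Please enter a finite number."
    if value < 0:
        return (
            f"Cannot take the square root of {value:g}. "
            "Please enter a number that is zero or greater."
        )
    return f"The square root of {value:g} is {math.sqrt(value):g}."
```

Each message says what was wrong with the input and what the user can do next, rather than surfacing a raw exception such as `ValueError: math domain error`.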

In programming, learning through practice and encountering a variety of exceptions is invaluable. For exceptions not yet encountered, programmers can deliberately introduce typical errors, such as syntax or import errors, to see the resulting messages. This practical experience with exception handling equips developers to deal effectively with real-world failures in programs.

Real-World Examples and Case Studies

Exploring the world of coding, we come across a universal reality: errors are unavoidable. Nevertheless, the way these errors are handled can greatly affect the quality and resilience of the final software product. Although programmers may naturally write code that follows 'the happy path', bypassing the strict demands of error handling, there is hidden wisdom in taking the less traveled route.

One experienced professional's viewpoint illuminates the significance of strong practices. He observed that a common pitfall over the past decade has been the tendency to prioritize quantity of work over its quality. This mindset often leads to recurring mistakes that undermine productivity and morale. By prioritizing techniques that ensure the robustness of every small task, developers can build software that endures, freeing them from the perpetual need to revisit previous mistakes.

The evolution of error handling methods is also remarkable. Traditional C code, and C++ code written in the same style, relied on return codes to signal success or failure, placing the onus on the caller to check these codes. This often resulted in complex, hard-to-follow control flows littered with checks. The introduction of exceptions marked a paradigm shift, assigning the duty of handling errors to a designated handler and thus improving the code's readability and flow.

Case studies from the development trenches reveal a range of error handling strategies, from local to systemic. This highlights the importance of context-awareness in software design. The decision between handling errors inside a class or at the system's boundaries can have significant impacts on both performance and the developer's experience.

Statistics suggest that programmers are naturally inclined to seek the easiest way, which isn't necessarily a negative characteristic. This 'laziness' can drive individuals to innovate and devise simple yet effective solutions when faced with unique challenges. The key is to balance resource constraints, deliverables, and creativity to achieve an optimal outcome. Ultimately, embracing robust error handling techniques not only yields more reliable software but also equips developers to address future challenges with greater confidence and efficiency.

Conclusion

In conclusion, robust error handling is crucial for the stability and user experience of software applications. It ensures uninterrupted functionality, even in the face of unexpected events or failures. The consequences of inadequate error management can be severe, as seen in real-world examples such as missed critical processes due to unexpected holiday declarations or global outages caused by software failures.

Developers have a range of error handling mechanisms at their disposal, such as exceptions and result types, each suited to different scenarios. Understanding and implementing best practices for error handling is essential to create resilient and maintainable codebases. Testing error handling scenarios rigorously is necessary to ensure software performance and user satisfaction.

Logging and error monitoring play a vital role in identifying and resolving errors in real time, enhancing the overall stability of systems. Controlled termination and fallback behaviors are key strategies to handle critical errors and maintain service continuity. Additionally, error handling is not just about addressing exceptions; it also plays a crucial role in ensuring the security of software systems.

Various tools and libraries, such as Pydantic, can enhance error handling capabilities. Real-world examples and case studies highlight the importance of robust error handling practices and the evolution of error management techniques. By embracing robust error handling, developers can create reliable software that stands the test of time, empowering them to tackle future challenges with confidence and efficiency.

In summary, by prioritizing robust error handling, developers can build resilient and maintainable software that delivers maximum efficiency and productivity.

Frequently Asked Questions

What is the importance of robust error handling in software development?

Robust error handling is essential for maintaining program durability and ensuring a stable user experience. It helps prevent software failures from escalating into larger issues, preserving functionality and user trust.

Can you provide examples of error handling failures?

Yes, notable examples include the missed report generation following President Reagan's death, which led to a series of overlooked issues, and the 'Cupertino Effect,' where a spell-checker mistakenly altered official documents. Additionally, the global outage caused by a 'Blue Screen of Death' in Windows OS systems illustrates the potential for a single error to cause widespread disruption.

What are the different types of error handling mechanisms?

Error handling mechanisms include exceptions, which allow developers to manage unforeseen issues separately from regular code, enhancing readability; and result and option types, which treat errors as ordinary values that must be handled at compile time, leading to predictable and verifiable code.

How do error handling strategies impact software performance?

Thrown exceptions can incur significant runtime costs, while effective error handling strategies can enhance code readability and system design, ultimately improving performance.

What are some best practices for error handling?

Best practices include transitioning from return code-based handling to structured exception handling, recognizing the context of error handling whether at a local class level or system-wide, and balancing performance with code readability and maintainability.

Why is testing important for error handling?

Testing ensures that software operates efficiently in unexpected circumstances. Unit testing verifies individual components, while integration testing evaluates how modules manage issues together. This proactive approach helps identify potential problems before they affect users.

What role does logging and error monitoring play in error handling?

Logging serves as a detailed record of software behavior, helping developers understand and trace errors. Monitoring tools notify teams of problems in real time, enabling quicker responses and improvements to system performance.

What is controlled termination and fallback behavior?

Controlled termination involves having a strategy for safely shutting down systems when errors occur, while fallback behavior ensures that applications can continue running with reduced functionality instead of crashing completely.

How does error handling relate to security?

Effective error handling is crucial for maintaining security, as it helps prevent information leaks and exploits. Developers must embed security practices within error handling protocols to protect sensitive data and fortify systems against threats.

What tools and libraries can assist with robust error handling?

Tools like Pydantic for Python provide data validation, while emerging frameworks like the Practical Type System (PTS) offer structured approaches to error management. Utilizing libraries can enhance the reliability and robustness of code.

Can you give examples of real-world applications of error handling?

Real-world examples include software managing live sports broadcasts, where graceful error handling is critical to maintain service continuity. The evolution of error handling practices from simple return codes to sophisticated exception management highlights its significance in creating resilient software.