Introduction

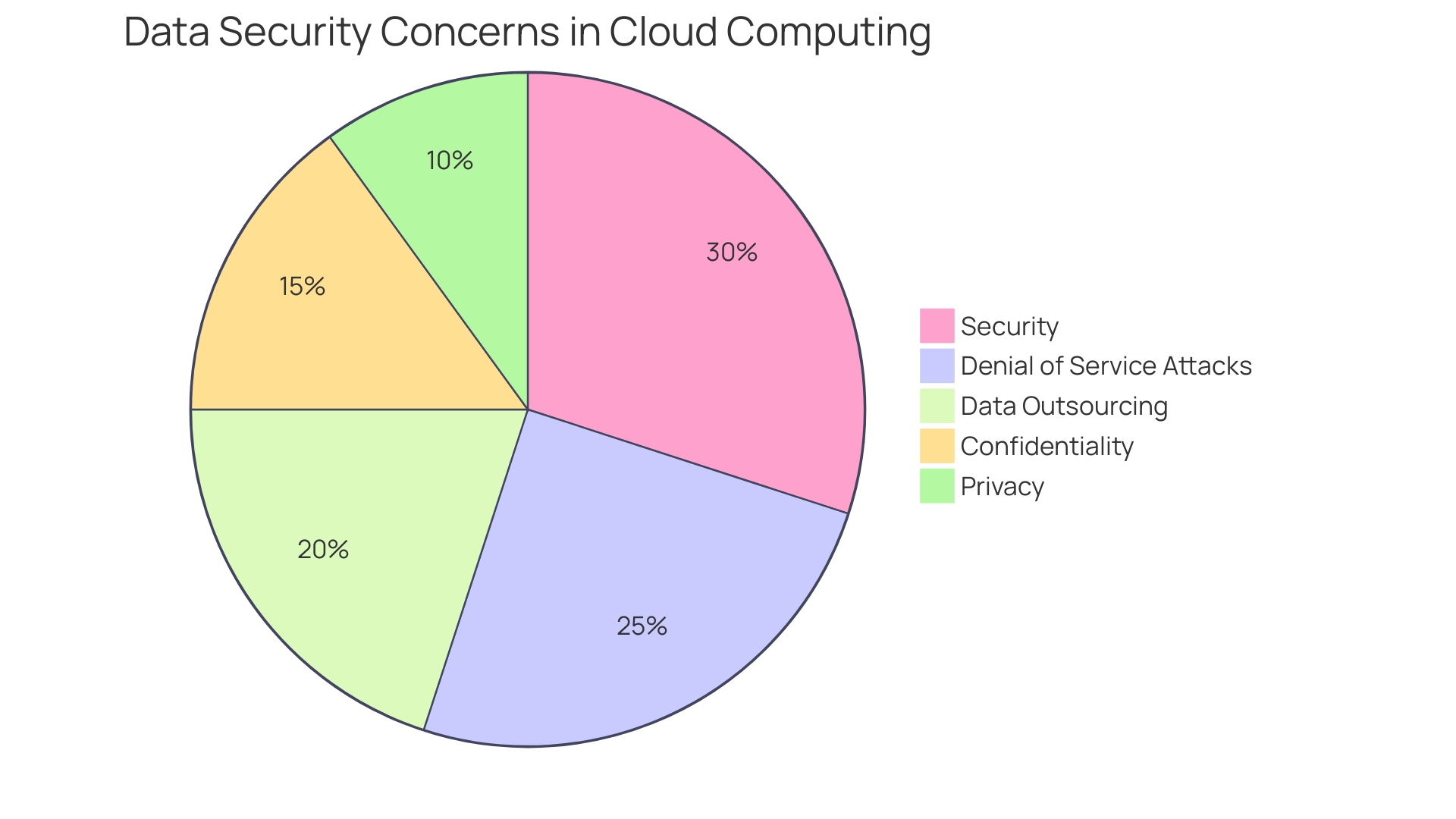

Understanding the critical security risks to web applications is essential for developers, particularly as cyber threats grow more sophisticated. The Open Web Application Security Project (OWASP) Top 10 list provides an expert consensus on the most pressing web application security risks. From injection attacks to broken access control, these vulnerabilities can lead to unauthorized data exposure and to attackers bypassing authentication and authorization checks.

The importance of adhering to OWASP guidelines is evident in industries like banking, where stringent security measures are necessary to protect sensitive user data. With up to 90% of vulnerabilities lying at the application layer, developers must prioritize security from the inception of their code. Implementing measures such as input validation, parameterized queries, and effective session management can significantly enhance security.

In an interconnected world where data breaches are on the rise, both users and companies need to stay vigilant and follow best practices to guard against attacks. The OWASP Top 10 serves as a guidepost for building safer digital spaces, helping teams build security into web application development from the start.

OWASP Top 10 Vulnerabilities Overview

The OWASP Top 10 shows that some categories of risk recur across almost every web application. Injection attacks such as SQL injection can expose data without authorization, while Broken Access Control (BAC) can let attackers bypass authorization checks and act beyond their intended permissions.

In banking, M&T Bank's need for stringent security measures highlights the urgency of adhering to such standards. Web applications routinely handle personal data, from contact details to financial records, so the hazards are not hypothetical. Companies like Hotjar, which serve a vast number of websites, are responsible for securing a massive amount of sensitive user data, making adherence to OWASP guidelines crucial.

With Gartner reporting that up to 90% of vulnerabilities lie at the application layer, developers must build in protection from the first line of code. Injection and BAC vulnerabilities are particularly prevalent, and while Web Application Firewalls (WAFs) offer a layer of defense, the responsibility for strong protective measures lies with developers. This means validating incoming data, using parameterized queries for database access, and managing sessions effectively.

The importance of web application security cannot be overstated. As we interact with various websites and applications on a daily basis, it is essential for both individuals and companies to remain vigilant about possible data leaks and to implement best practices to protect against attacks. The OWASP Top 10 is not just a list; it's a guidepost for building safer digital spaces.

Broken Access Control

Strong access control protects an application from unauthorized actions by authenticated users. At the heart of this strategy lies the principle of least privilege: users and systems should have access only to the information and resources their duties require, and nothing beyond that. Least privilege is not only a cornerstone of information security but also a proactive measure against privilege escalation and unauthorized access.

Access control mechanisms fall into three key categories: authentication, authorization, and auditing. Authentication verifies a user's identity; authorization checks that an authenticated user has the permissions needed to access specific data or resources; and auditing tracks and logs user actions to maintain a record of who did what, and when.
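
To make the three categories and least privilege concrete, here is a minimal Python sketch of a deny-by-default authorization check that also writes an audit record. The roles, permissions, and the `User` and `Document` types are hypothetical, and the user is assumed to have been authenticated already; a real system would load its policy from configuration and send audit events to durable storage.

```python
import logging
from dataclasses import dataclass

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("audit")

# Hypothetical role-to-permission mapping: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "viewer": frozenset({"read"}),
    "editor": frozenset({"read", "update"}),
    "admin": frozenset({"read", "update", "delete"}),
}

@dataclass(frozen=True)
class User:
    user_id: str  # set only after authentication has succeeded
    role: str

@dataclass(frozen=True)
class Document:
    doc_id: str
    owner_id: str

def is_authorized(user: User, action: str, doc: Document) -> bool:
    """Deny by default: allow only if the role grants the action and, for
    non-admins, the user owns the resource (blocks access to others' records)."""
    allowed = action in ROLE_PERMISSIONS.get(user.role, frozenset())
    if allowed and user.role != "admin":
        allowed = doc.owner_id == user.user_id
    # Auditing: record who attempted what, on which resource, and the outcome.
    audit_log.info("user=%s action=%s doc=%s allowed=%s",
                   user.user_id, action, doc.doc_id, allowed)
    return allowed
```

Unknown roles receive an empty permission set, so a typo in a role name fails closed rather than open.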

When restrictions on what authenticated users may do are not adequately enforced, the result is improper access control and, potentially, a breach. In a related domain, the Connectivity Standards Alliance's development of Aliro, a new protocol aimed at improving interoperability for physical access control, echoes the need for simplicity and flexibility in access management systems. Aliro aims to standardize the user experience across technologies such as NFC, Bluetooth® LE, and UWB, and to use secure, asymmetric cryptography for credentials.

As Internet-connected services grow more complex, the evolution of access control has become paramount. The shift from physical locks and keys to advanced digital access mechanisms underscores the need for watchful, flexible practices to safeguard sensitive data in an increasingly interconnected world. Relatedly, maintaining a Software Bill of Materials (SBOM) is now recommended to keep visibility into the open source components in your code and to ensure they are up to date and free of known security and license risks.

Cryptographic Failures

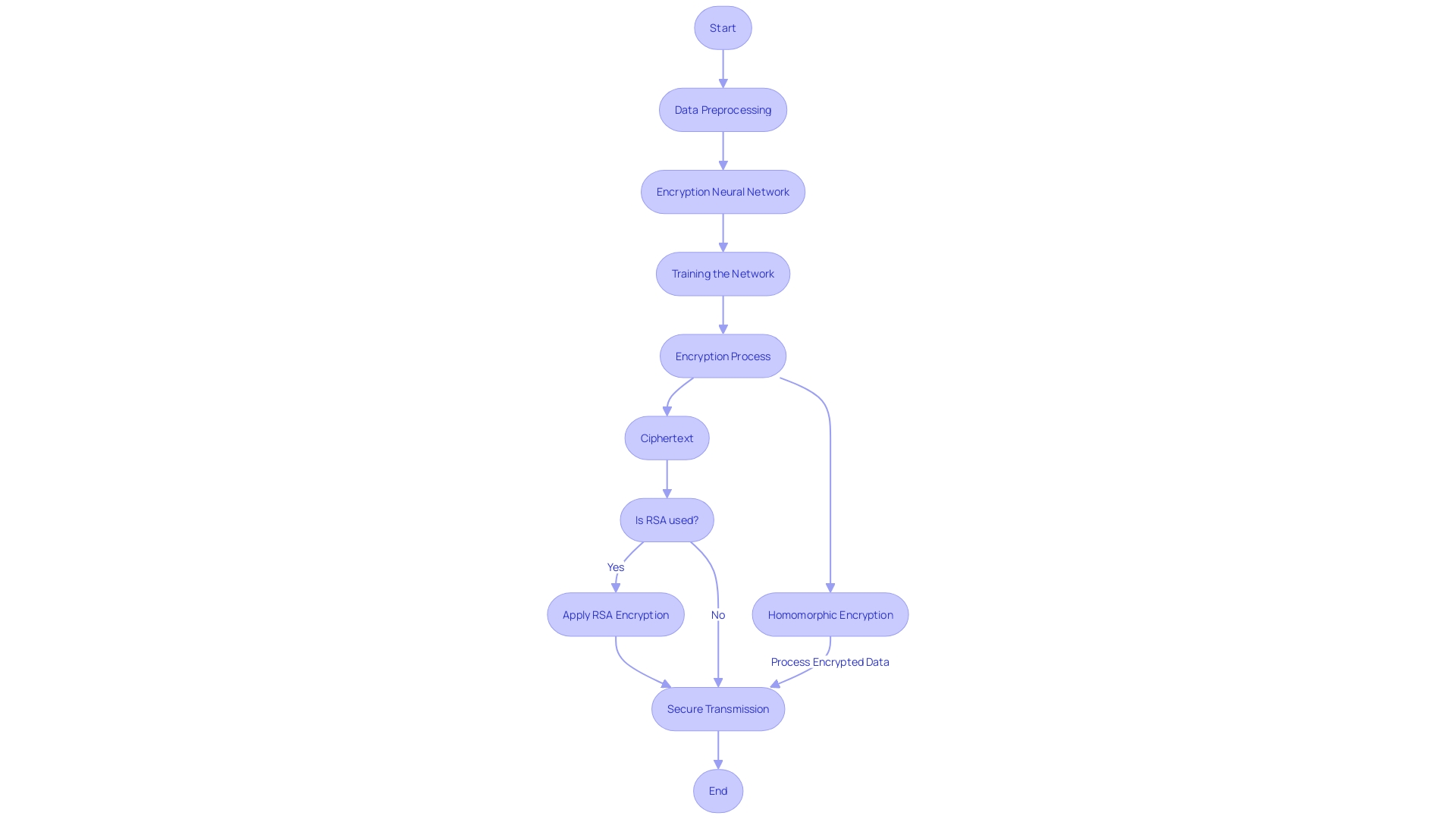

Cryptographic practices are central to data protection in a digitally connected world. Encryption converts plaintext into ciphertext so that sensitive information stays protected even if it is intercepted. Developers must recognize the critical difference between reversible encryption and irreversible hashing: encrypted data can be recovered with the right key, whereas a hash is meant only for verification. The Marriott incident, where payment card and passport numbers were mistakenly protected with SHA-1 instead of AES-128, shows how severe the consequences of such misunderstandings can be. Algorithms must also be applied where they fit, for example symmetric encryption for protecting bulk data and private communications, and asymmetric encryption for key exchange and digital signatures.
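
The hashing-versus-encryption distinction has a practical edge: a fast, unsalted hash of low-entropy data offers little protection, because an attacker can simply hash every possible input. The sketch below uses a hypothetical 4-digit PIN and recovers it from its SHA-1 digest by enumeration. Data that must be read back, such as card numbers, calls for encryption with properly managed keys instead.

```python
import hashlib
from typing import Optional

def sha1_hex(value: str) -> str:
    return hashlib.sha1(value.encode()).hexdigest()

# Hypothetical "protected" value: a 4-digit PIN stored as an unsalted SHA-1 digest.
stored_digest = sha1_hex("4821")

def recover_by_enumeration(digest: str) -> Optional[str]:
    # SHA-1 cannot be "decrypted", but with only 10,000 possible inputs an
    # attacker can hash every candidate and compare.
    for n in range(10_000):
        candidate = f"{n:04d}"
        if sha1_hex(candidate) == digest:
            return candidate
    return None

print(recover_by_enumeration(stored_digest))  # prints 4821
```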

Recent high-profile breaches, such as the AT&T data exposure and the Change Healthcare ransomware attack, make it clear that strong encryption and key management are indispensable. Incidents like these put personal information at risk and hand cybercriminals data they can use in further attacks. To bolster defenses, developers can adopt practices such as dependency pinning, as Revoke.cash does, to mitigate supply chain attacks. Adopting advances in public-key cryptography, as emphasized by experts from Akamai, can further strengthen systems that handle sensitive information.

Given the record-high average cost of a breach reported by the Ponemon Institute, the case for investing in effective security measures is clear. The changing threat landscape calls for a proactive, well-informed approach to encryption, key management, and secure storage to protect the privacy and integrity of digital information.

Injection

Injection vulnerabilities, such as SQL injection, pose a severe risk because they can let attackers execute unauthorized code or queries within an application. Effective prevention strategies are therefore critical. Input validation is a cornerstone of these strategies: it ensures that only properly formatted data enters the application. This means examining user input carefully and applying strict checks that reject potentially harmful content. For example, the validation function in Moodle, a widely used learning management system, rejects input containing PHP comments.

Parameterized queries are another robust defense. The SQL command is defined in advance with placeholders, and the user-supplied values are bound to those placeholders separately. This lets the database distinguish code from data no matter what the user submits. Comparing a normal SQL statement with a malicious one built by string concatenation shows how stark the difference is, and why parameterized queries are so effective against injection.
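
A minimal sketch of that comparison using Python's built-in `sqlite3` module and a hypothetical `users` table: the unsafe version splices input into the SQL text, while the safe version binds it to a `?` placeholder.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "viewer")])

def find_user_unsafe(name: str):
    # VULNERABLE: user input becomes part of the SQL text itself.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()

def find_user_safe(name: str):
    # Parameterized: the SQL text is fixed and the input is bound as data.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()

malicious = "x' OR '1'='1"
print(find_user_unsafe(malicious))  # the injected OR clause returns every row
print(find_user_safe(malicious))    # [] : no user is literally named that
```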

An allowlist approach, which defines exactly which user inputs are acceptable, helps block abnormal queries that could lead to exploits. Escaping user-supplied input, so that potentially dangerous characters are treated as plain data rather than executable commands, adds another layer of defense. Combining these measures makes applications considerably more resilient against injection attacks.

Insecure Design

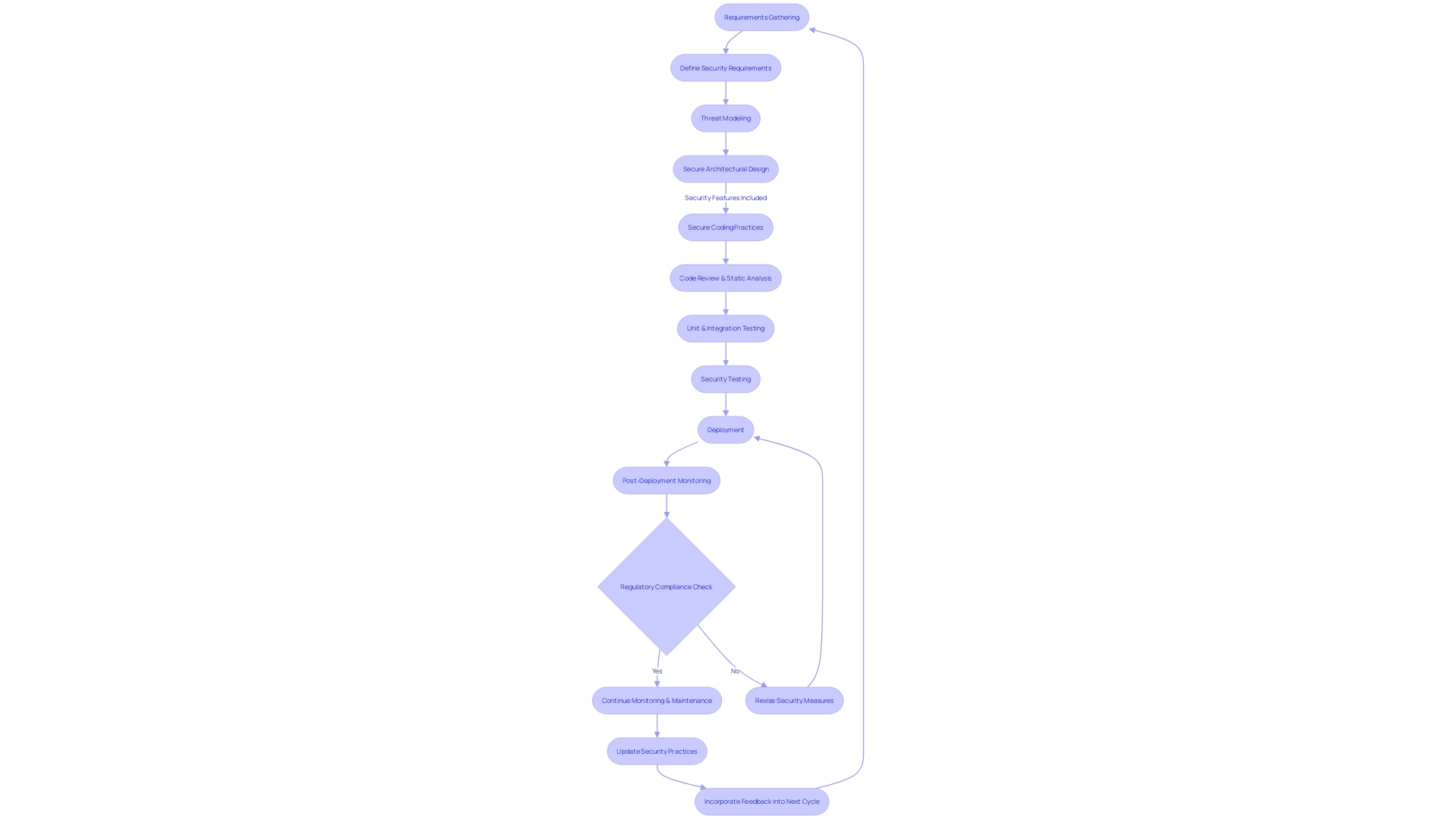

Secure design is a vital part of application development. The objective is not only to meet functional and non-functional requirements but also to build in resilient safeguards against weaknesses that could lead to system failures, data breaches, or full system compromise. Ensuring protection from the beginning means embracing a 'secure by design' philosophy. The recent update to CISA's Secure by Design whitepaper emphasizes this approach, urging manufacturers to take ownership of customer security outcomes through practices such as application hardening and secure-by-default configurations within a secure software development lifecycle (SDLC).
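
One way to express secure-by-default configuration in code is a settings object whose defaults are the secure choices and which refuses to start in production with weakened settings. The `AppSettings` class and its fields are hypothetical; map them to whatever your framework actually exposes.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AppSettings:
    # Every default is the protected choice; weakening one is an explicit act.
    environment: str = "production"
    debug: bool = False
    require_https: bool = True
    session_cookie_secure: bool = True
    session_cookie_httponly: bool = True
    allow_default_admin_password: bool = False

    def __post_init__(self) -> None:
        # Fail closed: refuse to start in production with any weakened setting.
        if self.environment != "production":
            return
        weakened = [
            name
            for name, is_insecure in (
                ("debug", self.debug),
                ("require_https", not self.require_https),
                ("session_cookie_secure", not self.session_cookie_secure),
                ("session_cookie_httponly", not self.session_cookie_httponly),
                ("allow_default_admin_password", self.allow_default_admin_password),
            )
            if is_insecure
        ]
        if weakened:
            raise ValueError(f"insecure settings in production: {weakened}")
```

With this design, `AppSettings(environment="development", debug=True)` is accepted, while `AppSettings(debug=True)` raises `ValueError` because the environment defaults to production.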

OAuth, the industry-standard protocol for authorization (with OpenID Connect layered on top for authentication), illustrates the complexities involved in secure design. Despite its apparent simplicity and widespread adoption among major web services, OAuth's underlying architecture is intricate, with many components and features that must be managed securely. Even seemingly simple implementations require careful thought to get security right.
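
One example of OAuth's hidden intricacy is PKCE (Proof Key for Code Exchange, RFC 7636), an extension that protects the authorization code flow against code interception. The client keeps a random `code_verifier` secret and sends only its SHA-256-derived `code_challenge` with the authorization request. A minimal sketch of the `S256` method using only the standard library:

```python
import base64
import hashlib
import secrets

def make_code_verifier() -> str:
    # RFC 7636 allows 43-128 unreserved characters; 32 random bytes,
    # base64url-encoded without padding, give exactly 43.
    raw = secrets.token_bytes(32)
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")

def code_challenge_s256(verifier: str) -> str:
    # code_challenge = BASE64URL(SHA256(ASCII(code_verifier))), padding removed.
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
```

The client later sends the original verifier with the token request, and the authorization server recomputes the challenge to confirm that both requests came from the same client.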

The revised CISA guidance, backed by a broad international coalition of partner agencies, also emphasizes radical transparency, accountability, and leadership on security. It provides a roadmap for technology manufacturers, including AI model developers, to demonstrate their security initiatives. Artifacts of a secure-by-design program, as outlined by global experts, can serve as evidence that helps stakeholders assess a manufacturer's commitment to security.

Security considerations should be tied to business goals, such as service availability and data sovereignty, to inform architectural decisions. For instance, if an application must be available globally, a multi-region architecture is advisable. Similarly, regulations requiring data to be stored in specific countries call for an infrastructure deployment that complies with those mandates. Addressing these requirements early is crucial, as retrofitting protective measures once an application is in production can be expensive and may require substantial changes to its architecture.
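
A data-residency requirement can be enforced at the point where a storage location is chosen. This sketch assumes a hypothetical mapping from country codes to permitted regions and a hypothetical set of deployed regions; the key design choice is to fail closed rather than fall back to a non-compliant region.

```python
# Hypothetical policy: regions where each jurisdiction's data may be stored.
ALLOWED_REGIONS = {
    "DE": ["eu-central"],
    "FR": ["eu-west", "eu-central"],
    "US": ["us-east", "us-west"],
}

# Hypothetical regions this multi-region deployment actually runs in.
DEPLOYED_REGIONS = {"eu-central", "us-east"}

def storage_region_for(country_code: str) -> str:
    """Pick the first deployed region permitted for the user's country.
    Fail closed: with no compliant region, refuse instead of guessing."""
    for region in ALLOWED_REGIONS.get(country_code, []):
        if region in DEPLOYED_REGIONS:
            return region
    raise LookupError(f"no compliant storage region for {country_code!r}")
```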

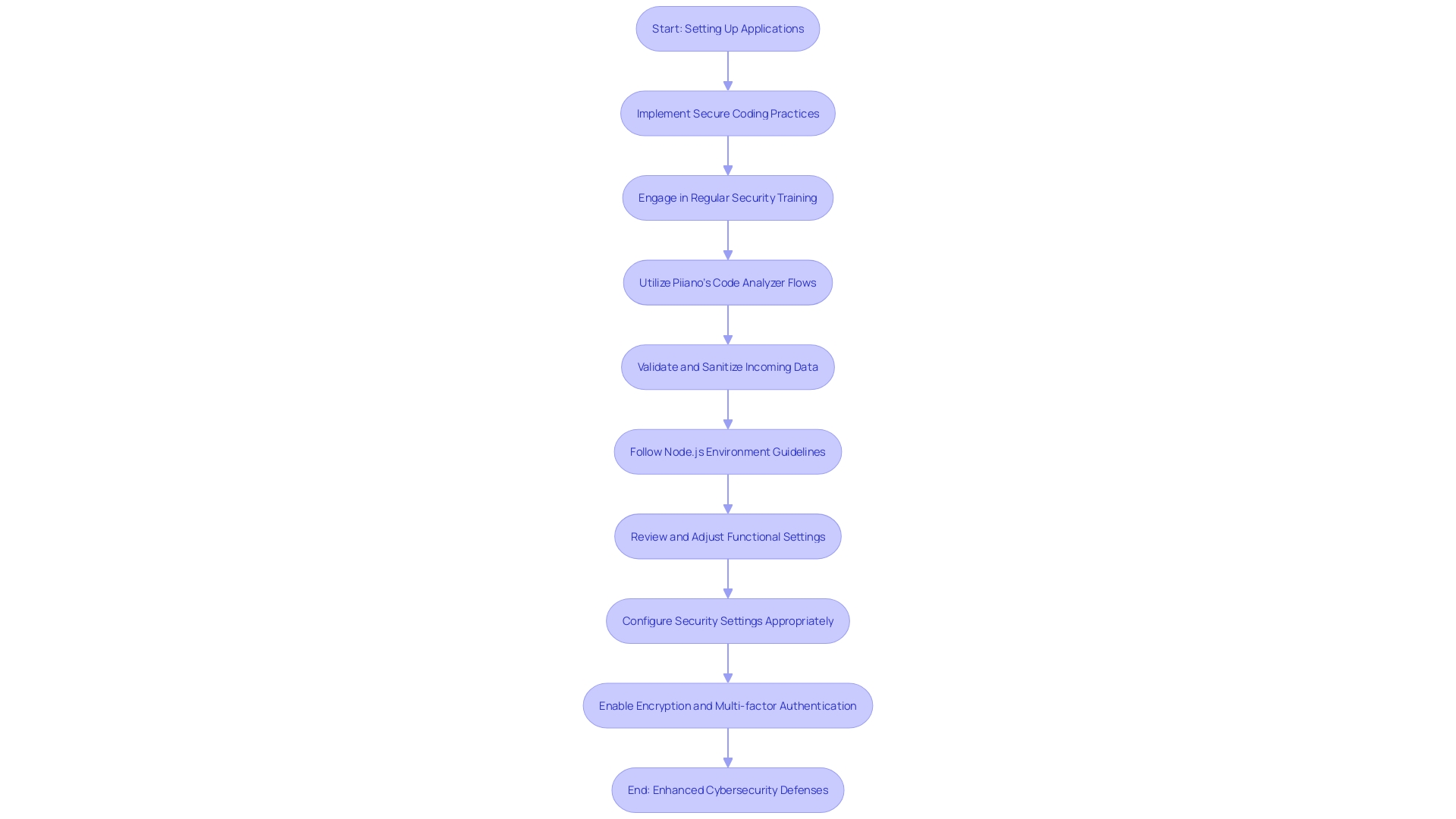

Security Misconfiguration

When configuring applications that handle sensitive information, it's crucial to avoid misconfigurations that create vulnerabilities. Misconfigurations typically arise from incorrect settings in systems, applications, or network devices, whether through oversight or sheer complexity. Countering them requires secure coding practices and regular security training to keep pace with evolving threats.

One effective approach is to use tools such as Piiano's code analyzer Flows, which continuously scans source code for potential information leaks so they are caught before production. This aligns with CISA's latest campaign, 'Secure Our World,' which emphasizes the need for secure-by-design products. It's also crucial to validate and sanitize incoming data to prevent attacks such as SQL injection and XSS, and Node.js environments have specific guidelines for threats like typosquatting and malicious packages.

Overall, a concerted effort by technology providers, combined with continuous education, can significantly reduce risk and strengthen cybersecurity defenses.
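
Many misconfigurations are visible from the outside, for example missing security headers or headers that advertise the server stack. Below is a minimal audit sketch over a response's headers. The required set is a hypothetical baseline rather than a complete policy; a real check would also cover TLS settings, default credentials, and debug modes.

```python
# Hypothetical baseline: None means "must be present", a string means
# "must have exactly this value" (compared case-insensitively).
REQUIRED_HEADERS = {
    "strict-transport-security": None,
    "content-security-policy": None,
    "x-content-type-options": "nosniff",
}
# Headers that advertise the server stack and version to attackers.
LEAKY_HEADERS = {"server", "x-powered-by"}

def audit_headers(headers: dict[str, str]) -> list[str]:
    # HTTP header names are case-insensitive, so normalize them first.
    normalized = {name.lower(): value.strip() for name, value in headers.items()}
    findings = []
    for name, expected in REQUIRED_HEADERS.items():
        value = normalized.get(name)
        if value is None:
            findings.append(f"missing {name}")
        elif expected is not None and value.lower() != expected:
            findings.append(f"unexpected {name}: {value}")
    for name in sorted(LEAKY_HEADERS.intersection(normalized)):
        findings.append(f"information leak via {name}")
    return findings
```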

Vulnerable and Outdated Components

Outdated or vulnerable components are often the weakest links that expose applications to risk. Addressing this requires proactive dependency management so that software components stay current. Dependency freshness is a critical metric: the difference between the version of a dependency currently in use and the latest available version that ideally should be used. Evaluating freshness regularly prevents the gradual buildup of technical debt in the form of outdated dependencies, which can become ticking time bombs for security breaches.
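
Freshness can be measured in several ways. A simple one, sketched below under the assumption of plain `MAJOR.MINOR.PATCH` version strings, reports how many releases a pinned dependency is behind the latest version, counting only the most significant component that differs. The function names and sample pins are illustrative, not taken from any particular tool.

```python
def parse_version(version: str) -> tuple[int, int, int]:
    # Assumes plain "MAJOR.MINOR.PATCH" strings without pre-release tags.
    major, minor, patch = (int(part) for part in version.split("."))
    return major, minor, patch

def version_lag(current: str, latest: str) -> tuple[int, int, int]:
    """Releases behind, counted at the most significant differing component.
    Assumes current <= latest; e.g. 1.2.3 -> 2.0.1 is (1, 0, 0)."""
    cur, new = parse_version(current), parse_version(latest)
    if cur[0] != new[0]:
        return (new[0] - cur[0], 0, 0)
    if cur[1] != new[1]:
        return (0, new[1] - cur[1], 0)
    return (0, 0, new[2] - cur[2])

# Hypothetical pinned versions compared with the latest releases.
pins = {"web-framework": ("4.17.1", "4.21.2"), "http-client": ("1.2.3", "2.0.1")}
for name, (current, latest) in pins.items():
    print(name, version_lag(current, latest))
```

A major-version lag usually signals the most migration work and the highest chance of missing security fixes, which is why it is reported first.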

The software supply chain resembles an intricate web, with each element forming a potential vulnerability. Analogous to a box of brownie mix, where each ingredient originates from a separate supply chain, the software supply chain encompasses layers of code, development tools, and processes that depend on each other. Each layer carries inherent risks that could potentially compromise the final product. The Cybersecurity Ventures Report predicts a significant rise in the expense of software supply chain attacks from 2024 to 2031, emphasizing the need for organizations to strengthen their supply chain protection.

Mitigating both known and unknown risks in the software supply chain requires a perspective that goes beyond conventional Software Composition Analysis (SCA) tools. These tools are useful for flagging known vulnerable versions, but they can miss major supply chain attacks, leaving a critical part of the infrastructure exposed. A comprehensive approach that identifies patterns of defects and analyzes trends over time is needed to improve product quality and protect at scale. By addressing whole classes of flaws rather than individual bugs, developers can move from fixing specific problems to mitigating entire categories of vulnerabilities, improving their overall security posture.

Legacy systems often make the challenge worse: they may fail to meet evolving customer needs, make feature development times unpredictable, and impose integration constraints that limit new feature designs. Developers may also grow frustrated by the mismatch between the complexity of a feature and the complexity of its implementation. Organizations therefore need to weigh strategies ranging from steady-state approaches, which prioritize short-term business value with only minimal technical debt reduction, to radical 'big-bang' approaches that overhaul systems entirely.

Community-driven software development projects, like those by the Public Knowledge Project (PKP), emphasize the importance of community involvement for sustainable and fair access to research infrastructure. By participating in community updates and discussions, organizations can stay informed about the latest developments and best practices in software supply chain security. This collective wisdom is captured in a knowledge base of initiatives, standards, regulations, and other resources that help secure the software supply chain.

Ultimately, securing the software supply chain is a dynamic process that demands vigilance, collaboration, and the willingness to evolve strategies as threats emerge. It's an investment in the long-term viability and protection of software products that organizations cannot afford to overlook.

Identification and Authentication Failures

In the fabric of our digital existence, secure user authentication and session management are linchpins in protecting against unauthorized access and potential data breaches. At the intersection of convenience and safety, businesses are tasked with navigating the complexities of authentication processes that span various platforms and devices. It's an ongoing battle against security risks, development complexities, and customer distrust.

A strong authentication strategy must include robust passwords, a fundamental yet critical measure. Passwords are the gatekeepers of user identity, acting as the initial barrier to personal, financial, and other sensitive information. When a password is compromised, the repercussions can be disastrous, including financial losses, identity theft, and irreparable harm to an organization's reputation.

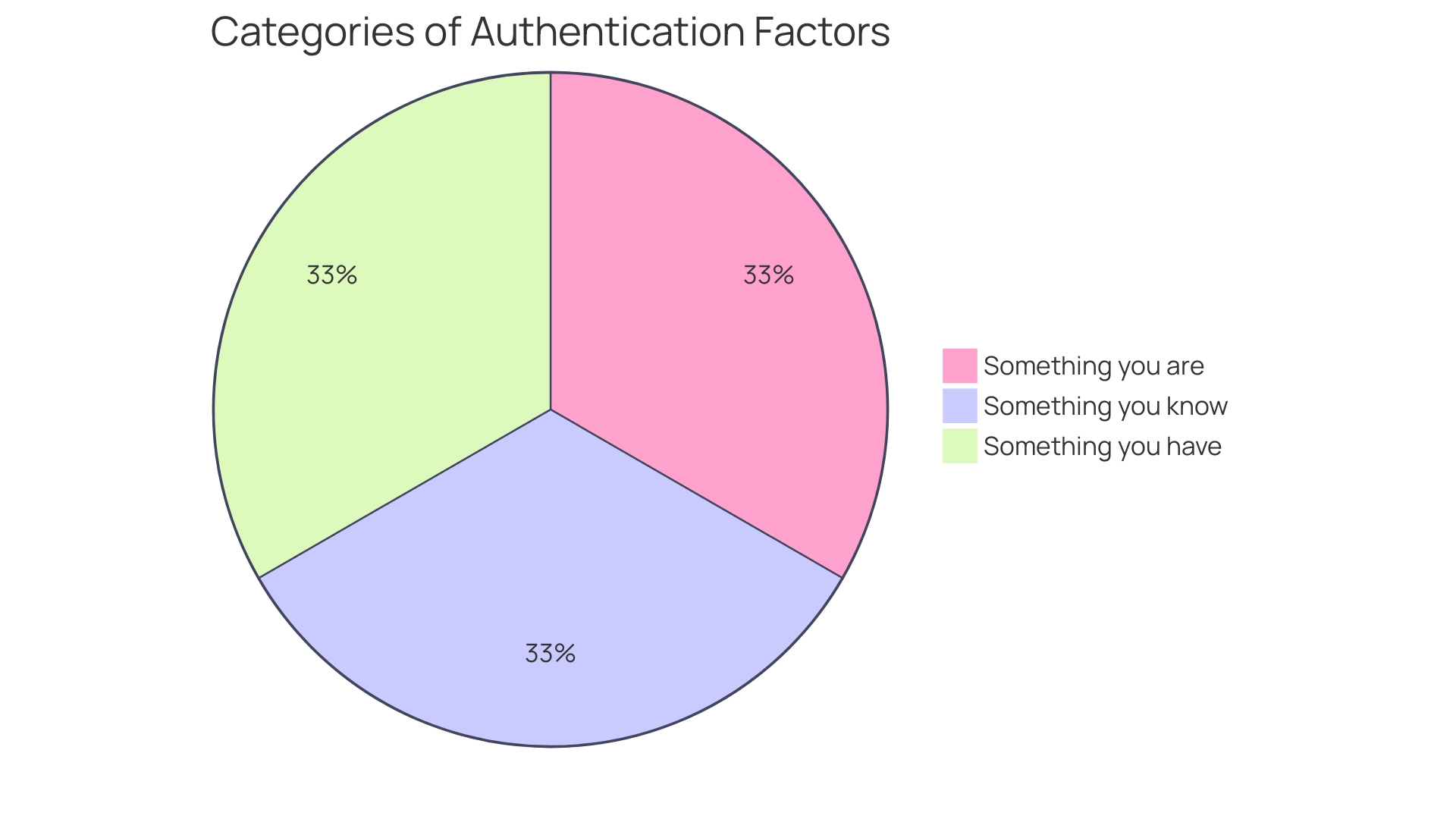

To fortify this first line of defense, multi-factor authentication (MFA) has emerged as a vital component. MFA requires users to present more than one proof of identity before access is granted, which considerably reduces the probability of unauthorized entry. With 67% of SMBs feeling unequipped to handle data breaches, the shift toward MFA and managed cybersecurity services has become more pronounced, and 89% of SMBs now partner with service providers for stronger security.
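
Authenticator apps, a common second factor, usually implement time-based one-time passwords (TOTP, RFC 6238), which build on HOTP (RFC 4226). The sketch below shows the core computation with the standard library only; production systems should prefer a vetted library and should also rate-limit attempts and reject reused codes.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional

def hotp(key: bytes, counter: int, digits: int = 6) -> str:
    # RFC 4226: HMAC-SHA1 over the counter as an 8-byte big-endian integer.
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation picks 4 bytes at this offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)

def totp(key: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    # RFC 6238: the counter is the number of whole `step`-second intervals since the epoch.
    now = time.time() if at is None else at
    return hotp(key, int(now // step), digits)

def verify_totp(key: bytes, submitted: str, at: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    # Accept codes from neighbouring intervals to tolerate clock drift, and
    # compare in constant time to avoid leaking how many digits matched.
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(key, now + i * step, step).encode(), submitted.encode())
        for i in range(-window, window + 1)
    )
```

With the RFC test key `b"12345678901234567890"`, `totp(key, at=59, digits=8)` yields `94287082`, matching the published RFC 6238 test vector.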

Session handling is also crucial for maintaining trust and safety in Single Page Applications (SPAs) built with JavaScript and its frameworks. Because the average organization uses around 20,000 APIs, each a potential entry point for attackers, vigilant session management is critical. It ensures that user interactions stay protected for the whole session, not just at the moment of login.

Reliable authentication goes beyond safeguarding data: it means verifying each user's identity and tightly managing access. With ransomware attacks escalating, and 236.1 million incidents reported in the first half of 2022 alone, the role of authentication in protecting digital assets is more crucial than ever. Sound authentication practices not only reduce the risk of breaches but also reinforce the trust users place in digital systems, protecting the integrity and reputation of businesses in the digital marketplace.

Software and Data Integrity Failures

Protecting the authenticity and integrity of software and data is crucial to prevent unauthorized modifications, which can lead to software and data integrity failures. The implementation of coding practices and guidelines, such as those advocated by the Open Web Application Security Project (OWASP), plays a pivotal role in safeguarding against tampering.

To ensure software supply chain security, frameworks like in-toto offer a robust solution. In-toto provides verifiable metadata that traces the steps in the software supply chain, defining who can perform each step and setting thresholds for the validity of signatures. By enforcing this cryptographic verification on the final product, in-toto helps maintain the integrity of the entire development process.
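
The threshold idea can be illustrated without the in-toto tooling itself. This sketch accepts an artifact only if enough distinct, authorized functionaries have signed its SHA-256 digest. It is a deliberate simplification: HMAC with hypothetical shared keys stands in for the public-key signatures in-toto actually uses, and there is no layout or step metadata.

```python
import hashlib
import hmac

# Stand-in for public-key signatures: each authorized functionary has a
# hypothetical secret key, and this verifier knows them all. in-toto itself
# uses asymmetric signatures, so verifiers never hold signing keys.
FUNCTIONARY_KEYS = {
    "builder-1": b"hypothetical-key-1",
    "builder-2": b"hypothetical-key-2",
    "builder-3": b"hypothetical-key-3",
}

def sign(functionary: str, artifact: bytes) -> str:
    # Sign the artifact's SHA-256 digest rather than the raw bytes.
    digest = hashlib.sha256(artifact).digest()
    return hmac.new(FUNCTIONARY_KEYS[functionary], digest, hashlib.sha256).hexdigest()

def verify_threshold(artifact: bytes, signatures: dict[str, str], threshold: int) -> bool:
    """Accept the artifact only if at least `threshold` distinct authorized
    functionaries produced a valid signature over its current digest."""
    digest = hashlib.sha256(artifact).digest()
    valid = 0
    for name, signature in signatures.items():  # dict keys are distinct signers
        key = FUNCTIONARY_KEYS.get(name)
        if key is None:
            continue  # unknown signers never count toward the threshold
        expected = hmac.new(key, digest, hashlib.sha256).hexdigest()
        if hmac.compare_digest(expected.encode(), signature.encode()):
            valid += 1
    return valid >= threshold
```

Any change to the artifact changes its digest, so every existing signature stops verifying and the threshold is no longer met.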

The CNCF Security Technical Advisory Group, for example, endorses in-toto as a blueprint for secure and verifiable software updates. As a CNCF-graduated project, in-toto offers standardized metadata schemas for recording information about your supply chain and build process. This supports secure signing of artifacts and helps ensure that certificates, which are public keys bound to authenticated identities, are not misused.

Regular training is another key practice in maintaining software integrity. Cybersecurity threats are constantly evolving, making continuous education imperative for development teams to understand the latest threats and best practices. A combination of interactive workshops, online courses, and scenario-based training can effectively instill a proactive safety culture.

In addition, reports such as the OSSRA have highlighted the importance of a Software Bill of Materials (SBOM), underscoring the need to know the components within your software. This understanding is vital for managing the risks posed by third-party and AI-generated code. With an average of 526 open source components per application, as noted in the 2024 OSSRA report, automated security testing becomes essential. Manual testing does not scale, hence the need for tools such as software composition analysis (SCA) to ensure comprehensive coverage.

Taken as a whole, protecting software and data from integrity failures is a complex challenge. It requires a combination of technologies, frameworks like in-toto, regular security training, and automated tools to keep the software and data we depend on protected and reliable.

Security Logging and Monitoring Failures

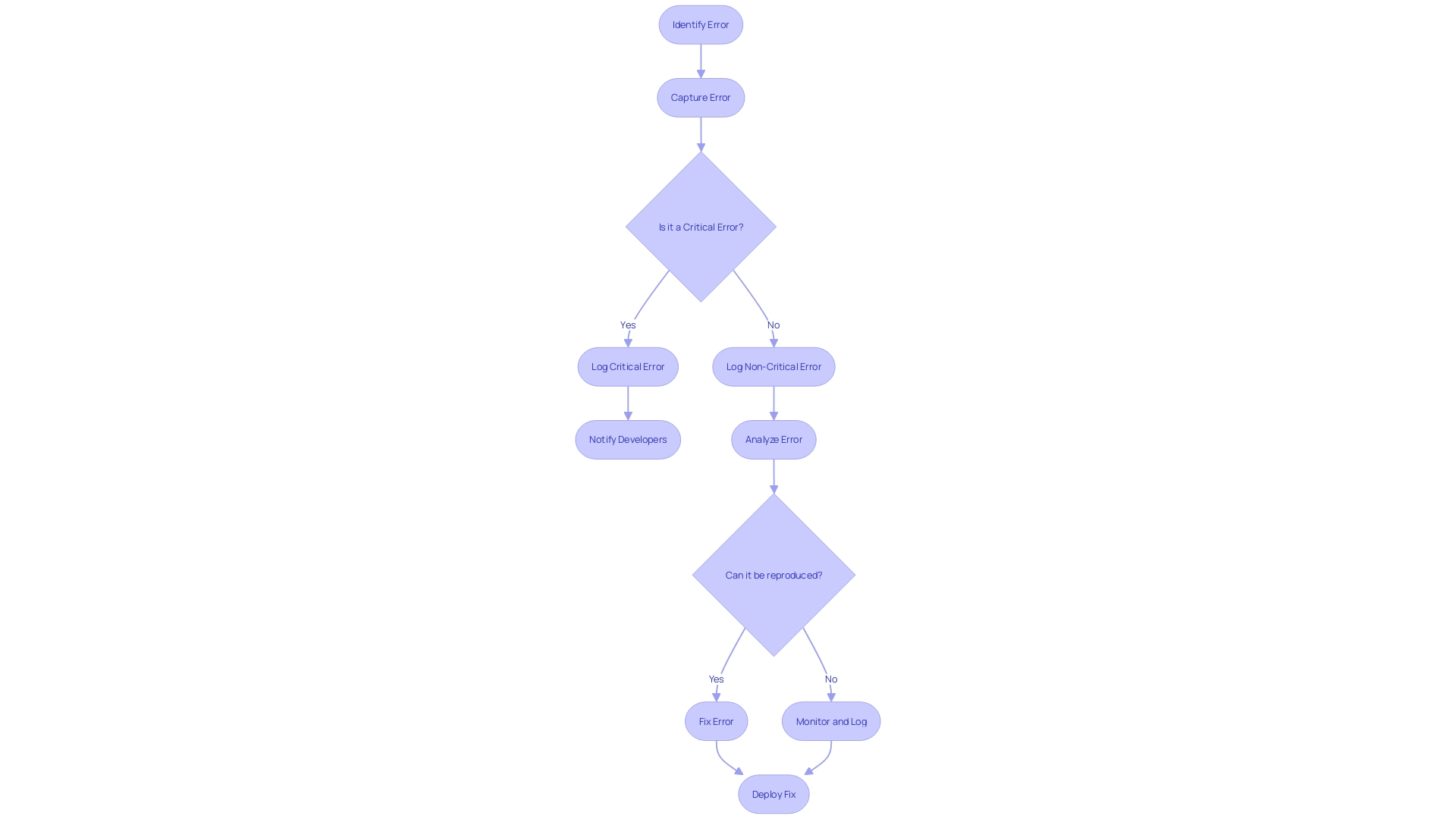

Efficiently handling incidents is a complex task that depends on the capability to detect and respond to threats promptly. Central to this is the strategic implementation of logging and monitoring. When an AWS incident revealed a suspicious request to increase SES sending limits—a service the client wasn't using—it highlighted the importance of vigilant monitoring. This incident serves as a case study underscoring the necessity of logs for early threat identification.
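
The SES episode suggests a simple detection rule: flag activity against services the account is not expected to use. The sketch below applies that rule to simplified audit records. The field names mirror CloudTrail's `eventSource` and `eventName`, but the allowlist and the sample records, including their `eventName` values, are hypothetical and illustrative only.

```python
# Hypothetical allowlist of services this account is expected to use.
EXPECTED_SERVICES = {"s3.amazonaws.com", "ec2.amazonaws.com", "iam.amazonaws.com"}

def flag_unexpected(events: list[dict]) -> list[dict]:
    """Return events that touch a service this account is not expected to use."""
    return [e for e in events if e.get("eventSource") not in EXPECTED_SERVICES]

# Hypothetical, simplified audit records.
events = [
    {"eventSource": "s3.amazonaws.com", "eventName": "GetObject"},
    {"eventSource": "ses.amazonaws.com", "eventName": "PutAccountDetails"},
    {"eventSource": "ec2.amazonaws.com", "eventName": "DescribeInstances"},
]
flagged = flag_unexpected(events)
for event in flagged:
    # In practice this would page someone or open an incident, not just print.
    print(f"ALERT: unexpected call to {event['eventSource']}: {event['eventName']}")
```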

Logs are a foundation of security monitoring, providing detailed records of system activity that are vital for identifying and mitigating potential risks. The hard questions are what to log and how much. Too much logging can overwhelm systems, while too little leaves gaps in visibility. Striking the balance depends on best practices and on tools and services that manage logs effectively. Artificial intelligence is expected to play a growing role in log analysis, helping to normalize and interpret vast amounts of log data.

Fluent Bit, a scalable open-source data collector, and Logging Made Easy (LME), developed by the UK's National Cyber Security Centre to provide real-time visibility into device activity, are examples of tools that streamline logging. Together with insights from Google on open-source supply chain protection, they reinforce the value of logs in maintaining robust digital defenses.

From an organizational perspective, incident response requires a structured approach: a documented incident response plan (IRP) and a dedicated team ready to respond to unplanned disruptions. Identifying contributing factors such as configuration drift or code changes is critical to understanding and mitigating incidents. The Office of Homeland Security Statistics (OHSS) also highlights the breadth of cybersecurity concerns and has indicated that future reports will cover these areas.

In summary, to strengthen security and ensure compliance, organizations should treat logging and monitoring as strategic tools within their incident response framework, supported by up-to-date technologies and informed by the latest industry insights.

Server-Side Request Forgery (SSRF)

Server-side request forgery (SSRF) is a critical security concern in which an attacker induces a server to send requests to destinations of the attacker's choosing, including internal systems that are not otherwise reachable, potentially causing significant damage. Countering SSRF requires robust preventive measures. One effective strategy is meticulous validation of user-supplied URLs so that only permitted destinations pass through. Maintaining an allowlist of approved resources further restricts which URLs the server may fetch, minimizing the risk of unauthorized access to the internal network.

Using reliable APIs and following Node.js practices such as sanitizing user input and managing dependencies also help protect against SSRF. PlanetScale's webhooks service shows this approach in practice: its engineers drew on their past experience with exploits to harden webhook delivery. These measures, combined with staying informed about the latest trends and weaknesses in server security, are essential for building a safe and durable server environment.
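
A minimal sketch of allowlist-based validation for outbound URLs, using only the standard library. The allowed hosts are hypothetical, and the resolver is injectable so the check can be tested offline. Because DNS answers can change between validation and connection (DNS rebinding), a production implementation should connect to the exact address it validated and should also disable or re-validate redirects.

```python
import ipaddress
import socket
from urllib.parse import urlsplit

# Hypothetical allowlist of hosts this server may call on a user's behalf.
ALLOWED_HOSTS = {"api.example.com", "hooks.example.org"}

def is_safe_outbound_url(url: str, resolve=socket.getaddrinfo) -> bool:
    """Return True only for HTTPS URLs to allowlisted hosts whose DNS
    answers are all public (global) IP addresses."""
    parts = urlsplit(url)
    if parts.scheme != "https" or not parts.hostname:
        return False
    try:
        port = parts.port or 443  # .port raises ValueError on a malformed port
    except ValueError:
        return False
    # urlsplit lowercases the hostname and drops any "user@" prefix, so
    # "https://api.example.com@evil.test/" is judged as evil.test.
    if parts.hostname not in ALLOWED_HOSTS:
        return False
    try:
        infos = resolve(parts.hostname, port)
    except socket.gaierror:
        return False
    for *_, sockaddr in infos:
        # Reject loopback, private, link-local (e.g. cloud metadata at
        # 169.254.169.254) and other non-global addresses.
        if not ipaddress.ip_address(sockaddr[0]).is_global:
            return False
    return bool(infos)
```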

Secure Coding Practices: A Detailed Guide

Secure coding practices are the bedrock of software security, particularly in industries where data sensitivity and compliance are paramount. In banking, for example, M&T Bank, an institution with more than a century and a half of history, adapted to digital demands by adopting Clean Code standards to maintain the integrity of its software. A secure coding guide covers many dimensions that are critical to protecting applications from the common vulnerabilities threat actors exploit.

Key aspects of secure coding include validating input to prevent SQL injection and other attacks, encoding output to stop cross-site scripting, enforcing robust authentication and secure password management, and managing sessions to prevent hijacking. Access control and sound cryptographic practices are also vital, including post-quantum algorithms such as ML-KEM, which NIST standardized in FIPS 203 and Microsoft has recently added to its cryptographic libraries. Logging and error handling help identify and mitigate issues promptly, while protecting data both at rest and in transit is critical to prevent unauthorized access and leaks.

Securing communications and carefully configuring systems and their deployment can also significantly reduce the risk of breaches. As security becomes more deeply rooted in the software development lifecycle, as recent industry discussions show, the emphasis shifts from reactive patching to building protection into the code from the ground up. This aligns with Google's approach, which emphasizes safe coding practices to prevent whole classes of bugs and to deliver security at scale across its vast range of applications and services.

Ultimately, the goal is to embed security into the fabric of the development process, ensuring that it is not an afterthought but a fundamental aspect of creating reliable, robust, and trustworthy software.

Input Validation

Validating user input is a cornerstone of secure coding, essential for thwarting injection attacks such as SQL injection, in which attackers manipulate a database through malicious input. Protecting against these threats requires understanding and applying best practices for validating any user-controlled input.

A typical vulnerability arises when user input is incorporated directly into application logic or queries without proper scrutiny. An attacker might submit malicious code, such as scripts or database commands, that the application then executes. For example, an input like `' OR '1'='1'; DROP TABLE users;` could have disastrous consequences if it is not handled correctly.
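
An allowlist check rejects a payload like that outright, because it contains characters no legitimate value needs. The username rule below is a hypothetical example; validation of this kind complements parameterized queries rather than replacing them.

```python
import re

# Hypothetical rule: 3-32 characters drawn from letters, digits, '_', '.', '-'.
USERNAME_RE = re.compile(r"[A-Za-z0-9_.-]{3,32}")

def validate_username(value: str) -> str:
    # fullmatch anchors the pattern to the entire string, so trailing
    # payloads (or a sneaky trailing newline) cannot slip past.
    if USERNAME_RE.fullmatch(value) is None:
        raise ValueError("invalid username")
    return value
```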

The SQL courtroom analogy is a useful way to think about these risks: an unexpected command slipped into the input disrupts the intended flow of the proceedings, much as a wrongly read verdict might inadvertently release a defendant. In the same way, code injection vulnerabilities emerge whenever user input is not validated or sanitized, opening the door to exploitation.

Developers can draw upon resources like the OWASP Smart Contract Top 10 to understand and guard against common vulnerabilities in smart contracts and other software components. This awareness document helps teams identify and secure against the top weaknesses that have been exploited in recent years.

Furthermore, industry initiatives such as Secure by Design highlight the significance of incorporating protection into products from the beginning. These efforts aim to reduce systemic vulnerabilities, such as cross-site scripting, by embedding best practices into the design and development lifecycle.

Regular expressions, often used for input validation, can themselves be a source of security problems. Regular expression denial of service (ReDoS) vulnerabilities, for example, can exist in both dependency code and application code. Developers need to recognize potentially vulnerable patterns, such as nested quantifiers, to prevent these issues.
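
The classic vulnerable shape is a quantified group that contains another quantifier. The sketch contrasts such a pattern with an equivalent one that has no nesting, and adds a length cap before matching. The patterns and the cap are illustrative only.

```python
import re

# Vulnerable shape: a quantified group containing another quantifier.
# On input such as "aaaa...ab" the engine can try exponentially many ways to
# split the a's between the inner and outer `+` before giving up, so never
# run this pattern on long untrusted input.
NESTED = re.compile(r"(a+)+")

# Same language (one or more a's) without nesting: each character can be
# consumed in only one way, so a failed match backtracks linearly.
FLAT = re.compile(r"a+")

MAX_LEN = 256  # hypothetical cap: bounding input size bounds worst-case work

def matches_flat(value: str) -> bool:
    # Check length first, then anchor the match to the whole string.
    return len(value) <= MAX_LEN and FLAT.fullmatch(value) is not None
```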

From an industry perspective, significant efforts are being made to address the backlog of vulnerabilities requiring analysis, as noted by NIST's management of the National Vulnerability Database. The increasing number of software applications and associated vulnerabilities underscores the importance of robust input validation strategies.

In conclusion, effective input validation is a multifaceted approach that requires awareness of common vulnerabilities, the implementation of best practices, and an industry-wide commitment to Secure by Design principles. By prioritizing security at all stages of development, developers can better protect their applications from a wide array of injection attacks.

Output Encoding

Mitigating cross-site scripting (XSS) attacks requires understanding the nuances of output encoding. For example, a penetration test of Moodle, a widely adopted learning management system, found that users with certain roles could carry out XSS attacks within the system, showing that even reputable software can harbor such vulnerabilities.

Output encoding transforms user-generated content into a safe representation before it is rendered on the page, so that malicious scripts are displayed as text rather than executed. A complementary approach, seen in a validation function from Moodle's codebase, is to constrain the input itself, for instance by disallowing PHP comments and standardizing variable names so they cannot contain quotation marks. Although these steps seem straightforward, they are critical to preserving the integrity of the application and the confidentiality of sensitive information.
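
In Python, the standard library's `html.escape` performs this encoding for HTML text and quoted attribute values. The `render_comment` helper below is hypothetical. HTML escaping is context-specific: values placed in JavaScript, in URLs (for example `href="javascript:..."`), or in CSS need different encoding or validation.

```python
import html

def render_comment(author: str, body: str) -> str:
    # Encode at output time, for the HTML context each value lands in.
    # quote=True also escapes " and ' so the attribute value cannot break out.
    return (
        f'<div class="comment" data-author="{html.escape(author, quote=True)}">'
        f"{html.escape(body)}</div>"
    )

print(render_comment("eve", "<script>alert(1)</script>"))
# <div class="comment" data-author="eve">&lt;script&gt;alert(1)&lt;/script&gt;</div>
```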

In Node.js, output encoding requires particular care. Because Node.js lets developers specify the character encoding of data written to the HTTP response body, it is essential to declare that encoding correctly (for example, `charset=utf-8` in the `Content-Type` header) and to serialize Unicode data consistently to prevent encoding anomalies.

The stakes are underscored by estimates that more than 30% of websites are susceptible to XSS attacks. Firms such as Hotjar, which collects detailed user data across more than a million websites, likewise stress the importance of strict output encoding to safeguard confidentiality and information security.

Implementing these best practices is not just about following guidelines; it's about creating a secure environment where individuals can trust their data is safe from exploits. As the technical landscape evolves, so must our approaches to safeguarding, ensuring that encoding user-generated content remains a top priority in our defense against XSS vulnerabilities.

Authentication and Password Management

Protecting accounts through proper authentication and password management is vital but challenging for many organizations, as British Telecom's (BT) transition toward a passwordless experience shows. In light of threats such as the SolarWinds hack, the focus has shifted to robust measures like Privileged Access Management (PAM), Identity and Access Management (IAM), and credential management. These efforts aim to deliver a seamless user experience without compromising security.

Striking this balance starts with understanding why secure authentication matters. As a cornerstone of digital protection, authentication is the gatekeeper for sensitive information, typically verifying user identities with usernames and passwords. It is the primary barrier against breaches, which can severely damage an organization's finances and reputation.

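Password hashing is one of the most significant measures for protecting stored passwords. Hashing transforms a password into a fixed-length digest through a one-way function, making it computationally infeasible to recover the original password from the digest. Unlike encryption, which is reversible, hashing means that even if the stored data is compromised, the passwords themselves are not directly exposed. To resist brute-force and precomputed-table attacks, each password should be combined with a unique random salt and processed by a deliberately slow algorithm such as bcrypt, scrypt, Argon2, or PBKDF2.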
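
A minimal sketch of salted, deliberately slow password hashing with the standard library's `hashlib.pbkdf2_hmac`. The storage format and the iteration count are assumptions; check current guidance for your chosen algorithm, and prefer a memory-hard function such as Argon2id or scrypt where available.

```python
import hashlib
import hmac
import secrets

ITERATIONS = 600_000  # assumed work factor; tune to current guidance and hardware

def hash_password(password: str) -> str:
    salt = secrets.token_bytes(16)  # unique random salt per password
    dk = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    # Store the algorithm and parameters with the salt and hash so they can be
    # upgraded later without breaking existing records.
    return f"pbkdf2_sha256${ITERATIONS}${salt.hex()}${dk.hex()}"

def verify_password(password: str, stored: str) -> bool:
    try:
        algorithm, iterations, salt_hex, hash_hex = stored.split("$")
    except ValueError:
        return False  # malformed record
    if algorithm != "pbkdf2_sha256":
        return False
    dk = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"),
                             bytes.fromhex(salt_hex), int(iterations))
    # Constant-time comparison avoids leaking how much of the hash matched.
    return hmac.compare_digest(dk, bytes.fromhex(hash_hex))
```

Because each call draws a fresh salt, hashing the same password twice produces different records, so identical passwords cannot be spotted in a leaked table.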

Best practices in authentication also include careful protection of APIs. With the growth of digital and cloud-based infrastructure, APIs have become the foundation of web and mobile applications and need strict controls to keep sensitive information safe from unauthorized access and breaches.

Although passwords date back to ancient military use, the digital age has exposed their limitations. Managing separate passwords for many platforms is stressful and leads to risky habits. This underlines the need for evolving authentication methods such as token-based systems and biometrics, which fall into the categories of 'what you have' and 'who you are' respectively.
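As one example of a 'what you have' factor, the six-digit codes produced by authenticator apps are commonly time-based one-time passwords (TOTP, RFC 6238), built on HMAC-based one-time passwords (HOTP, RFC 4226). The sketch below implements both with the standard library, assuming the common defaults of HMAC-SHA1, 30-second steps, and 6 digits; the one-step drift window in `verify_totp` is an assumed policy. A production verifier should also reject codes that have already been used.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a byte offset
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over floor(time / step)."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step), digits)


def verify_totp(secret: bytes, submitted: str, now: Optional[float] = None,
                step: int = 30, window: int = 1) -> bool:
    """Accept codes from adjacent time steps to tolerate small clock drift."""
    if now is None:
        now = time.time()
    for drift in range(-window, window + 1):
        if hmac.compare_digest(totp(secret, now + drift * step, step), submitted):
            return True
    return False


# Test secret from the RFCs' appendices:
secret = b"12345678901234567890"
print(totp(secret, now=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

The shared secret is provisioned once (typically via a QR code) and never sent again; each login only transmits a short-lived code derived from it.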

While only a small fraction of organizations feel fully prepared to defend against cyberattacks, the cybersecurity industry is growing rapidly, with an estimated global workforce of 4.7 million professionals. Their expertise and continual adaptation to emerging threats are essential for protecting company data and maintaining system integrity.

In conclusion, as we navigate the complexities of digital security, investing in strong authentication practices and password management is not merely recommended but essential for safeguarding accounts and preventing unauthorized access.

Session Management

Prioritizing session management is crucial to prevent session hijacking and protect user privacy. Organizations need strong session management protocols that cover generating unpredictable session IDs, handling sessions responsibly, and expiring them promptly. A robust software development lifecycle (SDLC) builds such practices into every stage of development, keeping protection central from inception to deployment.
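The three session practices named above (unpredictable IDs, responsible handling, timely expiration) can be sketched as a small in-memory store. This is an illustration under stated assumptions: the `SessionStore` class is hypothetical, the timeout values are assumed policy choices, and a real deployment would keep sessions in a shared cache or database. When the ID is sent to the browser, the cookie would typically carry the `Secure`, `HttpOnly`, and `SameSite` attributes.

```python
import secrets
import time
from dataclasses import dataclass
from typing import Dict, Optional

IDLE_TIMEOUT = 15 * 60          # seconds of inactivity allowed (assumed value)
ABSOLUTE_TIMEOUT = 8 * 60 * 60  # maximum session lifetime (assumed value)


@dataclass
class Session:
    user_id: str
    created: float
    last_seen: float


class SessionStore:
    """In-memory store; a hypothetical stand-in for a shared cache or database."""

    def __init__(self) -> None:
        self._sessions: Dict[str, Session] = {}

    def create(self, user_id: str, now: Optional[float] = None) -> str:
        now = time.time() if now is None else now
        sid = secrets.token_urlsafe(32)  # 32 random bytes from the OS CSPRNG
        self._sessions[sid] = Session(user_id, now, now)
        return sid

    def get(self, sid: str, now: Optional[float] = None) -> Optional[str]:
        """Return the user for a live session, expiring stale ones on access."""
        now = time.time() if now is None else now
        session = self._sessions.get(sid)
        if session is None:
            return None
        if (now - session.last_seen > IDLE_TIMEOUT
                or now - session.created > ABSOLUTE_TIMEOUT):
            del self._sessions[sid]
            return None
        session.last_seen = now  # sliding idle window
        return session.user_id

    def rotate(self, sid: str, now: Optional[float] = None) -> Optional[str]:
        """Give a live session a new ID (e.g. after login) to prevent session fixation."""
        if self.get(sid, now) is None:
            return None
        new_sid = secrets.token_urlsafe(32)
        self._sessions[new_sid] = self._sessions.pop(sid)
        return new_sid

    def destroy(self, sid: str) -> None:
        """Server-side logout: the ID stops working immediately."""
        self._sessions.pop(sid, None)
```

Combining an idle timeout with an absolute lifetime limits how long a stolen ID stays useful, even for a session an attacker keeps active.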

For software businesses, seamless yet secure user experiences are the gold standard, particularly across multiple platforms. The challenges are substantial: fragmented authentication processes heighten risk, complicate development, and erode customer trust. One successful response is DevSecOps, which integrates security practices with development and operations and fosters a culture of shared responsibility and transparency. Building safeguards in from the earliest phases of development is critical for anticipating weaknesses.

For instance, New America, a hybrid think tank, balances protecting sensitive data with the need for user-friendly credential management across a workforce that fluctuates between 150 and 180 employees. Its experience underscores how robust session management helps protect confidential information from cyber threats.

The state of the cybersecurity field shows how much remains to be done. Only 4% of organizations are fully confident in their protection against cyberattacks, even though the global cybersecurity workforce numbers approximately 4.7 million. The median annual salary for information security analysts in 2021 was $102,600, reflecting the sector's growth and the value placed on safeguarding digital assets. Against this backdrop, secure session management is a critical part of any comprehensive security strategy.

Access Control

In web applications, access control goes beyond mere permission settings; it is about granting appropriate access based on roles and relationships within an app's ecosystem. Traditional role-based access control (RBAC) assigns static roles to users, which can become cumbersome in dynamic organizations. Attribute-based access control (ABAC), by contrast, decides access from attributes of the user, the resource, and the request context, offering flexibility at the cost of more complex administration.
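The difference between the two models is easiest to see side by side. In the sketch below, the role names, permission strings, attributes, and the business-hours rule are all hypothetical examples: RBAC looks up a static role table, while ABAC computes the decision from attributes at request time.

```python
from dataclasses import dataclass
from typing import Dict, Set

# RBAC: permissions hang off static roles (hypothetical names).
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "viewer": {"report:read"},
    "editor": {"report:read", "report:write"},
    "admin": {"report:read", "report:write", "report:delete"},
}


def rbac_allows(user_roles: Set[str], permission: str) -> bool:
    """Allowed if any of the user's roles grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user_roles)


# ABAC: the decision is computed from attributes of user, resource and context.
@dataclass
class User:
    department: str
    clearance: int


@dataclass
class Resource:
    department: str
    sensitivity: int


def abac_allows(user: User, resource: Resource, action: str, hour: int) -> bool:
    same_department = user.department == resource.department
    cleared = user.clearance >= resource.sensitivity
    business_hours = 8 <= hour < 18  # context attribute (assumed rule)
    if action == "read":
        return same_department and cleared
    if action == "write":
        return same_department and cleared and business_hours
    return False  # deny by default: unknown actions are refused


print(rbac_allows({"editor"}, "report:write"))                                 # True
print(abac_allows(User("finance", 2), Resource("finance", 2), "write", hour=22))  # False
```

Both functions deny anything not explicitly granted, which is the safer default for either model.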

To address these limitations, a more nuanced approach called Relationship-Based Access Control (ReBAC) has emerged. ReBAC models the web of relationships between entities as a 'Policy as a Graph', much like the interconnected streets on a city map. The model captures not only individuals and their roles but also the dynamic interplay between departments and teams within an organization.
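A minimal ReBAC check can be sketched as a graph search. This assumes relationships are stored as hypothetical `(subject, relation, object)` tuples, and that a permission is granted when a path from the user to the resource exists using only the relation types that confer it. Production ReBAC systems evaluate richer, typed rules, so treat this as a simplified illustration.

```python
from collections import deque
from typing import Dict, List, Set, Tuple

# A relationship tuple: (subject, relation, object). All names are hypothetical.
Edge = Tuple[str, str, str]


class RelationshipGraph:
    def __init__(self, edges: List[Edge]) -> None:
        self._out: Dict[str, List[Tuple[str, str]]] = {}
        for subject, relation, obj in edges:
            self._out.setdefault(subject, []).append((relation, obj))

    def can_reach(self, subject: str, target: str, relations: Set[str]) -> bool:
        """Breadth-first search: is there a path from subject to target
        that follows only the given relation types?"""
        seen = {subject}
        queue = deque([subject])
        while queue:
            node = queue.popleft()
            for relation, obj in self._out.get(node, []):
                if relation not in relations or obj in seen:
                    continue
                if obj == target:
                    return True
                seen.add(obj)
                queue.append(obj)
        return False


graph = RelationshipGraph([
    ("alice", "member_of", "team-a"),
    ("team-a", "editor_of", "folder-x"),
    ("folder-x", "parent_of", "doc-1"),
    ("bob", "member_of", "team-b"),
    ("team-b", "viewer_of", "folder-x"),
])
CAN_EDIT = {"member_of", "editor_of", "parent_of"}
print(graph.can_reach("alice", "doc-1", CAN_EDIT))  # True: via team-a and folder-x
print(graph.can_reach("bob", "doc-1", CAN_EDIT))    # False: team-b only views
```

Because access follows relationships, moving Alice to another team or the document to another folder changes her permissions without touching any role definitions.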

The distinction between authentication and authorization is critical. Authentication is the front gate, verifying identities, while authorization determines what an identified user may do once inside. Think of an amusement park: authentication is showing your ticket at the entrance, and authorization is the set of rules that decides which rides you may take based on attributes such as your height.

With the rise of digital accessibility, companies are urged to contractually stipulate their software accessibility requirements to ensure compliance and clarity of expectations. Accessibility is not only about technical specifications but also about the 'soft' components—layout, icons, fonts, language—that require expert human assessment to achieve a truly accessible system.

For effective access control, it is essential to engage key stakeholders and maintain robust policies, coupled with user training and regular audits to ensure the system's ongoing effectiveness. As the ASIS research underscores, access control is a vital component of physical security, touching every level of an organization and requiring a collaborative effort to optimize and prepare for future advancements.

Cryptographic Practices

Understanding the intricacies of secure coding practices is crucial for preserving the sanctity of sensitive information. In the realm of Java applications, where customer information, financial details, and transaction records are frequently managed, the implementation of robust cryptographic measures is not just a recommendation but a necessity.

Encryption, the practice of transforming plaintext into ciphertext, keeps data incomprehensible to unauthorized parties. However, developers must decide carefully when to use it. Passwords, for example, should not be encrypted, because there is never a legitimate need to recover the original password; hashing is the preferred technique for protecting them.

Digital signatures play a pivotal role in verifying the authenticity of digital messages, serving as an electronic equivalent of a traditional ink signature. They confirm the signer's identity and the message's integrity, particularly in sensitive online environments like banking and healthcare. LeGrow, a mathematics professor, highlights the importance of digital signatures by stating, "A digital signature assures that you are the only one who could have produced that signature and that you endorse the content of the document." Yet, there are situations where alternative forms of digital signatures, such as ring or blind signatures, are more appropriate to preserve anonymity or the confidentiality of a voter's choice.

To ensure that the coding practices align with international standards, the Common Criteria for Information Technology Security Evaluation (CC) provides a comprehensive certification process. Products undergo thorough evaluation by licensed laboratories to confirm their attributes, and certificates issued by Certificate Authorizing Schemes are recognized globally.

Incorporating these secure coding practices is not only a strategic move for Java developers but also a commitment to safeguarding the wider digital ecosystem. Guided by experienced practitioners such as Rich Salz and Jan Schaumann of Akamai, who advocate for stronger security defaults in systems and in customer relationships, developers can build applications that are both functional and well defended.

Error Handling and Logging

Error handling and logging are a complex yet crucial aspect of software security. Errors should give developers and operators the insight they need without disclosing sensitive data to users or attackers. Separately, research-focused companies note that deliberately accepting some technical debt during a project's exploratory phase can speed innovation; they distinguish exploration, where diverse solutions are tested, from exploitation, where the best solution is polished and packaged.

Developers often prefer coding the "happy path" and put off the less appealing task of error handling, but effective error management is unavoidable. Techniques have evolved considerably, from cumbersome return codes to exceptions, which hand the work of handling errors to a dedicated handler.
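The principle of giving insight without disclosure can be sketched as a single exception boundary. In this illustration, the `handle_request` wrapper, the sensitive field names, and the convention that `ValueError` messages are safe to show users are all assumptions: expected validation errors get a specific message, while unexpected failures are logged in full (with sensitive inputs redacted) and the client only receives a generic message plus an incident ID for support.

```python
import logging
import uuid
from typing import Any, Callable, Dict, Tuple

logger = logging.getLogger("app")

SENSITIVE_KEYS = {"password", "token", "card_number"}  # assumed field names


def redact(data: Dict[str, Any]) -> Dict[str, Any]:
    """Mask values of sensitive fields before they reach the logs."""
    return {k: ("[REDACTED]" if k.lower() in SENSITIVE_KEYS else v) for k, v in data.items()}


def handle_request(handler: Callable[[Dict[str, Any]], Dict[str, Any]],
                   params: Dict[str, Any]) -> Tuple[int, Dict[str, Any]]:
    """Run a handler; on failure, keep details server-side and return a safe response."""
    try:
        return 200, handler(params)
    except ValueError as exc:
        # Expected validation failure; assumes these messages are written for end users.
        return 400, {"error": str(exc)}
    except Exception:
        incident_id = uuid.uuid4().hex
        # Stack trace and redacted inputs go to server logs only, tagged with the ID.
        logger.exception("unhandled error id=%s params=%s", incident_id, redact(params))
        return 500, {"error": "Internal error", "incident_id": incident_id}
```

The incident ID lets support staff find the full log entry for a user's report without the response revealing stack traces, queries, or configuration details.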

As Google's experience shows, a key to reducing common defect rates is focusing on the developer ecosystem. Coding practices that build in safety by design can prevent whole classes of vulnerabilities. This holistic approach to developer resources, processes, and tooling is more effective than after-the-fact remedies like code review and testing, which catch only a subset of defects. Pursuing safety and reliability together, as industry practice suggests, can significantly strengthen software systems.

To underline the importance of internalizing safe coding practices, consider that education may be the most powerful tool for application security. Tailoring training to the specific characteristics of the company and its engineers is key to instilling these methods; the process takes time but can significantly improve code security.

Data Protection

Protecting sensitive information is not just a technical challenge but a strategic imperative, involving practices such as encryption, secure storage, and anonymization. Consider Pyramid Healthcare, which had to manage a complex IT infrastructure across its large network of facilities and staff. By taking a strategic approach to information security, it could ensure consistent protection across its various systems.

Similarly, RetailBank's decision to use synthetic data to test its Car Detect product exemplifies the principle of data minimization. The approach helps it comply with privacy regulations and reduces the risk of exposing customer information.

Moreover, a clearly defined security policy provides a framework for managing sensitive information and is essential for regulatory compliance. It serves as a roadmap through the complexities of cybersecurity, spelling out what employees may and may not do and reducing the risk of accidental breaches.

De-identification matters because seemingly harmless data points can be combined to re-identify individuals, so privacy risks extend beyond obvious personally identifiable information (PII). The sensitivity of such data calls for careful handling and for collecting and retaining as little of it as possible.
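A minimal de-identification sketch might combine three techniques: dropping direct identifiers, replacing the join key with a keyed hash (pseudonymization), and coarsening quasi-identifiers such as age and ZIP code. The record fields, key handling, and band widths are hypothetical. These steps reduce but do not eliminate re-identification risk; formal guarantees require methods such as k-anonymity checks or differential privacy.

```python
import hashlib
import hmac
from typing import Any, Dict

# Hypothetical secret kept outside the dataset (e.g. in a key vault). Without it,
# pseudonyms cannot be recomputed from guessed e-mail addresses.
PSEUDONYM_KEY = b"replace-with-a-secret-from-your-key-store"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Keyed hash: stable for the same input (records stay joinable), not reversible."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def generalize_age(age: int, width: int = 10) -> str:
    """Coarsen an exact age into a band such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def deidentify(record: Dict[str, Any]) -> Dict[str, Any]:
    """Drop direct identifiers, pseudonymize the join key, generalize quasi-identifiers."""
    return {
        "patient_ref": pseudonymize(record["email"]),
        "age_band": generalize_age(record["age"]),
        "zip3": record["zip"][:3],  # truncated ZIP code
        "diagnosis": record["diagnosis"],
    }


print(deidentify({"name": "Jane Doe", "email": "jane@example.com",
                  "age": 34, "zip": "94110", "diagnosis": "asthma"})["age_band"])  # 30-39
```

Using HMAC rather than a plain hash matters: an unkeyed hash of an e-mail address can be reversed simply by hashing a list of candidate addresses.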

As the cybersecurity industry evolves, with only 4% of organizations confident in their security measures, the role of security professionals becomes even more important. Their expertise is essential to upholding the integrity and confidentiality of information as the worldwide cybersecurity workforce grows to an estimated 4.7 million.

In this rapidly evolving landscape, staying informed is crucial. Readers who want to explore the tension between privacy and usefulness will find resources on statistical data privacy and the privacy-utility trade-off invaluable; they examine how data can be released and what trade-offs are involved, offering guidance for organizations navigating this terrain.

Communication Security

Encryption is a pivotal defense for protecting information as it travels across the digital landscape. By scrambling data into a code that can be deciphered only with the right key, encryption keeps sensitive information protected whether it is at rest, in transit, or being processed.

A fundamental part of communication security is HTTPS, the encrypted version of HTTP, which by default runs on port 443. HTTPS creates a secure channel through which information can travel safely between client and server. Its strength comes from the Transport Layer Security (TLS) protocol, the successor to SSL, which protects the confidentiality and integrity of exchanged data by establishing an encrypted, authenticated connection between the two parties.
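On the client side, most of HTTPS's protection depends on actually verifying the server's certificate and hostname. The sketch below uses Python's standard `ssl` module: `ssl.create_default_context()` loads the system CA store and enables certificate and hostname checks, and raising the minimum version to TLS 1.2 is an assumed policy choice. `fetch_status_line` is a hypothetical helper that needs network access to run.

```python
import socket
import ssl


def make_client_context() -> ssl.SSLContext:
    """TLS client settings: verify certificates and hostnames, refuse legacy versions."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and check_hostname=True by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed policy: no TLS 1.0/1.1
    return ctx


def fetch_status_line(host: str, port: int = 443, timeout: float = 5.0) -> str:
    """Open a verified TLS connection and return the HTTP status line."""
    ctx = make_client_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        # server_hostname enables SNI and is the name the certificate is checked against.
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            request = f"HEAD / HTTP/1.1\r\nHost: {host}\r\nConnection: close\r\n\r\n"
            tls.sendall(request.encode("ascii"))
            return tls.recv(1024).decode("latin-1").split("\r\n", 1)[0]
```

A common mistake is to "fix" certificate errors by setting `verify_mode = ssl.CERT_NONE`, which keeps the traffic encrypted but lets anyone impersonate the server.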

Encryption converts plaintext to ciphertext using a cryptographic algorithm and a key; recovering the plaintext requires the corresponding decryption key. Keys are made long enough that brute-force attacks, which try every possible key in turn, are computationally infeasible.
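As a rough worked example, assume a 128-bit key (the AES-128 key size) and a hypothetical attacker able to test one trillion ($10^{12}$) keys per second. Exhausting the key space would take:

$$
2^{128} \approx 3.4 \times 10^{38}\ \text{keys}, \qquad
\frac{3.4 \times 10^{38}\ \text{keys}}{10^{12}\ \text{keys/s}} \approx 3.4 \times 10^{26}\ \text{s}
\approx \frac{3.4 \times 10^{26}\ \text{s}}{3.15 \times 10^{7}\ \text{s/year}} \approx 1.1 \times 10^{19}\ \text{years}
$$

On average the correct key turns up after searching half the space, which changes the result only by a factor of two. For comparison, the universe is roughly $1.4 \times 10^{10}$ years old.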

As an example of encryption in action, RSA, a public key system, uses two keys: a public key for encryption and a private key for decryption. This system not only encrypts messages but also allows for digital signatures, adding a layer of authenticity and non-repudiation to communications. With RSA, one can encrypt a message using the recipient's public key, ensuring that only they, with their private key, can decrypt it. Conversely, a sender can sign a message with their private key, allowing anyone with the corresponding public key to verify the message's origin.
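The encrypt/decrypt and sign/verify relationships described above can be traced with "textbook" RSA and deliberately tiny primes. This is for illustration only and is not secure: real systems use keys of 2048 bits or more, randomized padding (OAEP for encryption, PSS for signatures), and a vetted cryptographic library rather than hand-written arithmetic.

```python
# Textbook RSA with toy numbers: illustrative only, NOT secure.
p, q = 61, 53
n = p * q                # modulus: 3233
phi = (p - 1) * (q - 1)  # Euler's totient: 3120
e = 17                   # public exponent, coprime with phi
d = pow(e, -1, phi)      # private exponent (modular inverse, Python 3.8+): 2753


def encrypt(m: int) -> int:
    """Anyone can encrypt with the recipient's public key (e, n)."""
    return pow(m, e, n)


def decrypt(c: int) -> int:
    """Only the holder of the private key (d, n) can decrypt."""
    return pow(c, d, n)


def sign(m: int) -> int:
    """The sender signs with their private key."""
    return pow(m, d, n)


def verify(m: int, s: int) -> bool:
    """Anyone with the sender's public key can check the signature."""
    return pow(s, e, n) == m


print(encrypt(65))  # 2790
```

The two operations are mirror images: encryption applies the public exponent first so only the private key can undo it, while signing applies the private exponent first so anyone can check it with the public key.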

The importance of mastering secure communication protocols is underlined by the precarious state of cybersecurity. An estimated 236.1 million ransomware attacks were reported globally in the first half of 2022. Small and medium-sized businesses (SMBs) are particularly vulnerable: 67% admit they lack the in-house skills to deal with breaches, though more of them are turning to Managed Service Providers to fill the gap.

Encryption tooling continues to improve in response to these challenges. The release of OpenSSL 3.2.0, for example, was the culmination of over two years of development with contributions from more than 300 authors. It reflects a collective effort to strengthen data protection, backed by organizations that fund the project's ongoing development.

As we navigate an era where cyber threats are increasingly sophisticated, comprehending and implementing communication practices that ensure protection is not just a technical necessity but an imperative to guard against the exploitation of private and sensitive information.

System Configuration and Deployment

Effective system configuration and deployment are pivotal for sustaining a robust security posture. Servers, network devices, and other components must all be carefully protected against vulnerabilities. British Telecom (BT) is a case in point: grappling with access management across disparate systems, it found that its reliance on SSH for secure connections exposed the pitfalls of weak credential management. The telecom giant is now moving toward a passwordless model that aims to strengthen protection and improve the user experience, despite the inherent difficulty of reconciling the two.

Three domains sit at the heart of modern protection: privileged access management (PAM), identity access management (IAM), and credential/secrets management. BT's approach aligns with the proactive stance of industry leaders like Intel, which promotes embedding intelligence across the technology stack to turn data into a catalyst for business and societal progress.

A commonly cited set of six best practices for safe application deployment aims to close security gaps without hindering deployment agility. It includes rigorous control of the code that triggers automated deployments, ensuring that only trusted code, rather than unreviewed forks or branches, reaches production environments.
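Such a control can be expressed as a small policy check that a pipeline runs before any production deployment. The sketch below is hypothetical throughout: the repository URL, branch names, review threshold, and `DeployRequest` fields are assumptions, and real CI/CD systems usually enforce the same rules through branch protection and environment approval settings.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical policy values; in practice these live in CI/CD configuration.
TRUSTED_REPOS: FrozenSet[str] = frozenset({"github.com/example-org/payments-api"})
RELEASE_BRANCHES: FrozenSet[str] = frozenset({"main"})


@dataclass(frozen=True)
class DeployRequest:
    repo: str
    branch: str
    approved_reviews: int
    checks_passed: bool


def may_deploy_to_production(req: DeployRequest, min_reviews: int = 2) -> bool:
    """Allow only reviewed, tested code from the canonical repo's release branch."""
    return (
        req.repo in TRUSTED_REPOS             # rejects forks
        and req.branch in RELEASE_BRANCHES    # rejects feature branches
        and req.approved_reviews >= min_reviews
        and req.checks_passed
    )


print(may_deploy_to_production(
    DeployRequest("github.com/attacker/payments-api", "main", 2, True)))  # False
```

Keeping the gate declarative and deny-by-default makes it easy to audit and hard to bypass by accident.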

It is also crucial to honor the cloud shared responsibility model: security is a joint effort between the cloud service provider and the customer under every service model, whether IaaS, PaaS, or SaaS, although the exact division of duties differs between them. Understanding where that line falls is essential for accountability and consistent security standards.

The principles of secure design are equally important. Manufacturers must take ownership of safety outcomes, champion radical transparency, and foster leadership that prioritizes security in product development. This ethos is not confined to traditional software but extends to AI systems and models too, ensuring a foundational resilience against cyber threats.

To improve protection at scale, the industry must take a proactive approach, analyzing defect trends and identifying patterns to prevent recurring vulnerabilities. Shifting from treating individual symptoms to preventing whole classes of defects can substantially raise product quality and security, much as similar strategies have produced comprehensive improvements in sectors like aviation.

Conclusion

In conclusion, understanding and addressing the critical security risks to web applications is paramount for developers in today's digital landscape. The OWASP Top 10 list serves as an expert consensus on the most pressing vulnerabilities that can lead to unauthorized access, data breaches, and system compromise. From injection attacks to broken access control, these risks must be mitigated through robust security measures.

Adhering to OWASP guidelines is essential, especially in industries like banking, where stringent security measures are necessary to protect sensitive user data. With up to 90% of vulnerabilities lying at the application layer, developers must prioritize security from the inception of their code. Implementing measures such as input validation, parameterized queries, and effective session management can significantly enhance security.

It is crucial for both users and companies to be vigilant about potential data leaks and employ best practices to safeguard against attacks. The OWASP Top 10 serves as a guidepost for building safer digital spaces, ensuring maximum efficiency and productivity in web application development. By implementing secure coding practices, organizations can create reliable, robust, and trustworthy software products.

Efficient and results-driven solutions like Kodezi can assist developers in achieving maximum efficiency and productivity while maintaining a strong focus on security. By incorporating secure coding practices, developers can strengthen the integrity, confidentiality, and availability of web applications, protecting user data and mitigating the risks posed by cyber threats. With the increasing sophistication of attacks, it is imperative to prioritize security and leverage tools and resources that enable developers to build secure applications.

Try Kodezi today and boost your productivity while ensuring the security of your web applications!

Frequently Asked Questions

What is the OWASP Top 10?

The OWASP Top 10 is a widely recognized list that outlines the most critical web application vulnerabilities. It serves as a guide for developers to understand and mitigate risks associated with web applications.

Why is understanding web application vulnerabilities important for developers?

Understanding these vulnerabilities is crucial for developers because cyber threats are becoming increasingly sophisticated. Recognizing and addressing these risks can prevent significant data breaches and unauthorized access.

Can you give examples of common web application vulnerabilities?

Yes, common vulnerabilities include injection attacks (e.g., SQL injection), which can lead to unauthorized data exposure, and Broken Access Control (BAC), which allows attackers to bypass security measures.

What are some key practices to prevent injection attacks?

To prevent injection attacks, developers should implement input validation to ensure only properly formatted data is accepted, use parameterized queries to differentiate between code and user input, and employ an allowlist approach to define acceptable inputs.
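The three techniques in this answer work together, as the following sketch shows using Python's built-in `sqlite3` module. The table layout, username rule, and sortable columns are hypothetical. The `?` placeholder keeps user data out of the SQL text, and because identifiers such as column names cannot be bound as parameters, they are checked against an allowlist instead.

```python
import re
import sqlite3
from typing import List, Tuple

# Allowlists (assumed rules for this example).
USERNAME_PATTERN = re.compile(r"[A-Za-z0-9_]{3,32}")
SORT_COLUMNS = {"name", "created_at"}


def find_users(conn: sqlite3.Connection, username: str,
               sort_by: str = "name") -> List[Tuple[int, str]]:
    # 1. Input validation: reject anything that is not a well-formed username.
    if not USERNAME_PATTERN.fullmatch(username):
        raise ValueError("invalid username")
    # 2. Column names cannot be parameterized, so check them against an allowlist.
    if sort_by not in SORT_COLUMNS:
        raise ValueError("invalid sort column")
    # 3. Parameterized query: username is sent as data, never as SQL code.
    sql = f"SELECT id, name FROM users WHERE name = ? ORDER BY {sort_by}"
    return conn.execute(sql, (username,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, created_at TEXT)")
conn.executemany("INSERT INTO users (name, created_at) VALUES (?, ?)",
                 [("alice", "2024-01-01"), ("bob", "2024-02-01")])
print(find_users(conn, "alice"))  # [(1, 'alice')]
```

Even without the validation step, binding `"x' OR '1'='1"` through the placeholder simply searches for a user with that literal name and matches nothing.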

What role does access control play in web application security?

Access control is essential for preventing unauthorized actions by authenticated users. It ensures that individuals have access only to the information necessary for their duties, minimizing the risk of privilege escalation and unauthorized access.

What are the three key categories of access control mechanisms?

The three categories are: Authentication (verifying an individual's identity), Authorization (ensuring that the authenticated user has the correct permissions), and Auditing (tracking user actions to maintain a record of activities).

How important is cryptography in web application security?

Cryptography is vital as it protects sensitive information by converting plaintext into ciphertext. Proper implementation of encryption and hashing ensures data confidentiality and integrity, preventing unauthorized access.

What is the principle of least privilege?

The principle of least privilege dictates that users or systems should have the minimum level of access necessary to perform their functions. This approach reduces the risk of misuse and helps contain potential breaches.

What is a Software Bill of Materials (SBOM)?

An SBOM is a comprehensive inventory of all components in a software product. It helps organizations understand their software dependencies and manage risks associated with third-party components.

What is multi-factor authentication (MFA) and why is it important?

MFA requires users to present two or more independent proofs of identity, such as a password plus a one-time code, before accessing an application. It significantly reduces the likelihood of unauthorized access, especially if passwords are compromised.

How can organizations protect against misconfigurations?

Organizations can protect against misconfigurations by implementing secure coding practices, conducting regular security training, and using tools to analyze source code for potential vulnerabilities.

What is dependency freshness and why does it matter?

Dependency freshness measures how up to date a project's software components are relative to their latest available versions. Evaluating it regularly is crucial, because outdated dependencies may contain known vulnerabilities that attackers can exploit.

How can organizations manage logging and monitoring effectively?

Effective logging and monitoring involve determining what information to log to balance between too much and too little data, utilizing tools that streamline the logging process, and establishing documented incident response procedures for quick threat identification.

What is the significance of session management in web applications?

Strong session management practices are critical to prevent session hijacking and ensure user privacy. This includes generating secure session IDs and managing sessions responsibly throughout the user’s interaction with the application.

How do organizations ensure secure coding practices?

Organizations can ensure secure coding by incorporating security into all phases of the software development lifecycle, providing training and resources to developers on best practices, and utilizing frameworks and guidelines, such as those from OWASP.