Overview

The article "Top 7 Strategies to Overcome Token Limit Challenges" focuses on effective methods for managing token limits in AI interactions to enhance productivity and output quality. It presents strategies such as truncation, chunking, and optimizing prompts, supported by examples and case studies, demonstrating that a structured and collaborative approach can significantly mitigate the challenges posed by token limits in language models.

Introduction

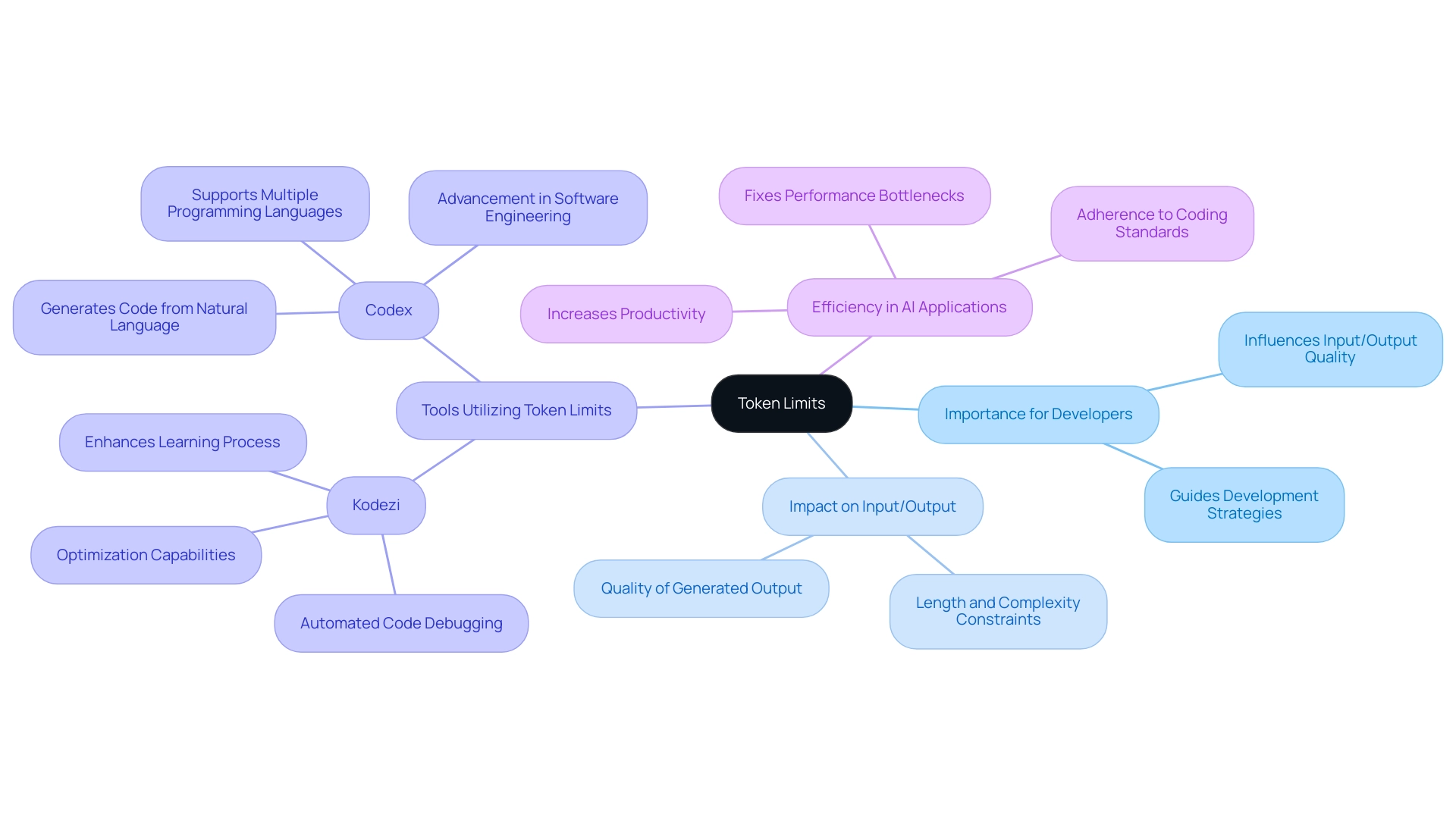

In the realm of artificial intelligence, understanding the intricacies of token limits is paramount for developers striving to harness the full potential of language models. Token limits dictate the boundaries within which models can operate, influencing everything from the complexity of inputs to the quality of outputs.

As organizations seek to optimize their AI interactions, strategies for managing these limits become essential. With tools like Kodezi leading the charge in automated code debugging and optimization, teams can enhance their productivity and streamline their workflows.

This article delves into effective techniques for navigating token limit challenges, monitoring usage, and adapting input formats, all while highlighting the transformative impact of collaborative efforts and continuous learning in maximizing efficiency and productivity in AI applications.

Understanding Token Limits: What They Are and Why They Matter

A token limit is the maximum number of tokens (units of text such as words, word fragments, punctuation, and special characters) that a model can handle in a single interaction. Understanding the token limit is crucial for developers, as it dictates the length and complexity of input, directly influencing the quality of generated output. For example, PaLM, with its 540 billion parameters, illustrates the scale of modern language models and the importance of managing token limits efficiently.
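To make the idea concrete, here is a minimal sketch of checking a prompt against a limit. It assumes a crude approximation (one token per word or punctuation mark); real model tokenizers split text into subword pieces, so actual counts will differ. The names `approx_token_count` and `fits_limit` are illustrative and not part of any library.

```python
import re

# Rough stand-in for a real tokenizer: one token per word or punctuation mark.
# Real tokenizers work on subword pieces, so treat these counts as estimates.
TOKEN_PATTERN = re.compile(r"\w+|[^\w\s]")


def approx_token_count(text: str) -> int:
    """Return an approximate token count for `text`."""
    return len(TOKEN_PATTERN.findall(text))


def fits_limit(text: str, token_limit: int) -> bool:
    """Check whether `text` stays within a (hypothetical) token limit."""
    return approx_token_count(text) <= token_limit


prompt = "Explain token limits, briefly."
print(approx_token_count(prompt))         # 6
print(fits_limit(prompt, token_limit=8))  # True
```

For production use, the tokenizer that ships with your model is the reliable source of counts; an estimate like this is only useful for quick budgeting.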

Mastering these details can improve how you work with language models, ensuring precise and relevant outputs. In this context, Kodezi's automated code debugging and optimization capabilities shine. It not only allows developers to swiftly identify and fix issues but also provides detailed explanations and insights into the nature of the problems and the resolutions applied, enhancing the learning process.

Tools like Codex demonstrate how a nuanced understanding of token processing enhances code generation from natural language, showcasing a significant leap in software engineering. Furthermore, Kodezi CLI empowers teams to auto-heal codebases, increasing productivity and eliminating wasted time on pull requests. By fixing performance bottlenecks and addressing security issues through precise recommendations, Kodezi ensures adherence to best practices and coding standards.

Consequently, understanding the token limit is not just a technical necessity; it's essential for achieving maximum efficiency and productivity in AI applications, especially considering the variety of models and configurations from various AI companies.

Effective Strategies to Navigate Token Limit Challenges

- Truncation: Streamlining data by eliminating unnecessary details can significantly enhance token efficiency. Concentrate on the core message to convey your intent succinctly, ensuring that every word counts. As 't' approaches infinity, the solutions indicate that 'a' approaches -log(t) - log(1-alpha)(4-2*alpha) and 'b' approaches log(alpha / (1-alpha)), which highlights the importance of concise data for effective processing.

- Chunking: Divide larger inputs into smaller, manageable segments. This method enables the system to process each part effectively, safeguarding against the loss of critical information.

- Optimizing Prompts: Hone your prompts to be direct and specific. By removing ambiguity, you reduce token usage while still communicating essential information clearly and efficiently.

- Using Contextual Clues: Present context in a condensed manner. Instead of lengthy explanations, employ keywords or brief phrases that encapsulate the essence of your request, enabling the model to generate relevant and precise outputs.

- Prioritizing Key Information: Identify the most essential components of your data. Focus on including only the critical details that maximize the effectiveness of your prompts, ensuring clarity and purpose.

- Iterative Refinement: Experiment with different data formats and structures. Continuously refining your approach will help you uncover the most effective ways to communicate with the model, driving better results.

- Utilizing External Resources: Make use of specialized tools and libraries created to handle token limits, such as those that can automatically shorten or summarize data before submission. Utilizing these tools can streamline the process and significantly boost overall efficiency.

- Case Study: The Prometheus research paper illustrates the application of these strategies through an all-in-one platform for evaluating and testing LLM applications on the cloud, integrated with DeepEval. This platform enhances LLM evaluation capabilities, allowing for comprehensive testing and security assessments of LLM applications.

- Expert Insight: As Kristin Vongthongsri states, 'In this article, I'll share what you should definitely look for in your next LLM Observability solution.' This perspective emphasizes the importance of effective input techniques in optimizing performance.
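The truncation and chunking strategies from the list above can be sketched in a few lines. This is an illustrative example, not a definitive implementation: it assumes the text has already been split into a list of tokens (here, simply by whitespace), and the `overlap` parameter is an added assumption that lets neighboring chunks share a little context.

```python
def truncate_tokens(tokens: list[str], max_tokens: int) -> list[str]:
    """Truncation: keep only the first `max_tokens` tokens."""
    return tokens[:max_tokens]


def chunk_tokens(tokens: list[str], chunk_size: int, overlap: int = 0) -> list[list[str]]:
    """Chunking: split tokens into pieces of at most `chunk_size` tokens.

    Each chunk repeats the last `overlap` tokens of the previous chunk so
    that context is not lost at the boundaries.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in the range [0, chunk_size)")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # the final chunk already reaches the end of the input
    return chunks


# Whitespace splitting stands in for a real tokenizer here.
tokens = "one two three four five six seven".split()
print(truncate_tokens(tokens, 4))
print(chunk_tokens(tokens, chunk_size=3, overlap=1))
```

Truncation is the cheaper option but discards everything past the cutoff, while chunking keeps all of the input at the cost of multiple model calls.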

Monitoring Token Usage for Better Management

Utilizing real-time monitoring tools to track token usage can dramatically improve how you manage interactions with language models. For instance, New Relic charges $149 for every 500k pageviews, highlighting the cost implications of effective monitoring tools. By closely monitoring token consumption, you can fine-tune your strategies to optimize performance.

Establishing efficient logging systems provides valuable insights into consumption patterns, allowing you to identify inefficiencies and adjust proactively before reaching the token limit. A practical example is the Amazon SNS case study, where users receive immediate notifications when their usage exceeds defined thresholds, facilitating quick responses. Moreover, incorporating usage metrics into your development dashboard offers a comprehensive overview of your interaction efficiency over time.

This practice not only aids in identifying trends but also enhances your ability to respond swiftly to any consumption spikes, ensuring that your AI applications run smoothly and effectively. As Shantesh states, 'For more information: please go through the elastic search link,' highlighting the significance of monitoring consumption.
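As a rough illustration of threshold-based monitoring, the sketch below logs the token count of each request and flags requests that approach or exceed the limit. The class name `TokenUsageMonitor`, the 80% warning ratio, and the status strings are all assumptions made for this example; in practice the statuses would feed whatever alerting or dashboard tool you already use.

```python
from dataclasses import dataclass, field


@dataclass
class TokenUsageMonitor:
    """Hypothetical helper that logs per-request token usage."""

    token_limit: int
    warn_ratio: float = 0.8  # warn once a request uses 80% of the limit
    history: list[int] = field(default_factory=list)

    def record(self, tokens_used: int) -> str:
        """Log one request and classify it against the limit."""
        self.history.append(tokens_used)
        if tokens_used > self.token_limit:
            return "over_limit"
        if tokens_used >= self.warn_ratio * self.token_limit:
            return "warning"
        return "ok"

    def average_usage(self) -> float:
        """Average tokens per request, useful for spotting trends."""
        return sum(self.history) / len(self.history) if self.history else 0.0


monitor = TokenUsageMonitor(token_limit=1000)
for used in (500, 850, 1200):
    print(used, monitor.record(used))
print(monitor.average_usage())
```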

Utilizing Summarization Techniques

Employing effective summarization methods can result in a significant decrease in resource usage, allowing organizations to enhance communication without compromising quality. However, it is essential to balance optimization with user experience considerations, as highlighted in the case study on optimization challenges. By leveraging algorithms that distill information down to its core components, redundant content is systematically eliminated, but this must be done carefully to preserve context.

Recent advancements in specialized natural language processing (NLP) libraries designed for text summarization have made this process more efficient. For instance, the library introduced by Deutsch and Roth (2020) focuses on developing summarization metrics, albeit only covering a fraction of the evaluation metrics available. Utilizing techniques like subword segmentation, intelligent division, and truncation, as highlighted by Ajit Dash of Microsoft, organizations can reduce token counts, resulting in significant cost reductions in infrastructure and processing time.

Moreover, utilizing a zero-shot model for evaluation can further enhance the effectiveness of these summarization techniques. Condensing lengthy texts into brief bullet points or short paragraphs not only saves space but also improves clarity, ensuring that the essence of the message is maintained while adhering to the token limit. Nevertheless, it is essential to acknowledge the trade-offs between usage and accuracy, as over-summarization may lead to loss of critical information.
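To show the trade-off in miniature, here is a simple extractive summarizer that keeps the highest-scoring sentences that fit a word budget. It is a sketch under stated assumptions: sentences are scored by average word frequency (a basic heuristic, not the method of any library mentioned above), and word count stands in for token count.

```python
import re
from collections import Counter


def summarize(text: str, max_words: int) -> str:
    """Keep the highest-scoring sentences that fit within `max_words`."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(re.findall(r"\w+", text.lower()))

    def score(sentence: str) -> float:
        # Average frequency of the sentence's words across the whole text.
        words = re.findall(r"\w+", sentence.lower())
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)

    chosen, used = [], 0
    for i in ranked:
        cost = len(sentences[i].split())
        if used + cost <= max_words:
            chosen.append(i)
            used += cost

    # Restore the original sentence order so the summary reads naturally.
    return " ".join(sentences[i] for i in sorted(chosen))


text = "Token limits cap prompt size. Token budgets need monitoring. Cats sleep."
print(summarize(text, max_words=5))
```

A tight budget drops whole sentences, which is exactly where critical information can be lost, so it is worth reviewing what a summary leaves out before relying on it.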

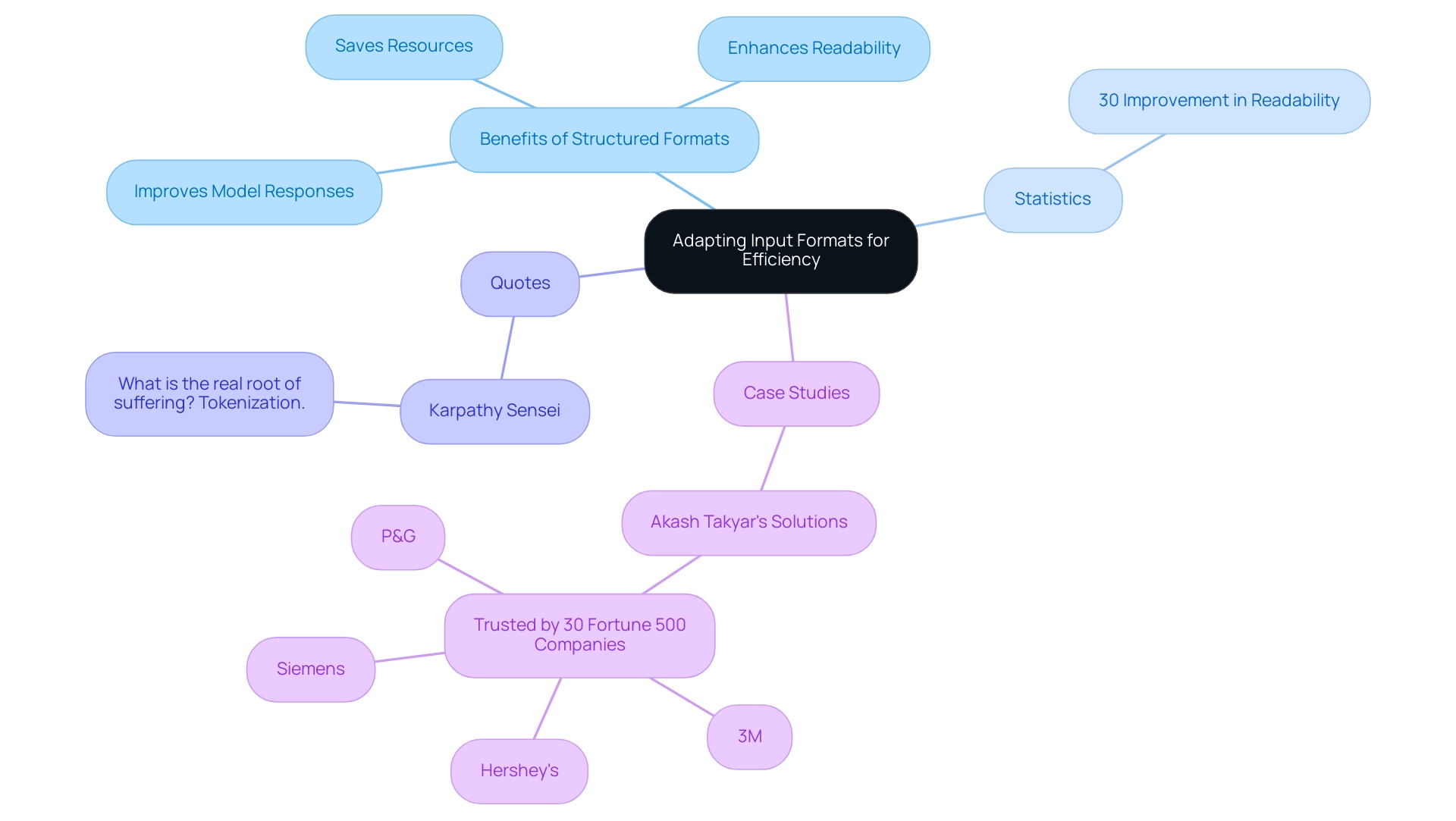

Adapting Input Formats for Efficiency

Adopting structured input formats can significantly enhance efficiency in AI interactions. Rather than depending on extensive narratives, using brief formats like tables or lists can effectively communicate information while saving resources. This method not only decreases resource consumption but also enhances readability, facilitating quicker and more accurate model responses.
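The difference is easy to see side by side. The sketch below renders the same facts as a narrative sentence and as compact key-value lines, then compares their sizes. The record, its field names, and the helper `to_key_value_lines` are made up for illustration, and whitespace-separated word count is only a crude proxy for tokens.

```python
def to_key_value_lines(record: dict[str, object]) -> str:
    """Render a record as compact `key: value` lines."""
    return "\n".join(f"{key}: {value}" for key, value in record.items())


# Hypothetical record used only for this comparison.
record = {"service": "billing-api", "error_rate": "4%", "region": "us-east"}

narrative = (
    "The service named billing-api is currently experiencing an error rate "
    "of 4 percent, and it is deployed in the us-east region."
)
structured = to_key_value_lines(record)

print(structured)
print(len(narrative.split()), "words in the narrative version")
print(len(structured.split()), "words in the structured version")
```

Actual savings depend on the tokenizer, since punctuation and underscores can each count as separate tokens, so measure with your model's tokenizer before standardizing on a format.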

Clear headings and bullet points are essential tools in this approach, as they help prioritize crucial information, ensuring that key elements are highlighted without surpassing limits. As Karpathy Sensei aptly notes, 'What is the real root of suffering? Tokenization.'

This emphasizes the significance of optimizing format types to mitigate challenges associated with the token limit. Supporting this, the case study titled 'Impact of Prompt Complexity on LLM Performance' reveals that understanding prompt complexity is vital for interpreting outputs from language models. It highlights the potential for non-standard results when standard prompting approaches are applied, suggesting that structured formats can aid in minimizing such issues.

Furthermore, statistics indicate that structured formats can improve readability by up to 30%, showcasing their effectiveness in enhancing communication with AI systems. In addition, Akash Takyar's technology solutions, relied upon by more than 30 Fortune 500 firms, illustrate the practical uses of organized formats in real-world situations. By experimenting with these formats, developers can optimize their interactions with AI systems, resulting in improved performance and efficiency.

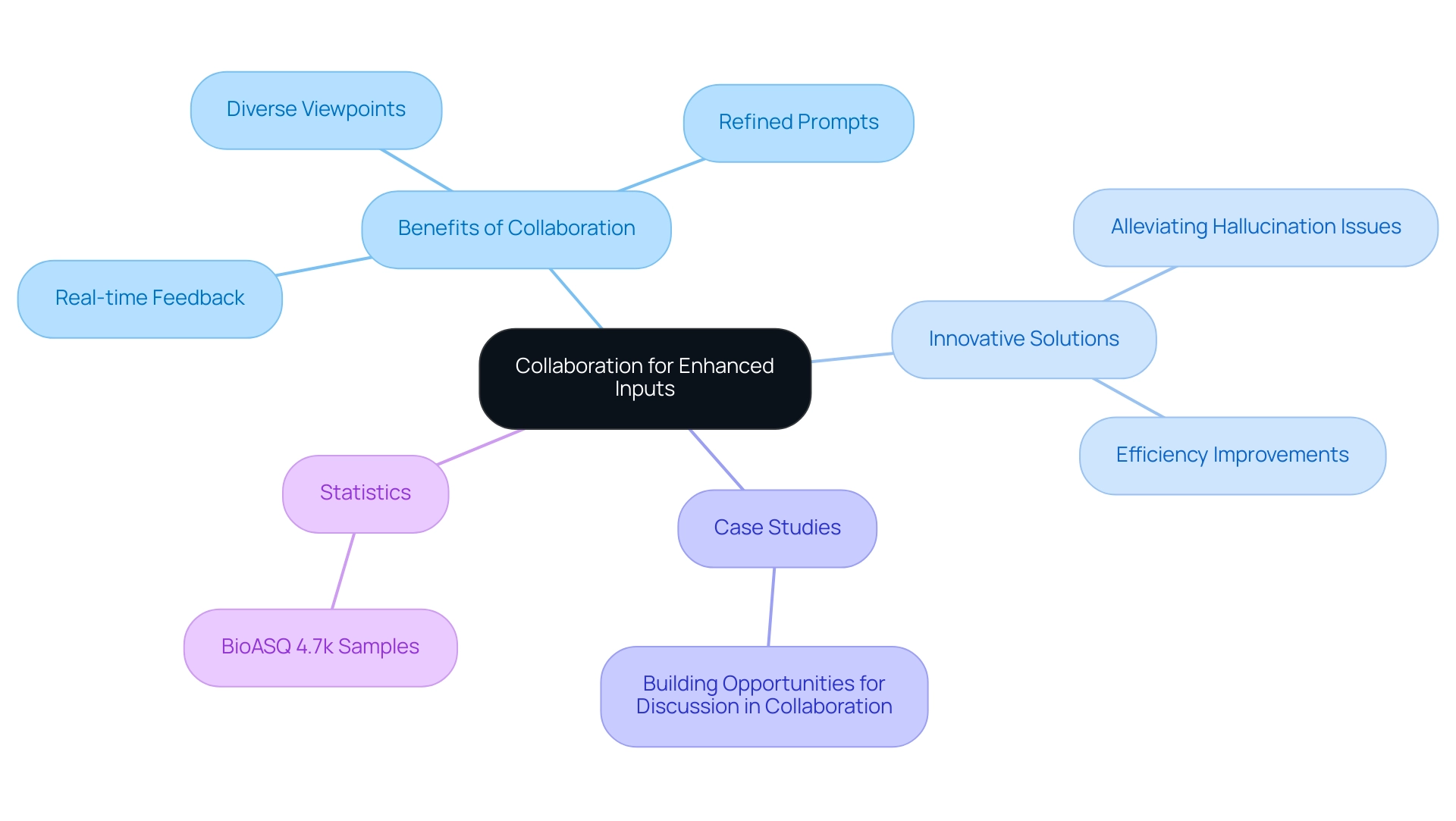

Collaborating with Others for Enhanced Inputs

Collaborating with colleagues or peers significantly enhances the effectiveness of inputs for language models. By sharing insights and brainstorming together, teams can create refined prompts that enhance clarity while reducing usage. This collaborative method utilizes diverse viewpoints, often leading to innovative solutions for managing the token limit.

For instance, recent developments indicate that offloading difficult tokens can alleviate hallucination issues of the base model, leading to better generalization. Moreover, employing collaborative tools or platforms facilitates real-time feedback and adjustments to input strategies, thereby enhancing both efficiency and effectiveness. The significance of nurturing a cooperative atmosphere aligns with the case study named 'Building Opportunities for Discussion in Collaboration,' which emphasizes the need to equip students with tools and strategies for conflict resolution to encourage meaningful discussions.

Furthermore, D. J. Wu from information systems claims that collaboration is essential to improving prompt formulations for optimal performance. Statistics also show that BioASQ contains 4.7k samples for medical question answering, underscoring the effectiveness of collaborative input strategies in specific contexts.

Continuous Learning and Adaptation

Staying current in the rapidly changing field of language technology is crucial for managing token limits efficiently. Participating in ongoing education via workshops, webinars, and online courses focused on language models significantly improves your understanding and practical skills. Recent statistics indicate that participation in targeted workshops can lead to substantial gains in user engagement and understanding of these technologies.

For instance, HatchWorks reports a 30-50% productivity boost for clients using Generative-Driven Development, highlighting the benefits of continuous learning and engagement. Additionally, Amazon's recent success, with a 17% increase in AWS revenue attributed to AI integration, serves as a real-world example of how staying updated can drive productivity and revenue. It's also beneficial to follow industry blogs and forums, where best practices and optimization techniques are frequently shared.

As highlighted by HatchWorks, "Generative AI has fundamentally reshaped not just the development landscape, but also job functions and productivity across every business sector."

In this context, utilizing Kodezi CLI can be a game-changer; its Auto Heal feature allows teams to quickly resolve issues and enhance coding efficiency, enabling you to Auto Heal codebases in seconds and never waste time on a pull request again. This continuous involvement not only enables users to innovate quickly but also prepares them to address challenges related to the token limit with the most effective strategies available.

Gabriel Bejarano asserts that AI will not replace developers in the near future due to the creativity involved in software development, reinforcing the ongoing need for human expertise alongside technological advancements. By fostering a proactive learning mindset and leveraging tools like Kodezi CLI, professionals can navigate the complexities of managing the token limit while maximizing the potential of language models. We encourage you to try Kodezi CLI today and experience how it can enhance your programming productivity!

Conclusion

Understanding and effectively managing token limits is crucial for developers looking to maximize the potential of language models. By employing strategies such as:

- Truncation

- Chunking

- Optimizing prompts

teams can streamline their inputs and enhance the quality of outputs. Monitoring token usage in real time allows for proactive adjustments, ensuring that interactions with AI remain efficient and effective.

Adopting summarization techniques and structured input formats further aids in conserving tokens while maintaining clarity and precision. Collaborative efforts among team members can lead to more refined inputs, tapping into diverse perspectives to tackle challenges associated with token limits. Continuous learning and adaptation are vital in this fast-evolving field, empowering developers to stay ahead of the curve and utilize the latest advancements in AI technology.

Ultimately, leveraging tools like Kodezi can transform how organizations approach coding and AI interactions. By integrating these practices and technologies, teams can significantly enhance their productivity and streamline workflows, ensuring they remain at the forefront of innovation in the realm of artificial intelligence. Embracing these insights not only leads to immediate benefits but also positions organizations for long-term success in harnessing the power of language models.

Frequently Asked Questions

What is a token limit?

A token limit is the maximum number of tokens (units of text such as words, word fragments, punctuation, and special characters) that a model can handle in a single interaction. It is crucial for developers as it influences the length and complexity of input, which in turn affects the quality of generated output.

Why is understanding the token limit important for developers?

Understanding the token limit is essential for developers because it dictates how they structure input, directly impacting the efficiency and productivity of AI applications. Proper management of token limits can lead to better output quality.

How can truncation improve token efficiency?

Truncation improves token efficiency by streamlining data through the elimination of unnecessary details, allowing developers to focus on the core message and convey intent succinctly.

What is the chunking method?

Chunking is the process of dividing larger inputs into smaller, manageable segments. This approach enables the system to process each part effectively, reducing the risk of losing critical information.

How can optimizing prompts help with token limits?

Optimizing prompts involves making them direct and specific, which reduces token usage while still clearly communicating essential information.

What are contextual clues, and how do they help with token limits?

Contextual clues are condensed presentations of context, such as keywords or brief phrases, that encapsulate the essence of a request. They help the model generate relevant and precise outputs without lengthy explanations.

Why is it important to prioritize key information?

Prioritizing key information ensures that only the most essential components are included in prompts, maximizing effectiveness and clarity while adhering to token limits.
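One way to picture prioritization is as packing a fixed budget: rank the pieces of context by importance and include them until the budget runs out. The sketch below assumes each item carries a hand-assigned priority (higher means more important) and uses word count as a stand-in for tokens; the function name `pack_by_priority` and the sample items are hypothetical.

```python
def pack_by_priority(items: list[tuple[int, str]], max_words: int) -> list[str]:
    """Select the most important items that fit within `max_words`.

    `items` holds (priority, text) pairs; higher priority is packed first.
    Selected items are returned in their original order.
    """
    order = sorted(range(len(items)), key=lambda i: items[i][0], reverse=True)

    kept, used = [], 0
    for i in order:
        cost = len(items[i][1].split())
        if used + cost <= max_words:
            kept.append(i)
            used += cost

    return [items[i][1] for i in sorted(kept)]


context = [
    (1, "Background on the team history"),
    (3, "Fix the login bug"),
    (2, "Use Python 3.11"),
]
print(pack_by_priority(context, max_words=7))
# ['Fix the login bug', 'Use Python 3.11']
```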

What does iterative refinement involve?

Iterative refinement involves experimenting with different data formats and structures to continuously improve communication with the model, leading to better results.

How can external resources assist with token limits?

External resources, such as specialized tools and libraries, can help manage token limits by automatically shortening or summarizing data before submission, thereby boosting overall efficiency.

What is a case study mentioned regarding token limit strategies?

The Prometheus research paper is mentioned as a case study that illustrates the application of strategies for managing token limits through an all-in-one platform for evaluating and testing LLM applications on the cloud, integrated with DeepEval.

What expert insight is provided in the article?

Kristin Vongthongsri emphasizes the importance of effective input techniques in optimizing performance, highlighting what to look for in an LLM Observability solution.