Overview

Developers often face significant challenges when training a Large Language Model (LLM) on a codebase. Understanding foundational concepts, gathering the right tools, and following structured training procedures are essential. Kodezi addresses these challenges by emphasizing the importance of:

- Data preparation

- Model fine-tuning

- Overcoming common obstacles

A well-organized approach not only enhances coding efficiency but also improves model performance. By utilizing Kodezi, developers can streamline their processes and achieve better results.

Introduction

In the rapidly evolving landscape of artificial intelligence, developers face significant challenges when it comes to training Large Language Models (LLMs) on codebases. These sophisticated AI systems possess the ability to understand and generate human-like text, offering tremendous potential for enhancing software development processes.

However, how can organizations effectively harness these capabilities? The journey requires:

- A solid grasp of foundational concepts

- The right tools

- A systematic approach to training

From preparing datasets to troubleshooting common issues, this guide delves into essential steps and strategies that empower organizations to optimize their LLM training efforts. Ultimately, this can drive innovation and efficiency in their coding endeavors.

Understand the Basics of LLMs and Codebases

To train an LLM on a codebase effectively, it is crucial to understand several foundational concepts. Developers often face significant challenges when managing complex codebases. How can these challenges be addressed? Enter Kodezi, a platform designed to enhance coding efficiency.

Large Language Models are sophisticated AI systems capable of comprehending and generating human-like text. They are trained on extensive datasets, including code, enabling them to perform tasks such as code generation, debugging, and optimization. Understanding codebases is equally essential: a codebase comprises the source code necessary for building a specific software application, and its structure, programming languages, and frameworks directly influence what the model learns. Furthermore, automated code debugging plays a vital role by instantly identifying and fixing issues, providing detailed explanations and insights into what went wrong, and ensuring compliance with security best practices.

In addition, LLMs require data to be formatted appropriately. It is essential to know how to present code snippets, comments, and documentation in a way that aids comprehension and learning. Similarly, understanding the architecture of the chosen LLM is important, as different models have unique requirements and capabilities, and this understanding determines how you should set up your codebase for optimal results. By mastering these concepts, you will be well-prepared to address the challenges involved in training an LLM on a codebase. The benefits of using Kodezi are clear: improved productivity and enhanced code quality. Explore the tools available on the platform to transform your software development processes.
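As one illustration of the data formatting described above, each source file can be wrapped in a small record that keeps its path and language next to its text. This is a minimal sketch, not a required format: the JSON Lines layout, the field names `path`, `language`, and `text`, and the extension-to-language map are all assumptions made for the example.

```python
import json
from pathlib import PurePosixPath

# Hypothetical mapping from file extension to a language label.
LANGUAGE_BY_EXTENSION = {".py": "python", ".js": "javascript", ".md": "markdown"}


def to_training_record(path: str, source: str) -> dict:
    """Wrap one source file in a record that keeps its path and language."""
    extension = PurePosixPath(path).suffix
    language = LANGUAGE_BY_EXTENSION.get(extension, "unknown")
    # Normalize line endings and strip trailing whitespace so that formatting
    # noise does not end up in the training text.
    lines = [line.rstrip() for line in source.replace("\r\n", "\n").split("\n")]
    text = "\n".join(lines).strip("\n") + "\n"
    return {"path": path, "language": language, "text": text}


def to_jsonl(records: list[dict]) -> str:
    """Serialize records as JSON Lines, one record per line."""
    return "\n".join(json.dumps(record) for record in records)


example = to_training_record(
    "utils/math.py",
    'def add(a, b):\r\n    """Return the sum of a and b."""   \r\n    return a + b\r\n',
)
print(to_jsonl([example]))
```

Keeping docstrings and comments inside `text` preserves the natural-language context that sits next to the code, while the metadata fields make it easy to filter or rebalance the dataset by language later.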

Gather Required Tools and Resources

Assembling the right tools and resources is crucial to training an LLM on a codebase effectively. Consider the following essential components:

- Programming Language: Python remains the leading choice for LLM development, thanks to its extensive ecosystem of libraries and strong community support.

- Libraries and Frameworks: Key libraries include TensorFlow and PyTorch for model development, alongside Hugging Face's Transformers library, which offers pre-trained models and tools for fine-tuning.

- Data Preparation Tools: Utilize tools like Pandas for effective data manipulation and NumPy for numerical tasks. This ensures your programming environment is well-prepared for training.

- Hardware Requirements: Access to a powerful GPU or TPU is essential, as LLM development demands significant computational resources. Consider leveraging cloud platforms such as Google Cloud or AWS for scalable solutions.

- Version Control System: Use Git to manage changes in your project and keep track of the training process.

By gathering these essential tools and resources, you will establish a strong foundation for successful LLM training. Are you ready to enhance your LLM training experience?
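Before starting, it can help to confirm that the libraries listed above are actually installed. The following is a small sketch using only the standard library; the import names in `REQUIRED_MODULES` are assumptions about how those libraries are imported, and the list is not exhaustive.

```python
import importlib.util

# Import names for the libraries mentioned above (assumed, not exhaustive).
REQUIRED_MODULES = ["torch", "tensorflow", "transformers", "pandas", "numpy"]


def check_environment(modules: list[str]) -> dict[str, bool]:
    """Report which top-level modules can be found, without importing them."""
    return {name: importlib.util.find_spec(name) is not None for name in modules}


status = check_environment(REQUIRED_MODULES)
for name, available in status.items():
    print(f"{name:<14}{'found' if available else 'missing'}")
```

You typically need only one of PyTorch or TensorFlow, so a single "missing" entry for those two is not necessarily a problem.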

Follow Step-by-Step Training Procedures

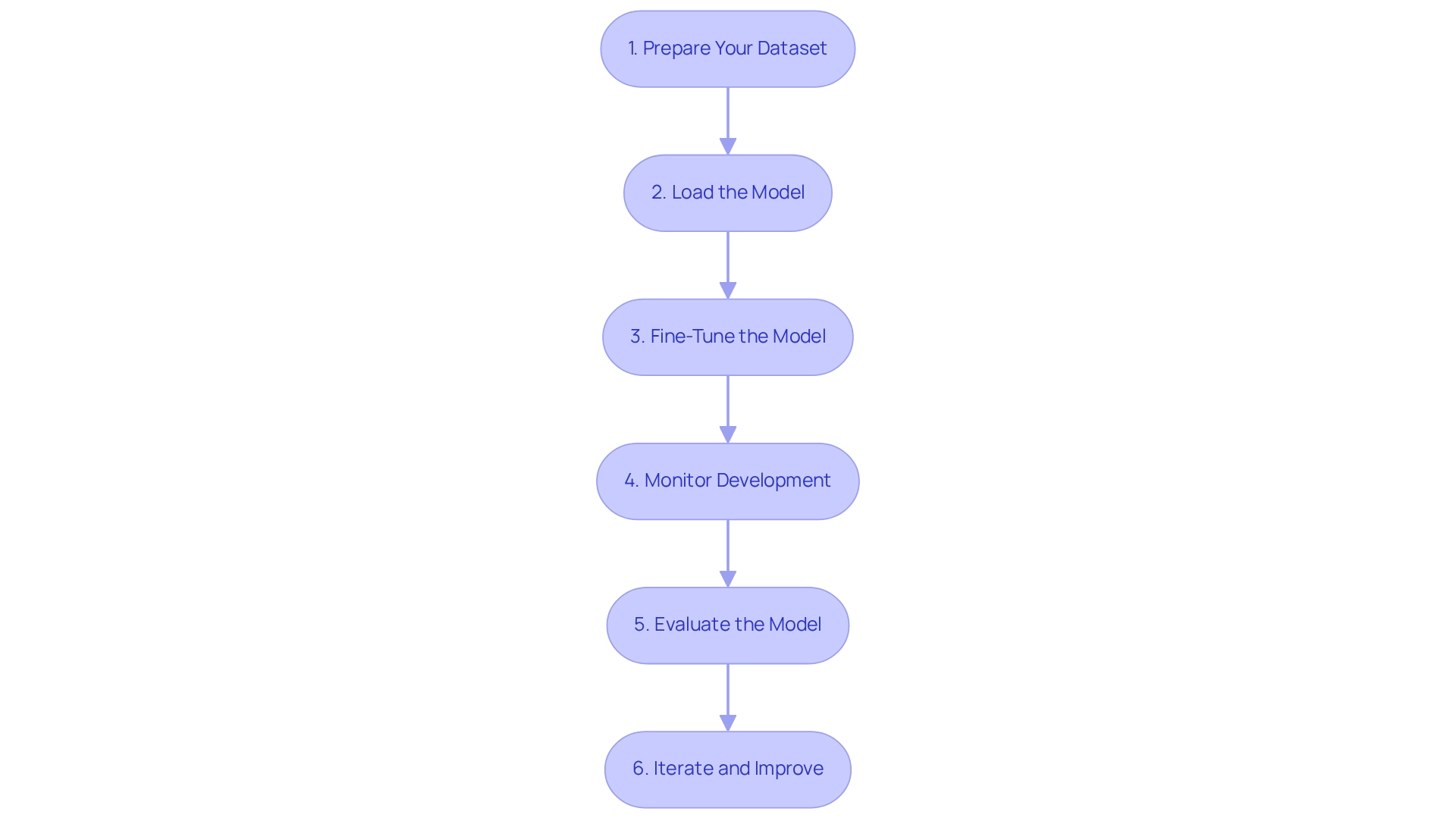

Coding challenges often hinder developers' productivity and efficiency. To train an LLM on a codebase effectively, follow these steps:

- Prepare Your Dataset: Begin by cleaning and preprocessing your codebase. Remove unnecessary files and irrelevant comments, and make sure the remaining code is well-structured and consistently formatted. Split the dataset into training, validation, and test sets to facilitate effective learning.

- Load the Model: Use the Transformers library to load a pre-trained LLM. This considerably decreases the time and computational resources needed compared to training a model from scratch.

- Fine-Tune the Model: Configure the training hyperparameters, including learning rate, batch size, and number of epochs. Use your prepared dataset to fine-tune the model, making adjustments based on validation performance to improve outcomes.

- Monitor Training: Use tools like TensorBoard to visualize performance metrics. Check loss and accuracy regularly to confirm that the model is learning and making progress.

- Evaluate the Model: Once training is complete, assess the model's performance on the test set. Examine how well it generates, debugs, and optimizes code of the kind found in your dataset.

- Iterate and Improve: Use the evaluation results to refine your training process. Adjust hyperparameters, enhance your dataset, or improve preprocessing steps to boost model performance.

By following these structured procedures, you can train an LLM on your codebase so that it aligns with your coding requirements and enhances your development efficiency. Explore the tools available on the Kodezi platform to further improve your coding practices and outcomes.
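The dataset split, model loading, and fine-tuning steps above can be sketched roughly as follows. This is an illustration under stated assumptions rather than a definitive recipe: it assumes Hugging Face Transformers with PyTorch and the `tensorboard` package are installed, that `train_dataset` and `eval_dataset` are already tokenized, and that the caller supplies the checkpoint name. The helper names and hyperparameter values are hypothetical starting points. Files are assigned to splits by hashing their path, which is one possible way to keep the split stable between runs.

```python
import hashlib


def assign_split(path: str, val_pct: int = 10, test_pct: int = 10) -> str:
    """Deterministically assign a file to train, validation, or test.

    Hashing the path (instead of shuffling) keeps a file in the same split
    across runs, so test files do not drift into the training set later.
    """
    bucket = int(hashlib.sha256(path.encode("utf-8")).hexdigest(), 16) % 100
    if bucket < test_pct:
        return "test"
    if bucket < test_pct + val_pct:
        return "validation"
    return "train"


def split_files(paths: list[str]) -> dict[str, list[str]]:
    """Group file paths by their assigned split."""
    splits: dict[str, list[str]] = {"train": [], "validation": [], "test": []}
    for path in paths:
        splits[assign_split(path)].append(path)
    return splits


def fine_tune(checkpoint: str, train_dataset, eval_dataset, output_dir: str):
    """Fine-tune a pre-trained causal language model on tokenized datasets.

    Imports are local so the splitting helpers above still work where
    Transformers is not installed. Hyperparameter values are illustrative.
    """
    from transformers import (
        AutoModelForCausalLM,
        AutoTokenizer,
        DataCollatorForLanguageModeling,
        Trainer,
        TrainingArguments,
    )

    tokenizer = AutoTokenizer.from_pretrained(checkpoint)
    if tokenizer.pad_token is None:
        # Many causal LM tokenizers ship without a padding token.
        tokenizer.pad_token = tokenizer.eos_token
    model = AutoModelForCausalLM.from_pretrained(checkpoint)

    args = TrainingArguments(
        output_dir=output_dir,
        learning_rate=5e-5,
        per_device_train_batch_size=4,
        num_train_epochs=3,
        logging_steps=50,
        report_to="tensorboard",  # write metrics that TensorBoard can display
    )
    trainer = Trainer(
        model=model,
        args=args,
        train_dataset=train_dataset,
        eval_dataset=eval_dataset,
        # mlm=False selects next-token (causal) language modeling.
        data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
    )
    trainer.train()
    return trainer.evaluate()  # metrics computed on eval_dataset


files = [f"src/module_{i}.py" for i in range(200)]
splits = split_files(files)
print({name: len(members) for name, members in splits.items()})
```

Only the splitting helpers run in the usage lines at the bottom; `fine_tune` is defined but not called, because it downloads a model and needs suitable hardware. Keep the test split untouched until the final evaluation so its score reflects code the model has not seen.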

Troubleshoot Common Issues During Training

During training, developers often face common challenges that can hinder progress. Have you encountered issues like insufficient memory or overfitting? Here are some troubleshooting tips that can help you navigate these obstacles effectively:

- Insufficient Memory: If you run out of memory during training, consider lowering the batch size or using gradient accumulation to keep memory usage under control. Furthermore, methods such as activation offloading can be advantageous, as they move activations between GPU and CPU memory, enabling the training of larger models or the use of larger batch sizes.

- Overfitting: Is your model performing well on the training set but poorly on the validation set? This may indicate overfitting. Implement techniques such as dropout, early stopping, or data augmentation to mitigate this. In addition, fine-tuning only a small subset of the model's parameters, rather than all of them, can drastically reduce the computing power and time needed, making AI customization faster and more affordable.

- Poor Performance: If the model's performance is not improving, it may be time to revisit your data preprocessing steps. Ensure that your dataset is diverse and representative of the tasks you want the model to perform. Testing different configurations can help find the right balance between memory efficiency and computational speed.

- Training Instability: Are you noticing erratic behavior, such as a loss that spikes or oscillates? Try lowering the learning rate or using a learning rate scheduler to stabilize training. This can lead to more consistent performance improvements.

- Debugging Errors: If you encounter errors during training, use logging to capture error messages and stack traces. This information can help you identify the source of the problem.

By proactively addressing these common issues, you can train an LLM on your codebase more smoothly and achieve better results. For instance, in setups with high-bandwidth interconnects, strategies like ZeRO have reportedly delivered 3–5x faster training for models ranging from 20 to 80 billion parameters, which shows how much memory management matters during training. Explore the tools available on the Kodezi platform to enhance your productivity and code quality.
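Several of the remedies above come down to simple arithmetic or bookkeeping. The sketch below shows them in plain Python, independent of any training framework. The function and class names are hypothetical, and linear warmup followed by cosine decay is only one common choice of learning rate schedule, used here as an assumption.

```python
import math


def effective_batch_size(per_device_batch: int, accumulation_steps: int, devices: int = 1) -> int:
    """Samples contributing to each optimizer update under gradient accumulation."""
    return per_device_batch * accumulation_steps * devices


def learning_rate_at(step: int, total_steps: int, peak_lr: float, warmup_steps: int) -> float:
    """Linear warmup to peak_lr, then cosine decay to zero."""
    if step < warmup_steps:
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return 0.5 * peak_lr * (1.0 + math.cos(math.pi * min(1.0, progress)))


class EarlyStopping:
    """Signal a stop once validation loss has not improved for `patience` checks."""

    def __init__(self, patience: int = 3, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = math.inf
        self.checks_without_improvement = 0

    def should_stop(self, validation_loss: float) -> bool:
        if validation_loss < self.best_loss - self.min_delta:
            self.best_loss = validation_loss
            self.checks_without_improvement = 0
        else:
            self.checks_without_improvement += 1
        return self.checks_without_improvement >= self.patience


# A per-device batch of 4 with 8 accumulation steps updates on 32 samples,
# while only 4 samples are processed in each forward and backward pass.
print(effective_batch_size(per_device_batch=4, accumulation_steps=8))

stopper = EarlyStopping(patience=2)
validation_losses = [1.90, 1.42, 1.31, 1.33, 1.35, 1.29]
stopped_at = None
for epoch, loss in enumerate(validation_losses):
    if stopper.should_stop(loss):
        stopped_at = epoch
        print(f"stopping at epoch index {epoch}, best validation loss {stopper.best_loss}")
        break
```

In this run the loop stops at epoch index 4, after two consecutive checks without improvement, so the later value of 1.29 is never seen. A larger `patience` trades extra training time for tolerance of noisy validation curves.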

Conclusion

Training a Large Language Model (LLM) on a codebase presents significant challenges for developers, and meeting them takes an approach grounded in foundational concepts, the right tools, and systematic procedures. By recognizing the intricacies of LLMs and the importance of data representation, organizations can lay the groundwork for successful training. Combining this knowledge with the right tools, such as Python, TensorFlow, and cloud computing resources, can significantly enhance LLM training efforts.

In addition, following a structured training process is crucial for optimizing LLM capabilities. Each step, from preparing datasets to fine-tuning models and monitoring performance, contributes to the overall effectiveness of the training program. Being equipped to troubleshoot common issues ensures that potential setbacks do not derail progress. By proactively addressing challenges like memory constraints and overfitting, developers can maintain momentum and refine their models for better outcomes.

Ultimately, harnessing the power of LLMs can drive innovation and efficiency in software development. By adopting a comprehensive approach that encompasses understanding foundational principles, utilizing the right tools, and following systematic training procedures, organizations can unlock the full potential of these advanced AI systems. Embracing this journey not only enhances coding practices but also positions organizations at the forefront of technological advancement.

Frequently Asked Questions

What are the foundational concepts necessary for training LLMs on a codebase?

Key foundational concepts include understanding the structure of the codebase, the programming languages and frameworks used, and the formatting of data for effective comprehension and learning.

What challenges do developers face when managing complex codebases?

Developers often encounter significant challenges related to understanding and navigating the complexities of codebases, which can impact coding efficiency and productivity.

How does Kodezi help address the challenges of managing codebases?

Kodezi is a platform designed to enhance coding efficiency, helping developers manage complex codebases more effectively and improve productivity and code quality.

What are Large Language Models (LLMs) and what tasks can they perform?

LLMs are sophisticated AI systems capable of understanding and generating human-like text. They can perform tasks such as code generation, debugging, and optimization.

Why is understanding a codebase important for training LLMs?

Understanding a codebase is essential because its structure, programming languages, and frameworks directly influence the learning process of the LLM, affecting how well it can comprehend and generate code.

What role does automated code debugging play in the context of LLMs?

Automated code debugging helps by instantly identifying and fixing issues, providing detailed explanations of what went wrong, and ensuring compliance with security best practices.

How should data be formatted for LLMs?

Data should be formatted appropriately, including how code snippets, comments, and documentation are presented, to aid comprehension and learning for the LLM.

Why is it important to understand the architecture of the chosen LLM?

Understanding the architecture of the LLM is important because different models have unique requirements and capabilities, which influences how to set up your codebase for optimal results.