Introduction

In software development, code coverage has become a key metric: it measures how much of a codebase is executed by tests and serves as one indicator of overall code quality. Tools like SonarQube show developers how much of their code is actually tested, which helps them build more robust applications with fewer bugs. As organizations push for higher-quality software, solutions like Kodezi complement this process by helping teams find untested areas quickly and improve their coding practices.

By embracing automated debugging and continuous monitoring, developers can foster a culture of quality that drives efficiency and innovation, ensuring that their applications not only meet but exceed user expectations. As the focus on code coverage continues to evolve, understanding its nuances becomes paramount for teams aiming to stay ahead in the competitive landscape of software development.

Introduction to Code Coverage in SonarQube

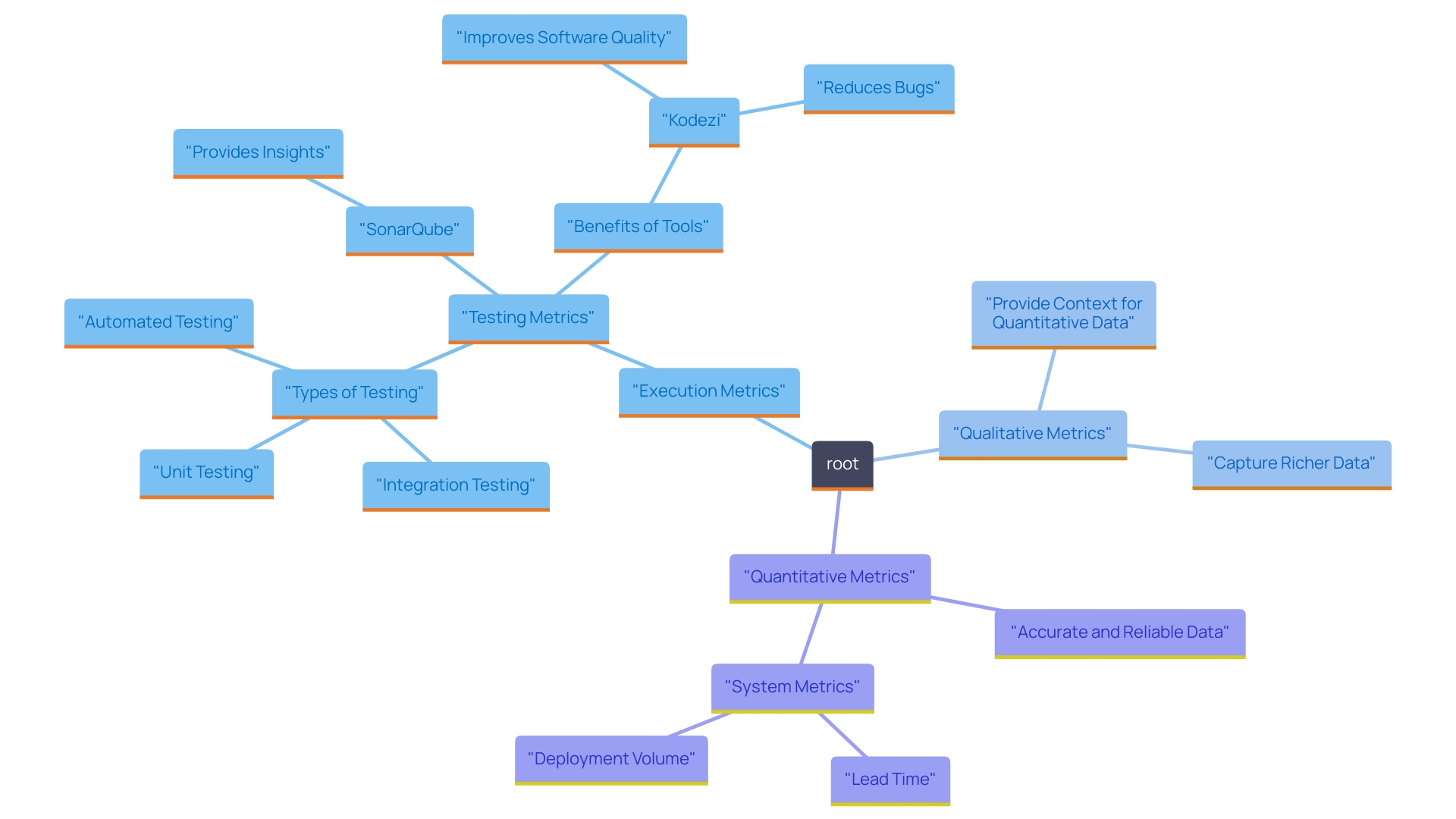

Code coverage is an execution metric that quantifies how much of a program's source code runs during testing. In SonarQube, coverage metrics give insight into your testing efforts by revealing how much of your program is exercised by unit tests, integration tests, and other automated tests. Higher coverage usually correlates with fewer defects and a more maintainable codebase.
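
SonarQube does not measure coverage itself; for Java projects it typically imports an XML report produced by JaCoCo. As a minimal sketch, assuming a heavily trimmed, invented JaCoCo XML report, the Python below reads the report-level counters and turns each into a percentage. The sample numbers and the summarize helper are hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily trimmed JaCoCo XML report. Real reports also contain
# <sessioninfo>, <package>, <class>, and <sourcefile> elements; the <counter>
# elements that are direct children of <report> hold the project-wide totals.
SAMPLE_REPORT = """
<report name="demo">
  <counter type="INSTRUCTION" missed="40" covered="160"/>
  <counter type="BRANCH" missed="6" covered="14"/>
  <counter type="LINE" missed="10" covered="40"/>
</report>
"""


def summarize(xml_text: str) -> dict:
    """Return the coverage percentage for each report-level counter type."""
    root = ET.fromstring(xml_text.strip())
    percentages = {}
    for counter in root.findall("counter"):  # direct children of <report> only
        missed = int(counter.get("missed"))
        covered = int(counter.get("covered"))
        total = missed + covered
        percentages[counter.get("type")] = 100.0 * covered / total if total else 0.0
    return percentages


print(summarize(SAMPLE_REPORT))
# {'INSTRUCTION': 80.0, 'BRANCH': 70.0, 'LINE': 80.0}
```

The same project can therefore have several "coverage" numbers at once, depending on whether instructions, branches, or lines are counted.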

SonarQube provides comprehensive visual reports and detailed metrics that help developers identify untested areas of their code, which is crucial for teams aiming to make their tests more complete and raise overall software quality. As Johanna South noted, "How do you know what value test automation brings to your projects and teams? Metrics can guide you in understanding this value."
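
SonarQube highlights untested code in its source viewer. The sketch below shows the same idea offline. It assumes an invented JaCoCo XML fragment and lists, per file, the lines that never ran and the lines that ran but left some branches unexecuted. The find_gaps helper is hypothetical.

```python
import xml.etree.ElementTree as ET

# Hypothetical JaCoCo XML fragment. Per <line>: nr = line number,
# mi/ci = missed/covered instructions, mb/cb = missed/covered branches.
SAMPLE_REPORT = """
<report name="demo">
  <package name="com/example">
    <sourcefile name="Calculator.java">
      <line nr="5" mi="0" ci="3" mb="0" cb="0"/>
      <line nr="6" mi="2" ci="1" mb="1" cb="1"/>
      <line nr="9" mi="4" ci="0" mb="0" cb="0"/>
    </sourcefile>
    <sourcefile name="Parser.java">
      <line nr="3" mi="0" ci="5" mb="0" cb="0"/>
    </sourcefile>
  </package>
</report>
"""


def find_gaps(xml_text: str) -> dict:
    """Map each source file to its uncovered and partially covered lines."""
    root = ET.fromstring(xml_text.strip())
    gaps = {}
    for package in root.iter("package"):
        for source in package.findall("sourcefile"):
            path = f"{package.get('name')}/{source.get('name')}"
            uncovered, partial = [], []
            for line in source.findall("line"):
                nr = int(line.get("nr"))
                if int(line.get("ci")) == 0:
                    uncovered.append(nr)  # no instruction on this line ran
                elif int(line.get("mb")) > 0:
                    partial.append(nr)  # line ran, but some branch never did
            if uncovered or partial:  # fully covered files are omitted
                gaps[path] = {"uncovered": uncovered, "partial": partial}
    return gaps


for path, lines in find_gaps(SAMPLE_REPORT).items():
    print(path, lines)
# com/example/Calculator.java {'uncovered': [9], 'partial': [6]}
```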

The importance of testing metrics is hard to overstate, particularly now that organizations can scan their programs an unlimited number of times to monitor quality continuously. This ongoing assessment helps teams follow software development best practices, detect potential problems early, and deliver robust applications that meet user expectations and industry standards.

In 2024, test coverage remains a fundamental software quality metric, and its role in the development process continues to grow. Adding Kodezi's automated debugging and AI-driven automated builds to this framework supports the efficient, results-oriented approach needed to sustain high programming standards. For instance, teams using Kodezi have reported a significant reduction in bugs because it identifies and fixes code issues before deployment. With these tools, teams can optimize performance, ensure security compliance, and enforce coding standards. Combining Kodezi's features with SonarQube's metrics strengthens the testing process and reinforces a culture of continuous improvement in software development.

Troubleshooting Code Coverage Discrepancies in SonarQube

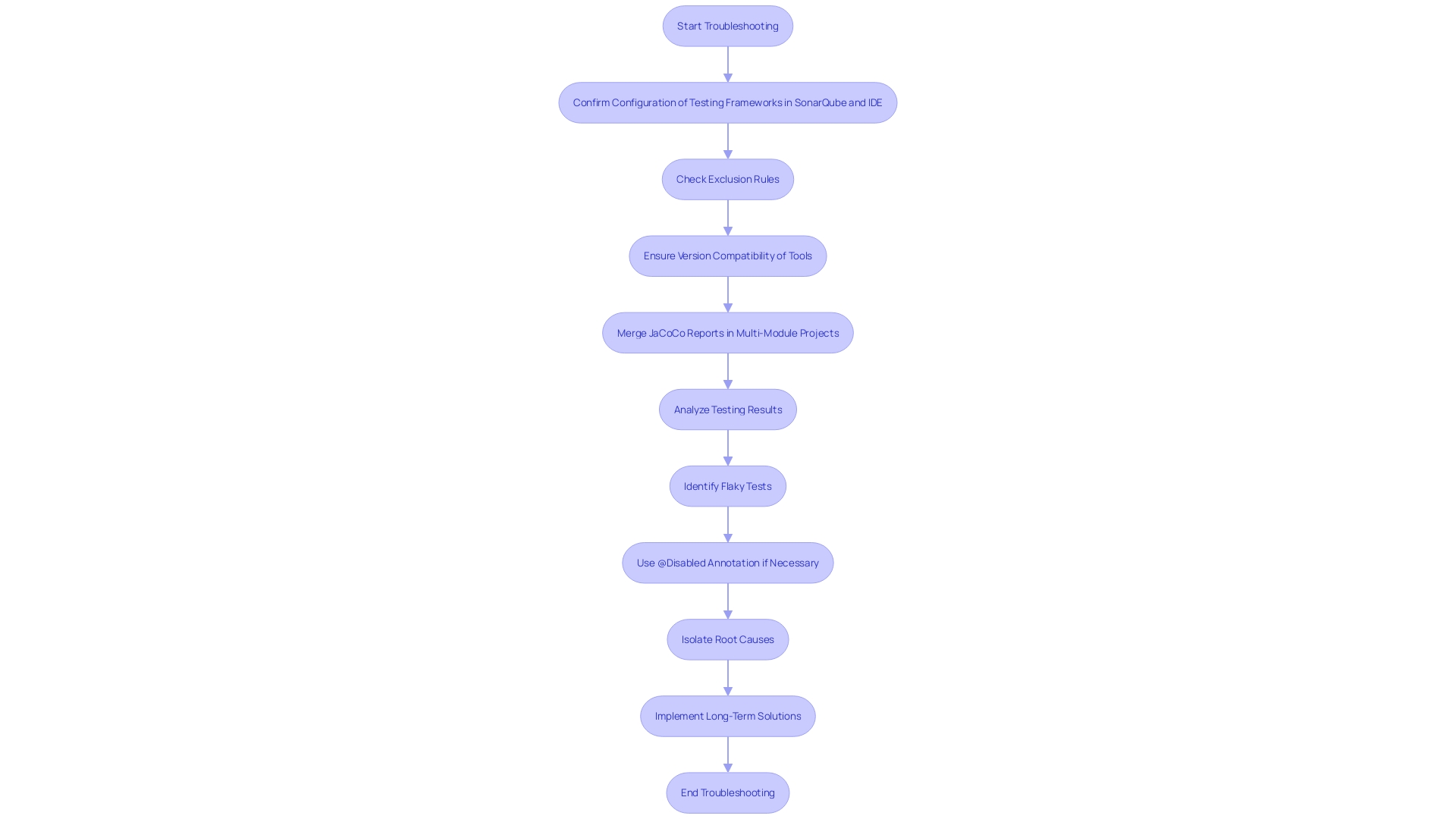

Discrepancies between SonarQube coverage reports and the coverage shown in your IDE can pose considerable challenges. These inconsistencies usually stem from differences in test environments, configuration settings, and the methods each tool uses to compute coverage. According to recent statistics, roughly 30% of developers report inconsistencies in their testing results, which shows how important it is to address these problems.
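
One methodology difference is easy to demonstrate. SonarQube documents its overall Coverage metric as a mix of line and condition coverage, (CT + CF + LC) / (2*B + EL). A view that shows only line coverage ignores untested branches. The sketch below applies both formulas to the same hypothetical counts; the function names are illustrative, not an API.

```python
def line_coverage(lc: int, el: int) -> float:
    """Plain line coverage: covered lines / executable lines."""
    return 100.0 * lc / el


def sonar_coverage(ct: int, cf: int, lc: int, b: int, el: int) -> float:
    """SonarQube's combined Coverage metric: (CT + CF + LC) / (2*B + EL).

    ct: conditions evaluated to true at least once
    cf: conditions evaluated to false at least once
    lc: covered lines
    b:  total number of conditions
    el: total number of executable lines
    """
    return 100.0 * (ct + cf + lc) / (2 * b + el)


# Hypothetical counts: 90 of 100 executable lines ran, but of 20 conditions
# only 18 were ever true and only 10 were ever false.
print(f"line coverage: {line_coverage(90, 100):.1f}%")  # 90.0%
print(f"SonarQube coverage: {sonar_coverage(18, 10, 90, 20, 100):.1f}%")  # 84.3%
```

Neither number is wrong; they answer different questions, so compare like with like before concluding that the tools disagree.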

To troubleshoot these problems, start by confirming that your testing frameworks are configured correctly in both SonarQube and your IDE. Pay close attention to SonarQube rules and settings that affect reporting, such as exclusion rules for specific files or folders; typical exclusions include test directories and paths that are not relevant to the analysis. Also confirm that both tools are measuring the same version of the code, because even minor changes can produce noticeably different results.
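
Exclusions change the denominator of the coverage calculation. A file that is excluded in SonarQube (for example through the sonar.coverage.exclusions property) but still counted by your IDE is enough to make the numbers disagree. The sketch below uses invented per-file counts and a simplified Ant-style pattern matcher (**, *, ?). It approximates the idea and is not SonarQube's own matching logic; both helpers are hypothetical.

```python
import re


def ant_to_regex(pattern):
    """Translate a simplified Ant-style pattern (**, *, ?) into a compiled regex."""
    parts, i = [], 0
    while i < len(pattern):
        if pattern.startswith("**/", i):
            parts.append("(?:.*/)?")  # zero or more directories
            i += 3
        elif pattern.startswith("**", i):
            parts.append(".*")  # anything, including '/'
            i += 2
        elif pattern[i] == "*":
            parts.append("[^/]*")  # anything within a single path segment
            i += 1
        elif pattern[i] == "?":
            parts.append("[^/]")  # exactly one character, not '/'
            i += 1
        else:
            parts.append(re.escape(pattern[i]))
            i += 1
    return re.compile("".join(parts))


def coverage_after_exclusions(files, exclusions):
    """files maps path -> (covered_lines, executable_lines); returns a percentage."""
    regexes = [ant_to_regex(p) for p in exclusions]
    kept = [counts for path, counts in files.items()
            if not any(rx.fullmatch(path) for rx in regexes)]
    covered = sum(c for c, _ in kept)
    total = sum(t for _, t in kept)
    return 100.0 * covered / total if total else 0.0


# Hypothetical per-file line counts.
FILES = {
    "src/main/java/com/example/Service.java": (80, 100),
    "src/main/java/com/example/generated/Dto.java": (0, 60),
    "src/main/java/com/example/Util.java": (20, 40),
}

print(coverage_after_exclusions(FILES, []))  # 50.0
print(round(coverage_after_exclusions(FILES, ["**/generated/**"]), 1))  # 71.4
```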

A user who faced similar challenges highlighted the need to merge the JaCoCo reports from the individual modules of a multi-module Maven project:

'After a lot of struggle finding the correct solution on Maven multi-module projects, we need to ensure we pick JaCoCo reports from individual modules and merge them into one report.'
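
In a Maven build this is usually handled by the jacoco-maven-plugin's report-aggregate goal (or its merge goal for the raw .exec files). The Python below only illustrates the underlying idea on invented XML reports: after merging, a line counts as covered if any module's tests covered it. Unioning line-level XML data is a simplification of what merging JaCoCo's execution data actually does, and all helper names are hypothetical.

```python
import xml.etree.ElementTree as ET


def line_hits(xml_text):
    """Return {(file_path, line_nr): covered?} for one JaCoCo XML report."""
    root = ET.fromstring(xml_text.strip())
    hits = {}
    for package in root.iter("package"):
        for source in package.findall("sourcefile"):
            path = f"{package.get('name')}/{source.get('name')}"
            for line in source.findall("line"):
                hits[(path, int(line.get("nr")))] = int(line.get("ci")) > 0
    return hits


def merge_reports(*xml_texts):
    """Union module reports: a line counts as covered if any report covered it."""
    merged = {}
    for text in xml_texts:
        for key, covered in line_hits(text).items():
            merged[key] = merged.get(key, False) or covered
    return merged


def percent_covered(hits):
    return 100.0 * sum(hits.values()) / len(hits) if hits else 0.0


# Hypothetical reports: the core module's unit tests, an integration-test
# module that also exercises core code, and the api module.
CORE = """<report name="core"><package name="com/example/core">
  <sourcefile name="Calc.java">
    <line nr="5" mi="0" ci="3"/><line nr="6" mi="0" ci="2"/><line nr="9" mi="4" ci="0"/>
  </sourcefile></package></report>"""
IT = """<report name="it"><package name="com/example/core">
  <sourcefile name="Calc.java">
    <line nr="5" mi="3" ci="0"/><line nr="6" mi="2" ci="0"/><line nr="9" mi="0" ci="4"/>
  </sourcefile></package></report>"""
API = """<report name="api"><package name="com/example/api">
  <sourcefile name="Controller.java">
    <line nr="10" mi="0" ci="1"/><line nr="11" mi="2" ci="0"/>
  </sourcefile></package></report>"""

print(round(percent_covered(line_hits(CORE)), 1))  # 66.7
print(percent_covered(merge_reports(CORE, IT, API)))  # 80.0
```

Looking at one module's report in isolation understates coverage here, because line 9 of Calc.java is only exercised by the integration tests.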

Also check that your tools are compatible. In one case involving Jenkins, a version mismatch between the Jenkins JaCoCo plugin and the Maven JaCoCo plugin caused coverage results to display incorrectly. Updating the Maven JaCoCo plugin to a newer version resolved the issue and restored the expected results in Jenkins.

By systematically reviewing these elements, including configuration settings and tool compatibility, developers can accurately identify and rectify the sources of discrepancies. This approach ensures that their coverage metrics provide a trustworthy reflection of their testing efforts.

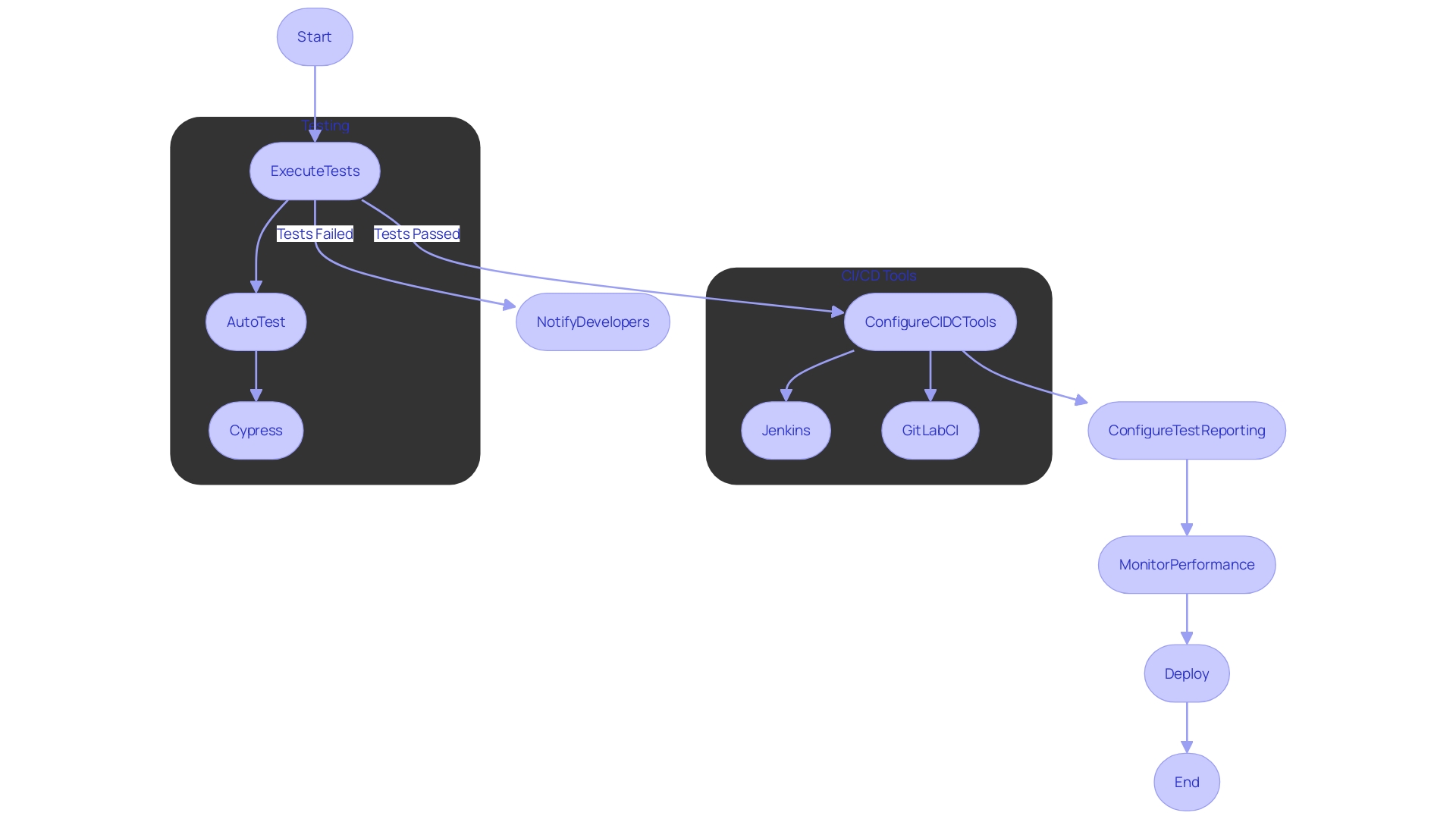

Integrating SonarQube with CI/CD Pipelines

Integrating SonarQube with your CI/CD pipelines is a strategic step toward continuous assessment of software quality, because coverage metrics are then evaluated as part of every build. In 2023, we launched the Kodezi CLI, which lets professionals integrate Kodezi into their CI/CD pipelines and use its features locally. To set this up, configure your CI/CD tool (Jenkins, GitLab CI, or Travis CI) to run the analysis on every build by adding a few commands to your build scripts that trigger the scans, including Kodezi scans.

Run your tests before triggering the analysis. SonarQube does not execute tests itself; it imports the coverage reports your test run produces, so running the tests first ensures the analysis reflects the current state of the code. With this setup, you can automatically fail builds when coverage drops below a specified threshold (in SonarQube, a quality gate condition), which encourages developers to prioritize robust testing and uphold high quality standards.
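
SonarQube expresses such thresholds as quality gate conditions, for example on coverage of new code. The sketch below is not SonarQube's implementation. It is a hypothetical standalone check that computes coverage only over the lines changed in a build and maps the result to a process exit code. The input sets, helper names, and the 80% threshold are assumptions for illustration.

```python
# Hypothetical inputs: lines changed in this build, lines that contain
# executable code, and lines the test run covered, per file.
CHANGED = {"src/Calc.java": {10, 11, 12, 13}}
EXECUTABLE = {"src/Calc.java": {10, 11, 12, 20}}
COVERED = {"src/Calc.java": {10, 11, 20}}


def new_code_coverage(changed, executable, covered):
    """Percentage of changed, executable lines that the tests covered."""
    total = hit = 0
    for path, lines in changed.items():
        relevant = lines & executable.get(path, set())  # ignore blanks/comments
        total += len(relevant)
        hit += len(relevant & covered.get(path, set()))
    # With nothing new to cover, this sketch treats the check as passing.
    return 100.0 * hit / total if total else 100.0


def gate(coverage, threshold=80.0):
    """Return a process exit code: 0 lets the build pass, 1 fails it."""
    return 0 if coverage >= threshold else 1


cov = new_code_coverage(CHANGED, EXECUTABLE, COVERED)
print(f"coverage on new code: {cov:.1f}% -> exit code {gate(cov)}")
# coverage on new code: 66.7% -> exit code 1
```

In a CI step you would derive the sets from your diff and coverage report, then end the script with sys.exit(gate(cov)) so that a non-zero exit status fails the build.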

This proactive approach improves overall code quality and nurtures a culture of continuous improvement within development teams. Typical CI/CD setups look like this:

- Jenkins can run scans through a dedicated plugin, such as the SonarQube Scanner for Jenkins.

- GitLab CI can add the analysis to its pipeline with a short job definition in its YAML configuration file, .gitlab-ci.yml.

As Manish Kapur, senior director for product & solutions at the company, put it, 'It’s not enough to have tools.' Teams must ensure that the tools they use fit the Clean as You Code model and don't create more problems for developers than they solve. This underscores the importance of choosing tools that improve productivity without adding complexity. Aligning with the Clean as You Code philosophy helps minimize complications and maximize productivity, especially as code churn is expected to double by the end of the year. Kodezi currently supports over 30 languages and works with Visual Studio Code, with support for more IDEs planned.

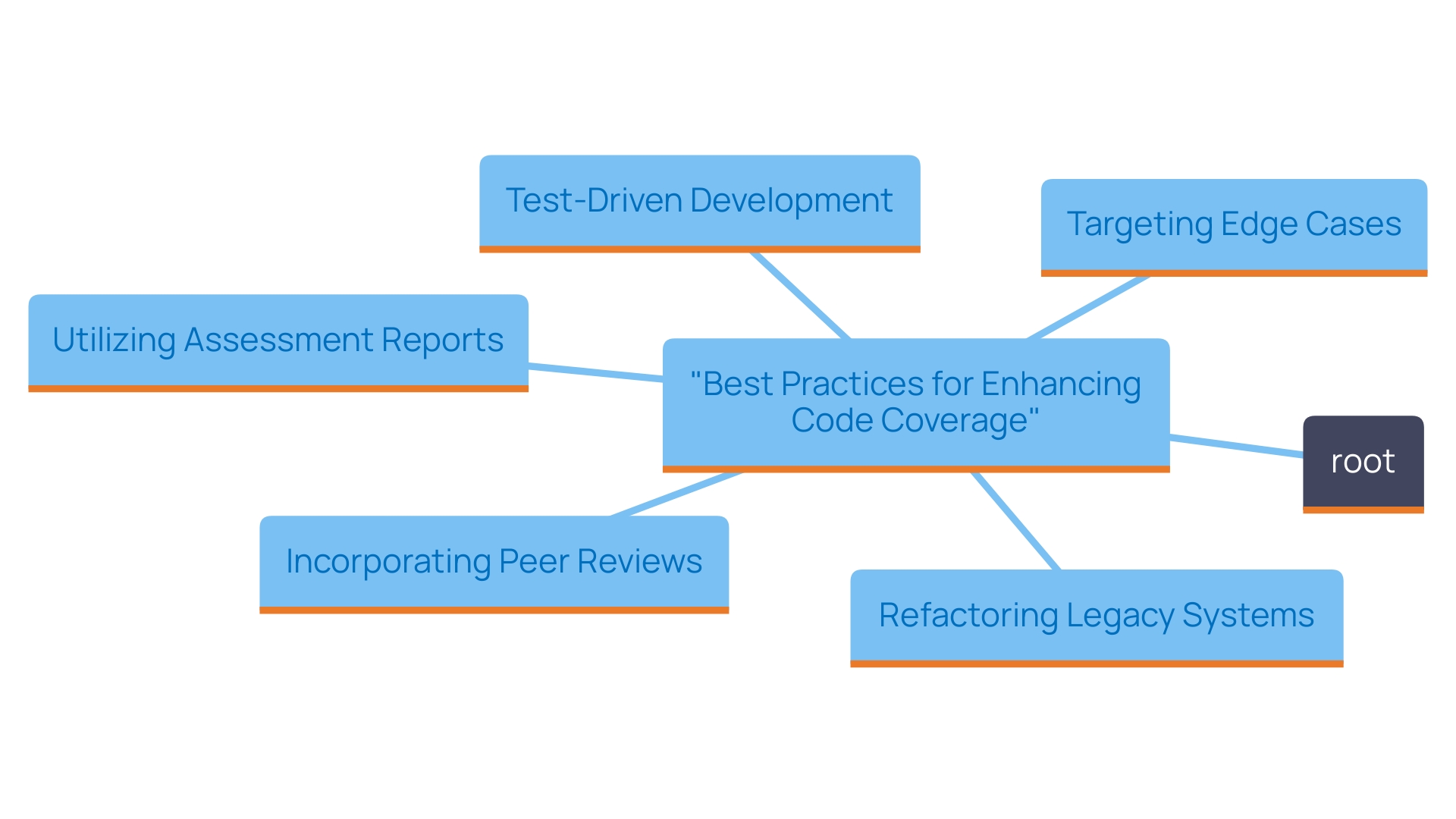

Best Practices for Improving Code Coverage

To achieve consistent improvements in code coverage, developers should adopt the following best practices:

- Write Tests First: Adopt Test-Driven Development (TDD) by writing tests before the implementation. This approach tends to produce more comprehensive tests and pushes developers to think through the application's usage scenarios up front. Recent studies indicate that teams using TDD can increase test completeness by as much as 30% within the first few iterations.

- Target Edge Cases: Identify and test edge cases that standard tests may miss, such as error conditions and unusual inputs, so that all possible outcomes are examined. For example, one project reported a 25% reduction in reported bugs after it began actively addressing edge cases in its TDD approach.

- Use Coverage Reports: Regularly review the coverage reports SonarQube produces to find untested code, and prioritize raising coverage in the critical components of your application. Focusing on these metrics helps teams decide where to allocate their testing effort.

- Refactor Legacy Systems: Gradually refactor legacy systems to improve their readability and maintainability. This makes it easier to write tests for previously untested code and fosters a culture of continuous improvement. Refactoring also reduces technical debt, which can improve team morale.

- Incorporate Code Reviews: Use peer reviews to ensure that every new contribution comes with appropriate tests. This builds accountability and reinforces the team's programming standards. Teams that adopt this practice often report substantial improvements in code quality and collaboration.
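
The first two practices can be combined in one small example using Python's unittest. Here parse_percentage is a hypothetical function invented for illustration. In TDD the test class would be written and run (and would fail) before the function exists. Both appear in one file here only so that the example runs.

```python
import unittest


# Step 1: the tests, written before the implementation. Besides the happy
# path they pin down edge cases: whitespace, boundaries, and bad input.
class ParsePercentageTest(unittest.TestCase):
    def test_plain_value(self):
        self.assertEqual(parse_percentage("42%"), 42.0)

    def test_surrounding_whitespace(self):
        self.assertEqual(parse_percentage("  7.5 % "), 7.5)

    def test_boundaries(self):
        self.assertEqual(parse_percentage("0%"), 0.0)
        self.assertEqual(parse_percentage("100%"), 100.0)

    def test_rejects_bad_input(self):
        for bad in ["", "%", "42", "abc%", "101%", "-1%"]:
            with self.subTest(bad=bad):
                with self.assertRaises(ValueError):
                    parse_percentage(bad)


# Step 2: the simplest implementation that makes the tests pass.
def parse_percentage(text: str) -> float:
    """Parse a string such as '42%' into 42.0; reject malformed or out-of-range input."""
    cleaned = text.strip()
    if not cleaned.endswith("%"):
        raise ValueError(f"missing '%' in {text!r}")
    value = float(cleaned[:-1])  # float() raises ValueError for non-numbers
    if not 0.0 <= value <= 100.0:
        raise ValueError(f"out of range: {value}")
    return value
```

Run it with python -m unittest followed by the module name. Without the edge-case test, the error-handling branches would stay uncovered even though the happy-path test passes.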

By consistently applying these best practices, developers can systematically enhance code coverage, leading to the development of more reliable and maintainable software. As Shigeru Mizuno wisely observes, 'Excellence must be continually enhanced, but it is equally essential to ensure that standards never decline.' Making quality a habitual practice ensures excellence in every release.

Conclusion

Achieving high code coverage is essential for delivering robust software applications that meet user expectations and industry standards. By leveraging tools like SonarQube and integrating Kodezi, developers can gain critical insights into their testing effectiveness, identify untested areas, and foster a culture of quality improvement. The ongoing assessment capabilities of these tools empower teams to continuously monitor and enhance their code quality, ultimately leading to fewer bugs and a more maintainable codebase.

Addressing discrepancies in code coverage reports is equally vital. By ensuring proper configuration and compatibility of testing frameworks and tools, developers can trust that their metrics accurately reflect their testing efforts. This diligence not only enhances the reliability of the code coverage data but also contributes to a more effective testing strategy.

Implementing best practices such as Test-Driven Development, targeting edge cases, and incorporating peer code reviews can significantly elevate code coverage levels. By committing to these practices, teams can ensure that quality is ingrained in their development processes. As the landscape of software development continues to evolve, embracing these strategies will enable organizations to stay ahead, delivering exceptional software that meets the demands of an ever-changing market.

Frequently Asked Questions

What are execution metrics in the context of software testing?

Execution metrics such as code coverage quantify how much of a program's source code runs during testing, providing insight into the effectiveness of testing efforts.

How does SonarQube contribute to testing metrics?

SonarQube provides comprehensive visual reports and detailed metrics that help developers identify untested areas, improving test completeness and overall software standards.

Why are testing metrics important for organizations?

Testing metrics let organizations monitor quality continuously: because programs can be scanned an unlimited number of times, teams can track adherence to best practices, deliver robust applications, and detect potential problems early.

What role does Kodezi play in improving software quality metrics?

Kodezi integrates automated debugging and AI-driven automated builds, which help reduce bug incidence by identifying and rectifying code issues before deployment, optimizing performance, and ensuring security compliance.

What are common discrepancies between SonarQube and IDE testing results?

Discrepancies often arise from variations in test environments, configuration settings, and the methodologies used by different tools to compute results.

How can developers troubleshoot discrepancies in testing results?

Developers should confirm that testing frameworks are correctly configured, check exclusion rules in SonarQube, ensure code versions match, and merge reports from individual modules in multi-module projects.

What steps can be taken to integrate Kodezi into CI/CD pipelines?

Developers can configure their CI/CD tools to initiate Kodezi scans during each build by adding commands to their build scripts and ensuring tests are executed beforehand for accurate data.

What best practices can improve code coverage?

Best practices include writing tests first (TDD), targeting edge cases, utilizing assessment reports, refactoring legacy systems, and incorporating peer reviews to enhance test completeness and reliability.

How can regular assessment reports from SonarQube benefit developers?

Regular coverage reports help identify untested code, allowing teams to allocate testing resources effectively and improve overall reliability.

What is the significance of maintaining high programming standards?

Maintaining high programming standards through practices like TDD, peer reviews, and refactoring leads to more reliable and maintainable software, fostering a culture of continuous improvement.