Introduction

In the rapidly evolving landscape of software development, ensuring code quality and security has never been more critical. Static code analysis tools, particularly those powered by AI, offer developers an invaluable resource for enhancing their Golang projects.

By meticulously examining source code without execution, these tools identify potential bugs, security vulnerabilities, and stylistic inconsistencies early in the development process. This proactive approach not only streamlines debugging and optimization but also cultivates a culture of continuous improvement within development teams.

As organizations increasingly recognize the importance of efficiency and productivity, the integration of sophisticated static analysis tools becomes a pivotal strategy in achieving robust, maintainable, and high-quality codebases.

Understanding Static Code Analysis Tools for Golang

Static analysis tools, including Kodezi's AI-driven solutions, help maintain the integrity of Golang programs by examining source code without executing it. This lets developers identify and fix potential bugs, security vulnerabilities, and style inconsistencies early, which improves overall code quality. Kodezi's automated debugging features provide detailed explanations of the issues they find, supporting faster resolution and performance improvements.

According to recent statistics, the use of such tools can reduce bugs in production environments by up to 30%. Among the most commonly used tools are golint, go vet, and staticcheck, each offering a different perspective on quality and adherence to best practices. For instance, golint addresses stylistic issues (the project is now frozen and deprecated, and its README points users to staticcheck and go vet instead), while go vet reports suspicious constructs that compile but are likely to be wrong, such as Printf calls whose arguments do not match the format string.
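To make "examining source code without executing it" concrete, here is a minimal sketch of a style check in the spirit of the linters above, built on the standard library's go/parser and go/ast packages. It is not how golint, go vet, or staticcheck are implemented; the function name `undocumentedExported` and the sample source are assumptions made for illustration.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// undocumentedExported returns the names of exported functions and methods
// in src that have no doc comment. The source is only parsed, never run.
// (Hypothetical helper, written for this illustration.)
func undocumentedExported(src string) ([]string, error) {
	fset := token.NewFileSet()
	// ParseComments keeps doc comments attached to the declarations.
	file, err := parser.ParseFile(fset, "sample.go", src, parser.ParseComments)
	if err != nil {
		return nil, err
	}
	var names []string
	for _, decl := range file.Decls {
		fn, ok := decl.(*ast.FuncDecl)
		if !ok {
			continue // skip imports, types, variables, and constants
		}
		if fn.Name.IsExported() && fn.Doc == nil {
			names = append(names, fn.Name.Name)
		}
	}
	return names, nil
}

// sample is illustrative input: Add is documented, Sub is not,
// and helper is unexported so the rule does not apply to it.
const sample = `package demo

// Add returns the sum of a and b.
func Add(a, b int) int { return a + b }

func Sub(a, b int) int { return a - b }

func helper() {}
`

func main() {
	names, err := undocumentedExported(sample)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for _, n := range names {
		fmt.Printf("exported function %s has no doc comment\n", n)
	}
}
```

Because the check works on the syntax tree, it can run on code that does not yet build into a working program, which is what makes this kind of tool usable early in development.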

Kodezi's tools not only improve formatting and add exception handling features but also incorporate automated builds and testing into the development workflow, which improves collaboration, maintainability, and resource management. Furthermore, monitoring runtime statistics gives developers metrics on memory allocation and garbage collection, which helps in diagnosing performance issues. Deploying Kodezi's static analysis tools therefore supports a strong and efficient development environment.
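As a rough illustration of the runtime statistics mentioned above, the sketch below reads memory and garbage-collection counters through the standard library's runtime.ReadMemStats. It shows Go's built-in runtime metrics, not a Kodezi feature; the `snapshot` type, the `allocateAndCollect` helper, and the workload size are assumptions chosen for the example.

```go
package main

import (
	"fmt"
	"runtime"
)

// snapshot keeps a few fields of runtime.MemStats (illustrative selection).
type snapshot struct {
	HeapAllocBytes  uint64 // bytes of live heap objects
	TotalAllocBytes uint64 // cumulative bytes allocated on the heap
	NumGC           uint32 // completed garbage-collection cycles
}

// takeSnapshot reads the current runtime memory statistics.
func takeSnapshot() snapshot {
	var m runtime.MemStats
	runtime.ReadMemStats(&m)
	return snapshot{
		HeapAllocBytes:  m.HeapAlloc,
		TotalAllocBytes: m.TotalAlloc,
		NumGC:           m.NumGC,
	}
}

// sink is package-level so the buffers below are heap-allocated.
var sink [][]byte

// allocateAndCollect runs a small, artificial workload and returns the
// statistics observed before and after it.
func allocateAndCollect() (before, after snapshot) {
	before = takeSnapshot()
	for i := 0; i < 100; i++ {
		sink = append(sink, make([]byte, 64<<10)) // 64 KiB per buffer
	}
	sink = nil   // drop the references so the buffers become garbage
	runtime.GC() // force a collection so NumGC advances
	after = takeSnapshot()
	return before, after
}

func main() {
	before, after := allocateAndCollect()
	fmt.Printf("bytes allocated during workload: %d\n", after.TotalAllocBytes-before.TotalAllocBytes)
	fmt.Printf("GC cycles during workload: %d\n", after.NumGC-before.NumGC)
	fmt.Printf("live heap after GC: %d bytes\n", after.HeapAllocBytes)
}
```

Comparing snapshots taken around a suspect code path is a simple way to see whether it allocates more than expected.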

Best Practices for Implementing Static Code Analysis in Golang

To optimize the implementation of static code analysis in Golang, consider these essential best practices, enhanced by the capabilities of Kodezi CLI:

- Integrate Early: Introduce static analysis tools at the beginning of the development cycle. Identifying issues early keeps minor problems from escalating into significant setbacks, saving both time and resources. Kodezi CLI autonomously improves your codebase, addressing issues before they reach production and acting as an autocorrect for code.

- Automate the Process: Integrate static analysis tools into your CI/CD pipeline so that code is analyzed automatically on every commit. Kodezi’s AI-powered features fit into your workflow, helping maintain consistent code quality across the project.

- Set Clear Guidelines: Define explicit coding standards and guidelines for your team. With Kodezi, all team members can see why static analysis matters and use the platform’s insights to understand the feedback these tools produce.

- Regularly Update Tools: Keep your static analysis tools current. Kodezi continues to evolve with improved detection algorithms and new features, which makes it more effective at identifying issues.

- Review and Act on Feedback: Encourage developers to review static analysis feedback regularly and treat it as a constructive learning opportunity. Kodezi’s automatic bug analysis provides detailed explanations, fostering a culture of continuous improvement within the team.

- Customize Rulesets: Adjust the rulesets of your static analysis tools to fit your project’s specific requirements. Kodezi supports customized analysis, so feedback stays relevant and focused on the most important areas.

A practical example of static analysis in use is SonarLint, a lightweight tool that offers real-time analysis within IDEs such as Visual Studio Code and IntelliJ IDEA. With the SonarLint plugin installed, developers receive instant feedback as they write or modify code, with bugs and security vulnerabilities highlighted in real time. As noted by Ivan Homola, "You can also use it to detect and patch vulnerabilities in existing software applications and systems, reducing the risk of a malicious attack or data breach."

By adopting these best practices alongside Kodezi CLI, development teams can take full advantage of static analysis tools for Golang, resulting in cleaner, more efficient, and more secure repositories. Statistics indicate that companies that invest in efficient development cycles grow revenue four to five times faster than those that do not prioritize productivity, underscoring the impact of these practices on overall business success. Additionally, Kodezi's features, such as automatic code correction and detailed bug explanations, add value for teams looking to improve their coding practices.

Common Pitfalls to Avoid When Using Static Code Analysis

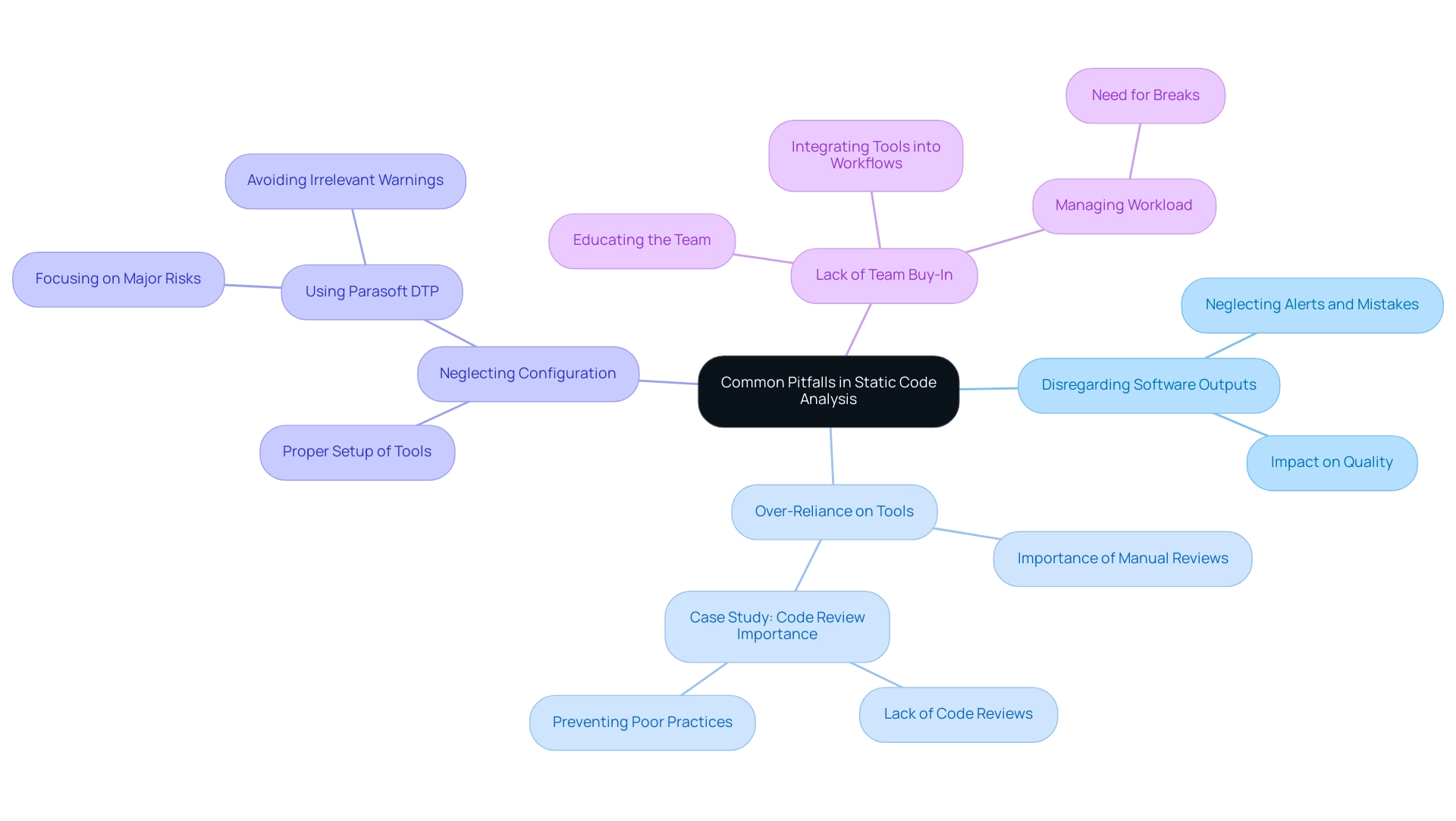

When applying static analysis in Golang, developers should recognize and address the following common pitfalls:

- Ignoring Tool Output: Over time, developers can become desensitized to the warnings and errors that analysis tools generate, which leads to significant problems being overlooked. Treat tool output seriously and address it promptly to maintain quality.

- Over-Reliance on Tools: Although static analysis tools are indispensable, they should not be the sole means of ensuring software quality. A robust development process also includes manual code reviews and comprehensive testing, which together improve project outcomes. As the case study on the importance of code analysis emphasizes, skipping code reviews can allow substandard coding practices to emerge that automated inspection alone may not detect.

- Neglecting Configuration: Proper configuration of static analysis tools is vital, as many offer extensive options for aligning their output with specific coding standards. Misconfigured tools can produce irrelevant warnings or, worse, miss critical issues. Tools such as Parasoft DTP can help teams focus on major risks rather than minor problems, which simplifies the analysis process.

- Lack of Team Buy-In: Static analysis tools are far less effective when team members do not see their value. Educating the team about the benefits of these tools and integrating them into daily workflows is essential for successful adoption. Given that many teams work 60 hours a week or more, efficient practices are vital for avoiding burnout and maintaining productivity.

Inconsistent quality often arises when static analysis is applied unevenly across teams or projects. A standardized approach ensures that all code is assessed consistently, which is essential for maintaining high standards throughout.

By actively addressing these pitfalls, development teams can manage the complexities of static analysis effectively, improving both efficiency and results. As noted by XENON HOLDINGS, effective quality assurance practices significantly enhance platform performance, showing the tangible benefits of a well-executed analysis strategy.

Integrating Static Code Analysis with Other Development Tools

Integrating static analysis tools with the rest of the development environment greatly improves both efficiency and code quality. Here are effective integration strategies to consider:

- Continuous Integration (CI) Systems: Running static analysis tools within CI pipelines automates the analysis on every commit. This gives developers prompt feedback and keeps quality high throughout the development lifecycle. Organizations can reduce the number of security vulnerabilities in their software by up to 30% this way. For example, by running static analysis in CI, teams can discover and resolve problems before they grow, which is essential for maintaining strong software security.

- Version Control Systems: Implement hooks in version control systems, such as Git, to trigger an analysis before changes are merged. This catches issues early and keeps potentially problematic code out of the main branch.

- Code Review Platforms: Surfacing static analysis results in code review platforms, such as GitHub or GitLab, improves visibility during reviews. This practice fosters collaboration among team members and reinforces adherence to established coding standards.

- IDE Integration: Many integrated development environments (IDEs) provide plugins for static analysis tools. Bringing these tools directly into the developer's workspace makes feedback immediate and promotes a culture of proactive coding. For instance, SonarLint offers real-time analysis within IDEs such as Visual Studio Code and IntelliJ IDEA, flagging issues as developers write or change code so that bugs and security vulnerabilities can be addressed directly in the development environment. As Moataz Nabil notes, "This automation streamlines the development process, increases productivity, and allows developers to spend more time on creative problem-solving."

- Investigating Alerts: Have a procedure in place for examining the warnings that static analysis tools produce. Warnings should not be dismissed by default; some indicate underlying issues that require attention. By treating specific warnings as errors, teams can make sure potential issues are addressed proactively, improving overall software quality.
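The idea of treating specific warnings as errors can be sketched as a small gate that a CI job might run over an analyzer's report. Everything here is hypothetical: the `Finding` type, the rule names, and the hard-coded sample data stand in for whatever format a real tool emits, and a real pipeline would parse that report and pass the result to os.Exit.

```go
package main

import "fmt"

// Finding is a hypothetical, tool-agnostic record of one analyzer warning.
type Finding struct {
	Rule    string // rule identifier (illustrative names only)
	File    string
	Line    int
	Message string
}

// blocking returns the findings whose rule appears in promote, that is,
// the warnings the team has decided to treat as errors.
func blocking(findings []Finding, promote map[string]bool) []Finding {
	var out []Finding
	for _, f := range findings {
		if promote[f.Rule] {
			out = append(out, f)
		}
	}
	return out
}

// exitCode maps the blocking findings to a process exit status:
// non-zero means the CI job should fail.
func exitCode(bad []Finding) int {
	if len(bad) > 0 {
		return 1
	}
	return 0
}

func main() {
	// Sample data; a real gate would parse the analyzer's output instead.
	findings := []Finding{
		{Rule: "unchecked-error", File: "db.go", Line: 42, Message: "returned error is ignored"},
		{Rule: "line-length", File: "db.go", Line: 10, Message: "line exceeds 120 characters"},
	}
	// Only this rule is promoted; other warnings stay advisory.
	promote := map[string]bool{"unchecked-error": true}

	bad := blocking(findings, promote)
	for _, f := range bad {
		fmt.Printf("%s:%d: %s (%s)\n", f.File, f.Line, f.Message, f.Rule)
	}
	fmt.Println("exit code:", exitCode(bad))
}
```

Keeping the promoted rules in one explicit list makes the policy reviewable: adding a rule to the list is a deliberate team decision rather than a side effect of tool defaults.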

By applying these integration strategies, development teams can establish a unified environment that maximizes the benefits of static analysis, resulting in improved software quality and better collaboration.

Measuring the Impact of Static Code Analysis on Code Quality

To measure the impact of static analysis on code quality, development teams should focus on several key metrics:

- Reduction in Bugs: Track the number of bugs found during static analysis compared with those found at runtime. A notable decrease in runtime bugs indicates that the tools are catching issues early, which improves software reliability and security.

- Quality Scores: Many static analysis tools assign quality scores based on metrics such as complexity, duplication, and adherence to coding standards. Tracking these scores over time offers insight into improvements in code quality and aligns with high documentation standards, defined as having over 80% consistent comments and comprehensive documentation. This alignment ensures that the program not only meets functional requirements but also remains clear and maintainable.

- Time Saved in Reviews: Examine the effect of automated analysis on the length of code reviews. Shorter reviews suggest that the tools are catching routine problems, freeing reviewers to focus on more intricate aspects of the code and ultimately leading to quicker deployment.

- Developer Satisfaction: Surveys of developer satisfaction with the static analysis process can provide valuable insights. Positive feedback indicates that developers see these tools as beneficial, which strengthens their role in maintaining code quality. As Berin Loritsch states, "Quality is by definition a fuzzy concept; the degree of excellence of something," which underscores the subjective nature of quality evaluation.

- Technical Debt Reduction: Track the amount of technical debt in the repository before and after static analysis is introduced. A reduction in technical debt indicates improved software quality and maintainability, promoting a healthier development environment.

- Lines of Code: The 'Lines of Code' metric helps compare the overall size of classes, projects, or applications, and is most useful at the method level. This case study demonstrates that methods which cannot be seen in their entirety on a screen are likely too long and should be refactored for readability, which improves software quality.
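The method-level 'Lines of Code' metric from the list above can be computed directly from parsed source. The sketch below uses the standard library's go/parser and go/token packages; the `funcLengths` helper, the sample source, and the `maxLines` threshold (a stand-in for "fits on a screen") are assumptions for illustration.

```go
package main

import (
	"fmt"
	"go/ast"
	"go/parser"
	"go/token"
)

// maxLines is a hypothetical threshold standing in for "fits on one screen".
const maxLines = 5

// funcLengths parses src (without executing it) and returns, for each
// function or method with a body, the number of source lines it spans.
// Methods that share a name overwrite each other in this simplified map.
func funcLengths(src string) (map[string]int, error) {
	fset := token.NewFileSet()
	file, err := parser.ParseFile(fset, "sample.go", src, 0)
	if err != nil {
		return nil, err
	}
	lengths := make(map[string]int)
	for _, decl := range file.Decls {
		fn, ok := decl.(*ast.FuncDecl)
		if !ok || fn.Body == nil {
			continue // not a function, or declared without a body
		}
		start := fset.Position(fn.Pos()).Line // line of the "func" keyword
		end := fset.Position(fn.End()).Line   // line of the closing brace
		lengths[fn.Name.Name] = end - start + 1
	}
	return lengths, nil
}

// sample is illustrative input with one short and one longer function.
const sample = `package demo

func short() int {
	return 1
}

func longer(n int) int {
	total := 0
	for i := 0; i < n; i++ {
		total += i
	}
	return total
}
`

func main() {
	lengths, err := funcLengths(sample)
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	for name, n := range lengths {
		if n > maxLines {
			fmt.Printf("%s spans %d lines; consider refactoring\n", name, n)
		}
	}
}
```

Tracking the count of functions above the threshold over time gives a simple trend line for this metric.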

Factoring management expectations into the evaluation also helps teams understand how those expectations influence developers' ability to produce high-quality work. By focusing on these metrics, teams can gauge the impact of static analysis on their practices. Recent studies indicate that organizations using such tools see a significant reduction in bugs, improving overall software quality and performance.

Conclusion

In the realm of software development, the significance of static code analysis tools, particularly those like Kodezi, cannot be overstated. These tools empower developers by proactively identifying bugs, security vulnerabilities, and stylistic inconsistencies before they escalate into larger issues. By integrating such tools early in the development process and embedding them within CI/CD pipelines, teams can enhance code quality, streamline workflows, and significantly reduce bugs in production environments.

Successful implementation hinges on adopting best practices, such as:

- Setting clear coding standards

- Customizing rulesets

- Fostering a culture of continuous improvement through regular feedback reviews

However, it is equally important to avoid common pitfalls like:

- Ignoring tool outputs

- Over-relying on automated processes

By recognizing the value of manual code reviews alongside static analysis, development teams can achieve a more robust quality assurance strategy.

Ultimately, the integration of static code analysis tools not only leads to cleaner, more maintainable code but also drives efficiency and productivity within development teams. As organizations increasingly prioritize these practices, they position themselves to thrive in a competitive landscape, ultimately resulting in higher quality software and improved business outcomes. Embracing these tools and methodologies is a strategic investment that can transform the software development process for the better.

Frequently Asked Questions

What is the role of static analysis tools in Golang programming?

Static analysis tools, such as Kodezi's AI-driven solutions, are essential for maintaining the integrity of Golang programs by analyzing source code without execution. They help developers identify and fix potential bugs, security vulnerabilities, and style inconsistencies, ultimately enhancing overall code quality.

How does Kodezi improve the debugging process in Golang?

Kodezi offers automated debugging features that provide detailed explanations and insights into issues, allowing for rapid resolution and performance enhancements through its code analysis capabilities.

What impact can using static analysis tools have on production environments?

The use of static analysis tools can reduce bugs in production environments by up to 30%, improving the reliability and stability of applications.

What are some commonly used static analysis tools for Golang?

Commonly used tools include golint, which addresses stylistic issues, go vet, which detects possible runtime errors, and staticcheck, each offering unique insights into code quality and adherence to best practices.

How does Kodezi enhance the development workflow?

Kodezi improves formatting, adds exception handling features, incorporates automated builds and testing, and monitors runtime statistics, which collectively boost collaboration, maintainability, and resource management.

What are the best practices for implementing static code analysis in Golang?

Best practices include integrating early in the development cycle, automating the procedure within CI/CD pipelines, setting clear coding guidelines, regularly updating tools, reviewing and acting on feedback, and customizing rulesets to fit project requirements.

Can you provide an example of a tool that supports real-time static analysis?

SonarLint is a lightweight application that provides real-time assessment within IDEs like Visual Studio Code and IntelliJ IDEA, offering instant feedback on programming issues as developers write or modify code.

What benefits do companies gain from investing in efficient development cycles?

Companies that prioritize productivity through efficient development cycles can grow their revenue four to five times faster than those that do not, highlighting the significant impact of these practices on overall business success.