Overview

In software development, coding challenges are a hurdle developers face daily. How can these challenges be addressed effectively? Kodezi offers a suite of features designed to streamline the testing process. Used alongside test runners such as Jest and Mocha, it helps developers meet their specific testing needs with ease.

Kodezi stands out by improving productivity and code quality through its automation capabilities and community support. More efficient testing saves time, and by using Kodezi developers can both streamline their workflow and make sure their code meets high quality standards.

Are you ready to elevate your coding practices? Explore the various testing tools available on the Kodezi platform and discover how they can transform your development experience. With Kodezi, the path to improved productivity and superior code quality is at your fingertips.

Introduction

In the rapidly evolving landscape of software development, developers face numerous coding challenges that can impede their progress. The significance of robust testing practices cannot be overstated, and JavaScript test runners have emerged as indispensable tools to address these challenges. These tools streamline the testing process, enabling developers to efficiently validate their code through various methodologies, including:

- Unit testing

- Integration testing

- End-to-end testing

As teams increasingly embrace automation, understanding the differences between test runners such as Jest, Mocha, and Cypress becomes crucial for optimizing both productivity and code quality.

Examining the features, performance, and community support that distinguish these tools gives developers practical guidance for strengthening their testing strategies and, in turn, the reliability of their applications. Are you ready to explore how JavaScript test runners can transform your coding practices? Discover the benefits of integrating robust testing into your workflow and make sure your code stands up to scrutiny.

Understanding JavaScript Test Runners

Coding challenges are ubiquitous in software development, and one of the most common is making sure that JavaScript code behaves correctly. JavaScript test runners are essential tools for this: they streamline testing of JavaScript code and let developers run unit, integration, and end-to-end tests effectively. By executing test scripts and generating detailed reports, they confirm that code operates as intended and help teams identify and fix bugs early in the development cycle. Notable frameworks such as Jest, Mocha, and Jasmine each have distinct characteristics suited to different testing needs.

JavaScript test runners play a central role in modern software development. Industry specialists emphasize that automating testing significantly improves productivity and code quality. In 2025, approximately 75% of developers reported using JavaScript testing tools, indicating a growing trend toward automated testing. This shift is supported by data showing that teams using JavaScript test runners can reduce the time spent on manual testing by up to 40%. Cloud platforms such as LambdaTest extend this further by offering access to over 10,000 combinations of browsers, devices, and operating systems on which tests can run.

The broader test automation ecosystem has evolved as well. The Robot Framework, a Python-based, keyword-driven framework, has gained traction because it accommodates both technical and non-technical users. With over 10.6K stars on GitHub, it supports custom keywords and data-driven test cases. These broaden coverage by running the same case against multiple data sets. Such attributes set it apart from conventional JavaScript test runners.

Integrating automated debugging tools, such as those offered by Kodezi, can further improve the testing process. Kodezi's features help developers quickly identify and fix issues in a codebase and explain in detail what went wrong and how it was resolved. This supports fast problem-solving and helps keep code aligned with current security best practices and development standards, improving both performance and quality. Test runners such as Jest and Mocha report which tests fail. Kodezi's automated debugging is intended to go a step further by diagnosing and resolving the underlying issue, so the two approaches complement each other.

As the JavaScript testing landscape evolves, Node Congress 2026, scheduled for April, is expected to address the latest advancements and best practices in the field, including insights on Kodezi's offerings. The event will give developers a platform to share their experiences automating tests with JavaScript test runners. As Patrick Collison noted, "Programming is full of odd ideas. Using shorter, less descriptive names often produces code that’s more readable overall." That remark concerns naming rather than testing, but its emphasis on clarity and efficiency applies equally to test code. Effective testing remains foundational to successful software development.

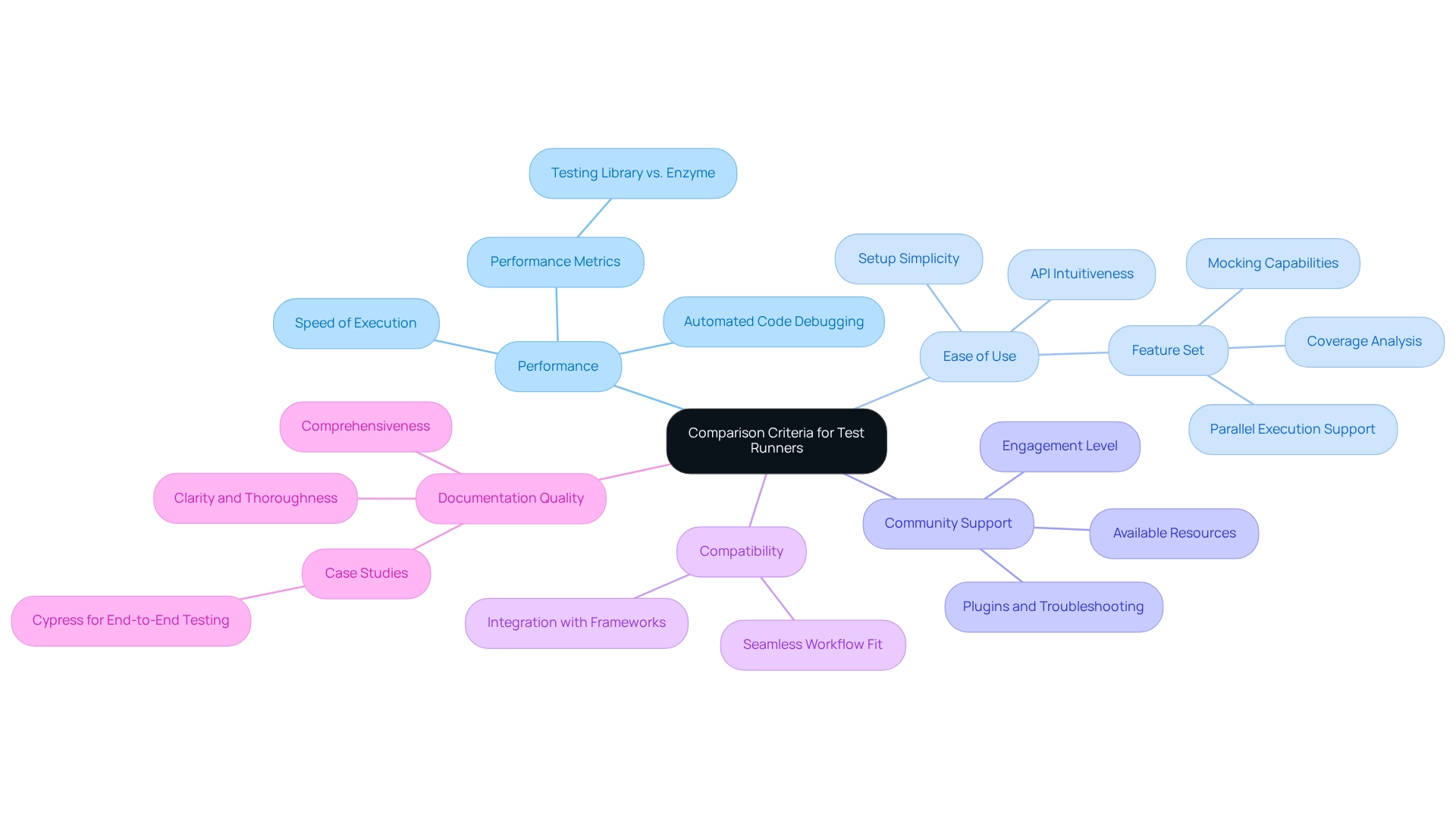

Comparison Criteria for Test Runners

When evaluating JavaScript test runners, developers should weigh several criteria that directly affect their workflow. Performance is paramount: the speed at which a runner executes tests and reports results has a significant effect on development efficiency. Interest in the surrounding tooling is also shifting. For instance, the growing volume of Stack Overflow questions about Testing Library relative to Enzyme points to increasing adoption of Testing Library. In addition, pairing a test runner with automated code debugging can help teams quickly find and resolve performance bottlenecks and security issues, keeping test runs efficient and aligned with current security best practices.

Ease of use is another essential criterion. Developers should consider how simple the setup process is and how intuitive the API is, since both shape the day-to-day testing experience. As Roman noted, 'If you need or desire ESM, Vitest is the finest.' Native support for ES modules, in other words, can spare teams extra configuration.

The feature set of a test runner is equally crucial. Attributes such as mocking capabilities, coverage analysis, and support for parallel execution make testing more flexible. Automated code debugging can also provide detailed insights into issues, allowing developers to improve their codebase rapidly while staying compliant with coding standards.

Community support is vital as well. The size and engagement of the community around a testing tool determine how many resources, plugins, and troubleshooting answers are available, all of which matter for effective use. Compatibility is another significant aspect: evaluators should consider how well the tool integrates with their frameworks and libraries so that it fits into existing workflows.

Finally, documentation quality cannot be overlooked. Clear and thorough documentation is vital for effective use and for onboarding new team members. Case studies of Cypress for end-to-end testing, for example, highlight its dependability and execution speed and show how a specific tool can meet these criteria. Moreover, Kodezi's AI-powered automated builds and testing features can greatly improve code quality and make it simpler to maintain high standards in software development.

In-Depth Comparison of Leading JavaScript Test Runners

A comparison of two prominent JavaScript test runners, Jest and Mocha, reveals distinct strengths and weaknesses that suit different development needs.

Jest

- Performance: Jest is recognized for its swift execution, utilizing built-in parallel execution to enhance efficiency.

- Ease of Use: It has a straightforward setup process and requires zero configuration for most projects, which makes it accessible to developers.

- Feature Set: Jest offers robust mocking capabilities, snapshot testing, and built-in code coverage reporting, all central to contemporary testing practice. These features support agile workflows by validating code changes quickly.

- Community Support: With a large and active community, Jest provides extensive resources and plugins that facilitate development.

- Compatibility: It integrates seamlessly with popular frameworks like React, Angular, and Vue.js, ensuring broad applicability.

- Documentation: The documentation is comprehensive and user-friendly, aiding developers in navigating its features effectively.

- User Satisfaction: Developers have rated Jest highly for its ease of use and performance, contributing to its popularity in the community.

Mocha

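- Performance: Mocha's performance is commendable, especially given its high configurability. By default it runs tests serially in a single process, but a parallel mode (the --parallel flag, introduced in Mocha 8) is available for larger suites.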

- Ease of Use: Mocha requires more setup than Jest, because assertion, mocking, and coverage libraries are chosen and configured separately. This can pose a challenge for some users, but the extra configuration allows greater adaptability across testing scenarios and suits agile methodologies.

- Feature Set: Its flexibility allows integration with various assertion libraries and reporters, catering to diverse testing needs.

Whichever runner is used, best practices such as clear setup and teardown procedures in Mocha or Jest improve reliability and reduce manual errors. This structured approach has been reported to reduce test flakiness by 30% and halve setup time, which shows why well-organized test environments matter as applications grow. Automated testing frameworks like these improve software quality and integrate smoothly into existing release processes, so changes are deployed efficiently.

Choosing the Right Test Runner for Your Project

Choosing the right JavaScript test runner is essential for efficient testing and high code quality, yet developers often find the selection difficult. How can they navigate these complexities effectively?

Several key factors should guide this decision:

- Project Type: The nature of the project significantly influences runner selection. For instance, Jest is often preferred for unit testing due to its speed and user-friendly interface, while Cypress excels in end-to-end scenarios and offers robust features for comprehensive testing. Statistics indicate that a majority of developers favor Jest for unit testing, reflecting its popularity in the community.

- Team Experience: Building on the team's existing expertise can simplify the testing process. If the team is already skilled in a specific framework, choosing a testing tool that matches that expertise can boost productivity and shorten the learning curve.

- Testing Requirements: Different projects may prioritize different kinds of tests: unit, integration, or end-to-end. Understanding where the testing effort is focused helps in choosing JavaScript test runners that specialize in those areas, ensuring thorough coverage and effective results.

- Performance Requirements: For larger projects with extensive test suites, performance becomes a critical consideration. Runners that support parallel execution, such as Jest, can significantly reduce test time, which makes them well suited to projects where efficiency matters. As Sujit Karpe, Co-Founder & CTO at iMocha, aptly puts it, 'Performance evaluation is an art and science mixed together,' emphasizing the complexity involved in selecting the right tools.

- Community and Support: A robust community supporting a testing tool can offer vital resources, plugins, and assistance, which can be crucial for developers. Runners with active communities often have better documentation and more readily available solutions to common issues.

Integrating continuous testing practices and effective bug reporting can improve software robustness and user satisfaction, which further underlines the importance of choosing the right tool. Ultimately, the choice of JavaScript test runner should match the specific objectives and constraints of the project so that tests are both efficient and effective. By weighing these factors, developers can make informed decisions that strengthen their testing strategies and contribute to the overall success of their projects.

Kodezi also helps developers sustain quality and productivity in their work. Users have reported significant improvements in debugging and productivity, with Kodezi's tools providing automatic bug analysis, code optimization, and support for programming across multiple languages and IDEs. To experience these benefits firsthand, consider integrating Kodezi into your development workflow for a more efficient testing process.

Conclusion

In the ever-evolving realm of software development, developers often face significant coding challenges that can hinder their productivity. How can these obstacles be effectively addressed? Tools like Kodezi are designed to tackle these issues head-on, offering advanced debugging features that streamline the testing process and enhance overall code quality.

Test runners such as Jest, Mocha, and Cypress let developers automate unit, integration, and end-to-end testing with relative ease. Their advantages, from strong performance to extensive community support, make them a necessity in modern development workflows. Integrating Kodezi into these processes can further boost productivity and reinforce the overall quality of software applications.

The criteria for selecting a suitable test runner include performance, ease of use, feature set, and compatibility with existing tools. Each runner possesses unique strengths tailored to specific project needs, making it essential for developers to align their choice with their project's requirements. Understanding these distinctions facilitates informed decisions that can ultimately lead to more reliable and maintainable code.

Embracing these testing methodologies leads to more resilient coding practices. As the landscape of JavaScript testing continues to evolve, Kodezi gives developers the ability to swiftly identify and resolve code issues. This combination of robust testing and effective debugging helps applications withstand the scrutiny of developers and users alike. Explore the tools available on the Kodezi platform to enhance your coding practices today.

Frequently Asked Questions

What are JavaScript test runners and why are they important?

JavaScript test runners are tools that help developers check their JavaScript code through unit, integration, and end-to-end tests. They streamline testing by executing test scripts and generating detailed reports that show whether code operates correctly, which makes it easier to identify and fix bugs early.

What are some notable JavaScript testing frameworks?

Notable JavaScript testing frameworks include Jest, Mocha, and Jasmine, each offering unique characteristics that cater to different assessment needs.

How do JavaScript test runners impact productivity and code quality?

Automating testing with JavaScript test runners significantly improves productivity and code quality. In 2025, around 75% of developers reported using these tools, and teams using them can reduce manual testing time by up to 40%.

What is the role of platforms like LambdaTest in JavaScript testing?

Platforms like LambdaTest provide access to over 10,000 combinations of browsers, devices, and operating systems, so tests written for JavaScript test runners can be run across a wide range of environments.

What is the Robot Framework and how does it differ from conventional JavaScript test runners?

The Robot Framework is a Python-based, keyword-driven framework that accommodates both technical and non-technical users. It supports custom keywords and data-driven test cases, which broaden coverage by running the same case with multiple data sets. This sets it apart from conventional JavaScript test runners.

How does Kodezi improve the evaluation process for developers?

Kodezi offers automated debugging tools that help developers quickly identify and fix codebase issues. It provides detailed explanations of problems and helps keep code aligned with current security best practices and development standards, improving performance and code quality.

What is the significance of the upcoming Node Congress 2026?

The Node Congress 2026, scheduled for April, will address the latest advancements and best practices in JavaScript testing, including insights on Kodezi's offerings. It will serve as a platform for developers to share their experiences automating tests with JavaScript test runners.