Introduction

In the fast-evolving landscape of software development, the importance of unit testing cannot be overstated. As organizations strive for higher quality and efficiency, the ability to meticulously test individual components of a codebase becomes a cornerstone of successful software delivery. Unit testing not only helps in identifying bugs early but also fosters a culture of confidence among developers, allowing them to innovate and adapt without fear of introducing critical errors.

By understanding the foundational principles of unit testing, following a structured approach to test generation, and leveraging modern tools, developers can significantly enhance their productivity and the robustness of their applications. This article delves into the essentials of unit testing, offering a comprehensive guide to best practices, the integration of AI tools, and the seamless incorporation of tests into CI/CD pipelines, ultimately paving the way for more reliable and efficient software development processes.

Understanding the Basics of Unit Testing

Unit testing is a software development technique in which individual components of a codebase are tested in isolation to validate their correctness. The main goal is to verify that each component operates as expected. Because unit tests exercise small pieces of code, they surface issues early in the development phase, which improves software quality and simplifies maintenance.
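As a minimal sketch of what testing a component in isolation can look like, the example below pairs a small function with two tests. The function `apply_discount` and its rules are hypothetical, invented only for illustration; the tests use plain `assert` statements so that a runner such as pytest could collect them, and they also run as ordinary function calls.

```python
def apply_discount(price: float, percent: float) -> float:
    """Return price reduced by percent, where percent is between 0 and 100."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_apply_discount_reduces_price():
    # One behavior per test: a 25% discount on 200 yields 150.
    assert apply_discount(200.0, 25) == 150.0


def test_apply_discount_rejects_invalid_percent():
    # Invalid input should fail loudly instead of returning a wrong price.
    try:
        apply_discount(100.0, 150)
    except ValueError:
        pass
    else:
        raise AssertionError("expected ValueError for percent > 100")
```

Each test checks a single behavior of a single function, so a failure points directly at the piece of logic that broke.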

This proactive approach lets developers change code confidently, knowing that a safety net is in place to catch regressions. According to industry insights, 52% of IT teams credit their increased QA budgets to the growing number of releases, which underlines the role of testing in maintaining software quality amid rapid development cycles. The case study titled 'Differences Between TDD and Traditional Development' illustrates how Test-Driven Development (TDD) follows a cyclical process: teams write a failing unit test first, write just enough code to make it pass, and then refactor, which results in fewer bugs and a more reliable software development process.
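The TDD cycle can be sketched in a few lines. The example below is illustrative only: `slugify` is a hypothetical function, and the three steps are compressed into one file, whereas in practice they happen one after another.

```python
import re


# Step 1 (red): the test is written first and describes the desired behavior.
# Run at this point, it would fail because slugify does not exist yet.
def test_slugify_lowercases_and_joins_words_with_hyphens():
    assert slugify("Hello, Unit Testing!") == "hello-unit-testing"


# Step 2 (green): the simplest implementation that makes the test pass.
def slugify(text: str) -> str:
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)


# Step 3 (refactor): with the test passing, the implementation can be
# cleaned up or optimized, and the test is rerun after every change.
```

The test acts as the specification: the implementation is considered done when the test passes, and any later refactoring is checked against the same test.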

As Massimo Pretti, an Embedded Software Senior Designer, observes:

"Normally in my company, software tests are launched on a hardware prototype that functionally is a replica of the final hardware but that can be different in the sense that can hide potential problems."

This highlights the importance of testing components thoroughly to reveal hidden problems before they grow. A component that shows no symptoms is not necessarily free of defects; only tests that exercise it directly can provide that evidence.

Understanding these principles is the basis for writing and running unit tests effectively within your development process, and ultimately for more dependable software.

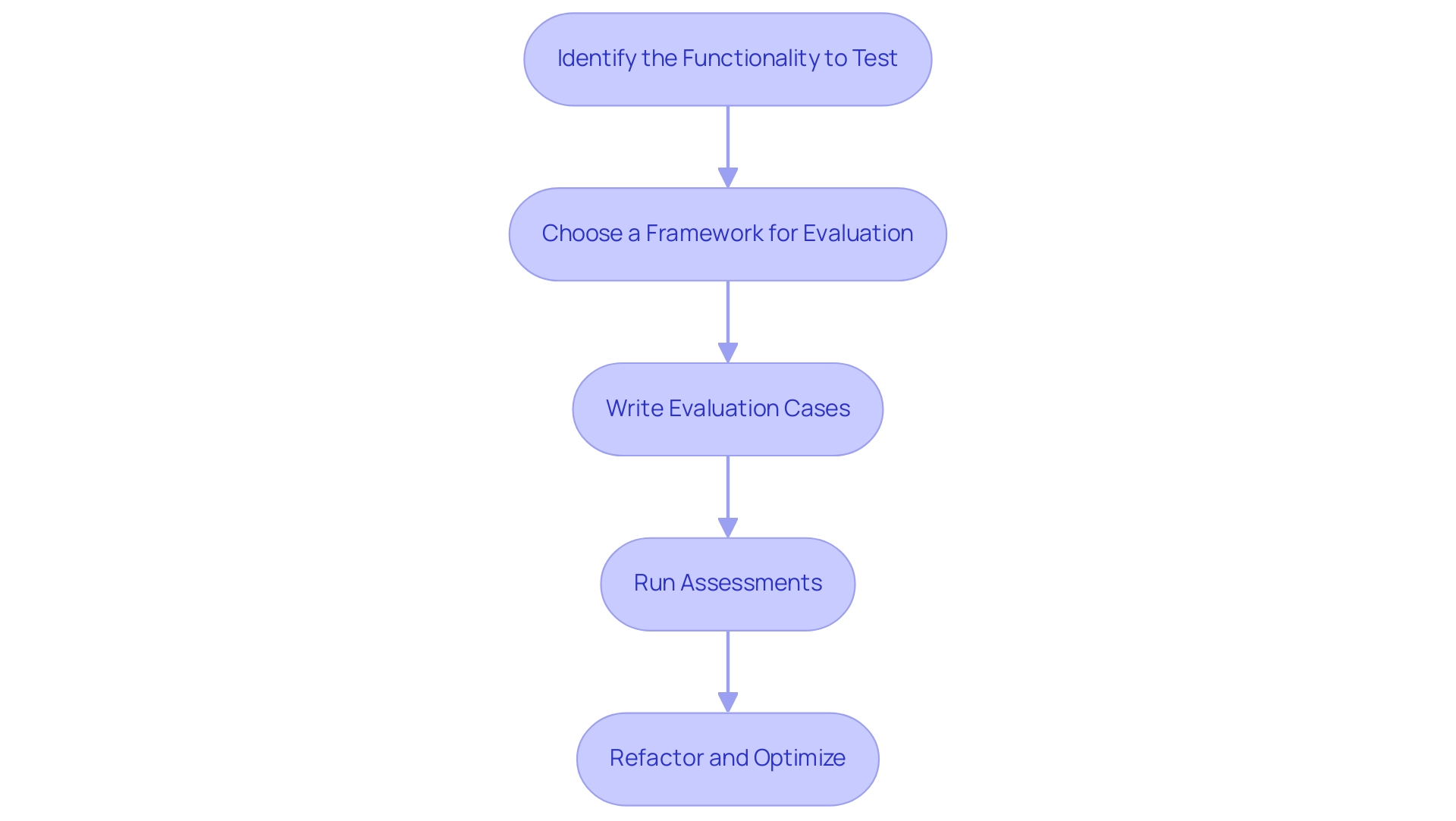

Step-by-Step Guide to Generating Unit Tests

- Identify the Functionality to Test: Start by pinpointing the functions or methods that need testing, prioritizing critical components or those characterized by complex logic. This foundational step sets the stage for effective testing.

- Choose a Testing Framework: Select a testing framework that fits your programming language. For example, JUnit is widely used for Java, NUnit for .NET, and pytest for Python. The right framework simplifies writing and running tests. Frameworks such as Riteway focus on simple, readable unit tests, which helps keep the intent of each test clear.

- Write Test Cases: Create test cases for each identified function, covering a range of scenarios, including edge cases. Each case should assert an expected outcome so that it actually validates the behavior.

- Run the Tests: Use the framework's runner to execute your tests. Watch for failures and debug them as they appear. This step is crucial for maintaining the integrity of your code.

- Refactor and Optimize: Once your tests pass, consider refactoring your code to improve efficiency and clarity. After each modification, rerun the tests to confirm that the refactoring did not introduce new problems.
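The steps above can be sketched with Python's built-in `unittest` framework. The function `parse_version` and the helper `run_suite` are hypothetical names chosen for this illustration; the same structure applies to other frameworks.

```python
import unittest


# Step 1: the functionality to test (a hypothetical version parser).
def parse_version(text: str) -> tuple:
    """Parse 'MAJOR.MINOR.PATCH' into a tuple of three integers."""
    parts = text.strip().split(".")
    if len(parts) != 3:
        raise ValueError(f"expected three dot-separated parts, got {text!r}")
    return tuple(int(part) for part in parts)


# Steps 2 and 3: test cases written with the chosen framework,
# covering the normal path and several edge cases.
class ParseVersionTests(unittest.TestCase):
    def test_parses_well_formed_version(self):
        self.assertEqual(parse_version("1.4.2"), (1, 4, 2))

    def test_ignores_surrounding_whitespace(self):
        self.assertEqual(parse_version(" 2.0.10\n"), (2, 0, 10))

    def test_rejects_missing_component(self):
        with self.assertRaises(ValueError):
            parse_version("1.4")

    def test_rejects_non_numeric_component(self):
        with self.assertRaises(ValueError):
            parse_version("1.x.3")


# Step 4: run the tests with the framework's runner.
def run_suite() -> unittest.TestResult:
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParseVersionTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` executes all four tests and returns a result object whose `wasSuccessful()` method reports the outcome. For step 5, the body of `parse_version` can be refactored freely and `run_suite()` called again to confirm that nothing broke.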

By following these steps, developers can build unit tests that improve code reliability. Unit testing is not merely a best practice; it is a key factor in producing high-quality software. For example, the OpenEdge ABL testing frameworks, including proUnit and OEUnit, support structured unit testing in OpenEdge environments.

Similarly, linuxbuild describes its API Sanity Checker as an automatic generator of basic unit tests for C/C++ libraries, which shows how test generation is applied in real-world situations.

Leveraging AI for Automated Unit Test Generation

AI tools such as GitHub Copilot and JetBrains AI Assistant are changing how developers create unit tests: they analyze existing code and propose test cases for it. To get the most out of these tools, especially in conjunction with Kodezi's features, follow these steps:

- Integrate AI Tools: Start by integrating the selected AI tool into your development environment. Use the official documentation for Kodezi CLI to ensure seamless integration, enabling automated code improvements and debugging that save valuable time.

- Provide Context: When prompted by the AI, supply context about the code you wish to test. Detailed information enables the AI to produce more relevant and targeted test cases, enhancing their effectiveness and aligning with Kodezi's commitment to quality.

- Review and Refine: Once the AI generates the test cases, review them carefully for accuracy and completeness. Adjust any tests as required to meet your specific project criteria, ensuring that they conform to Kodezi's standards for security compliance and performance optimization. Kodezi ensures that your codebase adheres to the latest security best practices, helping to identify vulnerabilities early in the development process.

- Run AI-Generated Tests: Execute the AI-generated tests alongside your hand-written tests for comprehensive coverage. This dual approach captures potential issues from both perspectives, bolstered by Kodezi's automated debugging capabilities, which quickly pinpoint performance bottlenecks and security issues.
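The last step above, running AI-generated tests together with hand-written ones, might look like the following sketch. It makes no assumption about any particular AI tool: `AIGeneratedTests` is only a stand-in for tests that an assistant proposed and a developer then reviewed, and `normalize_email` is a hypothetical function under test.

```python
import unittest


def normalize_email(address: str) -> str:
    """Hypothetical function under test: trim whitespace and lowercase."""
    return address.strip().lower()


class HandWrittenTests(unittest.TestCase):
    def test_strips_surrounding_whitespace(self):
        self.assertEqual(normalize_email("  a@b.com "), "a@b.com")


class AIGeneratedTests(unittest.TestCase):
    """Stand-in for AI-proposed tests that a developer has reviewed."""

    def test_lowercases_address(self):
        self.assertEqual(normalize_email("User@Example.COM"), "user@example.com")

    def test_empty_string_stays_empty(self):
        self.assertEqual(normalize_email(""), "")


def run_all() -> unittest.TestResult:
    # Combine both sources into one suite so they run and report together.
    loader = unittest.TestLoader()
    suite = unittest.TestSuite()
    for case in (HandWrittenTests, AIGeneratedTests):
        suite.addTests(loader.loadTestsFromTestCase(case))
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Keeping generated tests in a separate class or module makes it easy to see where each test came from during review, while a single combined run still gives one pass or fail result.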

Using AI to draft tests helps developers produce unit tests more efficiently, saving significant time and letting them concentrate on more intricate aspects of their projects.

A study indicates that organizations utilizing AI for testing can achieve up to 80% savings compared to traditional solutions.

For example, Seniordev.ai illustrates how AI improves efficiency by reviewing pull requests, updating documentation, and creating unit tests.

As highlighted by Craig S. Smith, a technology contributor:

"Without functional evaluations, if something fails, identifying the issue can be like searching for a needle in a haystack."

By investing in AI tools such as Kodezi for quality assurance, organizations not only boost efficiency but also enhance developers' quality of life and drive improved business results.

Integrating Unit Tests into CI/CD Pipelines

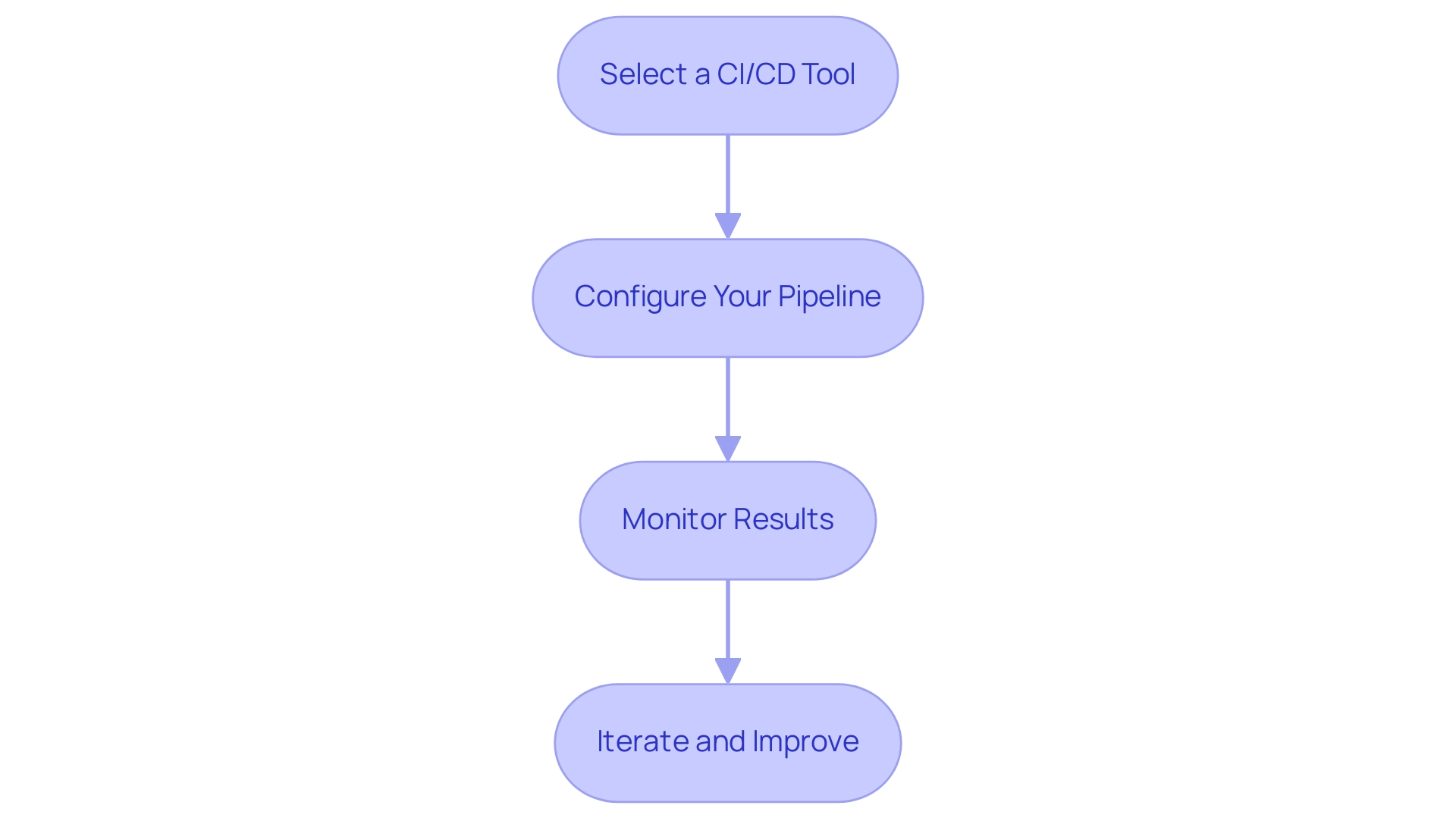

Incorporating unit tests into your CI/CD pipeline is crucial for software quality and operational effectiveness. The following steps streamline the process:

- Select a CI/CD Tool: Opt for a CI/CD tool that aligns with your project's requirements. Popular choices include Jenkins, CircleCI, and GitHub Actions, each offering features that can enhance your workflow.

- Configure Your Pipeline: Set up your pipeline to run unit tests as a required step. This involves configuring your chosen CI/CD tool to execute your tests automatically on every code commit, ensuring continuous feedback. Metrics such as the change failure rate chart introduced in GitLab 15.2 can help you see whether the configuration is reducing failure rates.

- Monitor Results: Implement mechanisms within your pipeline to track outcomes and promptly report any failures. This feedback loop is vital for early error detection, which significantly improves software quality. Incremental development approaches in CI/CD reinforce this process by allowing teams to catch issues early and adapt quickly.

- Iterate and Improve: Regularly assess your testing strategy and CI/CD configuration. Identify areas that can be optimized and modify your tests and pipeline settings accordingly. Continuous improvement is key to maintaining efficiency and adapting to evolving project needs. Tracking DevOps metrics, as discussed in the case study titled 'Conclusion on DevOps Metrics,' can provide insights into your pipeline performance and highlight areas needing attention.


By running unit tests in your CI/CD pipeline, you not only identify problems early but also promote a culture of quality assurance, which improves overall development productivity. Automated testing is increasingly a standard part of CI/CD pipelines, so staying current with these practices and managing pipelines effectively is important in this fast-evolving landscape.

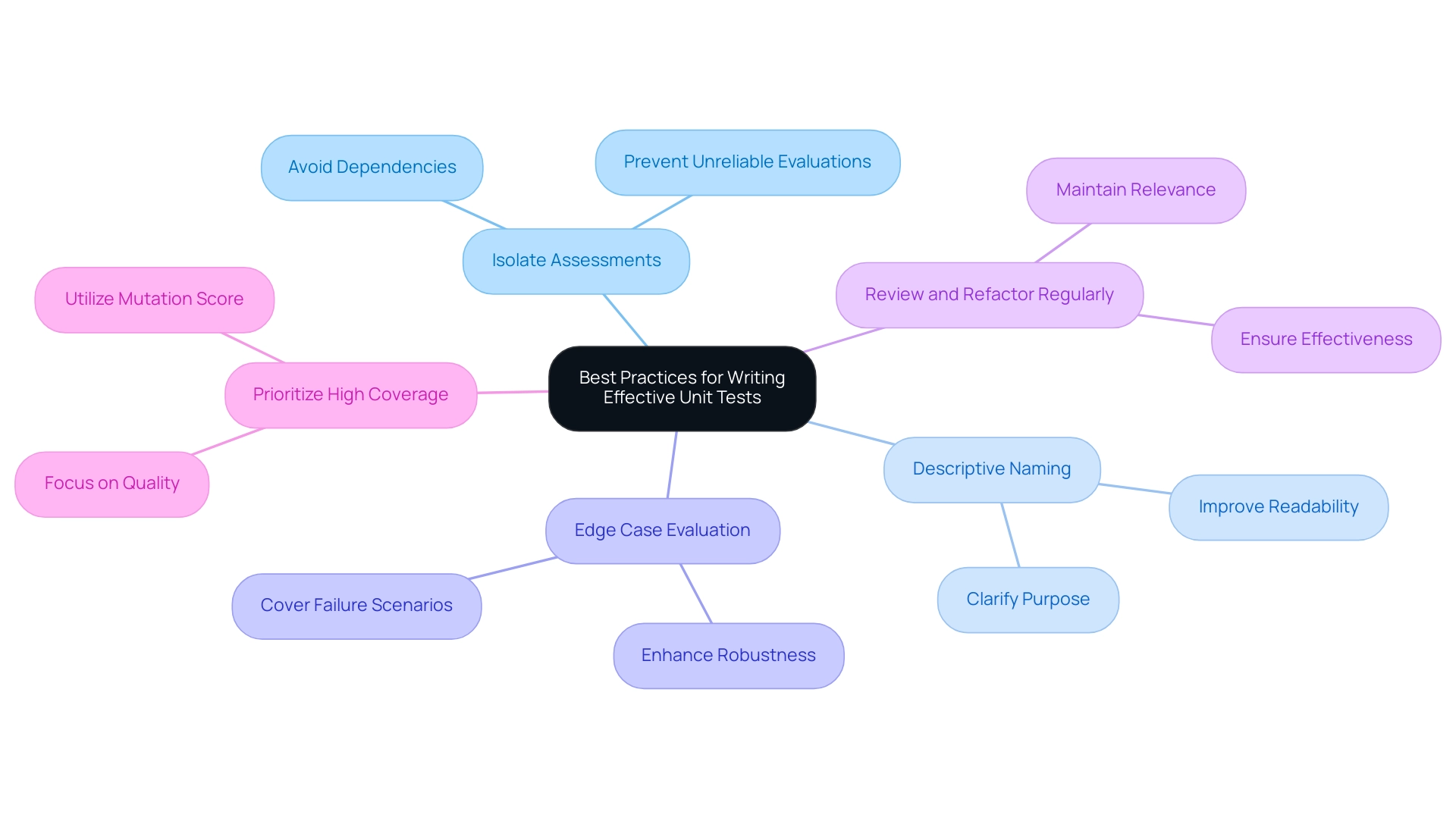

Best Practices for Writing Effective Unit Tests

- Isolate Tests: Ensure that each test concentrates on a single unit of work and is free of dependencies that may cause instability. Isolation is essential to preventing unreliable tests, which can obscure actual behavior and dependability. A common problem when adopting unit testing is tests that are tightly coupled to procedural code, which leads to exactly this kind of instability.

- Descriptive Naming: Use clear and descriptive names for your test cases, reflecting the specific functionality being tested. This improves readability and makes the purpose of each test clear at a glance.

- Edge Case Testing: Include tests that cover edge cases and potential failure scenarios. Doing so makes your code more robust and prepares it for unexpected conditions, ultimately leading to a more resilient application.

- Review and Refactor Regularly: Make it a habit to periodically review and refactor your tests. As your codebase evolves, keeping your tests relevant and effective is crucial for ongoing quality assurance.

- Prioritize High Coverage: Aim for high test coverage, but prioritize quality over sheer quantity. Use the mutation score to gauge how well your tests detect changes in the code. Meaningful tests that verify essential functionality are far more valuable than a large number of superficial ones.
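Several of these practices can be shown together in one short sketch. The function `is_free_shipping` and the `rates_client` dependency are hypothetical; the point is that the external dependency is replaced with a `unittest.mock.Mock`, the test names state the behavior being checked, and the boundary value gets its own test.

```python
import unittest
from unittest.mock import Mock


def is_free_shipping(order_total: float, rates_client) -> bool:
    """Return True when the total meets the threshold from the rates service."""
    threshold = rates_client.free_shipping_threshold()
    return order_total >= threshold


class FreeShippingTests(unittest.TestCase):
    def setUp(self):
        # Isolation: a mock stands in for the real rates service, so the
        # tests never touch a network or database.
        self.rates = Mock()
        self.rates.free_shipping_threshold.return_value = 50.0

    def test_total_above_threshold_ships_free(self):
        self.assertTrue(is_free_shipping(75.0, self.rates))

    def test_total_exactly_at_threshold_ships_free(self):
        # Edge case: the boundary value itself.
        self.assertTrue(is_free_shipping(50.0, self.rates))

    def test_total_below_threshold_does_not_ship_free(self):
        self.assertFalse(is_free_shipping(49.99, self.rates))

    def test_threshold_is_fetched_exactly_once(self):
        is_free_shipping(10.0, self.rates)
        self.rates.free_shipping_threshold.assert_called_once_with()
```

Because the threshold comes from the mock, each test is deterministic and fails only when the logic in `is_free_shipping` changes.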

Secoda's input to data teams emphasizes the significance of transparency regarding data resources and dependencies, which can greatly boost cooperation among data experts and enhance the quality of assessments. As Stephan Petzl aptly states, "This guide will provide you with practical tips to ensure that your assessments work effectively for you, rather than becoming a maintenance burden."

By adhering to these best practices, developers can write unit tests that significantly enhance the quality and maintainability of their code, transforming tests from a maintenance burden into reliable tools for continuous improvement.
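The mutation score mentioned among the practices above can be illustrated by hand. In the sketch below, the mutants are written manually and every name is hypothetical; dedicated mutation testing tools generate such variants automatically. The score is the fraction of mutants that a test suite detects ("kills").

```python
def is_adult(age: int) -> bool:
    return age >= 18


# Hand-written mutants: small deliberate changes to the original logic.
def mutant_boundary(age: int) -> bool:
    return age > 18   # '>=' changed to '>'


def mutant_constant(age: int) -> bool:
    return age >= 21  # constant changed


def mutant_negated(age: int) -> bool:
    return age < 18   # comparison inverted


MUTANTS = [mutant_boundary, mutant_constant, mutant_negated]


def weak_tests(impl) -> bool:
    """Return True if impl passes; checks only values far from the boundary."""
    return impl(30) is True and impl(5) is False


def strong_tests(impl) -> bool:
    """Return True if impl passes; also checks both sides of the boundary."""
    return weak_tests(impl) and impl(18) is True and impl(17) is False


def mutation_score(tests, mutants) -> float:
    # A mutant is killed when the test suite fails against it.
    killed = sum(1 for mutant in mutants if not tests(mutant))
    return killed / len(mutants)
```

Both suites pass against the original `is_adult`, yet `weak_tests` kills only one of the three mutants while `strong_tests` kills all of them. That difference is what the mutation score measures, and it is not visible from line coverage alone.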

Conclusion

Unit testing stands as a critical pillar in modern software development, offering a structured approach to ensuring code quality and reliability. By meticulously testing individual components, developers can identify bugs early, which not only enhances the overall quality of the software but also instills a sense of confidence that drives innovation. The systematic process of generating and implementing unit tests, as outlined in the article, serves as a roadmap for developers aiming to achieve excellence in their coding practices.

The integration of AI tools into the unit testing workflow further amplifies productivity and efficiency. By automating the generation of test cases, developers can focus their efforts on more complex tasks, reducing the time spent on repetitive testing processes. This combination of traditional testing methodologies and modern AI capabilities paves the way for a more agile development environment, where quality assurance is seamlessly woven into the fabric of continuous integration and deployment pipelines.

Incorporating best practices for unit testing not only strengthens the reliability of individual code units but also fosters a culture of quality within development teams. By prioritizing isolation, descriptive naming, and robust edge case testing, developers can transform unit tests into invaluable assets that contribute to the long-term success of software projects. As the landscape of software development continues to evolve, embracing these practices and tools will be essential for organizations striving to maintain a competitive edge in delivering high-quality, reliable software solutions.

Frequently Asked Questions

What is unit testing in software development?

Unit testing is a technique that involves testing individual components of a codebase in isolation to validate their correctness, ensuring that each component operates as expected and enhancing overall software quality.

What are the benefits of generating unit tests?

Generating unit tests helps identify issues early in the development phase, improves software quality, enables simpler maintenance, and provides a safety net to catch potential problems.

How does Test-Driven Development (TDD) relate to unit testing?

TDD is a cyclical process in which teams write unit tests before the code they verify, then refine the code until the tests pass, resulting in fewer bugs and a more reliable software development process.

What is the significance of thorough component testing in unit testing?

Thorough component testing is crucial for revealing hidden problems before they worsen, ensuring that even components that appear to work are checked for defects.

What are the steps to generate effective unit tests?

The steps are: 1. Identify the functionality to test. 2. Choose a testing framework. 3. Write test cases covering a range of scenarios, including edge cases. 4. Run the tests using the framework's runner. 5. Refactor and optimize the code, then rerun the tests.

What frameworks can be used for unit testing?

Common frameworks include JUnit for Java, NUnit for .NET, and pytest for Python. Other frameworks, such as Riteway, focus on simple and readable unit tests.

Why is refactoring important after running unit tests?

Refactoring improves the efficiency and clarity of the code. After each modification, it is essential to rerun the tests to ensure no new problems were introduced.

Can you provide an example of a tool that supports unit testing?

The OpenEdge ABL testing frameworks, including proUnit and OEUnit, support structured unit testing in OpenEdge environments. Additionally, the API Sanity Checker acts as an automatic generator of basic unit tests for C/C++ libraries.